In this tutorial, we’ll build a fully functional Retrieval-Augmented Generation (RAG) pipeline using open-source tools that run on Google Colab. First, we look at how to set up Ollama and serve models from within Colab. We then combine the DeepSeek-R1 1.5B large language model served through Ollama, the modular orchestration of LangChain, and the ChromaDB vector store so that users can query information extracted from uploaded PDFs. By pairing local language model reasoning with retrieval of factual data from PDF documents, the pipeline offers a private, cost-effective alternative to cloud-hosted LLM services.

!pip install colab-xterm

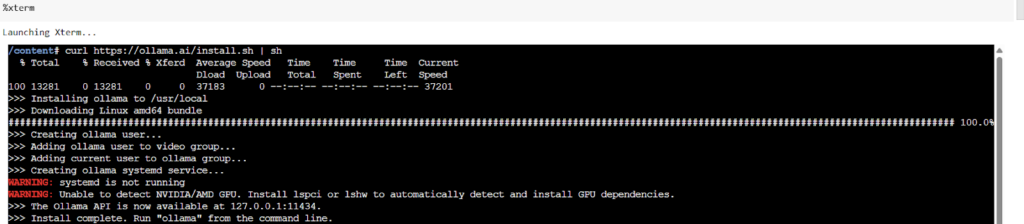

%load_ext colabxterm

We use the colab-xterm extension to enable terminal access directly within the Colab environment. After installing it with !pip install colab-xterm and loading it with %load_ext colabxterm, users can open an interactive terminal window inside Colab, which makes it easier to run commands like ollama serve or monitor local processes.

%xterm

The %xterm magic command, run after the colabxterm extension is loaded, launches an interactive terminal window inside the Colab notebook interface. Users can execute shell commands in real time, just as in a regular terminal, which is especially useful for running background services like ollama serve, managing files, or debugging system-level operations without leaving the notebook.

Inside this terminal, we install Ollama using curl https://ollama.ai/install.sh | sh.

Then, we start the Ollama server using ollama serve.

Finally, we download the DeepSeek-R1 1.5B model locally through Ollama (for example, with ollama pull deepseek-r1:1.5b) so that it can be used to build the RAG pipeline.
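Before moving on, it can help to confirm from a notebook cell that the Ollama server started in the terminal is reachable. The sketch below is one way to do that with only the standard library. It assumes Ollama's default address http://localhost:11434 and its /api/tags endpoint for listing pulled models; the helper name list_ollama_models is ours, not part of any library.

```python
import http.client
import json
import urllib.request


def list_ollama_models(base_url="http://localhost:11434", timeout=3.0):
    """Return the names of locally pulled models, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError, http.client.HTTPException):
        # OSError covers refused connections, timeouts, and HTTP errors
        # (urllib's URLError subclasses it); ValueError covers a non-JSON body.
        return None
    if not isinstance(payload, dict):
        return None
    return [m.get("name", "") for m in payload.get("models", [])]


models = list_ollama_models()
if models is None:
    print("Ollama is not reachable; is 'ollama serve' still running in the terminal?")
else:
    print("Available models:", models)
```

If the server is up and the model download has finished, the printed list should include the DeepSeek-R1 tag used later in the tutorial.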

!pip install langchain langchain-community langchain-ollama sentence-transformers chromadb faiss-cpu

To set up the core components of the RAG pipeline, we install the essential libraries: langchain, langchain-community, langchain-ollama (needed for the OllamaLLM import used later), sentence-transformers, chromadb, and faiss-cpu. These packages provide the document processing, embedding, vector storage, and retrieval functionality required to build a modular local RAG system.

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_community.vectorstores import Chroma

from langchain_community.embeddings import HuggingFaceEmbeddings

from langchain_community.llms import Ollama

from langchain.chains import RetrievalQA

from google.colab import files

import os

from langchain_core.prompts import ChatPromptTemplate

from langchain_ollama.llms import OllamaLLM

We import key modules from the langchain-community and langchain-ollama libraries to handle PDF loading, text splitting, embedding generation, vector storage with Chroma, and LLM integration via Ollama. We also bring in Colab’s file upload utility and prompt templates, enabling a seamless flow from document ingestion to query answering using a locally hosted model.

print("Please upload your PDF file...")

uploaded = files.upload()

file_path = list(uploaded.keys())[0]

print(f"File '{file_path}' successfully uploaded.")

if not file_path.lower().endswith('.pdf'):

    print("Warning: Uploaded file is not a PDF. This may cause issues.")

To let users add their own knowledge sources, we prompt for a PDF upload using google.colab.files.upload(). The code then checks the file extension and prints a warning if the upload is not a PDF, since the later loading and embedding steps expect PDF input.
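The extension check above only looks at the file name. As a complementary sketch, the file's first bytes can be inspected instead, since PDF files begin with the marker %PDF-. The helper name looks_like_pdf and the two throwaway files are hypothetical and exist only for this illustration.

```python
import os
import tempfile


def looks_like_pdf(path):
    """Return True if the file begins with the PDF magic bytes '%PDF-'."""
    with open(path, "rb") as fh:
        return fh.read(5) == b"%PDF-"


# Demonstration with two temporary files that share the same extension.
with tempfile.TemporaryDirectory() as tmp:
    real_path = os.path.join(tmp, "report.pdf")
    fake_path = os.path.join(tmp, "notes.pdf")
    with open(real_path, "wb") as fh:
        fh.write(b"%PDF-1.7\n")
    with open(fake_path, "wb") as fh:
        fh.write(b"plain text with a misleading extension")
    real_ok = looks_like_pdf(real_path)
    fake_ok = looks_like_pdf(fake_path)

print(real_ok)  # True
print(fake_ok)  # False
```

In the notebook, the same helper could be called on file_path right after the upload step.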

!pip install pypdf

import pypdf

loader = PyPDFLoader(file_path)

documents = loader.load()

print(f"Successfully loaded {len(documents)} pages from PDF")

To extract content from the uploaded PDF, we install the pypdf library and use PyPDFLoader from LangChain to load the document. The loader converts each page of the PDF into a separate document object, ready for downstream tasks like text splitting and embedding.

text_splitter = RecursiveCharacterTextSplitter(

    chunk_size=1000,

    chunk_overlap=200

)

chunks = text_splitter.split_documents(documents)

print(f"Split documents into {len(chunks)} chunks")

The loaded PDF is split into manageable chunks using RecursiveCharacterTextSplitter, with each chunk sized at up to 1000 characters and a 200-character overlap between neighbors. The overlap preserves context across chunk boundaries, which improves the relevance of retrieved passages during question answering.
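To see how chunk_size and chunk_overlap interact, here is a deliberately simplified fixed-window splitter. It is not how RecursiveCharacterTextSplitter works internally (that class also prefers to break on separators such as paragraph and line breaks, which this sketch ignores), and the function name chunk_text is hypothetical.

```python
def chunk_text(text, chunk_size=1000, chunk_overlap=200):
    """Split text into windows of chunk_size characters that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    pieces = []
    for start in range(0, len(text), step):
        pieces.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return pieces


# A 2500-character sample made of repeating lowercase letters.
sample = "".join(chr(97 + i % 26) for i in range(2500))
sample_chunks = chunk_text(sample)

print([len(c) for c in sample_chunks])  # [1000, 1000, 900]
# The last 200 characters of one chunk reappear at the start of the next.
print(sample_chunks[0][-200:] == sample_chunks[1][:200])  # True
```

With a step of 800 characters, the windows start at offsets 0, 800, and 1600, so every boundary region appears in two chunks.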

embeddings = HuggingFaceEmbeddings(

    model_name="all-MiniLM-L6-v2",

    model_kwargs={'device': 'cpu'}

)

persist_directory = "./chroma_db"

vectorstore = Chroma.from_documents(

    documents=chunks,

    embedding=embeddings,

    persist_directory=persist_directory

)

vectorstore.persist()

print(f"Vector store created and persisted to {persist_directory}")

The text chunks are embedded using the all-MiniLM-L6-v2 model from sentence-transformers, which runs on the CPU here, so that they can be compared by semantic similarity. The embeddings are then stored in a ChromaDB vector store persisted to ./chroma_db, which allows the index to be reused for similarity-based retrieval across sessions.
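The idea behind similarity-based retrieval can be shown without any model. The sketch below swaps in toy word-count vectors for the all-MiniLM-L6-v2 embeddings and ranks chunks by cosine similarity to the query. The names embed, cosine, and top_k are hypothetical, and a real embedding model captures meaning far better than word overlap does.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: a sparse vector of lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, texts, k=3):
    """Return the k texts most similar to the query, best first."""
    q = embed(query)
    return sorted(texts, key=lambda t: cosine(q, embed(t)), reverse=True)[:k]


toy_chunks = [
    "ollama serves local models",
    "chroma stores embedding vectors",
    "colab provides a free notebook",
]
best = top_k("where are embedding vectors stored", toy_chunks, k=1)
print(best)  # ['chroma stores embedding vectors']
```

A vector store such as Chroma performs the same kind of ranking, but over dense model embeddings and with an index built for fast lookup.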

llm = OllamaLLM(model="deepseek-r1:1.5b")

retriever = vectorstore.as_retriever(

    search_type="similarity",

    search_kwargs={"k": 3}

)

qa_chain = RetrievalQA.from_chain_type(

    llm=llm,

    chain_type="stuff",

    retriever=retriever,

    return_source_documents=True

)

print("RAG pipeline created successfully!")

The RAG pipeline is finalized by connecting the local DeepSeek-R1 model (via OllamaLLM) to the Chroma-based retriever. For each query, the retriever returns the 3 most similar chunks, and LangChain’s RetrievalQA chain with the “stuff” strategy places all of them into a single prompt from which the model generates a context-aware answer. This completes the local RAG setup.
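The “stuff” strategy amounts to concatenating every retrieved chunk into one prompt. The sketch below illustrates that idea in plain Python. The function name build_stuff_prompt and the template wording are hypothetical; LangChain's own default prompt text is different.

```python
def build_stuff_prompt(question, retrieved_chunks):
    """Place all retrieved chunks into a single prompt, in retrieval order."""
    context = "\n\n".join(retrieved_chunks)
    return (
        "Use the following context to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical retrieved chunks, standing in for the top-3 results.
retrieved = [
    "Chunk A: the report covers quarterly revenue.",
    "Chunk B: revenue grew in the third quarter.",
    "Chunk C: costs were flat over the same period.",
]
prompt = build_stuff_prompt("What is the main topic of this document?", retrieved)
print(prompt)
```

Because everything goes into one prompt, this approach is simple but bounded by the model's context window, which is one reason k is kept small here.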

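def query_rag(question):

    # Run retrieval and generation in one call; returns the answer and its sources

    result = qa_chain.invoke({"query": question})

    print("\nQuestion:", question)

    print("\nAnswer:", result["result"])

    print("\nSources:")

    for i, doc in enumerate(result["source_documents"]):

        # Show only the first 200 characters of each source chunk

        print(f"Source {i+1}:\n{doc.page_content[:200]}...\n")

    return result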

question = "What is the main topic of this document?"

result = query_rag(question)

To test the RAG pipeline, the query_rag function takes a user question, retrieves relevant context through the retriever, and generates an answer with the LLM. It also prints the first 200 characters of each source chunk, providing transparency and traceability for the model’s response.

In conclusion, this tutorial combines the retrieval power of ChromaDB, the orchestration capabilities of LangChain, and the reasoning abilities of DeepSeek-R1 served through Ollama. It shows how to build a lightweight RAG system that runs on Google Colab’s free tier. The solution lets users ask questions grounded in the content of their uploaded documents, with answers generated by a local LLM. This architecture provides a foundation for building customizable, privacy-friendly AI assistants without paying for hosted model APIs.


The post A Code Implementation to Use Ollama through Google Colab and Building a Local RAG Pipeline on Using DeepSeek-R1 1.5B through Ollama, LangChain, FAISS, and ChromaDB for Q&A appeared first on MarkTechPost.
