Hallucination remains a significant challenge in deploying Large Vision-Language Models (LVLMs), which often generate text that is misaligned with the visual input. Whereas hallucination in LLMs arises from linguistic inconsistencies, hallucination in LVLMs stems from cross-modal discrepancies, leading to inaccurate image descriptions or incorrect spatial relationships. These models pair a vision encoder, such as CLIP, with a pretrained text decoder to map visual information into language. Despite strong performance on tasks like image captioning, visual question answering, and medical treatment planning, LVLMs remain prone to hallucination, which limits their real-world applicability. The issue stems from several factors, including statistical biases in pretraining, over-reliance on language priors, and feature learning biases. However, existing research often overlooks the unique architecture of LVLMs and treats their hallucination mechanisms like those of LLMs, despite the distinct role of visual input processing.

To mitigate hallucination in LVLMs, researchers have explored both training-based and training-free approaches. Training-based solutions improve alignment with ground truth through additional supervision, but they require extensive datasets and computational resources. In contrast, training-free methods, such as self-feedback correction and auxiliary model integration, have gained popularity due to their efficiency. Some approaches refine the text decoding process to reduce inconsistencies, but these often fail to address hallucination that originates in the visual encoder. As LVLMs evolve, targeted solutions that consider both the visual and textual components will be crucial for improving their robustness and reliability in real-world applications.

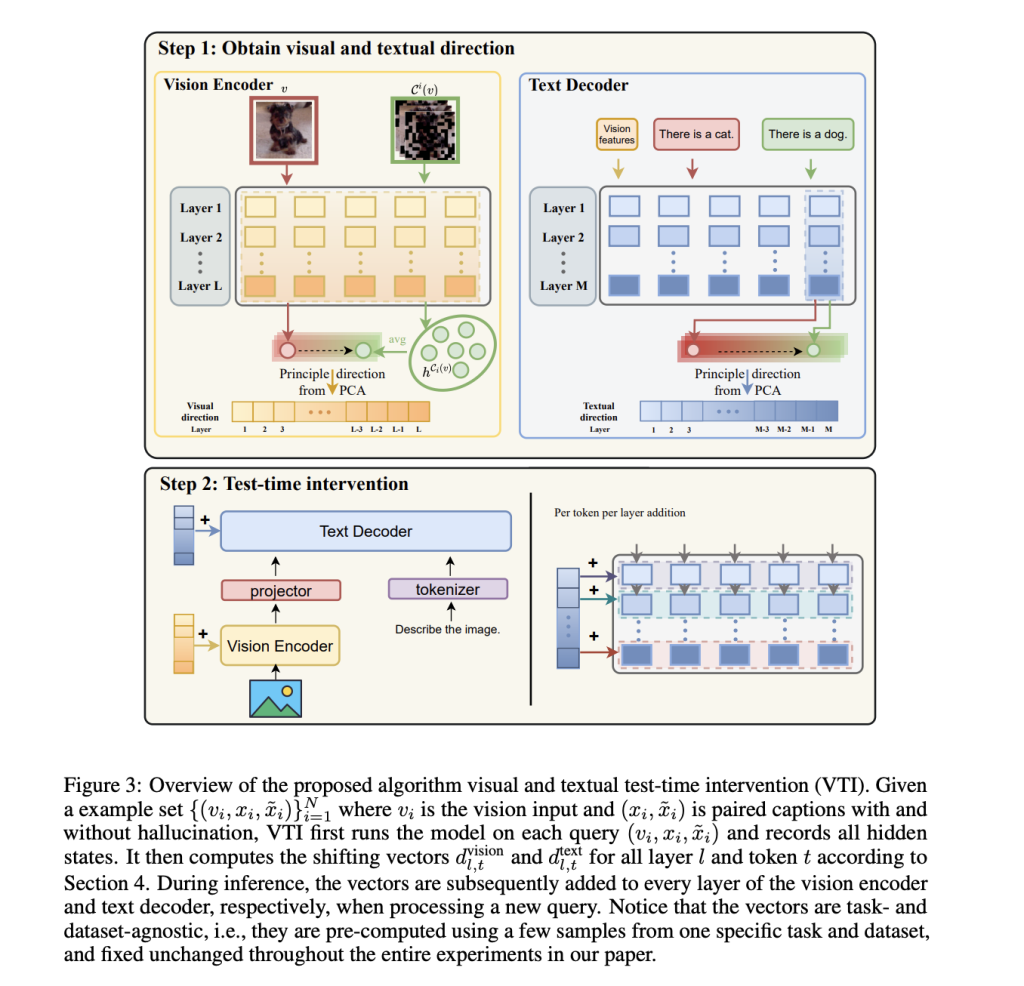

Researchers from Stanford University investigate the mechanisms behind hallucinations in LVLMs, focusing on the instability of vision encoders and its impact on text decoders. They introduce Visual and Textual Intervention (VTI), a test-time technique that stabilizes vision features by modifying latent space representations. Unlike traditional smoothing methods, VTI pre-computes transformation directions from perturbed images and applies them to new queries, reducing hallucinations without extra training costs. Experimental results show that VTI consistently outperforms baseline approaches across multiple benchmarks, underscoring the importance of vision feature stability in mitigating hallucinations and improving LVLM reliability.

An LVLM comprises a vision encoder and a text decoder, and unstable vision features can lead to hallucinations. The researchers find that perturbations in vision embeddings cause inconsistencies in the generated text. To address this, they propose VTI, which pre-computes stable feature shifts using Principal Component Analysis (PCA) on perturbed image embeddings. These shifts are then applied to new queries, improving feature stability without additional training. VTI also adjusts text decoder embeddings to reduce hallucinations. Experiments confirm its effectiveness in mitigating hallucinations while maintaining computational efficiency across diverse tasks and datasets.
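The visual side of this idea can be illustrated with a minimal sketch. It is not the authors' implementation: the linear toy encoder, the random-masking perturbation, the helper names (`random_mask`, `averaged_shift`, `principal_direction`, `steer`), and the choice to orient the PCA direction along the mean shift are all assumptions made for illustration. The sketch averages embeddings over perturbed copies of each calibration image, takes the first principal direction of the resulting shifts, and adds that direction to the features of a new query with a strength `alpha`.

```python
import numpy as np


def random_mask(image, rng, drop_prob=0.3):
    """Zero out a random subset of pixels (one possible perturbation; an assumption)."""
    keep = rng.random(image.shape) >= drop_prob
    return image * keep


def averaged_shift(encode, image, rng, n_perturb=20):
    """Mean embedding over perturbed copies minus the embedding of the clean image."""
    clean = encode(image)
    perturbed = np.stack([encode(random_mask(image, rng)) for _ in range(n_perturb)])
    return perturbed.mean(axis=0) - clean


def principal_direction(shifts):
    """First principal component of the per-example shifts, computed via SVD."""
    mean_shift = shifts.mean(axis=0)
    _, _, vt = np.linalg.svd(shifts - mean_shift, full_matrices=False)
    direction = vt[0]
    # PCA leaves the sign arbitrary; orienting along the mean shift is our choice.
    return -direction if direction @ mean_shift < 0 else direction


def steer(features, direction, alpha):
    """Shift features along the pre-computed direction with strength alpha."""
    return features + alpha * direction


# Toy stand-in for a vision encoder: a fixed random linear map (hypothetical).
rng = np.random.default_rng(0)
W = rng.normal(size=(16, 64))
encode = lambda image: W @ image

# Offline: collect shifts on a handful of calibration "images".
calibration = [rng.random(64) for _ in range(8)]
shifts = np.stack([averaged_shift(encode, img, rng) for img in calibration])
direction = principal_direction(shifts)

# Test time: steer the features of a new query without any training.
query_features = encode(rng.random(64))
steered = steer(query_features, direction, alpha=0.5)
print(steered.shape)
```

Because the direction is computed once offline, the per-query cost at test time is a single vector addition, which is consistent with the efficiency claim above. In a real LVLM the same addition would be applied to the encoder's latent states rather than to a toy projection.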

The study evaluates the effectiveness of VTI in mitigating hallucinations in LVLMs. Although the intervention directions are computed from only 80 COCO image-text pairs, the method generalizes across tasks and datasets. Experiments on POPE, CHAIR, and MMHAL-Bench show that VTI outperforms baseline methods such as OPERA and VCD. The results indicate that visual intervention stabilizes feature representations, while textual intervention strengthens attention to the image. Combining the two improves accuracy while preserving the richness of the generated text. Additionally, an ablation study on the intervention strengths α and β confirms their impact on reducing hallucinations. Overall, VTI addresses multimodal hallucinations without compromising content quality.
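For readers unfamiliar with CHAIR, the metric reports the share of mentioned objects that are not actually in the image (the instance-level score) and the share of captions that contain at least one such object (the sentence-level score). The sketch below is a simplified illustration, assuming the object mentions have already been extracted from each caption; the function name `chair_scores` and the toy data are hypothetical, and the object extraction and synonym mapping used by the real benchmark are omitted.

```python
def chair_scores(mentioned, ground_truth):
    """Return (CHAIR_i, CHAIR_s) for parallel lists of mentioned and true objects."""
    total_mentions = 0
    hallucinated_mentions = 0
    hallucinated_captions = 0
    for objects, truth in zip(mentioned, ground_truth):
        # An object counts as hallucinated if it is not present in the image.
        wrong = [obj for obj in objects if obj not in truth]
        total_mentions += len(objects)
        hallucinated_mentions += len(wrong)
        hallucinated_captions += bool(wrong)
    chair_i = hallucinated_mentions / total_mentions if total_mentions else 0.0
    chair_s = hallucinated_captions / len(mentioned) if mentioned else 0.0
    return chair_i, chair_s


# Toy data: the first caption mentions a "bench" that is not in the image.
mentioned = [["dog", "frisbee", "bench"], ["cat", "sofa"]]
ground_truth = [{"dog", "frisbee"}, {"cat", "sofa"}]
chair_i, chair_s = chair_scores(mentioned, ground_truth)
print(chair_i, chair_s)  # 0.2 0.5
```

Lower values are better on both scores, so a method that reduces hallucination should push both toward zero.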

In conclusion, the study presents VTI as an effective method to mitigate hallucinations in LVLMs. Unlike hallucinations in LLMs, those in LVLMs stem from misalignments between visual inputs and textual outputs, often due to separately pre-trained image encoders and text decoders. VTI stabilizes vision features by adjusting latent space representations during inference, requiring no additional training. Experimental results confirm its superiority over baseline methods in reducing hallucinations while maintaining output quality. These findings emphasize the importance of robust feature representation, paving the way for more accurate and reliable LVLM applications in real-world settings.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Mitigating Hallucinations in Large Vision-Language Models: A Latent Space Steering Approach appeared first on MarkTechPost.
