Large vision-language models (LVLMs) integrate large language models with image processing capabilities, enabling them to interpret images and generate coherent textual responses. While they excel at recognizing visual objects and responding to prompts, they often falter when presented with problems requiring multi-step reasoning. Vision-language tasks like understanding charts, solving visual math questions, or interpreting diagrams demand more than recognition; they need the ability to follow logical steps based on visual cues. Despite advancements in model architecture, current systems consistently struggle to produce accurate and interpretable answers in such complex scenarios.

A major limitation of current vision-language models is their inability to perform reasoning that involves multiple steps of logical deduction, especially when interpreting images in conjunction with textual queries. These models often cannot internally verify or correct their reasoning, which leads to incorrect or shallow outputs. The reasoning chains they follow are also typically not transparent or verifiable, making it difficult to ensure the robustness of their conclusions. The challenge lies in bridging this reasoning gap, which text-only models have begun to address effectively through reinforcement learning techniques but which vision-language models have yet to embrace fully.

Before this study, efforts to enhance reasoning in such systems mostly relied on standard fine-tuning or prompting techniques. Though helpful in basic tasks, these approaches often resulted in verbose or repetitive outputs with limited depth. Vision-language models like Qwen2.5-VL-7B showed promise due to their visual instruction-following abilities but lacked multi-step reasoning comparable to that of text-only counterparts such as DeepSeek-R1. Even when prompted with structured queries, these models struggled to reflect upon their outputs or validate intermediate reasoning steps. This was a significant bottleneck, particularly for use cases requiring structured decision-making, such as visual problem-solving or educational support tools.

Researchers from the University of California, Los Angeles, introduced a model named OpenVLThinker-7B. This model was developed through a novel training method that combines supervised fine-tuning (SFT) and reinforcement learning (RL) in an iterative loop. The process started by generating image captions using Qwen2.5-VL-3B and feeding these into a distilled version of DeepSeek-R1 to produce structured reasoning chains. These outputs formed the training data for the first round of SFT, guiding the model in learning basic reasoning structures. Following this, a reinforcement learning stage using Group Relative Policy Optimization (GRPO) was applied to refine the model’s reasoning based on reward feedback. This combination enabled the model to progressively self-improve, using each iteration’s refined outputs as new training data for the next cycle.
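
The "group relative" part of GRPO can be illustrated with a small sketch: several answers are sampled for the same image-question pair, and each answer's reward is normalized against the mean and standard deviation of its own group. The function name, the `eps` constant, and the use of the population standard deviation are assumptions made for illustration; this is not the authors' training code.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the other samples in its group.

    `rewards` holds one scalar per sampled response to the same prompt.
    `eps` (an assumed constant) avoids division by zero when every
    response in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; implementations may differ
    return [(r - mu) / (sigma + eps) for r in rewards]

# Four sampled answers to one image-question pair:
# 1.0 means the final answer was correct, 0.0 means it was not.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
for reward, advantage in zip(rewards, advantages):
    print(f"reward={reward:.1f}  advantage={advantage:+.3f}")
```

Correct answers end up with positive advantages and incorrect ones with negative advantages. When every answer in a group gets the same reward, all advantages are zero and that group contributes no learning signal.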

The method involved careful data curation and multiple training phases. In the first iteration, 25,000 examples were used for SFT, sourced from datasets like FigureQA, Geometry3K, TabMWP, and VizWiz. These examples were filtered to remove overly verbose or redundant reflections, improving training quality. GRPO was then applied to a smaller, more difficult dataset of 5,000 samples, which raised accuracy on the MathVista benchmark from 62.5% to 65.6%. In the second iteration, another 5,000 high-quality examples were used for SFT, raising accuracy to 66.1%, and a second round of GRPO pushed it to 69.4%. Across these phases, the model was evaluated on three benchmarks (MathVista, MathVerse, and MathVision) and showed consistent performance gains with each iteration.
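
The filtering step can be pictured as a simple heuristic that drops reasoning traces with too many self-reflection phrases or too many words. The article does not give the actual criteria, so the marker list, both thresholds, and the `reasoning` field name below are hypothetical.

```python
import re

# Hypothetical marker list: the source does not say which phrases were checked.
REFLECTION_MARKERS = ("wait", "hmm", "let me double-check", "on second thought")

def count_reflections(text):
    """Count whole-phrase occurrences of the reflection markers."""
    lowered = text.lower()
    return sum(
        len(re.findall(r"\b" + re.escape(marker) + r"\b", lowered))
        for marker in REFLECTION_MARKERS
    )

def filter_verbose(examples, max_reflections=3, max_words=400):
    """Keep examples whose reasoning trace stays under both assumed limits."""
    kept = []
    for example in examples:
        trace = example["reasoning"]
        if count_reflections(trace) > max_reflections:
            continue  # too many redundant self-reflections
        if len(trace.split()) > max_words:
            continue  # overly long trace
        kept.append(example)
    return kept

examples = [
    {"id": "a", "reasoning": "The bars show 3 and 5, so the total is 8."},
    {"id": "b", "reasoning": "Wait, wait. Hmm, wait, wait, is it 8? Wait, yes."},
]
print([example["id"] for example in filter_verbose(examples)])
```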

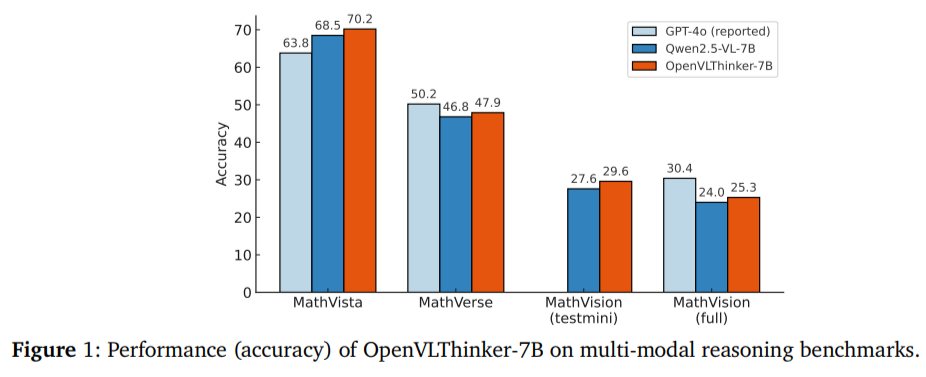

Quantitatively, OpenVLThinker-7B outperformed its base model, Qwen2.5-VL-7B, significantly. On MathVista, it reached 70.2% accuracy compared to the base model’s 50.2%. On MathVerse, the improvement was from 46.8% to 68.5%. MathVision full test accuracy rose from 24.0% to 29.6%, and MathVision testmini improved from 25.3% to 30.4%. These improvements indicate that the model learned to follow reasoning patterns and generalized better to unseen multimodal tasks. Each iteration of training contributed measurable gains, showcasing the strength of combining fine-tuning with reward-based learning in a looped structure.
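
To put these figures side by side, the short calculation below takes the base and final accuracies exactly as reported in this article and derives the absolute gain in percentage points and the relative gain over the base score. The `gains` helper is a name introduced here for illustration.

```python
# (base accuracy, final accuracy) in percent, as reported in this article.
scores = {
    "MathVista": (50.2, 70.2),
    "MathVerse": (46.8, 68.5),
    "MathVision (full)": (24.0, 29.6),
    "MathVision (testmini)": (25.3, 30.4),
}

def gains(base, final):
    """Return (absolute gain in points, relative gain in percent)."""
    absolute = round(final - base, 1)
    relative = round(100 * (final - base) / base, 1)
    return absolute, relative

for benchmark, (base, final) in scores.items():
    absolute, relative = gains(base, final)
    print(f"{benchmark:<22} +{absolute:.1f} points  (+{relative:.1f}% relative)")
```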

The core of this model’s strength lies in its iterative structure. Rather than relying solely on vast datasets, it focuses on quality and structure. Each cycle of SFT and RL improves the model’s capacity to understand the relationship between images, questions, and answers. Self-verification and correction behaviors, initially lacking in standard LVLMs, emerged as a byproduct of reinforcement learning with verifiable reward signals. This allowed OpenVLThinker-7B to produce reasoning traces that were logically consistent and interpretable. Even subtle improvements, such as reduced redundant self-reflections or increased accuracy with shorter reasoning chains, contributed to its overall performance gains.
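
A verifiable reward is one that can be checked automatically against a known answer rather than scored by another model. The sketch below shows one way such a check could look, assuming the model wraps its final answer in `<answer>` tags and is scored by exact match. Both the tag format and the matching rule are assumptions and are not taken from the paper.

```python
import re

def extract_answer(response):
    """Return the text inside the last <answer>...</answer> pair, or None."""
    matches = re.findall(r"<answer>(.*?)</answer>", response, flags=re.DOTALL)
    return matches[-1].strip() if matches else None

def verifiable_reward(response, ground_truth):
    """Give 1.0 for a correct, well-formed final answer and 0.0 otherwise."""
    predicted = extract_answer(response)
    if predicted is None:
        return 0.0  # no parseable final answer
    # Case-insensitive exact match (an assumed rule).
    return 1.0 if predicted.lower() == ground_truth.strip().lower() else 0.0

response = "The triangle is isosceles, so both base angles are equal. <answer>40</answer>"
print(verifiable_reward(response, "40"))
print(verifiable_reward("I think it is 40.", "40"))
```

Because the check depends only on the final answer, the model is free to shorten or restructure its reasoning as long as the answer stays correct.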

Some Key Takeaways from the Research:

- UCLA researchers developed OpenVLThinker-7B using a combined SFT and RL approach, starting from the Qwen2.5-VL-7B base model.

- Training used iterative cycles of caption generation, reasoning distillation, and alternating SFT and GRPO reinforcement learning.

- The initial SFT used 25,000 filtered examples, while the RL phases used smaller sets of 5,000 harder samples from datasets like Geometry3K and SuperCLEVR.

- On MathVista, accuracy improved from 50.2% (base model) to 70.2%. MathVerse accuracy jumped from 46.8% to 68.5%, and other datasets also saw notable gains.

- GRPO effectively refined reasoning behaviors by rewarding correct answers, reducing verbosity, and improving logical consistency.

- Each training iteration led to incremental performance gains, confirming the effectiveness of the self-improvement strategy.

- The work establishes a viable route for bringing R1-style multi-step reasoning into multimodal models, with potential uses in education, visual analytics, and assistive technology.


The post UCLA Researchers Released OpenVLThinker-7B: A Reinforcement Learning Driven Model for Enhancing Complex Visual Reasoning and Step-by-Step Problem Solving in Multimodal Systems appeared first on MarkTechPost.
