Large language models (LLMs) have become vital across domains, powering applications such as natural language generation, scientific research, and conversational agents. Underlying these advances is the transformer architecture, in which alternating layers of attention and feed-forward networks (FFNs) process tokenized input sequentially. However, as models grow in size and complexity, the computation required for inference grows substantially, creating an efficiency bottleneck. Efficient inference is now a critical concern, and many research groups are pursuing strategies that reduce latency, increase throughput, and cut computational costs while maintaining or improving model performance.

At the center of this efficiency problem lies the inherently sequential structure of transformers. Each layer’s output feeds into the next, demanding strict order and synchronization, which is especially problematic at scale. As model sizes expand, the cost of sequential computation and communication across GPUs grows, leading to reduced efficiency and increased deployment cost. This challenge is amplified in scenarios requiring fast, multi-token generation, such as real-time AI assistants. Reducing this sequential load while maintaining model capabilities presents a key technical hurdle. Unlocking new parallelization strategies that preserve accuracy yet significantly reduce computation depth is essential to broadening the accessibility and scalability of LLMs.

Several techniques have emerged to improve efficiency. Quantization lowers the numerical precision of weights and activations to cut memory and compute requirements, though it risks accuracy loss, especially at low bit-widths. Pruning removes redundant parameters to simplify models but can harm accuracy if not applied carefully. Mixture-of-Experts (MoE) models activate only a subset of parameters per input, which makes them highly efficient for certain workloads; however, they can underperform at intermediate batch sizes because of low hardware utilization. Valuable as they are, these strategies involve trade-offs that limit their universal applicability. Consequently, the field seeks methods that offer broad efficiency improvements with fewer compromises, especially for dense architectures, which are simpler to train, deploy, and maintain.

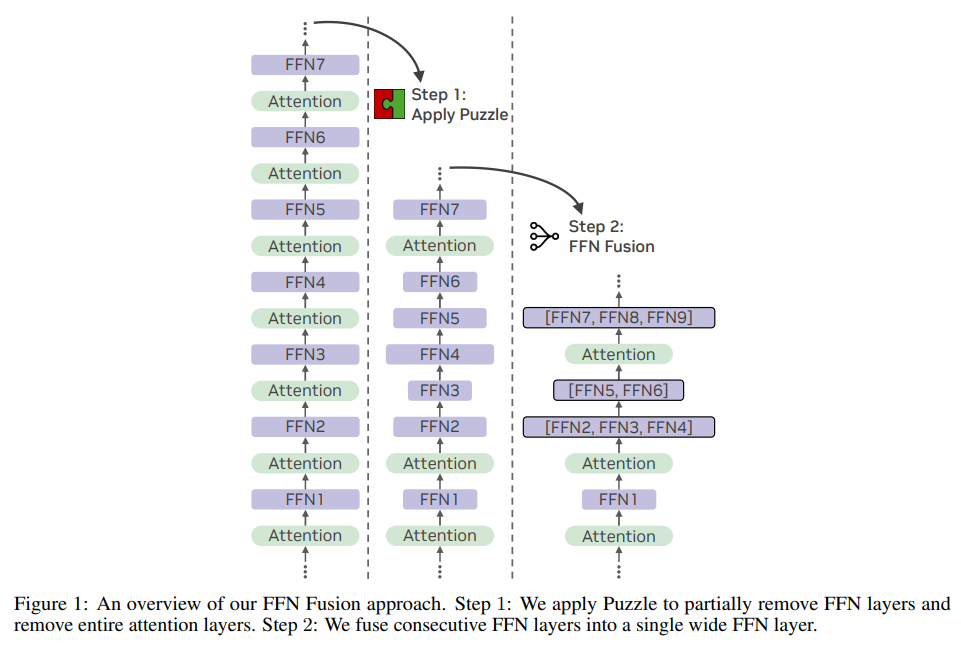

Researchers at NVIDIA introduced FFN Fusion, an architectural optimization technique that addresses the sequential bottleneck in transformers by identifying sequences of FFN layers that can be executed in parallel. The approach grew out of an observation: when attention layers are removed with Puzzle, NVIDIA's neural architecture search framework, the resulting models often contain long runs of consecutive FFNs. These runs show minimal interdependency and can therefore be processed simultaneously. By analyzing the structure of LLMs such as Llama-3.1-405B-Instruct, the researchers pruned and restructured the base model with FFN Fusion to create Ultra-253B-Base, a significantly more efficient model that remains competitive in performance.

FFN Fusion replaces a run of consecutive FFN layers with a single, wider FFN. The fusion step rests on an exact identity: concatenating the weights of several FFNs yields one module whose output equals the sum of the individual FFNs applied to the same input, and that wide module can be computed in parallel. For instance, if three FFNs are stacked sequentially, each depending on the output of the previous one, fusion removes these dependencies by feeding all three the same input and summing their outputs. The concatenation itself loses nothing, since the fused FFN keeps every original parameter; the approximation lies in dropping the sequential dependency, so the method only works where consecutive FFNs barely influence one another. To find such regions, the researchers performed a dependency analysis using cosine distance between FFN outputs. Regions where token representations changed direction very little from layer to layer were judged good candidates for fusion, since little directional change indicates that parallel processing is feasible.
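The fusion identity can be checked numerically. The sketch below assumes Llama-style SwiGLU FFNs (gate, up, and down projections) wrapped in residual connections, omits the normalization layers for brevity, and uses small random weights instead of real model parameters; `fuse_ffns`, `sequential_block`, and `parallel_block` are hypothetical helper names, not the researchers' code. It shows that the concatenated wide FFN reproduces the parallel sum up to floating-point rounding, while the gap to the original sequential stack is the approximation that dependency analysis must keep small.

```python
import numpy as np

def silu(x):
    # SiLU (swish) activation used in Llama-style SwiGLU FFNs
    return x / (1.0 + np.exp(-x))

def swiglu_ffn(x, w_gate, w_up, w_down):
    # x: (tokens, d_model); w_gate, w_up: (d_model, d_ff); w_down: (d_ff, d_model)
    return (silu(x @ w_gate) * (x @ w_up)) @ w_down

def random_ffn(rng, d_model, d_ff, scale=0.1):
    # Hypothetical random weights standing in for a trained FFN layer
    return (rng.normal(0.0, scale, (d_model, d_ff)),
            rng.normal(0.0, scale, (d_model, d_ff)),
            rng.normal(0.0, scale, (d_ff, d_model)))

def fuse_ffns(ffns):
    # Place gate/up projections side by side (wider hidden dimension) and stack the
    # down projections along the matching axis, giving one FFN of width n * d_ff
    w_gate = np.concatenate([f[0] for f in ffns], axis=1)
    w_up = np.concatenate([f[1] for f in ffns], axis=1)
    w_down = np.concatenate([f[2] for f in ffns], axis=0)
    return w_gate, w_up, w_down

def sequential_block(x, ffns):
    # Original stack: each FFN reads the residual stream updated by the previous one
    for w in ffns:
        x = x + swiglu_ffn(x, *w)
    return x

def parallel_block(x, ffns):
    # Parallel form: every FFN reads the same input and the outputs are summed
    return x + sum(swiglu_ffn(x, *w) for w in ffns)

rng = np.random.default_rng(0)
d_model, d_ff, n_ffns = 16, 32, 3
ffns = [random_ffn(rng, d_model, d_ff) for _ in range(n_ffns)]
x = rng.normal(size=(4, d_model))

fused = fuse_ffns(ffns)
fused_out = x + swiglu_ffn(x, *fused)

# Concatenation is exact: only floating-point rounding separates these two
exact_gap = np.max(np.abs(fused_out - parallel_block(x, ffns)))
# Dropping the sequential dependency is the actual approximation
approx_gap = np.max(np.abs(fused_out - sequential_block(x, ffns)))
print(f"fused vs parallel:   {exact_gap:.2e}")
print(f"fused vs sequential: {approx_gap:.2e}")
```

Once fused, each projection runs as one larger matrix multiplication instead of several dependent ones, which shortens the chain of sequential steps a forward pass must wait on.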

Applying FFN Fusion to the Llama-405B model produced Ultra-253B-Base, which delivered notable gains in speed and resource efficiency. The new model achieved a 1.71x speedup in inference latency and a 35x reduction in per-token computational cost at a batch size of 32. This efficiency did not come at the expense of capability: Ultra-253B-Base scored 85.17% on MMLU, 72.25% on MMLU-Pro, 84.92% on Arena Hard, 86.58% on HumanEval, and 9.19 on MT-Bench. These results often matched or exceeded those of the original 405B-parameter model, even though Ultra-253B-Base has only 253 billion parameters. Its kv-cache memory requirement was also halved. Training consisted of knowledge distillation on 54 billion tokens at an 8k context window, followed by staged fine-tuning at 16k, 32k, and 128k contexts, which allowed the fused model to retain high accuracy despite its reduced size.
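Why removing attention layers shrinks the kv-cache can be seen from how the cache is sized: keys and values are stored for every attention layer, so the footprint scales linearly with the number of attention layers that remain. The arithmetic below is a sketch under stated assumptions. It uses Llama 3.1 405B's published configuration (126 layers, 8 key/value heads with grouped-query attention, head dimension 128) and bf16 storage; the 63-layer variant is a hypothetical model keeping half the attention layers, not Ultra-253B-Base's actual layout, and `kv_cache_bytes_per_token` is an illustrative helper.

```python
def kv_cache_bytes_per_token(n_attn_layers, n_kv_heads, head_dim, bytes_per_value=2):
    # One key vector and one value vector (factor 2) per KV head, per attention layer
    return 2 * n_attn_layers * n_kv_heads * head_dim * bytes_per_value

# Llama 3.1 405B: 126 layers, 8 KV heads (GQA), head_dim = 16384 / 128 = 128, bf16 storage
baseline = kv_cache_bytes_per_token(126, 8, 128)
# Hypothetical derived model that keeps only half of the attention layers
reduced = kv_cache_bytes_per_token(63, 8, 128)

context_tokens = 128 * 1024
for name, per_token in [("baseline", baseline), ("half the attention layers", reduced)]:
    gib = per_token * context_tokens / 2**30
    print(f"{name}: {per_token:,} bytes/token, {gib:.1f} GiB per 128K-token sequence")
# baseline: 516,096 bytes/token, 63.0 GiB per 128K-token sequence
# half the attention layers: 258,048 bytes/token, 31.5 GiB per 128K-token sequence
```

Because the saving is per token, it grows with context length and batch size, which is where kv-cache memory usually becomes the limiting factor.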

This research demonstrates how thoughtful architectural redesign can unlock significant efficiency gains. The researchers showed that FFN layers in transformers are often more independent of one another than previously assumed. Because their method quantifies inter-layer dependency directly and then restructures the model accordingly, it can be applied to models of various sizes; the technique was also validated on a 70B-parameter model, suggesting that it generalizes. Further experiments indicated that while FFN layers can often be fused with minimal impact, parallelizing full transformer blocks, attention included, causes more performance degradation because of stronger interdependencies.
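A rough version of the dependency analysis can be written as a simple proxy. The sketch below measures, for each layer, the mean cosine distance between the residual stream entering and leaving it, then reports runs of consecutive layers whose direction change stays below a threshold. It is a simplified stand-in based on the cosine-distance idea described above, not the paper's exact dependency metric; the helper names, the 0.01 threshold, the `min_len` parameter, and the synthetic hidden states (used in place of activations captured from a real model) are all assumptions.

```python
import numpy as np

def cosine_distance(a, b, eps=1e-8):
    # Row-wise 1 - cos(a, b) for (tokens, d_model) arrays
    num = np.sum(a * b, axis=-1)
    den = np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1) + eps
    return 1.0 - num / den

def layer_direction_change(hidden_states):
    # hidden_states[k] is the input to layer k and hidden_states[k + 1] its output;
    # returns the mean per-token cosine distance for each layer
    return [float(np.mean(cosine_distance(h_in, h_out)))
            for h_in, h_out in zip(hidden_states[:-1], hidden_states[1:])]

def fusion_candidates(scores, threshold, min_len=2):
    # (start, end) layer ranges, end exclusive, where consecutive layers all stay
    # below `threshold`; runs shorter than min_len are not worth fusing
    runs, start = [], None
    for i, s in enumerate(scores + [np.inf]):  # sentinel closes a trailing run
        if s < threshold and start is None:
            start = i
        elif s >= threshold and start is not None:
            if i - start >= min_len:
                runs.append((start, i))
            start = None
    return runs

rng = np.random.default_rng(0)
tokens, d_model = 4, 64
hidden = [rng.normal(size=(tokens, d_model))]
# Synthetic per-layer update sizes: layers 2-5 barely move the residual stream
update_scale = [1.0, 1.0, 0.01, 0.01, 0.01, 0.01, 1.0, 1.0]
for scale in update_scale:
    hidden.append(hidden[-1] + scale * rng.normal(size=(tokens, d_model)))

scores = layer_direction_change(hidden)
print([round(s, 4) for s in scores])
print(fusion_candidates(scores, threshold=0.01))  # expected: [(2, 6)]
```

A run like layers 2 to 5 would then be handed to the weight-concatenation step; the same proxy applied to whole blocks, attention included, would be expected to flag fewer and shorter runs, consistent with the stronger interdependencies reported above.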

Key takeaways from the FFN Fusion research:

- The FFN Fusion technique reduces sequential computation in transformers by parallelizing low-dependency FFN layers.

- Fusion is achieved by replacing sequences of FFNs with a single wider FFN using concatenated weights.

- Ultra-253B-Base, derived from Llama-3.1-405B, achieves 1.71x faster inference and 35x lower per-token cost at a batch size of 32.

- Benchmark results include: 85.17% (MMLU), 72.25% (MMLU-Pro), 86.58% (HumanEval), 84.92% (Arena Hard), and 9.19 (MT-Bench).

- kv-cache memory requirements are cut in half.

- FFN Fusion is more effective at larger model scales and works well with techniques like pruning and quantization.

- Full transformer block parallelization shows potential but requires further research due to stronger interdependencies.

- A systematic method using cosine distance helps identify which FFN sequences are safe to fuse.

- The technique is validated across different model sizes, including 49B, 70B, and 253B.

- This approach lays the foundation for more parallel-friendly and hardware-efficient LLM designs.


The post NVIDIA AI Researchers Introduce FFN Fusion: A Novel Optimization Technique that Demonstrates How Sequential Computation in Large Language Models LLMs can be Effectively Parallelized appeared first on MarkTechPost.
