Despite growing interest in Multi-Agent Systems (MASs), in which multiple LLM-based agents collaborate on complex tasks, their performance gains over single-agent frameworks remain limited. MASs have been explored in software engineering, drug discovery, and scientific simulation, yet they often struggle with coordination inefficiencies that lead to high failure rates. These failures point to key challenges, including task misalignment, mismatches between an agent's reasoning and its actions, and ineffective verification mechanisms. Empirical evaluations show that even state-of-the-art open-source MASs, such as ChatDev, can have low success rates, raising questions about their reliability. Unlike single-agent frameworks, MASs must also contend with inter-agent misalignment, conversation resets, and incomplete task verification, all of which significantly reduce their effectiveness. Moreover, existing best practices such as best-of-N sampling often outperform MASs, underscoring the need for a deeper understanding of their limitations.
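
Best-of-N sampling, the baseline mentioned above, is simple enough to sketch in a few lines: draw N independent answers from a single agent and keep the one a scoring function ranks highest. This is a minimal sketch under stated assumptions, not the paper's setup: `generate` (a single-agent LLM call) and `score` (a verifier or reward model) are hypothetical callables, and the toy stand-ins exist only to make the example runnable.

```python
import random
from typing import Callable, List


def best_of_n(
    prompt: str,
    generate: Callable[[str, int], str],
    score: Callable[[str, str], float],
    n: int = 5,
) -> str:
    """Draw n independent candidates and return the highest-scoring one.

    `generate(prompt, seed)` and `score(prompt, candidate)` are hypothetical
    stand-ins for a single-agent LLM call and a verifier/reward model.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    # One independent sample per seed
    candidates: List[str] = [generate(prompt, seed) for seed in range(n)]
    # max() returns the first candidate among ties
    return max(candidates, key=lambda c: score(prompt, c))


# Toy stand-ins: a "model" that guesses numbers, scored by closeness to 42
def toy_generate(prompt: str, seed: int) -> str:
    return str(random.Random(seed).randint(0, 100))


def toy_score(prompt: str, candidate: str) -> float:
    return -abs(int(candidate) - 42)


if __name__ == "__main__":
    print(best_of_n("Guess the number", toy_generate, toy_score, n=8))
```

The appeal of this baseline is that it needs no inter-agent coordination at all; its quality depends almost entirely on how well `score` separates good answers from bad ones.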

Existing research has tackled specific challenges in agentic systems, such as improving workflow memory, enhancing state control, and refining communication flows. However, these approaches do not offer a holistic strategy for improving MAS reliability across domains. While various benchmarks assess agentic systems on performance, security, and trustworthiness, there is no consensus on how to build robust MASs. Prior studies highlight the risks of overcomplicating agentic frameworks and stress the importance of modular design, yet systematic investigations into MAS failure modes remain scarce. This work addresses that gap with a structured taxonomy of MAS failures and design principles for improving their reliability, paving the way for more effective multi-agent LLM systems.

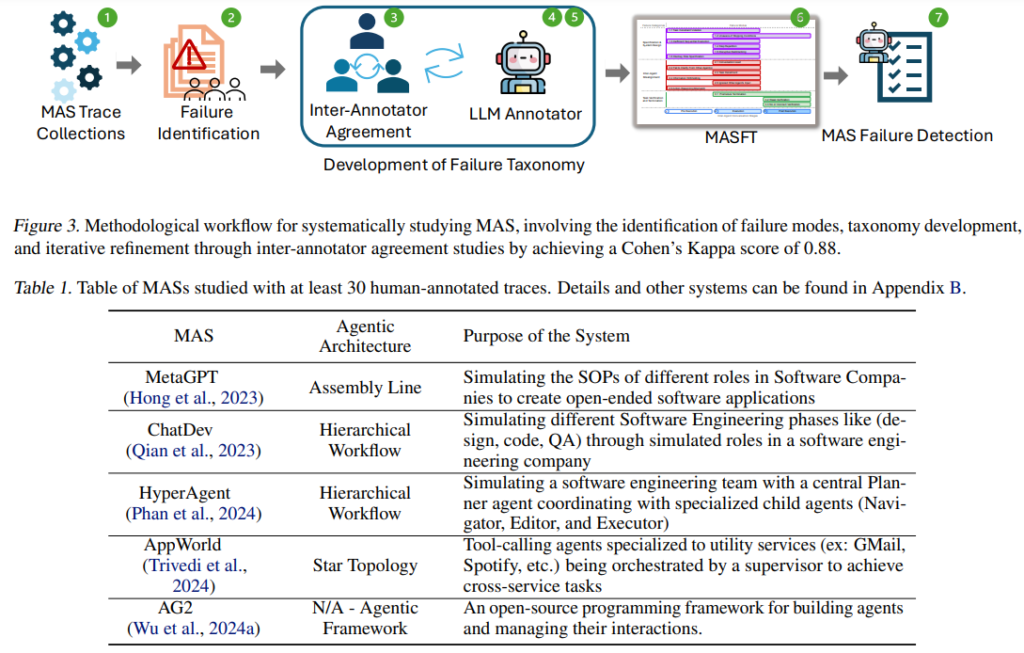

Researchers from UC Berkeley and Intesa Sanpaolo present what they describe as the first comprehensive study of MAS challenges, analyzing five MAS frameworks across more than 150 tasks with the help of expert human annotators. They identify 14 failure modes, grouped into three categories (system design flaws, inter-agent misalignment, and task verification issues), which together form the Multi-Agent System Failure Taxonomy (MASFT). To support scalable evaluation, they also develop an LLM-as-a-judge pipeline that achieves high agreement with human annotators. Interventions such as improved agent specifications and orchestration strategies do not eliminate these failures, which points to the need for structural redesigns. The work, including datasets and annotations, is open-sourced to guide future MAS research and development.
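
An LLM-as-a-judge annotator can be sketched as a prompt that lists candidate failure modes plus a parser for the judge's structured verdict. This is a minimal illustration, not the authors' pipeline: `judge` is a hypothetical wrapper around any chat-completion call, the JSON response format is an assumption, and the three labels are an illustrative subset drawn from issues named in this article rather than the full 14-mode MASFT.

```python
import json
from typing import Callable, Dict, Sequence

# Illustrative subset of failure-mode labels (hypothetical names);
# NOT the complete MASFT taxonomy.
FAILURE_MODES = (
    "conversation_reset",
    "reasoning_action_mismatch",
    "incomplete_verification",
)


def build_judge_prompt(trace: str, modes: Sequence[str]) -> str:
    """Assemble a prompt asking the judge model to flag each failure mode."""
    mode_list = "\n".join(f"- {m}" for m in modes)
    return (
        "You are reviewing a multi-agent system execution trace.\n"
        f"For each failure mode below, answer true or false:\n{mode_list}\n"
        "Respond with a JSON object mapping each mode to a boolean.\n\n"
        f"TRACE:\n{trace}"
    )


def annotate_trace(
    trace: str,
    judge: Callable[[str], str],
    modes: Sequence[str] = FAILURE_MODES,
) -> Dict[str, bool]:
    """Run the (hypothetical) judge on one trace and parse its JSON verdict.

    Any mode the judge omits, or a response that is not a JSON object,
    is treated as "not detected".
    """
    raw = judge(build_judge_prompt(trace, modes))
    try:
        verdict = json.loads(raw)
    except json.JSONDecodeError:
        verdict = {}
    if not isinstance(verdict, dict):
        verdict = {}
    # Only an explicit JSON `true` counts as a detection
    return {m: verdict.get(m) is True for m in modes}


if __name__ == "__main__":
    fake_judge = lambda prompt: '{"conversation_reset": true}'
    print(annotate_trace("Agent A: ... Agent B: let's start over", fake_judge))
    # {'conversation_reset': True, 'reasoning_action_mismatch': False, 'incomplete_verification': False}
```

Treating malformed or missing answers as "not detected" keeps the parser conservative; a real pipeline would likely also log parse failures so they can be reviewed rather than silently counted as clean traces.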

The study examines failure patterns in MASs and organizes them into a structured taxonomy. Following the Grounded Theory (GT) approach, a qualitative method in which categories emerge from the data rather than from predefined hypotheses, the researchers analyze MAS execution traces iteratively, refining and validating the failure categories through inter-annotator agreement studies. They also develop an LLM-based annotator for automated failure detection, which achieves 94% accuracy against human annotations. Failures fall into three categories: system design flaws, inter-agent misalignment, and inadequate task verification. The results show a diverse spread of failure modes across MAS architectures, highlighting the need for better coordination, clearer role definitions, and robust verification mechanisms to improve MAS performance.
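
Agreement between an automated annotator and human experts can be quantified with simple statistics. The sketch below computes raw accuracy (the metric reported as 94% above) and Cohen's kappa, a common chance-corrected agreement measure, `kappa = (p_o - p_e) / (1 - p_e)`, where `p_o` is observed agreement and `p_e` is the agreement expected by chance. The label sequences are made-up toy data, and the choice of kappa here is an assumption: the article does not say which statistic underlies its inter-annotator studies.

```python
from collections import Counter
from typing import Hashable, Sequence


def accuracy(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Fraction of items on which the two annotators assign the same label."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("label sequences must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement: (p_o - p_e) / (1 - p_e)."""
    p_o = accuracy(a, b)  # observed agreement (also validates the inputs)
    n = len(a)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement from each annotator's marginal label frequencies
    p_e = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label everywhere; kappa is
        # undefined, and this sketch simply returns 1.0 in that case
        return 1.0
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Toy binary labels (1 = failure mode present) for ten traces
    human = [1, 1, 0, 0, 1, 0, 1, 1, 0, 1]
    llm = [1, 1, 0, 1, 1, 0, 1, 1, 0, 0]
    print(accuracy(human, llm))                # 0.8
    print(round(cohens_kappa(human, llm), 3))  # 0.583
```

The toy example shows why both numbers are worth reporting: 80% raw agreement shrinks to a kappa of about 0.58 once the agreement two annotators would reach by chance, given these label frequencies, is subtracted out.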

Strategies for reducing MAS failures fall into two groups: tactical and structural. Tactical approaches include refining prompts, reorganizing agents, managing interactions, and adding clarification and verification steps, but their effectiveness varies. Structural strategies target system-wide improvements, such as stronger verification mechanisms, standardized communication, reinforcement learning, and better memory management. Two case studies, MathChat and ChatDev, illustrate these approaches. In MathChat, refining prompts and agent roles improved results, but not consistently. In ChatDev, the researchers strengthened role adherence and modified the framework's topology to support iterative verification. While these interventions help, substantial improvements require deeper structural changes, underscoring the need for further research on MAS reliability.
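
The iterative-verification idea from the ChatDev case study can be illustrated with a generic generate, verify, revise loop that also has an explicit stopping rule, which targets both incomplete verification and unclear termination. This is a hypothetical sketch, not ChatDev's actual topology: `worker` and `verifier` stand in for two LLM agents, their call signatures are assumptions, and `max_rounds` is an assumed budget.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class RunResult:
    output: str        # last draft produced by the worker
    verified: bool     # True only if the verifier accepted `output`
    rounds: int        # number of worker/verifier rounds used
    feedback_log: List[str] = field(default_factory=list)


def run_with_verification(
    task: str,
    worker: Callable[[str, Optional[str]], str],
    verifier: Callable[[str, str], Tuple[bool, str]],
    max_rounds: int = 3,
) -> RunResult:
    """Generate -> verify -> revise loop with an explicit stopping rule.

    `worker(task, feedback)` and `verifier(task, output)` are hypothetical
    agent interfaces; the verifier returns (passed, feedback).
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    feedback: Optional[str] = None
    output = ""
    log: List[str] = []
    for round_idx in range(1, max_rounds + 1):
        # The worker sees the verifier's latest feedback (None on round 1)
        output = worker(task, feedback)
        passed, feedback = verifier(task, output)
        log.append(feedback)
        if passed:
            return RunResult(output, True, round_idx, log)
    # Budget exhausted: return the last draft, explicitly flagged as unverified
    return RunResult(output, False, max_rounds, log)


if __name__ == "__main__":
    # Toy agents: the worker fixes its draft once it receives any feedback
    def toy_worker(task: str, feedback: Optional[str]) -> str:
        return "fixed" if feedback else "draft"

    def toy_verifier(task: str, output: str) -> Tuple[bool, str]:
        return (output == "fixed", "ok" if output == "fixed" else "please fix")

    print(run_with_verification("write a function", toy_worker, toy_verifier))
    # RunResult(output='fixed', verified=True, rounds=2, feedback_log=['please fix', 'ok'])
```

Returning the last draft with `verified=False`, rather than silently accepting it, makes a failed verification visible to the caller instead of letting the run end as if it had succeeded.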

In conclusion, the study provides a comprehensive analysis of failure modes in LLM-based MASs. By examining more than 150 traces, the research identifies 14 distinct failure modes, grouped into three categories: specification and system design, inter-agent misalignment, and task verification and termination. An automated LLM annotator is introduced to analyze MAS traces and shows high agreement with human annotators. The case studies reveal that simple fixes often fall short and that structural strategies are needed for consistent improvements. Despite growing interest in MASs, their performance remains limited compared to single-agent systems, underscoring the need for deeper research into agent coordination, verification, and communication strategies.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Understanding and Mitigating Failure Modes in LLM-Based Multi-Agent Systems appeared first on MarkTechPost.
