Language models (LMs) perceive text through tokenization, and how that text is split into tokens is a fundamental design choice. Current subword tokenizers segment text into vocabulary tokens that never cross whitespace, an artificial constraint that treats a space as a semantic boundary. This ignores the fact that meaning often spans more than one word: multi-word expressions like “a lot of” function as single semantic units, and English speakers mentally store thousands of such phrases. Cross-linguistically, the same concept may be expressed as one word or several, depending on the language. Notably, languages such as Chinese and Japanese do not separate words with whitespace, so their tokens can span multiple words or even sentences without apparent performance degradation.
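To make the whitespace constraint concrete, here is a minimal sketch of regex-based pretokenization, the step that splits text into chunks before any BPE merges are learned. The pattern is a simplified, GPT-2-style rule chosen for illustration (an assumption, not any specific tokenizer's exact regex). Because merges are only learned inside a chunk, “a lot of” can never become one token, whereas an unspaced Chinese sentence stays in a single chunk.

```python
import re

# Simplified GPT-2-style pretokenization pattern (an assumption for illustration;
# production tokenizers use longer, Unicode-aware rules). Each match is a chunk:
# an optional leading space plus a run of word characters or of punctuation,
# or a run of whitespace.
PRETOKENIZE = re.compile(r" ?\w+| ?[^\w\s]+|\s+(?!\S)|\s+")


def pretokenize(text: str) -> list[str]:
    """Split text into chunks; BPE merges are learned and applied only within a chunk."""
    return PRETOKENIZE.findall(text)


english = pretokenize("I ate a lot of food.")
print(english)  # ['I', ' ate', ' a', ' lot', ' of', ' food', '.']

# "a lot of" is spread over three chunks, so no within-chunk merge can produce it.
print(any("a lot of" in chunk for chunk in english))  # False

# Python's \w matches CJK characters, and Chinese has no spaces between words,
# so the whole sentence is one chunk and merges may span word boundaries.
print(pretokenize("我吃了很多饭"))  # ['我吃了很多饭']
```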

Previous research has explored several alternatives to traditional subword tokenization. Some studies process text at multiple levels of granularity or create multi-word tokens by identifying frequent n-grams. Others have explored multi-token prediction (MTP), in which language models predict several tokens in a single step, showing that models can process more than one subword at a time. However, these approaches require architectural modifications and fix the number of tokens predicted per step. Still other researchers have pursued tokenizer-free approaches that model text directly as byte sequences. This, however, significantly increases sequence lengths and computational requirements, which has led to complex architectural solutions.

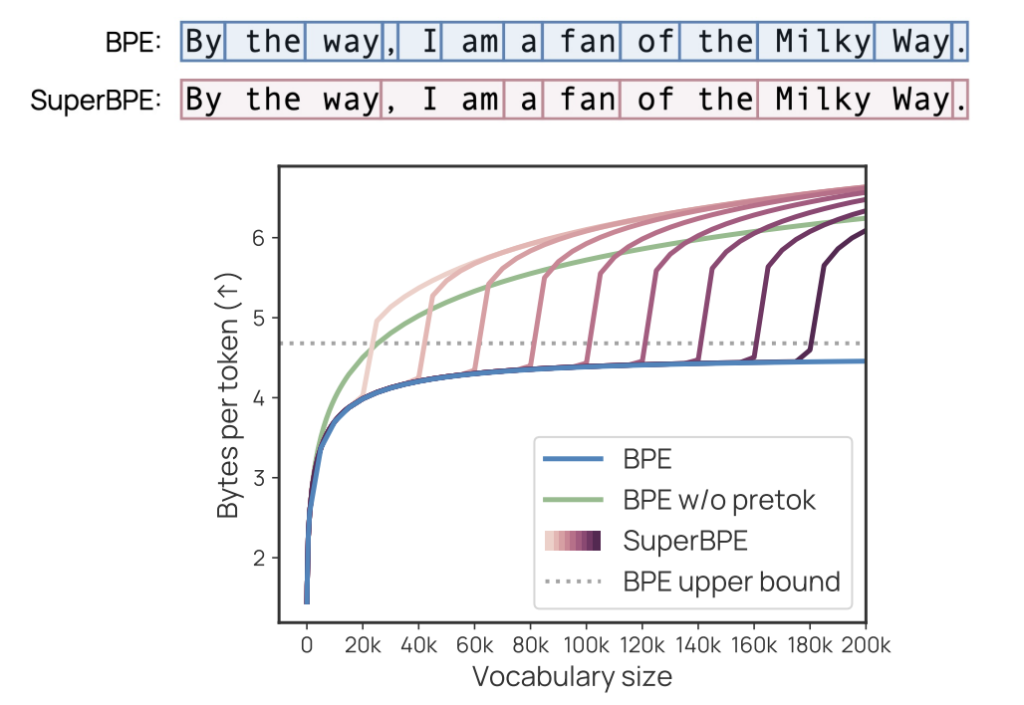

Researchers from the University of Washington, NVIDIA, and the Allen Institute for AI have proposed SuperBPE, a tokenization algorithm whose vocabulary contains both traditional subword tokens and new “superword” tokens that span multiple words. SuperBPE extends the popular byte-pair encoding (BPE) algorithm with a pretokenization curriculum: it first keeps whitespace boundaries in place to learn subword tokens, then removes that constraint so that superword tokens can form. As vocabulary size grows, standard BPE quickly reaches diminishing returns and spends additional vocabulary slots on increasingly rare subwords; SuperBPE instead keeps discovering common multi-word sequences to encode as single tokens, which improves encoding efficiency.
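The curriculum can be sketched as a toy BPE trainer with a switch. This is a minimal, character-level illustration, not the authors' implementation: real BPE tokenizers operate on bytes and use far more efficient data structures, and the actual SuperBPE code may apply additional rules in its second stage. Here `t` and `T` count merges rather than vocabulary entries (a simplification, since vocabulary size is the base alphabet plus the number of merges), and `train_superbpe` and its helpers are hypothetical names.

```python
import re
from collections import Counter

# Simplified whitespace pretokenizer used in stage 1 (an assumption for illustration).
PRETOKENIZE = re.compile(r" ?\w+| ?[^\w\s]+|\s+(?!\S)|\s+")

Pair = tuple[str, str]
Seq = tuple[str, ...]


def apply_merge(seq: Seq, pair: Pair) -> Seq:
    """Replace every left-to-right, non-overlapping occurrence of `pair` with one token."""
    out, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            out.append(seq[i] + seq[i + 1])
            i += 2
        else:
            out.append(seq[i])
            i += 1
    return tuple(out)


def encode(text: str, merges: list[Pair]) -> Seq:
    """BPE encoding: start from characters and apply merges in learned order."""
    seq = tuple(text)
    for pair in merges:
        seq = apply_merge(seq, pair)
    return seq


def learn_merges(seqs: Counter, n: int, merges: list[Pair]) -> None:
    """Greedily learn up to `n` merges over weighted sequences, appending to `merges`."""
    for _ in range(n):
        pairs = Counter()
        for seq, freq in seqs.items():
            for a, b in zip(seq, seq[1:]):
                pairs[a, b] += freq
        if not pairs:
            break  # every sequence is a single token; nothing left to merge
        best = max(pairs, key=pairs.get)  # most frequent pair; ties go to first seen
        merges.append(best)
        merged = Counter()
        for seq, freq in seqs.items():
            merged[apply_merge(seq, best)] += freq
        seqs = merged


def train_superbpe(docs: list[str], t: int, T: int) -> list[Pair]:
    """Hypothetical sketch: t merges with whitespace pretokenization, then T - t without."""
    if not 0 <= t <= T:
        raise ValueError("need 0 <= t <= T")
    merges: list[Pair] = []
    # Stage 1: learn merges inside whitespace-delimited chunks (subwords and words).
    chunks = Counter(tuple(c) for doc in docs for c in PRETOKENIZE.findall(doc))
    learn_merges(chunks, t, merges)
    # Stage 2: drop pretokenization. Each document becomes one long sequence of its
    # stage-1 tokens, so new merges can join tokens across spaces.
    whole_docs = Counter(
        tuple(tok for c in PRETOKENIZE.findall(doc) for tok in encode(c, merges))
        for doc in docs
    )
    learn_merges(whole_docs, T - t, merges)
    return merges


docs = ["a lot of fun", "a lot of work", "a lot of time"]
print(train_superbpe(docs, t=5, T=7)[-2:])  # [('a', ' lot'), ('a lot', ' of')]
```

On this toy corpus the first five merges assemble the words “ lot” and “ of”; once pretokenization is dropped, the next two merges form the superword “a lot of”. Setting `t=T` makes stage 2 learn nothing (ordinary BPE), and `t=0` skips stage 1 entirely (whitespace-free BPE).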

SuperBPE is trained in two stages that modify the pretokenization step of standard BPE, as described above. Intuitively, the first stage builds semantic units and the second combines them into common multi-word sequences for greater efficiency. The curriculum is controlled by two vocabulary sizes: the transition point t, at which whitespace pretokenization is switched off, and the target vocabulary size T. Setting t = T produces standard BPE, while t = 0 produces naive whitespace-free BPE. Training SuperBPE requires more compute than standard BPE because, without whitespace pretokenization, the training data consists of extremely long “words” with minimal deduplication. However, this extra cost amounts to a few hours on 100 CPUs and is paid only once, which is negligible compared with the resources required for language model pretraining.
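The training-cost argument comes down to deduplication, which the following sketch illustrates on a synthetic corpus (the corpus, the regex, and the helper names are assumptions for illustration). With whitespace pretokenization, a BPE trainer stores each distinct chunk once together with a count; without it, each document is essentially its own unique “word”, so many more characters must be rescanned on every merge step.

```python
import re
from collections import Counter

# Simplified whitespace pretokenizer (an assumption for illustration).
PRETOKENIZE = re.compile(r" ?\w+| ?[^\w\s]+|\s+(?!\S)|\s+")


def training_units(docs: list[str], pretokenize: bool) -> Counter:
    """The weighted units a BPE trainer iterates over on each merge step."""
    if pretokenize:
        return Counter(chunk for doc in docs for chunk in PRETOKENIZE.findall(doc))
    return Counter(docs)  # no pretokenization: each document is one long unit


def dedup_stats(units: Counter) -> tuple[int, int, int]:
    """(total occurrences, distinct units, characters stored across distinct units)."""
    return sum(units.values()), len(units), sum(len(u) for u in units)


# Synthetic corpus: 1,000 distinct documents that share most of their words.
docs = [f"the cat sat on mat number {i}" for i in range(1000)]

print(dedup_stats(training_units(docs, pretokenize=True)))   # (7000, 1006, 3915)
print(dedup_stats(training_units(docs, pretokenize=False)))  # (1000, 1000, 28890)
```

With pretokenization, 7,000 chunk occurrences collapse to 1,006 distinct chunks; without it nothing collapses, and the trainer holds roughly 7x more characters, grouped into long sequences. On real corpora the gap is typically much larger, because the number of distinct words grows far more slowly than the number of documents.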

SuperBPE performs strongly across 30 benchmarks spanning knowledge, reasoning, coding, reading comprehension, and more. All SuperBPE models outperform the BPE baseline; the strongest 8B model achieves an average improvement of 4.0% and beats the baseline on 25 of the 30 individual tasks. Multiple-choice tasks show substantial gains, with a +9.7% improvement. The only statistically significant underperformance is on LAMBADA, where accuracy drops from 75.8% to 70.6%. Moreover, every reasonable choice of transition point yields stronger results than the baseline. The most encoding-efficient transition point delivers a +3.1% performance improvement while reducing inference compute by 35%.
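The link between encoding efficiency and inference cost can be sketched with simple arithmetic, under the first-order assumption that a given model spends roughly the same compute per token, so the cost of processing a text scales with how many tokens it is split into. The segmentations below are hypothetical examples, not the paper's measurements, and the article's 35% figure comes from the paper's own accounting, which this sketch does not attempt to reproduce.

```python
def bytes_per_token(text: str, num_tokens: int) -> float:
    """Encoding efficiency: average number of UTF-8 bytes covered by one token."""
    return len(text.encode("utf-8")) / num_tokens


def token_reduction(baseline_bpt: float, new_bpt: float) -> float:
    """Fraction of tokens saved when encoding the same text at a higher bytes/token.

    Encoding B bytes takes B / bpt tokens, so new/baseline = baseline_bpt / new_bpt.
    """
    return 1.0 - baseline_bpt / new_bpt


text = "a lot of fun"
subword_tokens = ["a", " lot", " of", " fun"]  # hypothetical subword-only segmentation
superword_tokens = ["a lot of", " fun"]        # hypothetical segmentation with a superword
assert "".join(subword_tokens) == "".join(superword_tokens) == text

base = bytes_per_token(text, len(subword_tokens))   # 12 bytes / 4 tokens = 3.0
new = bytes_per_token(text, len(superword_tokens))  # 12 bytes / 2 tokens = 6.0
print(f"{token_reduction(base, new):.0%} fewer tokens")  # 50% fewer tokens
```

To first order, half as many tokens means about half as many forward passes for the same text; attention cost also depends on sequence length, so the real saving can differ.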

In conclusion, researchers introduced SuperBPE, a more effective tokenization approach developed by enhancing the standard BPE algorithm to incorporate superword tokens. Despite tokenization serving as the fundamental interface between language models and text, tokenization algorithms have remained relatively static. SuperBPE challenges this status quo by recognizing that tokens can extend beyond traditional subword boundaries to include multi-word expressions. SuperBPE tokenizers enable language models to achieve superior performance across numerous downstream tasks while reducing inference computational costs. These advantages require no modifications to the underlying model architecture, making SuperBPE a seamless replacement for traditional BPE in modern language model development pipelines.


The post SuperBPE: Advancing Language Models with Cross-Word Tokenization appeared first on MarkTechPost.
