The widespread adoption of Large Language Models (LLMs) has significantly changed how content is created and consumed. However, it has also raised critical concerns about accuracy and factual reliability. LLM outputs often contain claims that have not been verified, which can spread misinformation. Accurately extracting claims from these outputs is therefore an essential first step for fact-checking, but it is difficult because of inherent ambiguity and context dependence.

Microsoft AI Research has recently developed Claimify, an LLM-based claim-extraction method designed to make claim extraction from LLM outputs more accurate, comprehensive, and context-aware. Claimify addresses a key limitation of existing methods by handling ambiguity explicitly: it identifies sentences with multiple plausible interpretations and extracts claims only when the intended meaning can be clearly determined from the surrounding context. This cautious approach yields more accurate and reliable claims, which in turn benefits downstream fact-checking.

From a technical standpoint, Claimify uses a three-stage pipeline: Selection, Disambiguation, and Decomposition. In the Selection stage, an LLM identifies sentences that contain verifiable information and filters out those with no factual content. In the Disambiguation stage, Claimify detects and resolves ambiguities, such as unclear references or multiple plausible interpretations; a sentence proceeds only if its ambiguity can be confidently resolved. In the final Decomposition stage, each clarified sentence is converted into precise, context-independent claims. Together, these stages improve both the accuracy and the completeness of the extracted claims.

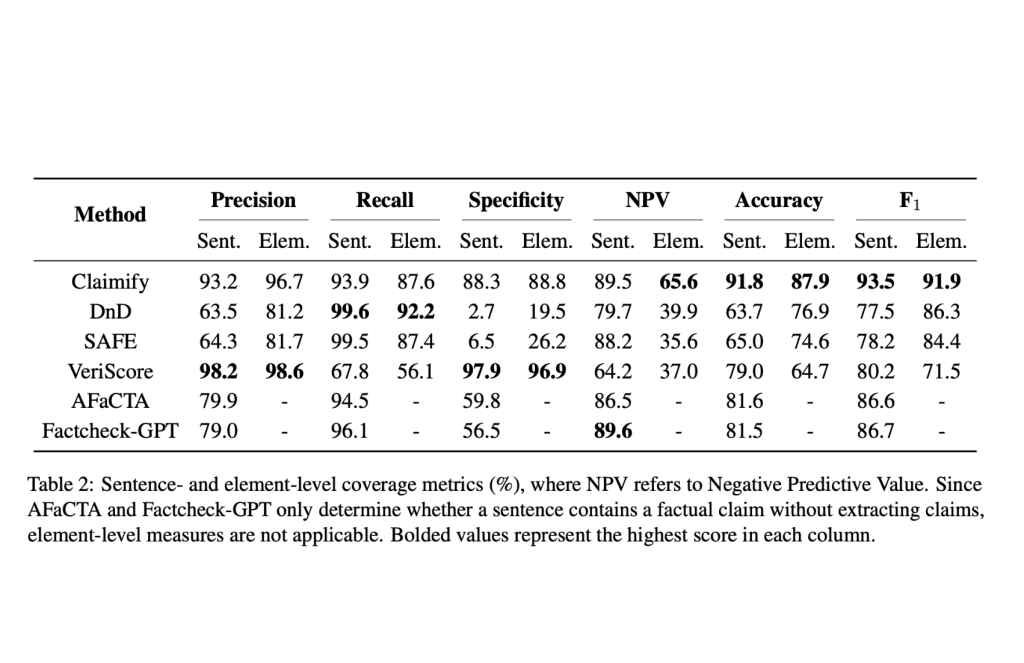
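
The control flow of the three stages can be sketched in Python. This is a minimal sketch under stated assumptions, not Microsoft's implementation: where Claimify prompts an LLM at each stage, the sketch takes plain callables, and the names `extract_claims` and `ExtractionResult`, the `window` of neighboring sentences used as context, and the `toy_*` rules are all hypothetical. It illustrates the gating described above: a sentence is dropped at Selection if it has no verifiable content, dropped at Disambiguation if its meaning cannot be pinned down, and only otherwise decomposed into claims.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical stage signatures. In Claimify each stage is an LLM prompt; here
# each stage is any callable taking (sentence, context) so the flow runs offline.
SelectFn = Callable[[str, List[str]], Optional[str]]
DisambiguateFn = Callable[[str, List[str]], Optional[str]]
DecomposeFn = Callable[[str, List[str]], List[str]]


@dataclass
class ExtractionResult:
    claims: Dict[int, List[str]] = field(default_factory=dict)  # index -> claims
    no_verifiable_content: List[int] = field(default_factory=list)
    unresolved_ambiguity: List[int] = field(default_factory=list)


def extract_claims(sentences: List[str], select: SelectFn,
                   disambiguate: DisambiguateFn, decompose: DecomposeFn,
                   window: int = 2) -> ExtractionResult:
    result = ExtractionResult()
    for i, sentence in enumerate(sentences):
        # Assumption: up to `window` neighboring sentences on each side act as context.
        context = sentences[max(0, i - window):i] + sentences[i + 1:i + 1 + window]

        # Stage 1, Selection: None means no verifiable content.
        selected = select(sentence, context)
        if selected is None:
            result.no_verifiable_content.append(i)
            continue

        # Stage 2, Disambiguation: None means the ambiguity could not be
        # resolved from context, so the sentence is skipped, not guessed at.
        clarified = disambiguate(selected, context)
        if clarified is None:
            result.unresolved_ambiguity.append(i)
            continue

        # Stage 3, Decomposition: split into standalone claims.
        result.claims[i] = decompose(clarified, context)
    return result


# Toy stand-ins for the LLM calls (hypothetical rules, for illustration only).
def toy_select(sentence: str, context: List[str]) -> Optional[str]:
    # Toy rule: questions carry no verifiable content.
    return None if sentence.endswith("?") else sentence


def toy_disambiguate(sentence: str, context: List[str]) -> Optional[str]:
    # Toy rule: treat an opening "They" as a reference that cannot be resolved.
    return None if sentence.startswith("They ") else sentence


def toy_decompose(sentence: str, context: List[str]) -> List[str]:
    # Toy rule: each semicolon-separated part becomes one claim.
    return [part.strip().rstrip(".") + "." for part in sentence.split(";")]


if __name__ == "__main__":
    text = [
        "Claimify has three stages; Claimify was evaluated on BingCheck.",
        "Is that impressive?",
        "They expect it to be widely adopted.",
    ]
    r = extract_claims(text, toy_select, toy_disambiguate, toy_decompose)
    print(r.claims)  # {0: ['Claimify has three stages.', 'Claimify was evaluated on BingCheck.']}
    print(r.no_verifiable_content, r.unresolved_ambiguity)  # [1] [2]
```

Recording why each sentence was dropped, rather than discarding it silently, makes it easy to audit how much of a response was skipped for lack of verifiable content versus unresolved ambiguity.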

In evaluations on the BingCheck dataset, which covers a broad range of topics and complex LLM-generated responses, Claimify showed notable improvements over previous methods. It achieved an entailment rate of 99%, meaning that almost all extracted claims were supported by the original content. For coverage, Claimify captured 87.6% of the verifiable content while maintaining a precision of 96.7%, outperforming comparable approaches. Its systematic approach to decontextualization also retained essential contextual details, producing better-grounded claims than prior methods.
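
To make these percentages concrete, the sketch below computes an entailment rate, a coverage figure, and a precision figure from per-sentence judgments. The paper's exact definitions may differ; this sketch assumes that coverage is the share of sentences annotated as containing verifiable content from which claims were extracted (a recall-style measure), that precision is the share of sentences the extractor selected that truly contain verifiable content, and that entailment rate is the share of extracted claims judged to follow from their source sentence. `SentenceJudgment` and the toy data are hypothetical and are not BingCheck results.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SentenceJudgment:
    has_verifiable_content: bool  # gold annotation for the sentence
    selected: bool                # did the extractor produce claims for it?
    claims_entailed: List[bool]   # per extracted claim: entailed by the source?


def entailment_rate(judgments: List[SentenceJudgment]) -> float:
    # Share of all extracted claims that are entailed by their source sentence.
    flags = [e for j in judgments for e in j.claims_entailed]
    return sum(flags) / len(flags) if flags else 0.0


def coverage(judgments: List[SentenceJudgment]) -> float:
    # Recall-style: of sentences with verifiable content, how many were selected.
    gold = [j for j in judgments if j.has_verifiable_content]
    return sum(j.selected for j in gold) / len(gold) if gold else 0.0


def precision(judgments: List[SentenceJudgment]) -> float:
    # Of sentences the extractor selected, how many truly had verifiable content.
    picked = [j for j in judgments if j.selected]
    return sum(j.has_verifiable_content for j in picked) / len(picked) if picked else 0.0


# Hypothetical toy judgments for five sentences.
judgments = [
    SentenceJudgment(True, True, [True, True]),
    SentenceJudgment(True, True, [True, False]),
    SentenceJudgment(True, False, []),
    SentenceJudgment(True, False, []),
    SentenceJudgment(False, True, [True]),
]

if __name__ == "__main__":
    print(f"{entailment_rate(judgments):.2f} {coverage(judgments):.2f} "
          f"{precision(judgments):.2f}")  # 0.80 0.50 0.67
```

Coverage and precision pull in opposite directions: selecting more sentences tends to raise coverage but risks admitting sentences without verifiable content, which lowers precision. Reporting both, as the evaluation above does, guards against optimizing only one.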

Overall, Claimify represents a meaningful advance in automatically extracting reliable claims from LLM-generated content. By methodically addressing ambiguity and context dependence through a structured extraction pipeline, Claimify sets a new standard for accuracy and reliability in this task. As reliance on LLM-produced content grows, tools like Claimify are likely to play an increasingly important role in keeping that content trustworthy and factually sound.

Check out the Paper and Technical details. All credit for this research goes to the researchers of this project.

The post Microsoft AI Introduces Claimify: A Novel LLM-based Claim-Extraction Method that Outperforms Prior Solutions to Produce More Accurate, Comprehensive, and Substantiated Claims from LLM Outputs appeared first on MarkTechPost.
