In this tutorial, we will build an interactive text-to-image generator that runs in Google Colab and can be shared through a public link, using Hugging Face's Diffusers library and Gradio. You'll learn how to turn simple text prompts into detailed images with the Stable Diffusion model and GPU acceleration. We'll walk through setting up the environment, installing dependencies, caching the model, and building an intuitive interface that lets you adjust generation parameters in real time.

```python
# Run in a Colab cell; the leading ! executes a shell command
!pip install diffusers transformers accelerate gradio
```

First, we install four Python packages with pip. Diffusers provides tools for working with diffusion models, Transformers supplies pretrained models (Stable Diffusion's CLIP text encoder and tokenizer are loaded through it), Accelerate helps load and run models efficiently on different hardware setups, and Gradio lets us build interactive machine learning interfaces. Together, these libraries form the backbone of our text-to-image demo in Google Colab. Before running the notebook, set the Colab runtime to use a GPU (Runtime > Change runtime type).
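After the install finishes, it can help to confirm which versions actually ended up in the runtime, since Diffusers and Gradio change their APIs between releases. The following is a minimal sketch using the standard library's importlib.metadata; the helper name installed_versions is hypothetical and not part of any of the libraries above.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Return {package: version string, or None if the package is not installed}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


# Report the four packages installed in the cell above
for name, ver in installed_versions(["diffusers", "transformers", "accelerate", "gradio"]).items():
    print(f"{name}: {ver or 'not installed'}")
```

Recording these versions alongside the notebook makes it easier to reproduce the demo later.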

```python
import torch
from diffusers import StableDiffusionPipeline
import gradio as gr

# Global variable to cache the pipeline so the model is loaded only once
pipe = None
```

Next, we import the necessary libraries: torch for tensor computations and GPU acceleration, StableDiffusionPipeline from the Diffusers library for loading and running the Stable Diffusion model, and gradio for building interactive demos. We also initialize a global variable, pipe, to None; it will later cache the loaded pipeline so the model is not reloaded on every inference call.

```python
print("CUDA available:", torch.cuda.is_available())
```

This line reports whether a CUDA-enabled GPU is available. PyTorch's torch.cuda.is_available() function returns True if a GPU is detected and ready for computation and False otherwise, so you can confirm that the rest of the code will be able to use GPU acceleration.
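If the check prints False (for example, because the Colab runtime was not switched to a GPU), the `.to("cuda")` calls that follow will fail. A minimal sketch, assuming you would rather fall back to the CPU than stop, is to pick the device and dtype from the result of the check. The helper pick_device_and_dtype is hypothetical; it chooses half precision only on the GPU because float16 inference on the CPU is often unsupported or slow.

```python
def pick_device_and_dtype(cuda_available):
    """Return (device, dtype_name): float16 on a CUDA GPU, float32 on the CPU fallback."""
    if cuda_available:
        return "cuda", "float16"
    return "cpu", "float32"


print(pick_device_and_dtype(True))   # ('cuda', 'float16')
print(pick_device_and_dtype(False))  # ('cpu', 'float32')

# Example wiring with PyTorch and Diffusers (commented out so the sketch runs anywhere):
# import torch
# from diffusers import StableDiffusionPipeline
# device, dtype_name = pick_device_and_dtype(torch.cuda.is_available())
# pipe = StableDiffusionPipeline.from_pretrained(
#     "runwayml/stable-diffusion-v1-5", torch_dtype=getattr(torch, dtype_name)
# ).to(device)
```

Expect generation to be much slower on the CPU path; the GPU runtime remains the intended setup for this tutorial.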

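```python
# Download (or reuse from the local Hugging Face cache) the Stable Diffusion v1.5 weights in half precision
pipe = StableDiffusionPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5",
    torch_dtype=torch.float16
)

# Move every component of the pipeline to the GPU
pipe = pipe.to("cuda")
```

This snippet loads the Stable Diffusion pipeline from the pretrained "runwayml/stable-diffusion-v1-5" checkpoint. Setting torch_dtype=torch.float16 stores the weights as 16-bit floating-point numbers, which roughly halves memory use and typically speeds up GPU inference compared with 32-bit floats. The pipeline is then moved to the GPU ("cuda") so that image generation runs on the accelerator. Because the result is stored in the global pipe variable, the generation function defined next can reuse it without loading the model again.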
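To see what torch_dtype=torch.float16 buys, a back-of-the-envelope estimate helps: weight memory is roughly the parameter count times the bytes per parameter (4 for float32, 2 for float16). The parameter counts below are approximate, rounded figures for the Stable Diffusion v1.x UNet, CLIP text encoder, and VAE, and should be treated as assumptions rather than exact values. The estimate covers weights only, not the activations allocated during generation.

```python
# Approximate, rounded parameter counts for Stable Diffusion v1.x (assumed values)
APPROX_PARAMS = {
    "unet": 860_000_000,
    "text_encoder": 123_000_000,
    "vae": 84_000_000,
}

# Storage size of one parameter for each dtype, in bytes
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_memory_gib(num_params, dtype):
    """Estimate weight memory in GiB (1 GiB = 1024**3 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


total_params = sum(APPROX_PARAMS.values())
for dtype in ("float32", "float16"):
    print(f"{dtype}: ~{weight_memory_gib(total_params, dtype):.1f} GiB of weights")
```

With these assumed counts the script prints about 4.0 GiB for float32 and 2.0 GiB for float16, so half precision leaves noticeably more GPU memory free for the activations used during inference.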

```python
def generate_sd_image(prompt, num_inference_steps=50, guidance_scale=7.5):
    """
    Generate an image from a text prompt using Stable Diffusion.

    Args:
        prompt (str): Text prompt to guide image generation.
        num_inference_steps (int): Number of denoising steps (more steps can improve quality).
        guidance_scale (float): Controls how strongly the prompt is followed.

    Returns:
        PIL.Image: The generated image.
    """
    global pipe

    # Load and cache the pipeline only if it has not been loaded yet
    if pipe is None:
        print("Loading Stable Diffusion model... (this may take a while)")
        pipe = StableDiffusionPipeline.from_pretrained(
            "runwayml/stable-diffusion-v1-5",
            torch_dtype=torch.float16,
            variant="fp16"  # download the half-precision weight files published with the checkpoint
        )
        pipe = pipe.to("cuda")

    # Use autocast for faster mixed-precision inference on the GPU
    with torch.autocast("cuda"):
        image = pipe(
            prompt,
            num_inference_steps=num_inference_steps,
            guidance_scale=guidance_scale
        ).images[0]

    return image
```

The generate_sd_image function takes a text prompt, along with the number of inference steps and the guidance scale, and generates an image with Stable Diffusion. It first checks whether the pipeline is already cached in the global pipe variable; if it is not (for example, because the earlier loading cell was skipped), it loads the model in half precision (FP16), moves it to the GPU, and caches it. It then runs inference inside torch.autocast for efficient mixed-precision computation and returns the first generated image.
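The guidance_scale parameter controls classifier-free guidance. At each denoising step, the Diffusers Stable Diffusion pipeline predicts the noise twice, once conditioned on the prompt and once unconditioned (an empty prompt), and combines them as `uncond + guidance_scale * (cond - uncond)`. The sketch below reproduces only that combination, on plain Python lists standing in for the noise tensors; cfg_combine is a hypothetical name, not a Diffusers function.

```python
def cfg_combine(noise_uncond, noise_cond, guidance_scale):
    """Classifier-free guidance, elementwise: uncond + s * (cond - uncond).

    Plain lists stand in for the noise-prediction tensors.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


# guidance_scale = 1 returns the conditional prediction unchanged;
# larger values push the result further in the prompt-conditioned direction.
print(cfg_combine([0.0, 1.0], [1.0, 1.0], 1.0))  # [1.0, 1.0]
print(cfg_combine([0.0, 1.0], [1.0, 1.0], 7.5))  # [7.5, 1.0]
```

In the Diffusers Stable Diffusion pipeline, guidance is applied only when guidance_scale is greater than 1, so the left end of the Guidance Scale slider (1) effectively turns guidance off and skips the unconditional pass.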

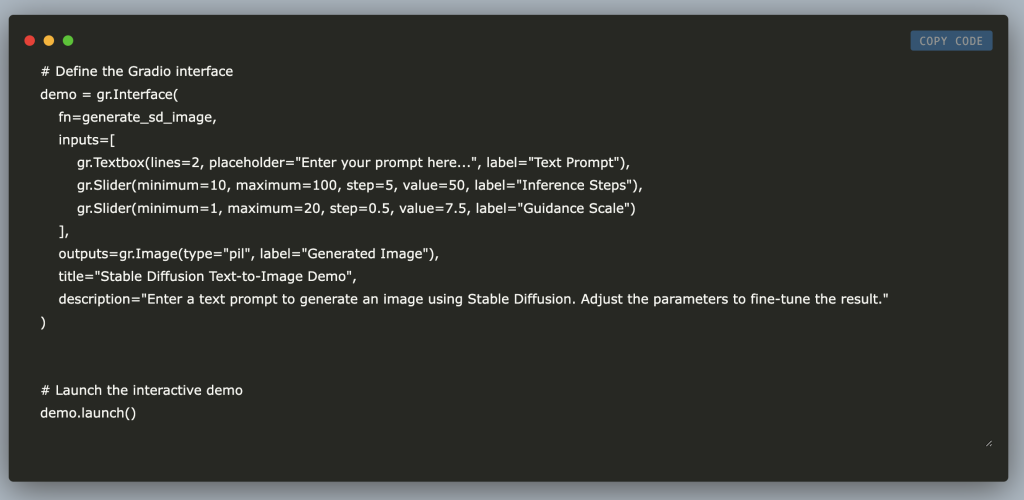

```python
# Define the Gradio interface
demo = gr.Interface(
    fn=generate_sd_image,
    inputs=[
        gr.Textbox(lines=2, placeholder="Enter your prompt here...", label="Text Prompt"),
        gr.Slider(minimum=10, maximum=100, step=5, value=50, label="Inference Steps"),
        gr.Slider(minimum=1, maximum=20, step=0.5, value=7.5, label="Guidance Scale")
    ],
    outputs=gr.Image(type="pil", label="Generated Image"),
    title="Stable Diffusion Text-to-Image Demo",
    description="Enter a text prompt to generate an image using Stable Diffusion. Adjust the parameters to fine-tune the result."
)

# Launch the interactive demo; in Colab, Gradio also creates a temporary public share link
demo.launch()
```

Here, we define a Gradio interface that connects the generate_sd_image function to an interactive web UI. It provides three input widgets: a textbox for the text prompt and two sliders for the number of inference steps and the guidance scale. Gradio passes their values to generate_sd_image positionally, in the order the widgets are listed. The output widget displays the generated image. The interface also includes a title and a short description to guide users, and demo.launch() starts the interactive app.
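The sliders already restrict values to the ranges defined above, but if generate_sd_image is also called from other code, a small validation step keeps the arguments in the form the pipeline expects: an integer step count and a bounded float guidance scale. The helper normalize_params is a hypothetical sketch whose default ranges mirror the two sliders; clamping out-of-range values rather than raising an error is a design choice you may want to change.

```python
def normalize_params(num_inference_steps, guidance_scale,
                     steps_range=(10, 100), guidance_range=(1.0, 20.0)):
    """Coerce inputs to (int steps, float guidance), clamped to the slider ranges."""
    steps = int(round(num_inference_steps))
    steps = max(steps_range[0], min(steps_range[1], steps))
    guidance = float(guidance_scale)
    guidance = max(guidance_range[0], min(guidance_range[1], guidance))
    return steps, guidance


print(normalize_params(50.0, 7.5))  # (50, 7.5)
print(normalize_params(3, 25))      # (10, 20.0)

# Possible wiring (commented out): wrap the generator before passing it to gr.Interface
# def generate_checked(prompt, num_inference_steps, guidance_scale):
#     steps, guidance = normalize_params(num_inference_steps, guidance_scale)
#     return generate_sd_image(prompt, steps, guidance)
```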

You can also access the web app through a public URL, such as https://7dc6833297cf83b160.gradio.live/ (active for 72 hours). Running the notebook generates a similar link for your own session.

In conclusion, this tutorial demonstrated how to integrate Hugging Face's Diffusers with Gradio to create an interactive text-to-image application that runs in Google Colab and is accessible as a web app. From setting up a GPU-accelerated environment and caching the Stable Diffusion model to building an interface for dynamic user interaction, you now have a solid foundation for experimenting with and further developing generative models.

Here is the Colab Notebook for the above project.


The post Steps to Build an Interactive Text-to-Image Generation Application using Gradio and Hugging Face’s Diffusers appeared first on MarkTechPost.
