Efficiently handling long contexts has been a longstanding challenge in natural language processing. As large language models expand their capacity to read, comprehend, and generate text, the attention mechanism—central to how they process input—can become a bottleneck. In a typical Transformer architecture, this mechanism compares every token to every other token, resulting in computational costs that scale quadratically with sequence length. This problem grows more pressing as we apply language models to tasks that require them to consult vast amounts of textual information: long-form documents, multi-chapter books, legal briefs, or large code repositories. When a model must navigate tens or even hundreds of thousands of tokens, the cost of naively computing full attention becomes prohibitive.

Previous efforts to address this issue often rely on imposing fixed structures or approximations that may compromise quality in certain scenarios. For example, sliding-window mechanisms confine tokens to a local neighborhood, which can obscure important global relationships. Meanwhile, approaches that radically alter the fundamental architecture—such as replacing softmax attention with entirely new constructs—can demand extensive retraining from scratch, making it difficult to benefit from existing pre-trained models. Researchers have sought a method that maintains the key benefits of the original Transformer design—its adaptability and ability to capture wide-ranging dependencies—without incurring the immense computational overhead associated with traditional full attention on extremely long sequences.

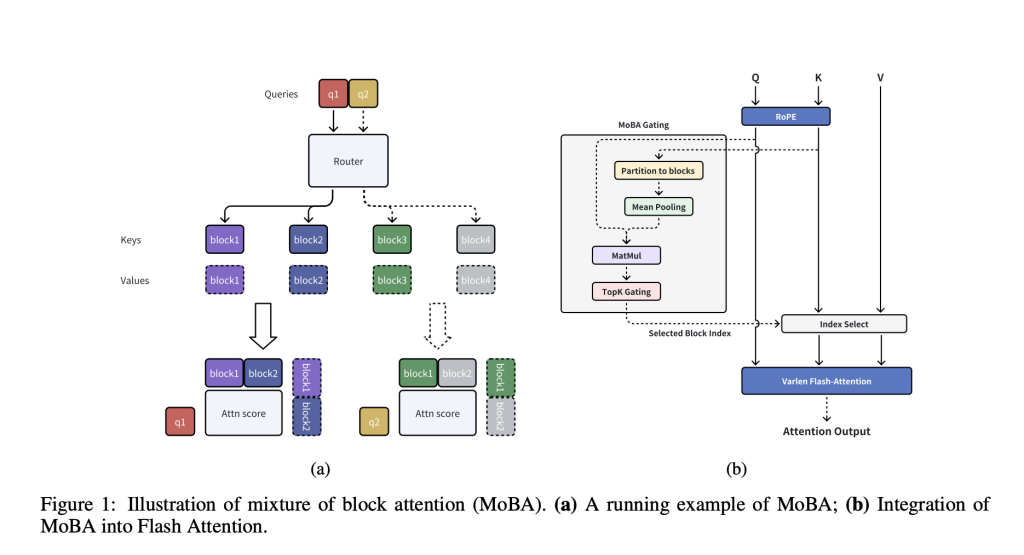

Researchers from Moonshot AI, Tsinghua University, and Zhejiang University introduce Mixture of Block Attention (MoBA), an innovative approach that applies the principles of Mixture of Experts (MoE) to the attention mechanism. By partitioning the input into manageable “blocks” and using a trainable gating system to decide which blocks are relevant for each query token, MoBA addresses the inefficiency that arises when a model has to compare every token to every other token. Unlike approaches that rigidly enforce local or windowed attention, MoBA allows the model to learn where to focus. This design is guided by the principle of “less structure,” meaning the architecture does not predefine exactly which tokens should interact. Instead, it delegates those decisions to a learned gating network.

A key feature of MoBA is its capacity to function seamlessly with existing Transformer-based models. Rather than discarding the standard self-attention interface, MoBA operates as a form of “plug-in” or substitute. It maintains the same number of parameters, so it does not bloat the architecture, and it preserves causal masking to ensure correctness in autoregressive generation. In practical deployments, MoBA can be toggled between sparse and full attention, enabling the model to benefit from speedups when tackling extremely long inputs while preserving the fallback to standard full attention in layers or phases of training where it might be desirable.

Technical Details and Benefits

MoBA divides the context into blocks, each spanning a consecutive range of tokens. For each query token, the gating mechanism computes an “affinity” score against every block, typically by comparing the query with a pooled representation of the block’s keys, and then selects the k highest-scoring blocks. Only tokens in the selected blocks contribute to the final attention distribution. The block that contains the query itself is always included, so local context remains accessible. A causal mask is also enforced so that tokens never attend to future positions, preserving the left-to-right autoregressive property.
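The selection procedure described above can be sketched for a single query in plain Python. This is a minimal illustration, not the authors' implementation. It assumes mean pooling of block keys and a dot-product affinity, and it assumes the query's own block counts toward the selection budget. The function names and the `block_size` and `top_k` parameters are hypothetical.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def mean_pool(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]

def select_blocks(query, keys, query_pos, block_size, top_k):
    """Return the sorted block indices the query at `query_pos` attends to."""
    current = query_pos // block_size
    # Causality: only blocks strictly before the query's block are candidates.
    scored = []
    for b in range(current):
        block_keys = keys[b * block_size:(b + 1) * block_size]
        scored.append((dot(query, mean_pool(block_keys)), b))
    # Highest affinity first; ties go to the earlier block.
    scored.sort(key=lambda s: (-s[0], s[1]))
    # Assumption: the query's own block is always kept and uses one slot.
    chosen = {current} | {b for _, b in scored[:max(top_k - 1, 0)]}
    return sorted(chosen)

def moba_attention(query, keys, values, query_pos, block_size, top_k):
    """Softmax attention restricted to the selected blocks.

    Returns (output_vector, attended_positions).
    """
    blocks = select_blocks(query, keys, query_pos, block_size, top_k)
    positions = [
        p
        for b in blocks
        for p in range(b * block_size, min((b + 1) * block_size, len(keys)))
        if p <= query_pos  # causal mask inside the query's own block
    ]
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, keys[p]) * scale for p in positions]
    peak = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - peak) for l in logits]
    total = sum(weights)
    dim = len(values[0])
    output = [
        sum(w * values[p][d] for w, p in zip(weights, positions)) / total
        for d in range(dim)
    ]
    return output, positions
```

When `top_k` is at least the number of available blocks, every earlier block is selected and the sketch reduces to ordinary causal attention, which mirrors the article's point that the same interface covers both sparse and full attention.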

Because of this procedure, MoBA’s attention matrix is significantly sparser than in the original Transformer. Yet, it remains flexible enough to allow queries to attend to faraway information when needed. For instance, if a question posed near the end of a text can only be answered by referencing details near the beginning, the gating mechanism can learn to assign a high score to the relevant earlier block. Technically, this block-based method reduces the number of token comparisons to sub-quadratic scales, bringing efficiency gains that become especially evident as context lengths climb into the hundreds of thousands or even millions of tokens.
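To make the reduction in token comparisons concrete, the following sketch counts query-key pairs for full causal attention and for block-sparse selection. It is a back-of-the-envelope count under stated assumptions, not a measurement from the technical report: it assumes each query attends to the causally visible part of its own block plus up to `top_k - 1` earlier blocks, and that gating computes one score per earlier block. The function names and the example values of `block_size` and `top_k` are illustrative.

```python
def full_causal_pairs(n_tokens):
    """Query-key pairs under full causal attention: 1 + 2 + ... + n."""
    return n_tokens * (n_tokens + 1) // 2

def moba_pairs(n_tokens, block_size, top_k):
    """Return (attention_pairs, gating_scores) for block-sparse selection.

    Assumes the query's own block always uses one of the `top_k` slots.
    """
    attention_pairs = 0
    gating_scores = 0
    for t in range(n_tokens):
        current = t // block_size
        # Tokens visible inside the query's own block (causal).
        in_current = t - current * block_size + 1
        # Earlier blocks actually selected; early queries have fewer to pick from.
        past_selected = min(max(top_k - 1, 0), current)
        attention_pairs += in_current + past_selected * block_size
        # One affinity score per earlier candidate block.
        gating_scores += current
    return attention_pairs, gating_scores

if __name__ == "__main__":
    n, block_size, top_k = 32768, 512, 3  # illustrative values only
    sparse, gating = moba_pairs(n, block_size, top_k)
    print("full causal pairs:", full_causal_pairs(n))
    print("sparse attention pairs:", sparse)
    print("gating scores:", gating)
```

These counts only describe arithmetic work. Actual speedups depend on kernels and memory access patterns, so they should not be read as a prediction of wall-clock time.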

Another appealing aspect of MoBA is its compatibility with modern accelerators and specialized kernels. In particular, the authors combine MoBA with FlashAttention, a high-performance library for fast, memory-efficient exact attention. By carefully grouping the query–key–value operations according to which blocks have been selected, they can streamline computations. The authors report that at one million tokens, MoBA can yield roughly a sixfold speedup compared to conventional full attention, underscoring its practicality in real-world use cases.

Results and Insights

According to the technical report, MoBA demonstrates performance on par with full attention across a variety of tasks, while offering significant computational savings when dealing with long sequences. Tests on language modeling data show that MoBA’s perplexities remain close to those of a full-attention Transformer at sequence lengths of 8,192 or 32,768 tokens. Critically, as the researchers gradually extend context lengths to 128,000 and beyond, MoBA retains robust long-context comprehension. The authors present “trailing token” evaluations, which concentrate on the model’s ability to predict tokens near the end of a long prompt—an area that typically highlights weaknesses of methods relying on heavy approximations. MoBA effectively manages these trailing positions without any drastic loss in predictive quality.

They also explore the sensitivity of the approach to block size and gating strategies. In some experiments, refining the granularity (i.e., using smaller blocks but selecting more of them) helps the model approximate full attention more closely. Even in settings where MoBA leaves out large portions of the context, adaptive gating can identify the blocks that truly matter for the query. Meanwhile, a “hybrid” regime demonstrates a balanced approach: some layers continue to use MoBA for speed, while a smaller number of layers revert to full attention. This hybrid approach can be particularly beneficial when performing supervised fine-tuning, where certain positions in the input might be masked out from the training objective. By preserving full attention in a few upper layers, the model can retain broad context coverage, benefiting tasks that require more global perspective.
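The hybrid regime can be pictured as a per-layer schedule. The sketch below assumes, as the paragraph above suggests, that the full-attention layers sit at the top of the stack. The function name, the `"moba"` and `"full"` labels, and the layer counts are hypothetical and are not taken from the technical report.

```python
def hybrid_schedule(num_layers, num_full_layers):
    """Return one attention mode per layer, from the bottom layer to the top.

    The lower layers use block-sparse attention ("moba"); the last
    `num_full_layers` layers fall back to full attention ("full").
    """
    if not 0 <= num_full_layers <= num_layers:
        raise ValueError("num_full_layers must be between 0 and num_layers")
    num_sparse = num_layers - num_full_layers
    return ["moba"] * num_sparse + ["full"] * num_full_layers

if __name__ == "__main__":
    # Illustrative: a 6-layer stack with full attention in the top 2 layers.
    for index, mode in enumerate(hybrid_schedule(6, 2)):
        print(f"layer {index}: {mode}")
```

Setting `num_full_layers` to 0 gives a fully sparse stack, and setting it to `num_layers` recovers a standard full-attention model, so the same schedule covers both ends of the trade-off.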

Overall, these findings suggest that MoBA is well-suited for tasks that involve extensive context, such as reading comprehension of long documents, large-scale code completion, or multi-turn dialogue systems where the entire conversation history becomes essential. Its practical efficiency gains and minimal performance trade-offs position MoBA as an appealing method for making large language models more efficient at scale.

Conclusion

Mixture of Block Attention (MoBA) provides a pathway toward more efficient long-context processing in large language models, without an extensive overhaul of the Transformer architecture and with only minimal performance trade-offs. By adopting Mixture of Experts ideas within the attention module, MoBA offers a learnable yet sparse way to focus on relevant portions of very long inputs. The adaptability of its design, particularly the ability to switch between sparse and full attention, makes it attractive for ongoing and future training pipelines. Researchers can tune how aggressively to trim the attention pattern, or selectively use full attention for tasks that demand exhaustive coverage.

Although MoBA has so far been discussed mainly for text, the underlying mechanism may also hold promise for other data modalities. Wherever sequence lengths are large enough to raise computational or memory concerns, the notion of assigning queries to block experts could alleviate bottlenecks while preserving the capacity to handle essential global dependencies. As sequence lengths in language applications continue to grow, approaches like MoBA may play a critical role in advancing the scalability and cost-effectiveness of neural language modeling.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post Moonshot AI Research Introduce Mixture of Block Attention (MoBA): A New AI Approach that Applies the Principles of Mixture of Experts (MoE) to the Attention Mechanism appeared first on MarkTechPost.
