Humans possess an innate understanding of physics, expecting objects to behave predictably without abrupt changes in position, shape, or color. This basic cognition is also observed in infants, primates, birds, and marine mammals, which supports the core knowledge hypothesis: the idea that humans have evolved dedicated systems for reasoning about objects, space, and agents. While AI surpasses humans in complex tasks like coding and mathematics, it struggles with intuitive physics, an instance of Moravec’s paradox, in which tasks that are trivial for biological organisms prove hard for machines. AI approaches to physical reasoning fall into two categories: structured models, which simulate object interactions using predefined rules, and pixel-based generative models, which predict future sensory inputs without explicit abstractions.

Researchers from FAIR at Meta, Univ Gustave Eiffel, and EHESS explore how general-purpose deep neural networks develop an understanding of intuitive physics by predicting masked regions in natural videos. Using the violation-of-expectation framework, they demonstrate that models trained to predict outcomes in an abstract representation space, such as Joint Embedding Predictive Architectures (JEPAs), can accurately recognize physical properties like object permanence and shape consistency. In contrast, video prediction models operating in pixel space and multimodal large language models perform close to chance. This suggests that learning in an abstract space, rather than relying on predefined rules, is sufficient to acquire an intuitive understanding of physics.

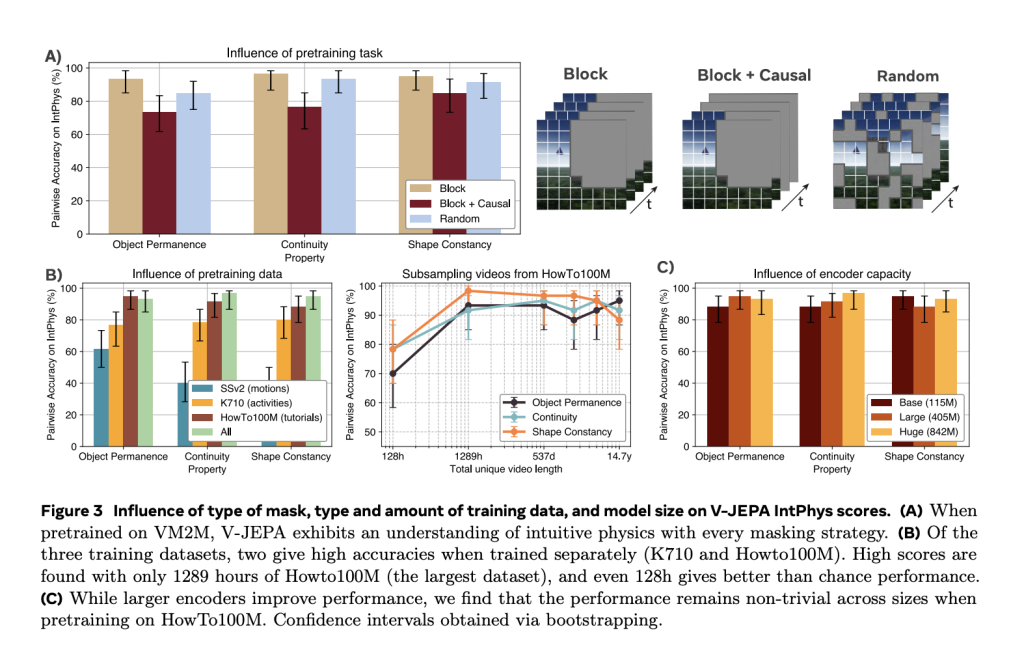

The study focuses on a video-based JEPA model, V-JEPA, which predicts masked portions of video in a learned representation space, aligning with the predictive coding theory in neuroscience. V-JEPA achieved 98% zero-shot accuracy on the IntPhys benchmark and 62% on the InfLevel benchmark, outperforming the other models tested. Ablation experiments revealed that intuitive physics understanding emerges robustly across different model sizes and training durations. Even a small 115-million-parameter V-JEPA model, or one trained on just one week of video, showed above-chance performance. These findings challenge the notion that intuitive physics requires innate core knowledge and highlight the potential of abstract prediction models in developing physical reasoning.

The violation-of-expectation paradigm in developmental psychology assesses intuitive physics understanding by observing reactions to physically impossible scenarios. Traditionally applied to infants, this method measures surprise through indicators like looking time. More recently, it has been extended to AI systems by presenting them with paired visual scenes, one of which includes a physical impossibility, such as a ball that passes behind an occluder and never reappears. For a model, surprise is read off from how badly its prediction of the video matches what is actually shown. The V-JEPA architecture learns high-level representations by predicting masked portions of videos. This approach enables the model to develop an implicit understanding of object dynamics without relying on predefined abstractions, as shown by its higher surprise at physically impossible events in video sequences.
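To make the idea of measuring surprise concrete, here is a minimal sketch of how prediction error in a representation space can act as a surprise score. It is an illustration under stated assumptions, not the study's implementation: the two-number "representation" per frame (position, visibility), the `linear_extrapolation` predictor, and the choice of taking the maximum error over time are all hypothetical stand-ins for a trained encoder and predictor.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def l1_distance(a: Vector, b: Vector) -> float:
    """Mean absolute difference between two representation vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def surprise(
    frames: Sequence[Vector],
    predict: Callable[[Sequence[Vector]], Vector],
    context: int = 2,
) -> float:
    """Slide over the video, predict each frame's representation from the
    preceding `context` frames, and return the largest prediction error."""
    errors = [
        l1_distance(predict(frames[t - context:t]), frames[t])
        for t in range(context, len(frames))
    ]
    return max(errors)


def linear_extrapolation(ctx: Sequence[Vector]) -> Vector:
    """Hypothetical predictor: assume each feature keeps changing at the
    same rate as between the last two context frames."""
    prev, last = ctx[-2], ctx[-1]
    return [2 * l - p for p, l in zip(prev, last)]


# Toy per-frame representations: [position, visibility] (assumed, not real data).
possible = [[0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 1.0], [4.0, 1.0]]
# Same motion, but the object abruptly vanishes at the fourth frame.
impossible = [[0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 0.0], [4.0, 0.0]]

print(surprise(possible, linear_extrapolation))    # 0.0
print(surprise(impossible, linear_extrapolation))  # 0.5
```

The impossible clip scores higher because the predictor's expectation (the object stays visible) is violated. A real model would play the role of `predict`, and the frames would be learned embeddings rather than hand-written features.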

V-JEPA was tested on datasets such as IntPhys, GRASP, and InfLevel-lab to benchmark intuitive physics comprehension, assessing properties like object permanence, continuity, and gravity. Compared to other models, including VideoMAEv2 and multimodal language models like Qwen2-VL-7B and Gemini 1.5 Pro, V-JEPA achieved significantly higher accuracy, indicating that prediction in a learned representation space improves physical reasoning. Statistical analyses confirmed its superiority over untrained networks across multiple properties, supporting the view that self-supervised video prediction fosters an understanding of real-world physics. These findings highlight how challenging intuitive physics remains for existing AI models and suggest that predictive learning in a learned representation space is key to improving AI’s physical reasoning abilities.
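Accuracy on paired benchmarks of this kind can be understood as the fraction of pairs in which the model is more surprised by the impossible video than by its possible counterpart, so chance level is 50%. The sketch below illustrates that scoring rule only; the function name `pairwise_accuracy` and the surprise scores are made up for the example and are not results from the study.

```python
from typing import Iterable, Tuple


def pairwise_accuracy(pairs: Iterable[Tuple[float, float]]) -> float:
    """Fraction of (possible, impossible) surprise pairs in which the
    impossible video received the strictly higher surprise score."""
    pairs = list(pairs)
    correct = sum(
        1 for s_possible, s_impossible in pairs if s_impossible > s_possible
    )
    return correct / len(pairs)


# Hypothetical surprise scores: (possible video, impossible video).
toy_scores = [(0.10, 0.45), (0.20, 0.60), (0.30, 0.25), (0.15, 0.40)]

print(pairwise_accuracy(toy_scores))  # 0.75
```

Because each pair is scored by a simple comparison, no task-specific training or threshold is needed, which is what allows a pretrained model to be evaluated zero-shot.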

In conclusion, the study explores how state-of-the-art deep learning models develop an understanding of intuitive physics. Pretrained on natural videos with a prediction task in a learned representation space, V-JEPA demonstrates intuitive physics comprehension without task-specific adaptation. The results suggest this ability arises from general learning principles rather than hardwired knowledge. However, V-JEPA struggles with object interactions, likely due to training limitations and the short video clips it can process. Enhancing model memory and incorporating action-based learning could improve performance. Future research may examine models trained on infant-like visual data, further testing the potential of predictive learning for physical reasoning in AI.

The post Learning Intuitive Physics: Advancing AI Through Predictive Representation Models appeared first on MarkTechPost.
