Diffusion models have emerged as a leading generative AI framework, excelling in tasks such as image synthesis, video generation, text-to-image generation, and molecular design. These models rely on two stochastic processes: a forward process that incrementally adds noise to data until it is converted into Gaussian noise, and a reverse process that reconstructs samples by learning to remove this noise. Key formulations include denoising diffusion probabilistic models (DDPM), score-based generative models (SGM), and score-based stochastic differential equations (score SDEs). DDPM employs Markov chains for gradual denoising, while SGM estimates score functions to guide sampling using Langevin dynamics. Score SDEs extend these techniques to continuous-time diffusion. Because sampling is computationally expensive, recent research has focused on improving convergence rates, measured in Kullback–Leibler divergence, total variation, or Wasserstein distance, with the aim of reducing their dependence on data dimensionality.
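To make the forward process concrete, here is a minimal NumPy sketch of DDPM-style noising. The linear noise schedule, its endpoints, and the helper names (`linear_beta_schedule`, `forward_noise`) are illustrative assumptions rather than details from the study. It uses the standard closed form x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, so a noisy sample at any step can be drawn directly from the clean data.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances; the linear shape and endpoints are assumptions."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_noise(x0, t, alpha_bar, rng):
    """Draw x_t given x_0 in one shot using the cumulative product alpha_bar."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

rng = np.random.default_rng(0)
betas = linear_beta_schedule(1000)
alpha_bar = np.cumprod(1.0 - betas)  # fraction of signal variance left at each step

# Toy "data": 2000 points in 8 dimensions, clearly non-Gaussian (uniform).
x0 = rng.uniform(-1.0, 1.0, size=(2000, 8))

# At the last step almost no signal remains, so x_T is close to standard Gaussian noise.
x_T, _ = forward_noise(x0, len(betas) - 1, alpha_bar, rng)
```

The reverse process then has to undo this corruption step by step, which is where the learned score function comes in.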

Recent studies have sought to improve diffusion model efficiency by addressing the exponential dependence on data dimensions. Initial research showed that convergence rates scale poorly with dimensionality, making large-scale applications challenging. To counter this, newer analyses assume L2-accurate score estimates, smoothness properties, and bounded moments in order to obtain better guarantees. Techniques such as underdamped Langevin dynamics and Hessian-based accelerated samplers have demonstrated polynomial scaling in dimensionality, reducing computational burdens. Other methods leverage ordinary differential equations (ODEs) to refine total variation and Wasserstein convergence rates. Additionally, studies on low-dimensional subspaces show improved efficiency under structured assumptions. Together, these advances make diffusion models more practical for real-world applications.

Researchers from Hamburg University’s Department of Mathematics, Computer Science, and Natural Sciences explore how sparsity, a well-established statistical concept, can enhance the efficiency of diffusion models. Their theoretical analysis demonstrates that applying ℓ1-regularization reduces computational complexity by limiting the impact of input dimensionality, leading to convergence rates that scale as s^2/τ, where s ≪ d is a much smaller intrinsic dimension than the input dimension d, instead of the conventional d^2/τ. Empirical experiments on image datasets confirm these theoretical predictions, showing that sparsity improves sample quality and prevents over-smoothing. The study advances diffusion model optimization, offering a more computationally efficient approach through statistical regularization techniques.
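As a rough illustration of how an ℓ1 penalty induces sparsity, the sketch below fits a toy linear score model with a denoising score matching loss plus an ℓ1 term, using proximal gradient steps (soft-thresholding). This is not the authors' estimator: the linear model, the choice to penalize its weight matrix `W`, the single noise level `sigma`, and all hyperparameters are assumptions made for the example. The toy data has only `s = 2` active coordinates out of `d = 10`.

```python
import numpy as np

def soft_threshold(w, thresh):
    """Proximal operator of thresh * ||w||_1: shrinks entries and sets small ones to 0."""
    return np.sign(w) * np.maximum(np.abs(w) - thresh, 0.0)

def penalized_loss(W, x_t, target, lam):
    """Denoising score matching loss for the linear score x -> W x, plus an l1 penalty."""
    residual = x_t @ W.T - target
    return 0.5 * np.mean(np.sum(residual ** 2, axis=1)) + lam * np.abs(W).sum()

def proximal_step(W, x_t, target, lam, lr):
    """Gradient step on the smooth part, then soft-thresholding for the l1 part."""
    residual = x_t @ W.T - target
    grad = residual.T @ x_t / len(x_t)
    return soft_threshold(W - lr * grad, lr * lam)

rng = np.random.default_rng(0)
n, d, s, sigma, lam, lr = 5000, 10, 2, 1.0, 0.05, 0.1  # all values are assumptions

# Toy data: only the first s coordinates carry signal, the rest are exactly zero.
x0 = np.zeros((n, d))
x0[:, :s] = rng.standard_normal((n, s))

eps = rng.standard_normal((n, d))
x_t = x0 + sigma * eps   # noised data at a single noise level
target = -eps / sigma    # score of the Gaussian noising kernel q(x_t | x_0)

W = np.zeros((d, d))
loss_start = penalized_loss(W, x_t, target, lam)
for _ in range(200):
    W = proximal_step(W, x_t, target, lam, lr)
loss_end = penalized_loss(W, x_t, target, lam)

# Fraction of off-diagonal weights the penalty has set exactly to zero.
off_diag = W[~np.eye(d, dtype=bool)]
zero_fraction = np.mean(off_diag == 0.0)
```

In this toy setup the true score is diagonal, so the penalty can drive the spurious off-diagonal weights to exactly zero, which plain least squares would leave as small nonzero values.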

The study explains score matching and the discrete-time diffusion process. Score matching is a technique for estimating the score of a distribution, that is, the gradient of its log-density, which is essential for generative models. A neural network is trained to approximate this gradient, allowing sampling from the desired distribution. The diffusion process gradually adds noise to data, creating a sequence of variables. The reverse process reconstructs data using the learned gradients, often through Langevin dynamics. Regularized score matching, particularly with sparsity constraints, improves efficiency. The proposed method speeds up convergence in diffusion models, reducing the complexity from the square of the data dimension to the square of a much smaller sparsity level.
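The role of the score in sampling can be sketched with unadjusted Langevin dynamics. In a diffusion model the score would come from a trained network; here, as a simplifying assumption, the target is a Gaussian N(mu, I) whose score −(x − mu) is known in closed form. The function names, step size, and number of steps are illustrative choices.

```python
import numpy as np

def gaussian_score(x, mu):
    """Score (gradient of the log-density) of N(mu, I); stands in for a learned network."""
    return -(x - mu)

def langevin_sample(score_fn, x_init, step_size, num_steps, rng):
    """Unadjusted Langevin dynamics: drift along the score plus injected Gaussian noise."""
    x = x_init.copy()
    for _ in range(num_steps):
        noise = rng.standard_normal(x.shape)
        x = x + 0.5 * step_size * score_fn(x) + np.sqrt(step_size) * noise
    return x

rng = np.random.default_rng(0)
mu = np.array([2.0, -1.0])

# Run 4000 independent chains, all started from standard Gaussian noise.
x_init = rng.standard_normal((4000, 2))
samples = langevin_sample(lambda x: gaussian_score(x, mu), x_init,
                          step_size=0.05, num_steps=500, rng=rng)

sample_mean = samples.mean(axis=0)
sample_std = samples.std(axis=0)
```

With a small step size the chains settle near the target distribution; larger steps run faster but add discretization bias, which is one reason the number of sampling steps matters so much in practice.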

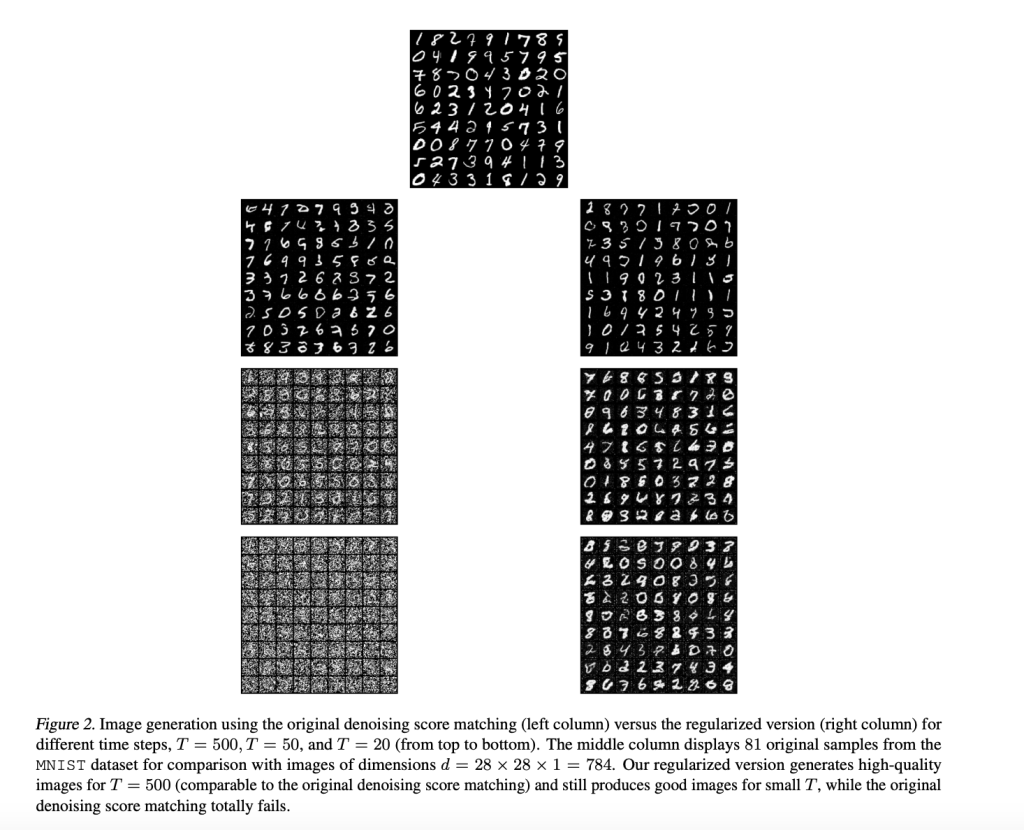

The study explores the impact of regularization in diffusion models, focusing on mathematical proofs and empirical evaluations. It introduces techniques to minimize reverse-step errors and optimize tuning parameters, improving the sampling process’s efficiency. Controlled experiments with three-dimensional Gaussian data show that regularization enhances structure and focus in the generated samples. Similarly, tests on handwritten digit datasets demonstrate that conventional methods struggle with fewer sampling steps, whereas the regularized approach consistently produces high-quality images, even with reduced computational effort.

Further evaluations on fashion-related datasets reveal that standard score matching generates over-smoothed and imbalanced outputs, while the regularized method achieves more realistic and evenly distributed results. The study highlights that regularization reduces computational complexity by shifting dependence from input dimensions to a smaller intrinsic dimension, making diffusion models more efficient. Beyond the sparsity-inducing techniques applied here, other forms of regularization could further enhance performance. The findings suggest that incorporating sparsity principles can significantly improve diffusion models, keeping them computationally feasible while maintaining high-quality outputs.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Enhancing Diffusion Models: The Role of Sparsity and Regularization in Efficient Generative AI appeared first on MarkTechPost.
