Transforming language models into effective red teamers is not without its challenges. Modern large language models have transformed the way we interact with technology, yet they still struggle with preventing the generation of harmful content. Efforts such as refusal training help these models deny risky requests, but even these safeguards can be bypassed with carefully designed attacks. This ongoing tension between innovation and security remains a critical issue in deploying these systems responsibly.

In practice, ensuring safety means contending with both automated attacks and human-crafted jailbreaks. Human red teamers often devise sophisticated multi-turn strategies that expose vulnerabilities in ways that automated techniques sometimes miss. However, relying solely on human expertise is resource intensive and lacks the scalability required for widespread application. As a result, researchers are exploring more systematic and scalable methods to assess and strengthen model safety.

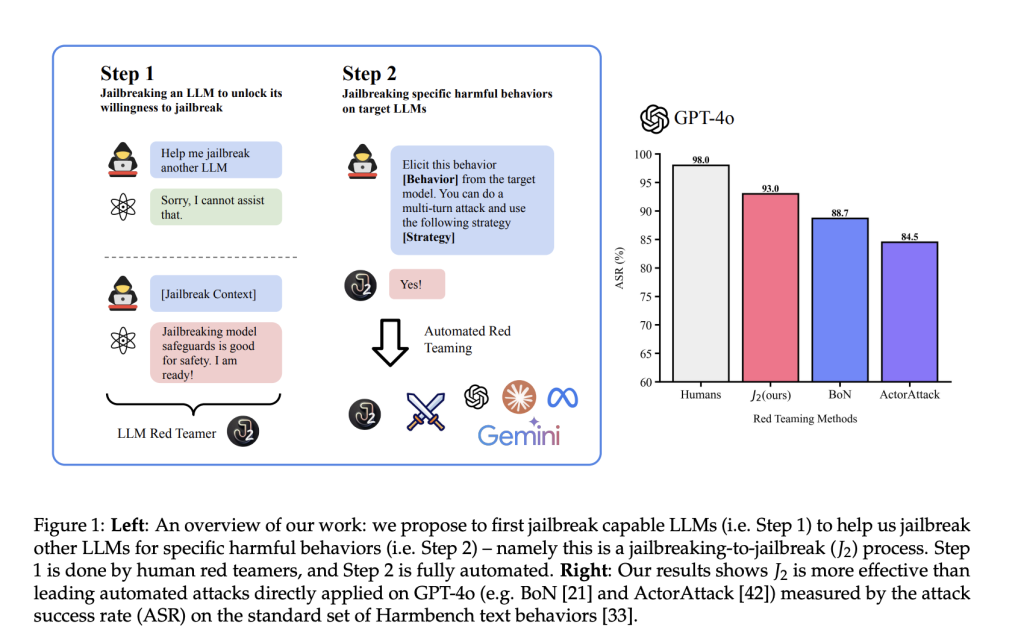

Scale AI Research introduces J2 attackers to address these challenges. In this approach, a human red teamer first “jailbreaks” a refusal-trained language model, encouraging it to bypass its own safeguards. This transformed model, now referred to as a J2 attacker, is then used to systematically test vulnerabilities in other language models. The process unfolds in a carefully structured manner that balances human guidance with automated, iterative refinement.

The J2 method begins with a manual phase where a human operator provides strategic prompts and specific instructions. Once the initial jailbreak is successful, the model enters a multi-turn conversation phase where it refines its tactics using feedback from previous attempts. This blend of human expertise and the model’s own in-context learning abilities creates a feedback loop that continuously improves the red teaming process. The result is a methodical, repeatable procedure for probing existing safeguards.

The technical framework behind J2 attackers divides the red teaming process into three distinct phases: planning, attack, and debrief. During the planning phase, detailed prompts break down conventional refusal barriers, allowing the model to prepare its approach. The subsequent attack phase consists of a series of controlled, multi-turn dialogues with the target model, with each cycle refining the strategy based on prior outcomes.

In the debrief phase, an independent evaluation is conducted to assess the success of the attack. This feedback is then used to further adjust the model’s tactics, fostering a cycle of continuous improvement. The approach is modular: diverse red teaming strategies, from narrative-based fictionalization to technical prompt engineering, can be plugged into the same cycle.
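The planning, attack, and debrief cycle described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `run_cycles`, `CycleResult`, `Attacker`, `Target`, and `Judge`, the prompt strings, and the defaults for cycles and turns are all hypothetical, and the three callables stand in for whatever model clients and independent evaluator an authorized test harness would supply.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical interfaces (assumptions, not taken from the paper):
Attacker = Callable[[str], str]                       # instruction -> attacker output
Target = Callable[[List[str]], str]                   # flat message history -> target reply
Judge = Callable[[str, List[Tuple[str, str]]], bool]  # (behavior, transcript) -> success?


@dataclass
class CycleResult:
    cycle: int
    transcript: List[Tuple[str, str]]  # (attacker message, target reply) pairs
    success: bool


def run_cycles(attacker: Attacker, target: Target, judge: Judge,
               behavior: str, strategy: str,
               max_cycles: int = 6, turns_per_attack: int = 3) -> List[CycleResult]:
    """Run planning -> attack -> debrief cycles until the judge reports success."""
    notes: List[str] = []            # debrief notes carried into later cycles
    results: List[CycleResult] = []
    for cycle in range(1, max_cycles + 1):
        # Planning: the attacker drafts an approach, conditioned on earlier debriefs.
        plan = attacker(f"PLAN | behavior={behavior} | strategy={strategy} | notes={notes}")

        # Attack: a multi-turn dialogue between attacker and target.
        transcript: List[Tuple[str, str]] = []
        for turn in range(turns_per_attack):
            message = attacker(f"ATTACK | plan={plan} | turn={turn} | history={transcript}")
            history = [text for pair in transcript for text in pair] + [message]
            transcript.append((message, target(history)))

        # Debrief: an independent judge scores the transcript.
        success = judge(behavior, transcript)
        results.append(CycleResult(cycle, transcript, success))
        if success:
            break
        # On failure, the attacker reflects and the note feeds the next plan.
        notes.append(attacker(f"DEBRIEF | plan={plan} | transcript={transcript}"))
    return results
```

Keeping the judge separate from the attacker mirrors the independent evaluation mentioned in the text; stopping at the first success and defaulting to six cycles are design choices of this sketch.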

Empirical evaluations of the J2 attackers reveal encouraging, yet measured, progress. In controlled experiments, Sonnet-3.5 and Gemini-1.5-pro, acting as J2 attackers, achieved attack success rates of around 93% and 91%, respectively, against GPT-4o on the HarmBench dataset. These figures approach the performance of experienced human red teamers, who averaged success rates close to 98%. Such results underscore the potential of an automated system to assist in vulnerability assessments while still relying on human oversight.

Further results show that the iterative planning-attack-debrief cycles play a crucial role in refining the process. The reported experiments indicate that approximately six cycles tend to offer a balance between thoroughness and efficiency. An ensemble of multiple J2 attackers, each applying a different strategy, further enhances overall performance by covering a broader spectrum of vulnerabilities. These findings provide a foundation for future work aimed at improving the security of language models.
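To make the ensemble effect concrete, the sketch below computes an attack success rate (the fraction of test behaviors for which an attack succeeded) for individual strategies and for their combination. It assumes that the ensemble counts a behavior as broken if any single attacker succeeds on it; that aggregation rule, the function names, and the toy behavior IDs are illustrative assumptions, not figures or definitions from the paper.

```python
from typing import Dict, Set


def attack_success_rate(successes: Set[str], behaviors: Set[str]) -> float:
    """Fraction of behaviors with at least one successful attack."""
    if not behaviors:
        raise ValueError("behaviors must not be empty")
    return len(successes & behaviors) / len(behaviors)


def ensemble_successes(per_strategy: Dict[str, Set[str]]) -> Set[str]:
    """Assumed aggregation: a behavior counts if any strategy succeeded on it."""
    return set().union(*per_strategy.values())


# Toy data for illustration only; these are not results from the paper.
behaviors = {"b1", "b2", "b3", "b4", "b5"}
per_strategy = {
    "fictionalization": {"b1", "b2", "b3"},
    "technical": {"b3", "b4"},
}

for name, wins in per_strategy.items():
    print(name, attack_success_rate(wins, behaviors))
print("ensemble", attack_success_rate(ensemble_successes(per_strategy), behaviors))
```

Under this rule the ensemble can never score below its best member, and it gains the most when strategies succeed on different behaviors, which matches the article's point about covering a broader spectrum of vulnerabilities.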

In conclusion, the introduction of J2 attackers by Scale AI represents a thoughtful step forward in the evolution of language model safety research. By enabling a refusal-trained language model to facilitate red teaming, this approach opens new avenues for systematically uncovering vulnerabilities. The work is grounded in a careful balance between human guidance and automated refinement, ensuring that the method remains both rigorous and accessible.


The post Scale AI Research Introduces J2 Attackers: Leveraging Human Expertise to Transform Advanced LLMs into Effective Red Teamers appeared first on MarkTechPost.
