Large Language Models (LLMs) have shown exceptional capabilities in complex reasoning tasks through recent advancements in scaling and specialized training approaches. While models like OpenAI o1 and DeepSeek R1 have set new benchmarks in addressing reasoning problems, a significant disparity exists in their performance across different languages. The dominance of English and Chinese in training data for foundation models like Llama and Qwen has created a substantial capability gap for low-resource languages. When used in such languages, these models often produce incorrect characters and switch languages mid-response (code-switching). These issues become more pronounced during reasoning-focused fine-tuning and reinforcement learning.

Regional LLM initiatives have emerged to address low-resource language limitations through specialized pretraining and post-training approaches. Projects like Typhoon, Sailor, EuroLLM, Aya, Sea-lion, and SeaLLM have focused on adapting models for specific target languages. However, data-centric approaches to adapting reasoning capabilities are hampered by a lack of transparency: the data recipes behind reasoning models are rarely disclosed. Moreover, scaling requires substantial computational resources, as evidenced by DeepSeek R1 70B’s requirement of 800K examples for distillation and general SFT, far exceeding academic efforts like Sky-T1 and Bespoke-Stratos. Model merging has emerged as an alternative, showing promise in combining the weights of multiple specialized models to improve performance across tasks without additional training.
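To make the idea of combining weights concrete, here is a minimal sketch of the simplest form of model merging: a linear interpolation of two checkpoints, parameter by parameter. It is an illustration only and not the method used in the study; the function name `linear_merge`, the toy parameter names, and the flat lists standing in for weight tensors are all assumptions made for this example.

```python
def linear_merge(state_a, state_b, weight_a=0.5):
    """Interpolate two checkpoints parameter by parameter.

    Both checkpoints are plain dicts mapping a parameter name to a flat
    list of floats (a hypothetical stand-in for real weight tensors).
    """
    if state_a.keys() != state_b.keys():
        raise ValueError("checkpoints must share the same parameter names")
    merged = {}
    for name, values_a in state_a.items():
        values_b = state_b[name]
        if len(values_a) != len(values_b):
            raise ValueError(f"shape mismatch for {name}")
        # Weighted average: weight_a for model A, the remainder for model B.
        merged[name] = [
            weight_a * a + (1.0 - weight_a) * b
            for a, b in zip(values_a, values_b)
        ]
    return merged


# Toy checkpoints: one "language" model and one "reasoning" model.
language_model = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
reasoning_model = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
merged = linear_merge(language_model, reasoning_model, weight_a=0.5)
print(merged)
```

No gradient steps are involved, which is why merging is cheap compared with further training. It does require that both models share the same architecture and parameter names.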

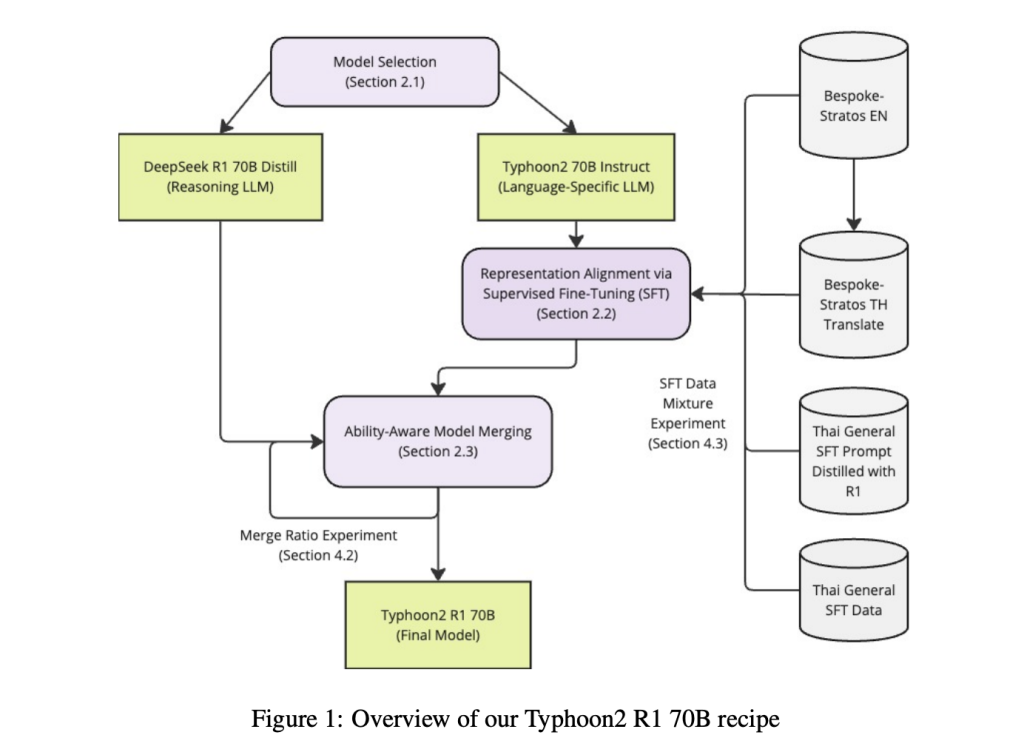

Researchers from SCB 10X R&D and SCBX Group in Bangkok, Thailand have proposed an approach to enhance reasoning capabilities in language-specific LLMs, focusing on Thai language models. The research combines data selection and model merging methods to incorporate advanced reasoning capabilities similar to those of DeepSeek R1 while maintaining target language proficiency. The study addresses the challenge of improving reasoning abilities in low-resource language models using only publicly available datasets and a modest computational budget of $1,201. According to the researchers, the resulting model matches DeepSeek R1’s reasoning capabilities without compromising performance on target language tasks.

The methodology uses Typhoon2 70B Instruct and DeepSeek R1 70B Distill as base models. The approach applies Supervised Fine-Tuning (SFT) to Typhoon2 70B and then merges the result with DeepSeek R1 70B Distill. The training configuration employs LoRA with rank 32 and α of 16. The system uses sequence packing with a maximum sequence length of 16,384 tokens, alongside Liger kernels, FlashAttention-2, and DeepSpeed ZeRO-3 to optimize computational efficiency. Training runs on 4×H100 GPUs for up to 15 hours using Axolotl, with model merging performed via Mergekit. The evaluation focuses on two key aspects: reasoning capability and language task performance, using benchmarks like AIME 2024, MATH-500, and LiveCodeBench, with Thai translations used for assessment.
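The LoRA settings above can be read through the standard LoRA formulation, in which a frozen weight matrix W is adjusted by a low-rank product B·A scaled by α divided by the rank. The sketch below shows what rank 32 and α of 16 imply for that scale factor. The tiny 4×4 matrix and the constant adapter values are assumptions chosen so the arithmetic is easy to follow; they do not reflect the actual training setup or a real adapter initialization.

```python
LORA_RANK = 32   # rank reported in the article
LORA_ALPHA = 16  # alpha reported in the article


def matmul(x, y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_effective_weight(w, lora_b, lora_a, alpha):
    """Return W + (alpha / rank) * (B @ A), with rank read from A's row count."""
    rank = len(lora_a)
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


scale = LORA_ALPHA / LORA_RANK

# Toy shapes: W is 4x4, B is 4x32, A is 32x4 (hypothetical values).
dim = 4
w = [[0.0] * dim for _ in range(dim)]
lora_b = [[1.0] * LORA_RANK for _ in range(dim)]
lora_a = [[0.25] * dim for _ in range(LORA_RANK)]

effective = lora_effective_weight(w, lora_b, lora_a, LORA_ALPHA)
print(scale, effective[0])
```

With α smaller than the rank, the adapter's contribution is scaled down by half before it is added to the frozen weights. Only the two small matrices A and B are trained, which is part of what keeps a 70B fine-tune within a modest budget.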

Experimental results reveal that DeepSeek R1 70B Distill excels in reasoning tasks like AIME and MATH-500 but shows reduced effectiveness in Thai-specific tasks such as MTBench-TH and language accuracy evaluations. Typhoon2 70B Instruct performs strongly on language-specific tasks but struggles with reasoning challenges, achieving only 10% accuracy on AIME and trailing DeepSeek R1 by over 20% on MATH-500. The final model, Typhoon2-R1-70B, combines DeepSeek R1’s reasoning capabilities with Typhoon2’s Thai language proficiency, achieving performance within 4% of Typhoon2 on language tasks while maintaining comparable reasoning abilities. This results in performance improvements of 41.6% over Typhoon2 and 12.8% over DeepSeek R1.

In conclusion, the researchers present an approach to enhance reasoning capabilities in language-specific models by combining specialized models. While the study shows that SFT and model merging can effectively transfer reasoning capabilities with limited resources, several limitations exist in the current methodology. The research scope was confined to DARE merging in a two-model setup within a single model family, without optimizing instruction tuning despite available high-quality datasets like Tulu3. Significant challenges persist in multilingual reasoning and model merging, including the lack of culturally aware reasoning traces. Despite these challenges, the research marks a step toward advancing LLM capabilities in underrepresented languages.
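For readers unfamiliar with DARE (Drop And REscale), the core idea can be sketched in a few lines: take the difference between a fine-tuned model and a shared base, randomly zero out a fraction of that difference, rescale the surviving entries so the expected update is unchanged, and add the weighted results back onto the base. The code below is a toy illustration under stated assumptions, not the Mergekit implementation or the exact configuration used in the study; the function names, the flat lists standing in for weight tensors, the drop rate, and the merge weights are all hypothetical.

```python
import random


def dare_delta(finetuned, base, drop_rate, rng):
    """Drop each delta entry with probability drop_rate, rescale the rest."""
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    keep_scale = 1.0 / (1.0 - drop_rate)
    sparse = []
    for f, b in zip(finetuned, base):
        delta = f - b
        # Dropped entries become zero; survivors are scaled up so the
        # expected value of the delta is preserved.
        sparse.append(0.0 if rng.random() < drop_rate else delta * keep_scale)
    return sparse


def dare_merge(base, finetuned_models, weights, drop_rate, seed=0):
    """Add the weighted, sparsified deltas of each model onto the base."""
    rng = random.Random(seed)
    merged = list(base)
    for model, weight in zip(finetuned_models, weights):
        sparse = dare_delta(model, base, drop_rate, rng)
        merged = [m + weight * d for m, d in zip(merged, sparse)]
    return merged


# Toy parameters for a shared base and two fine-tuned descendants.
base = [0.0, 0.0, 0.0]
language_model = [1.0, 1.0, 1.0]
reasoning_model = [2.0, 2.0, 2.0]

no_drop = dare_merge(base, [language_model, reasoning_model], [0.5, 0.5], drop_rate=0.0)
with_drop = dare_merge(base, [language_model, reasoning_model], [0.5, 0.5], drop_rate=0.5)
print(no_drop, with_drop)
```

With a drop rate of zero the procedure reduces to a weighted sum of deltas. Higher drop rates make each model's update sparser, which is intended to reduce interference between the models being merged.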

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Enhancing Reasoning Capabilities in Low-Resource Language Models through Efficient Model Merging appeared first on MarkTechPost.
