Reasoning tasks remain a major challenge for most language models. Instilling reasoning aptitude in models, particularly for programming and mathematical applications that demand solid sequential reasoning, still seems a distant goal. The difficulty can be attributed to the inherent complexity of these tasks, which require multi-step logical deduction, planned with domain knowledge, to find a structured solution path.

LLMs are therefore typically supervised on massive datasets with hundreds of thousands of examples. This practice rests on two assumptions: first, that such a cognitive skill can be learned only from many supervised examples; and second, that this kind of training inevitably leads to memorization rather than generalization. The approach also brings high computational costs and a heavy data-collection burden. This article discusses an approach that draws on advances in the knowledge foundations of LLMs and in inference-time computation to eliminate the enormous data requirements.

Researchers from Shanghai Jiao Tong University present the Less-Is-More (LIMO) hypothesis, which states that in foundation models whose domain knowledge has been comprehensively encoded during pre-training, sophisticated reasoning capabilities can be instilled through minimal but precise demonstrations of cognitive processes. The hypothesis stems from recent developments in the LLM space, where developers incorporate unprecedented amounts of mathematical content during pre-training, enriching models with mathematical and programming logic before deployment. The emergence of techniques for scaling longer reasoning chains has also motivated this research significantly.

According to the LIMO hypothesis, the elicitation threshold for complex reasoning is determined by two key factors:

- The latent presence of prerequisite knowledge within the model’s parameter space (the domain knowledge instilled during the pre-training)

- The effectiveness of minimal exemplars in demonstrating systematic problem-solving processes (post-training examples that act as cognitive templates, showing the model how to apply its available knowledge to reasoning tasks)

Thus, LIMO leverages the rich knowledge embedded during pre-training and supplies detailed reasoning through a minimal but well-structured set of demonstrations. The method prioritizes the quality and structure of these demonstrations over their quantity, pushing the model to “think” with the help of past lessons rather than simply recalling them. In this way, the pipeline challenges the notion that supervised fine-tuning merely makes the model memorize. The authors further investigated the relationship between reasoning and data and identified critical factors, including the synergy between pre-trained knowledge foundations and test-time computation scaling.
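The idea of favoring quality over quantity can be illustrated with a minimal sketch. It is not the authors' curation pipeline: the `Example` fields, the `difficulty` and `chain_quality` scores, and the `curate` helper are all hypothetical names introduced here purely for illustration, assuming each candidate example has already been scored somehow.

```python
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    reasoning_chain: str
    difficulty: float      # hypothetical score in [0, 1]
    chain_quality: float   # hypothetical score in [0, 1]


def curate(pool: list[Example], budget: int, min_difficulty: float = 0.5) -> list[Example]:
    """Keep only sufficiently hard problems, then take the `budget` examples
    whose reasoning chains score highest (illustrative heuristic only)."""
    hard = [ex for ex in pool if ex.difficulty >= min_difficulty]
    ranked = sorted(hard, key=lambda ex: ex.chain_quality, reverse=True)
    return ranked[:budget]


# Toy pool with made-up scores, only to show the selection behavior.
pool = [
    Example("q1", "short answer only", difficulty=0.2, chain_quality=0.9),
    Example("q2", "step-by-step derivation", difficulty=0.8, chain_quality=0.7),
    Example("q3", "derivation with self-checks", difficulty=0.9, chain_quality=0.95),
    Example("q4", "partial reasoning", difficulty=0.7, chain_quality=0.3),
]
selected = curate(pool, budget=2)
print([ex.question for ex in selected])
```

The point of the sketch is the shape of the trade-off: a small budget forces the selection to be driven by per-example quality rather than by pool size.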

The authors released a comprehensive open-source suite to ensure reproducibility, including their fine-tuned models, evaluation pipelines, training code, and carefully curated datasets with varying quality levels.

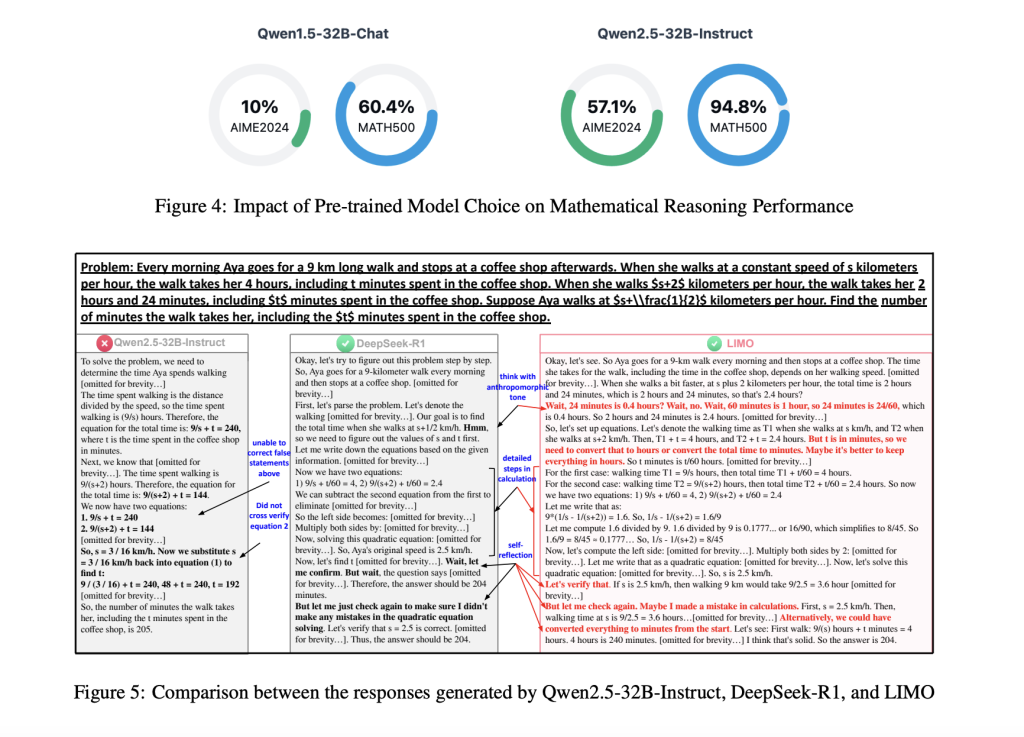

In their experiments, the authors attempted to teach models reasoning with just hundreds of examples instead of the hundreds of thousands used previously. They evaluated LIMO across 10 benchmarks to assess its out-of-distribution generalization. Notably, with only 817 curated training samples, LIMO achieved 57.1% accuracy on the highly challenging American Invitational Mathematics Examination (AIME) benchmark and 94.8% on the MATH dataset, surpassing earlier SFT methods that scored 6.5% and 59.2% on the respective benchmarks. Across the benchmarks, LIMO achieved a 40.5% absolute improvement over models trained on 100 times more data, refuting the first assumption behind supervised training for reasoning.
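As a quick check of the per-benchmark gaps, the snippet below computes the absolute gain in percentage points from the AIME and MATH accuracies quoted above. The dictionary layout and the `absolute_gain` helper are illustrative choices, not from the paper. The 40.5% figure is a separate number reported by the authors and is not derived from these two benchmarks alone.

```python
# Accuracy figures (percent) as quoted in this article.
reported = {
    "AIME": {"limo": 57.1, "prior_sft": 6.5},
    "MATH": {"limo": 94.8, "prior_sft": 59.2},
}


def absolute_gain(new: float, baseline: float) -> float:
    """Absolute improvement in percentage points, rounded to one decimal."""
    return round(new - baseline, 1)


gains = {
    name: absolute_gain(scores["limo"], scores["prior_sft"])
    for name, scores in reported.items()
}

for name, gain in gains.items():
    print(f"{name}: +{gain} percentage points")
# AIME: +50.6 percentage points
# MATH: +35.6 percentage points
```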

Conclusion: The researchers offer an insightful hypothesis about how LLMs should be trained to reason and support it with the LIMO model. The work challenges the assumptions underlying the use of SFT to instill reasoning. LIMO demonstrates that less can be more, delivering strong performance on challenging datasets and surpassing conventional SFT with skillfully orchestrated cognitive templates.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post LIMO: The AI Model that Proves Quality Training Beats Quantity appeared first on MarkTechPost.
