Transformer-based models have significantly advanced natural language processing (NLP) across a wide range of tasks. However, they struggle with reasoning over long contexts, multi-step inference, and numerical reasoning. Two factors drive these challenges: self-attention scales quadratically with sequence length, which makes long inputs expensive to process, and the architecture has no explicit memory, which limits its ability to combine information scattered across a long context. Existing solutions, such as recurrent memory transformers (RMT) and retrieval-augmented generation (RAG), offer partial improvements but often sacrifice either efficiency or generalization.
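To make the quadratic cost concrete, here is a small arithmetic sketch. It counts the pairwise attention scores a single head computes in one layer for a sequence of n tokens, which is n × n. The token counts are illustrative assumptions, not figures from the LM2 paper.

```python
def attention_score_count(n_tokens: int) -> int:
    """Pairwise attention scores for one head in one layer: n * n."""
    return n_tokens * n_tokens


# Illustrative sequence lengths (assumed, not taken from the paper).
for n in (1024, 4096, 8192):
    print(f"{n:>5} tokens -> {attention_score_count(n):>12,} scores")
```

Growing the input by a factor of 4 multiplies the number of scores by 16, which is why long sequences quickly become expensive.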

Introducing the Large Memory Model (LM2)

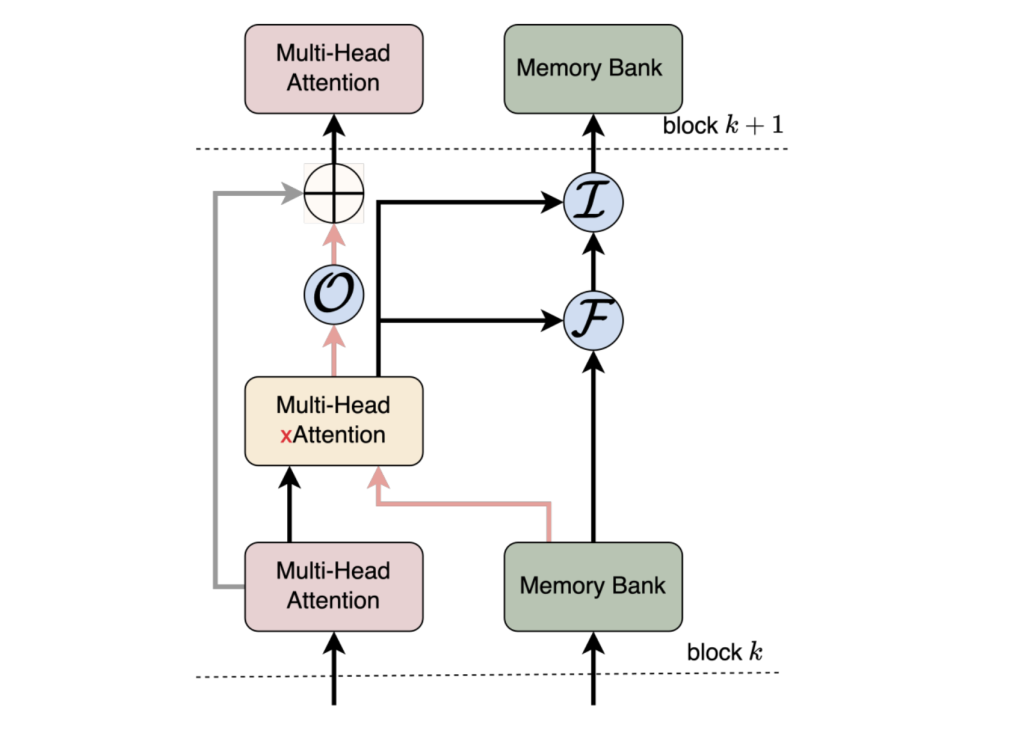

Convergence Labs introduces the Large Memory Model (LM2), a decoder-only Transformer architecture enhanced with an auxiliary memory module to address the shortcomings of conventional models in long-context reasoning. Unlike standard Transformers, which rely solely on attention mechanisms, LM2 incorporates a structured memory system that interacts with input embeddings through cross-attention. The model’s memory updates are regulated by gating mechanisms, allowing it to selectively retain relevant information while preserving generalization capabilities. This design enables LM2 to maintain coherence across long sequences, facilitating improved relational reasoning and inference.
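The memory read described above can be sketched as ordinary cross-attention in which token embeddings act as queries and memory slots supply keys and values. This is a minimal illustration in plain Python, not the authors' implementation: the function names, the dimensions, and the random projection matrices are all assumptions made for the example.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def memory_read(embeddings, memory, w_q, w_k, w_v):
    """Cross-attention: each token embedding queries the memory slots."""
    q = matmul(embeddings, w_q)       # (tokens, d)
    k = matmul(memory, w_k)           # (slots, d)
    v = matmul(memory, w_v)           # (slots, d)
    scale = math.sqrt(len(q[0]))
    scores = matmul(q, transpose(k))  # (tokens, slots)
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights


# Hypothetical sizes chosen only to keep the example small.
rng = random.Random(0)
d_model, n_tokens, n_slots = 8, 4, 3
embeddings = random_matrix(n_tokens, d_model, rng)
memory = random_matrix(n_slots, d_model, rng)
w_q, w_k, w_v = (random_matrix(d_model, d_model, rng) for _ in range(3))

read, weights = memory_read(embeddings, memory, w_q, w_k, w_v)
print(len(read), len(read[0]))  # one memory readout vector per token
```

Each row of `weights` sums to 1, so every token receives a weighted mixture of the memory slots' values.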

Technical Overview and Benefits

LM2 builds on the standard Transformer architecture with three key additions:

- Memory-Augmented Transformer: A dedicated memory bank acts as an explicit long-term storage system, retrieving relevant information through cross-attention.

- Hybrid Memory Pathway: Unlike previous models that modify the Transformer’s core structure, LM2 maintains the original information flow while integrating an auxiliary memory pathway.

- Dynamic Memory Updates: The memory module selectively updates its stored information using learnable input, forget, and output gates, ensuring long-term retention without unnecessary accumulation of irrelevant data.

These enhancements allow LM2 to process long sequences more effectively while maintaining computational efficiency. By selectively incorporating relevant memory content, the model mitigates the gradual performance decline often observed in traditional architectures over extended contexts.
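The gating idea can be illustrated with an LSTM-style update. The article only says that LM2 uses learnable input, forget, and output gates, so the exact formulas below are an assumption, as are the function names. In a trained model the gate logits would come from learned projections; here they are passed in directly to keep the sketch short.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_update(slot, candidate, input_logits, forget_logits):
    """Blend old slot content with new candidate content, element by element.

    Assumed LSTM-style rule: new = forget_gate * old + input_gate * tanh(candidate).
    """
    updated = []
    for old, new, i_logit, f_logit in zip(slot, candidate, input_logits, forget_logits):
        i_gate = sigmoid(i_logit)  # how much new content to write
        f_gate = sigmoid(f_logit)  # how much old content to keep
        updated.append(f_gate * old + i_gate * math.tanh(new))
    return updated


def gated_output(hidden, memory_readout, output_logits):
    """Add the gated memory readout to the hidden state, leaving the main pathway intact."""
    return [h + sigmoid(o) * m for h, m, o in zip(hidden, memory_readout, output_logits)]


# Forget gate near 1 and input gate near 0: the slot is retained almost unchanged.
slot = [1.0, -2.0]
kept = gated_update(slot, candidate=[0.5, 0.5],
                    input_logits=[-20.0, -20.0], forget_logits=[20.0, 20.0])

# Output gate near 0: the memory readout barely alters the hidden state.
hidden = [0.3, 0.7]
passed = gated_output(hidden, memory_readout=[5.0, 5.0], output_logits=[-20.0, -20.0])
print(kept, passed)
```

With the gates at the opposite extremes, the slot would instead be overwritten by the candidate and the readout would be added in full, which is how such a mechanism can retain information selectively.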

Experimental Results and Insights

LM2 was evaluated on the BABILong dataset, which is designed to assess memory-intensive reasoning. The results indicate substantial improvements:

- Short-context performance (0K context length): LM2 achieves an accuracy of 92.5%, surpassing RMT (76.4%) and vanilla Llama-3.2 (40.7%).

- Long-context performance (1K–4K context length): As context length increases, all models experience some degradation, but LM2 maintains a higher accuracy. At 4K context length, LM2 achieves 55.9%, compared to 48.4% for RMT and 36.8% for Llama-3.2.

- Extreme long-context performance (≥8K context length): While all models decline in accuracy, LM2 remains more stable, outperforming RMT in multi-step inference and relational argumentation.
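As a quick check on the figures above, the following sketch computes LM2's lead in percentage points at the two context lengths for which numbers are quoted. It uses only the accuracies stated in this article; the helper name `margin` is introduced here for illustration.

```python
# BABILong accuracies (%) exactly as quoted in the article.
accuracy = {
    "0K": {"LM2": 92.5, "RMT": 76.4, "Llama-3.2": 40.7},
    "4K": {"LM2": 55.9, "RMT": 48.4, "Llama-3.2": 36.8},
}


def margin(context, baseline):
    """LM2's lead over a baseline, in percentage points."""
    return round(accuracy[context]["LM2"] - accuracy[context][baseline], 1)


for context in accuracy:
    for baseline in ("RMT", "Llama-3.2"):
        print(f"{context}: LM2 leads {baseline} by {margin(context, baseline)} points")
```

The lead over RMT is 16.1 points at 0K and 7.5 points at 4K, so LM2 stays ahead while the gap narrows as context grows.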

Beyond memory-specific benchmarks, LM2 was tested on the MMLU dataset, which covers a broad range of academic subjects. The model demonstrated a 5.0% improvement over a pre-trained vanilla Transformer, particularly excelling in Humanities and Social Sciences, where contextual reasoning is crucial. These results indicate that LM2’s memory module enhances reasoning capabilities without compromising general task performance.

Conclusion

LM2 addresses the limitations of standard Transformers in long-context reasoning by integrating an explicit memory module. This improves multi-step inference, relational argumentation, and numerical reasoning while maintaining efficiency and adaptability. Experimental results demonstrate its advantages over existing architectures, particularly in tasks requiring extended context retention. LM2 also performs well on general reasoning benchmarks, suggesting that memory integration does not hinder versatility. As memory-augmented models continue to evolve, LM2 represents a step toward more effective long-context reasoning in language models.

All credit for this research goes to the researchers of this project.

The post Convergence Labs Introduces the Large Memory Model (LM2): A Memory-Augmented Transformer Architecture Designed to Address Long Context Reasoning Challenges appeared first on MarkTechPost.
