GraphStorm is a low-code enterprise graph machine learning (ML) framework that provides ML practitioners a simple way of building, training, and deploying graph ML solutions on industry-scale graph data. Although GraphStorm can run efficiently on single instances for small graphs, it truly shines when scaling to enterprise-level graphs in distributed mode using a cluster of Amazon Elastic Compute Cloud (Amazon EC2) instances or Amazon SageMaker.

Today, AWS AI released GraphStorm v0.4. This release introduces integration with DGL-GraphBolt, a new graph storage and sampling framework that uses a compact graph representation and pipelined sampling to reduce memory requirements and speed up Graph Neural Network (GNN) training and inference. For the large-scale dataset examined in this post, inference is 3.6 times faster and per-epoch training is 1.4 times faster, with even larger speedups possible.

To achieve this, GraphStorm v0.4 with DGL-GraphBolt addresses two crucial challenges of graph learning:

- Memory constraints – GraphStorm v0.4 provides compact and distributed storage of graph structure and features, which can grow into the multi-TB range. For example, a graph with 1 billion nodes, 512 features per node, and 10 billion edges requires more than 4 TB of memory to store, which necessitates distributed computation.

- Graph sampling – In multi-layer GNNs, you need to sample neighbors of each node to propagate their representations. This can lead to exponential growth in the number of nodes sampled, potentially visiting the entire graph for a single node’s representation. GraphStorm v0.4 provides efficient, pipelined graph sampling.
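Both challenges can be made concrete with back-of-envelope arithmetic. The following sketch is illustrative only: the post does not state the data types behind the 4 TB figure, so the 8-byte feature values and 8-byte node IDs are assumptions (with 4-byte features the total would be roughly half), and the fan-out of 10 neighbors per layer is a hypothetical value.

```python
# Back-of-envelope estimates. Data type sizes and fan-outs are assumptions,
# not values taken from GraphStorm or GraphBolt.

TB = 10**12  # decimal terabyte


def feature_bytes(num_nodes, feats_per_node, bytes_per_value):
    """Memory for a dense node feature matrix."""
    return num_nodes * feats_per_node * bytes_per_value


def edge_list_bytes(num_edges, bytes_per_id=8):
    """Memory for a plain edge list: one source and one destination ID per edge."""
    return num_edges * 2 * bytes_per_id


def max_sampled_nodes(fanouts):
    """Upper bound on nodes touched when sampling around ONE seed node.

    Each layer multiplies the frontier by its fan-out, so the bound grows
    exponentially with the number of layers.
    """
    total, frontier = 1, 1
    for fanout in fanouts:
        frontier *= fanout
        total += frontier
    return total


features = feature_bytes(10**9, 512, bytes_per_value=8)  # assumed 8-byte values
edges = edge_list_bytes(10 * 10**9)                      # assumed 8-byte IDs

print(f"features: {features / TB:.3f} TB")             # features: 4.096 TB
print(f"edges:    {edges / TB:.3f} TB")                # edges:    0.160 TB
print(f"total:    {(features + edges) / TB:.3f} TB")   # total:    4.256 TB
print(max_sampled_nodes([10, 10, 10]))                 # 1111
```

Under these assumptions the node features dominate the footprint, and a three-layer model can touch more than a thousand nodes for a single seed node even with a modest fan-out.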

In this post, we demonstrate how GraphBolt enhances GraphStorm’s performance in distributed settings. We provide a hands-on example of using GraphStorm with GraphBolt on SageMaker for distributed training. Lastly, we share how to use Amazon SageMaker Pipelines with GraphStorm.

GraphBolt: Pipeline-driven graph sampling

GraphBolt is a new data loading and graph sampling framework developed by the DGL team. It streamlines the operations needed to sample efficiently from a heterogeneous graph and fetch the corresponding features. GraphBolt introduces a new, more compact graph structure representation for heterogeneous graphs, called fused Compressed Sparse Column (fCSC). This can reduce the memory cost of storing a heterogeneous graph by up to 56%, allowing users to fit larger graphs in memory and potentially use smaller, more cost-efficient instances for GNN model training.
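To give a feel for why a column-compressed layout is compact, here is a minimal sketch of an ordinary Compressed Sparse Column (CSC) structure in plain Python. It is not GraphBolt's fCSC implementation and does not handle node or edge types; the function names are hypothetical. An edge list stores two IDs per edge, whereas CSC stores one ID per edge plus one offset per node, which is smaller whenever the graph has many more edges than nodes.

```python
def coo_to_csc(num_nodes, src, dst):
    """Convert an edge list (src[i] -> dst[i]) into CSC arrays.

    indices[indptr[v]:indptr[v + 1]] holds the in-neighbors of node v.
    """
    # Count incoming edges per destination, shifted by one position.
    indptr = [0] * (num_nodes + 1)
    for d in dst:
        indptr[d + 1] += 1
    # Prefix sum turns the counts into start offsets.
    for v in range(num_nodes):
        indptr[v + 1] += indptr[v]
    # Scatter each source ID into the slot range of its destination.
    indices = [0] * len(src)
    cursor = indptr[:-1].copy()  # next free slot for each destination
    for s, d in zip(src, dst):
        indices[cursor[d]] = s
        cursor[d] += 1
    return indptr, indices


def in_neighbors(indptr, indices, v):
    """Look up the in-neighbors of node v with a single slice."""
    return indices[indptr[v]:indptr[v + 1]]


src = [0, 1, 2, 2, 3]
dst = [1, 2, 0, 1, 1]
indptr, indices = coo_to_csc(4, src, dst)
print(indptr)                             # [0, 1, 4, 5, 5]
print(indices)                            # [2, 0, 2, 3, 1]
print(in_neighbors(indptr, indices, 1))   # [0, 2, 3]
```

Neighbor lookup is a contiguous slice, which is also what makes this layout convenient for neighbor sampling.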

GraphStorm v0.4 seamlessly integrates with GraphBolt, allowing users to take advantage of its performance improvements in their GNN workflows. The user just needs to provide the additional argument --use-graphbolt true when launching graph construction and training jobs.

Solution overview

A common model development process is to perform model exploration locally on a subset of your full data, and when you’re satisfied with the results, train the full-scale model. This setup allows for cheaper exploration before training on the full dataset. GraphStorm and SageMaker Pipelines allow you to do that by creating a model pipeline you can run locally to retrieve model metrics, and when you’re ready, run your pipeline on the full data on SageMaker to produce models, predictions, and graph embeddings for downstream tasks. In the next section, we show how to set up such pipelines for GraphStorm.

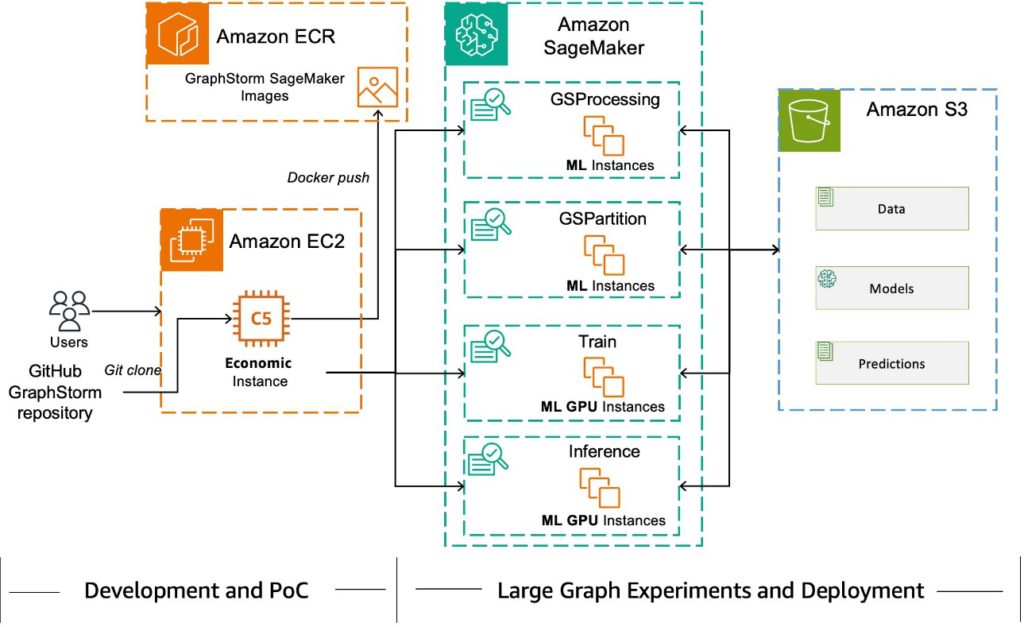

We demonstrate such a setup in the following diagram, where a user can perform model development and initial training on a single EC2 instance, and when they’re ready to train on their full data, hand off the heavy lifting to SageMaker for distributed training. Using SageMaker Pipelines to train models provides several benefits, like reduced costs, auditability, and lineage tracking.

Prerequisites

To run this example, you will need an AWS account, an Amazon SageMaker Studio domain, and the necessary permissions to run bring-your-own-container (BYOC) SageMaker jobs.

Set up the environment for SageMaker distributed training

You will use the example code available in the GraphStorm repository to run through this example.

Setting up your environment should take around 10 minutes. First, set up your Python environment to run the examples:

Build a GraphStorm SageMaker CPU image

Next, build and push the GraphStorm PyTorch Docker image that you will use to run the graph construction, training, and inference jobs for smaller-scale data. Your role will need to be able to pull images from the Amazon ECR Public Gallery and create Amazon Elastic Container Registry (Amazon ECR) repositories and push images to your private ECR registry.

Download and prepare datasets

In this post, we use two citation datasets to demonstrate the scalability of GraphStorm. The Open Graph Benchmark (OGB) project hosts a number of graph datasets that can be used to benchmark the performance of graph learning systems. For a small-scale demo, we use the ogbn-arxiv dataset, and for a demonstration of GraphStorm’s large-scale learning capabilities, we use the ogbn-papers100M dataset.

Prepare the ogbn-arxiv dataset

Download the smaller-scale ogbn-arxiv dataset to run a local test before launching larger-scale SageMaker jobs on AWS. This dataset has approximately 170,000 nodes and 1.2 million edges. Use the following code to download the data and prepare it for GraphStorm:

Use the following script to download, transform, and upload the data directly to Amazon Simple Storage Service (Amazon S3):

This will create the tabular graph data in Amazon S3, which you can verify by running the following code:

Finally, upload GraphStorm training configuration files for arxiv to use for training and inference:

Prepare the ogbn-papers100M dataset on SageMaker

The papers-100M dataset is a large-scale graph dataset, with 111 million nodes and 3.2 billion edges after adding reverse edges.

To download and preprocess the data as an Amazon SageMaker Processing step, use the following code. You can launch and let the job run in the background while proceeding through the rest of the post, and return to this dataset later. The job should take approximately 45 minutes to run.

This will produce the processed data in s3://$BUCKET_NAME/ogb-papers100M-input, which can then be used as input to GraphStorm. While this job is running, you can create the GraphStorm pipelines.

Create a SageMaker pipeline

Run the following command to create a SageMaker pipeline:

Inspect the pipeline

Running the preceding code will create a SageMaker pipeline configured to run three SageMaker jobs in sequence:

- A GConstruct job that converts the tabular file input to a binary partitioned graph on Amazon S3

- A GraphStorm training job that trains a node classification model and saves the model to Amazon S3

- A GraphStorm inference job that produces predictions for all nodes in the test set, and creates embeddings for all nodes

To review the pipeline, navigate to SageMaker AI Studio, choose the domain and user profile you used to create the pipeline, then choose Open Studio.

In the navigation pane, choose Pipelines. There should be a pipeline named ogbn-arxiv-gs-pipeline. Choose the pipeline, which will take you to the Executions tab for the pipeline. Choose Graph to view the pipeline steps.

Run the SageMaker pipeline locally for ogbn-arxiv

The ogbn-arxiv dataset is small enough that you can run the pipeline locally. Run the following command to start a local execution of the pipeline:

We save the log output to arxiv-local-logs.txt. You will use that later to analyze the training speed.

Running the pipeline should take approximately 5 minutes. When the pipeline is complete, it will print a message like the following:

You can inspect the mean epoch and evaluation time using the provided analyze_training_time.py script and the log file you created:

These numbers will vary depending on your instance type; in this case, these are values reported on an m6in.4xlarge instance.

Create a GraphBolt pipeline

Now that you have established a performance baseline, you can create another pipeline that uses the GraphBolt graph representation and compare the results.

You can use the same pipeline creation script, but change two variables, providing a new pipeline name and setting --use-graphbolt to “true”:

Analyzing the training logs, you can see the per-epoch time has dropped somewhat:

For such a small graph, the performance gains are modest, a reduction of around 13% in per-epoch time. With large data, the potential gains are much greater. In the next section, you will create a pipeline and train a model for papers-100M, a citation graph with 111 million nodes and 3.2 billion edges.

Create a SageMaker pipeline for distributed training

After the SageMaker processing job that prepares the papers-100M data has finished processing and the data is stored in Amazon S3, you can set up a pipeline to train a model on that dataset.

Build the GraphStorm GPU image

For this job, you will use large GPU instances, so you will build and push the GPU image this time:

Deploy and run pipelines for papers-100M

Before you deploy your new pipeline, upload the training YAML configuration for papers-100M to Amazon S3:

Now you are ready to deploy your initial pipeline for papers-100M:

Run the pipeline on SageMaker and let it run in the background:

Your account needs to meet the required quotas for the requested instances. For this post, the defaults are set to four ml.g5.48xlarge instances for training jobs and one ml.r5.24xlarge instance for a processing job. To adjust your SageMaker service quotas, you can use the Service Quotas console. To run both pipelines in parallel, that is, one without GraphBolt and one with it, you will need 8 x $TRAIN_GPU_INSTANCE and 2 x $GCONSTRUCT_INSTANCE.
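If you prefer to check quotas programmatically rather than in the console, a sketch along the following lines may help. It assumes a client that behaves like boto3's `service-quotas` client; the helper name `find_quotas` is hypothetical, and the exact quota names that match a given instance type in your account are an assumption you should verify.

```python
def find_quotas(client, needle, service_code="sagemaker"):
    """Return {quota name: value} for quotas whose name contains `needle`.

    `client` is expected to behave like boto3.client("service-quotas"),
    which offers a paginator for the list_service_quotas operation.
    """
    matches = {}
    paginator = client.get_paginator("list_service_quotas")
    for page in paginator.paginate(ServiceCode=service_code):
        for quota in page["Quotas"]:
            if needle in quota["QuotaName"]:
                matches[quota["QuotaName"]] = quota["Value"]
    return matches
```

With AWS credentials configured, calling `find_quotas(boto3.client("service-quotas"), "ml.g5.48xlarge")` should list the applied quotas that mention that instance type, which you can compare against the instance counts above.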

Next, you can deploy and run another pipeline, with GraphBolt enabled:

Compare performance for GraphBolt-enabled training

After both pipelines are complete, which should take approximately 4 hours, you can compare the training times for both cases.

On the Pipelines page of the SageMaker console, there should be two new pipelines named ogb-papers100M-pipeline and ogb-papers100M-graphbolt-pipeline. Choose ogb-papers100M-pipeline, which will take you to the Executions tab for the pipeline. Copy the name of the latest successful execution and use that to run the training analysis script:

Your output will look like the following code:

Now do the same for the GraphBolt-enabled pipeline:

You will see the improved per-epoch and evaluation times:

Without loss in accuracy, the latest version of GraphStorm ran approximately 1.4 times faster per training epoch and 3.6 times faster in evaluation. Depending on the dataset, the speedups can be even greater, as shown by the DGL team’s benchmarking.
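A speedup factor and a percentage reduction in wall-clock time are two views of the same measurement, and it is easy to confuse them. The following sketch converts between the two; the helper names are hypothetical, and the 140-second and 100-second timings are made-up values used only to show the calculation.

```python
def speedup(baseline_seconds, new_seconds):
    """Speedup factor: how many times faster the new run is."""
    return baseline_seconds / new_seconds


def time_reduction_from_speedup(factor):
    """Fraction of wall-clock time saved for a given speedup factor."""
    return 1.0 - 1.0 / factor


# Hypothetical timings, not measurements from this post.
print(speedup(140.0, 100.0))                       # 1.4

# The factors reported above, expressed as time saved.
print(f"{time_reduction_from_speedup(1.4):.1%}")   # 28.6%
print(f"{time_reduction_from_speedup(3.6):.1%}")   # 72.2%
```

Read this way, a 1.4 times speedup means each epoch takes about 29% less time, and a 3.6 times speedup means evaluation takes about 72% less time.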

Conclusion

This post showcased how GraphStorm v0.4, integrated with DGL-GraphBolt, speeds up large-scale GNN training and inference by factors of 1.4 and 3.6, respectively, as measured on the papers-100M dataset. As shown in the DGL benchmarks, even larger speedups are possible depending on the dataset.

We encourage ML practitioners working with large graph data to try GraphStorm. Its low-code interface simplifies building, training, and deploying graph ML solutions on AWS, allowing you to focus on modeling rather than infrastructure.

To get started, visit the GraphStorm documentation and GraphStorm GitHub repository.

About the authors

Theodore Vasiloudis is a Senior Applied Scientist at Amazon Web Services, where he works on distributed machine learning systems and algorithms. He led the development of GraphStorm Processing, the distributed graph processing library for GraphStorm, and is a core developer for GraphStorm. He received his PhD in Computer Science from the KTH Royal Institute of Technology, Stockholm, in 2019.

Xiang Song is a Senior Applied Scientist at Amazon Web Services, where he develops deep learning frameworks including GraphStorm, DGL, and DGL-KE. He led the development of Amazon Neptune ML, a new capability of Neptune that uses graph neural networks for graphs stored in a Neptune graph database. He is now leading the development of GraphStorm, an open source graph machine learning framework for enterprise use cases. He received his PhD in computer systems and architecture from Fudan University, Shanghai, in 2014.

Florian Saupe is a Principal Technical Product Manager at AWS AI/ML research, supporting science teams like the graph machine learning group and ML Systems teams working on large-scale distributed training, inference, and fault resilience. Before joining AWS, Florian led technical product management for automated driving at Bosch, was a strategy consultant at McKinsey & Company, and worked as a control systems and robotics scientist, a field in which he holds a PhD.
