Today, we are excited to announce that the Falcon 3 family of models from TII is available in Amazon SageMaker JumpStart. In this post, we explore how to deploy these models efficiently on Amazon SageMaker AI.

Overview of the Falcon 3 family of models

The Falcon 3 family, developed by Technology Innovation Institute (TII) in Abu Dhabi, represents a significant advancement in open source language models. This collection includes five base models ranging from 1 billion to 10 billion parameters, with a focus on enhancing science, math, and coding capabilities. The family consists of Falcon3-1B-Base, Falcon3-3B-Base, Falcon3-Mamba-7B-Base, Falcon3-7B-Base, and Falcon3-10B-Base along with their instruct variants.

These models showcase innovations such as efficient pre-training techniques, scaling for improved reasoning, and knowledge distillation for better performance in smaller models. Notably, the Falcon3-10B-Base model achieves state-of-the-art performance for models under 13 billion parameters in zero-shot and few-shot tasks. The Falcon 3 family also includes various fine-tuned versions like Instruct models and supports different quantization formats, making them versatile for a wide range of applications.

Currently, SageMaker JumpStart offers the base versions of Falcon3-3B, Falcon3-7B, and Falcon3-10B, along with their corresponding instruct variants, as well as Falcon3-1B-Instruct.

Get started with SageMaker JumpStart

SageMaker JumpStart is a machine learning (ML) hub that can help accelerate your ML journey. With SageMaker JumpStart, you can evaluate, compare, and select pre-trained foundation models (FMs), including Falcon 3 models. These models are fully customizable for your use case with your data.

Deploying a Falcon 3 model through SageMaker JumpStart offers two convenient approaches: using the intuitive SageMaker JumpStart UI or implementing programmatically through the SageMaker Python SDK. Let’s explore both methods to help you choose the approach that best suits your needs.

Deploy Falcon 3 using the SageMaker JumpStart UI

Complete the following steps to deploy Falcon 3 through the JumpStart UI:

- To access SageMaker JumpStart, use one of the following methods:

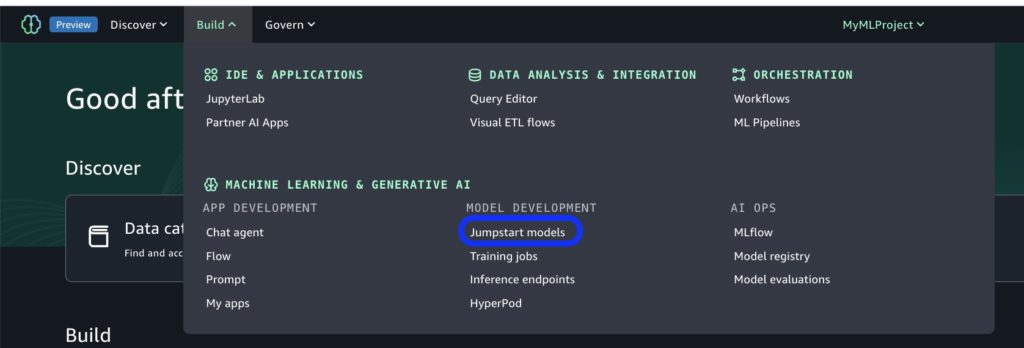

- In Amazon SageMaker Unified Studio, on the Build menu, choose JumpStart models under Model development.

- Alternatively, in Amazon SageMaker Studio, choose JumpStart in the navigation pane.

- In Amazon SageMaker Unified Studio, on the Build menu, choose JumpStart models under Model development.

- Search for Falcon3-10B-Base in the model browser.

- Choose the model and choose Deploy.

- For Instance type, either use the default instance or choose a different instance.

- Choose Deploy.

After some time, the endpoint status will show as InService and you will be able to run inference against it.
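
If you prefer to confirm the status outside the console, the following is a minimal sketch that uses the boto3 SageMaker client. The helper names and the endpoint name in the usage comment are hypothetical; replace the name with the one shown for your deployment.

```python
from typing import Any


def endpoint_status(sm_client: Any, endpoint_name: str) -> str:
    """Return the current status string of an endpoint, such as 'InService'."""
    response = sm_client.describe_endpoint(EndpointName=endpoint_name)
    return response["EndpointStatus"]


def wait_until_in_service(sm_client: Any, endpoint_name: str) -> str:
    """Block until the endpoint is in service, then return its status."""
    # The waiter polls describe_endpoint and raises if creation fails.
    waiter = sm_client.get_waiter("endpoint_in_service")
    waiter.wait(EndpointName=endpoint_name)
    return endpoint_status(sm_client, endpoint_name)


# Usage (requires AWS credentials; the endpoint name is a placeholder):
#   import boto3
#   sm_client = boto3.client("sagemaker")
#   print(wait_until_in_service(sm_client, "my-falcon3-endpoint"))
```

The client is passed in as an argument so that the same helpers work with any configured session or Region.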

Deploy Falcon 3 programmatically using the SageMaker Python SDK

For teams looking to automate deployment or integrate with existing MLOps pipelines, you can use the SageMaker Python SDK:
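
The following is a minimal sketch of such a deployment using the `JumpStartModel` class from the SageMaker Python SDK. The model ID is an assumption, so confirm the exact ID in the JumpStart model catalog. The helper names `build_model_kwargs` and `deploy_falcon3` are illustrative and not part of the SDK.

```python
from typing import Any, Dict, Optional

# Assumption: illustrative JumpStart model ID; verify it in the model catalog.
MODEL_ID = "huggingface-llm-falcon-3-10B-base"


def build_model_kwargs(model_id: str = MODEL_ID,
                       instance_type: Optional[str] = None) -> Dict[str, Any]:
    """Collect the constructor arguments for JumpStartModel."""
    kwargs: Dict[str, Any] = {"model_id": model_id}
    if instance_type is not None:
        # Leave instance_type unset to use the JumpStart default instance.
        kwargs["instance_type"] = instance_type
    return kwargs


def deploy_falcon3(model_id: str = MODEL_ID,
                   instance_type: Optional[str] = None,
                   accept_eula: bool = False) -> Any:
    """Deploy the model to a real-time endpoint and return a predictor."""
    # Imported here so the helper above loads without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(**build_model_kwargs(model_id, instance_type))
    # Pass accept_eula=True only after you have reviewed the model license.
    return model.deploy(accept_eula=accept_eula)


# Usage (creates billable resources; requires AWS credentials and a role):
#   predictor = deploy_falcon3(accept_eula=True)
```

Outside of a SageMaker environment, you would likely also need to pass an execution role to `JumpStartModel` through its `role` argument.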

Run inference on the predictor:
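
The following sketch shows one way to call the endpoint through the predictor's `predict` method. The payload shape (`inputs` plus a `parameters` dictionary) and the `generated_text` response field are assumptions based on the text generation containers commonly used for Hugging Face LLMs in JumpStart; check the example payload on the model card. The helper names are hypothetical.

```python
from typing import Any, Dict


def build_payload(prompt: str, max_new_tokens: int = 128,
                  top_p: float = 0.9, temperature: float = 0.6) -> Dict[str, Any]:
    """Build a text generation request body (assumed payload shape)."""
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "top_p": top_p,
            "temperature": temperature,
        },
    }


def extract_text(response: Any) -> str:
    """Pull the generated text out of the response.

    Assumption: the endpoint returns either a dict or a one-element list of
    dicts that contains a "generated_text" key.
    """
    if isinstance(response, list):
        response = response[0]
    return response["generated_text"]


def generate(predictor: Any, prompt: str, **parameters: Any) -> str:
    """Send a prompt to the endpoint and return the generated text."""
    return extract_text(predictor.predict(build_payload(prompt, **parameters)))


# Usage, with a predictor returned by a JumpStart deployment:
#   print(generate(predictor, "Hello, I'm a language model,", max_new_tokens=64))
```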

If you want to set up the ability to scale down to zero after deployment, refer to Unlock cost savings with the new scale down to zero feature in SageMaker Inference.

Clean up

To clean up the model and endpoint, use the following code:
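
The following is a minimal sketch, assuming `predictor` is the object returned when you deployed the model with the SageMaker Python SDK. The `clean_up` wrapper is a hypothetical helper; `delete_model` and `delete_endpoint` are methods of the SDK predictor.

```python
from typing import Any


def clean_up(predictor: Any) -> None:
    """Delete the model and then the endpoint behind a predictor."""
    # Delete the model first, while the endpoint configuration that
    # references it can still be looked up.
    predictor.delete_model()
    # Deleting the endpoint stops the instance charges.
    predictor.delete_endpoint()


# Usage:
#   clean_up(predictor)
```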

Conclusion

In this post, we explored how SageMaker JumpStart empowers data scientists and ML engineers to discover, access, and run a wide range of pre-trained FMs for inference, including the Falcon 3 family of models. Visit SageMaker JumpStart in SageMaker Studio now to get started. For more information, refer to SageMaker JumpStart pretrained models, Amazon SageMaker JumpStart Foundation Models, and Getting started with Amazon SageMaker JumpStart.

About the authors

Niithiyn Vijeaswaran is a Generative AI Specialist Solutions Architect with the Third-Party Model Science team at AWS. His area of focus is generative AI and AWS AI Accelerators. He holds a Bachelor’s degree in Computer Science and Bioinformatics.

Marc Karp is an ML Architect with the Amazon SageMaker Service team. He focuses on helping customers design, deploy, and manage ML workloads at scale. In his spare time, he enjoys traveling and exploring new places.

Raghu Ramesha is a Senior ML Solutions Architect with the Amazon SageMaker Service team. He focuses on helping customers build, deploy, and migrate ML production workloads to SageMaker at scale. He specializes in machine learning, AI, and computer vision domains, and holds a master’s degree in Computer Science from UT Dallas. In his free time, he enjoys traveling and photography.

Banu Nagasundaram leads product, engineering, and strategic partnerships for SageMaker JumpStart, SageMaker’s machine learning and GenAI hub. She is passionate about building solutions that help customers accelerate their AI journey and unlock business value.
