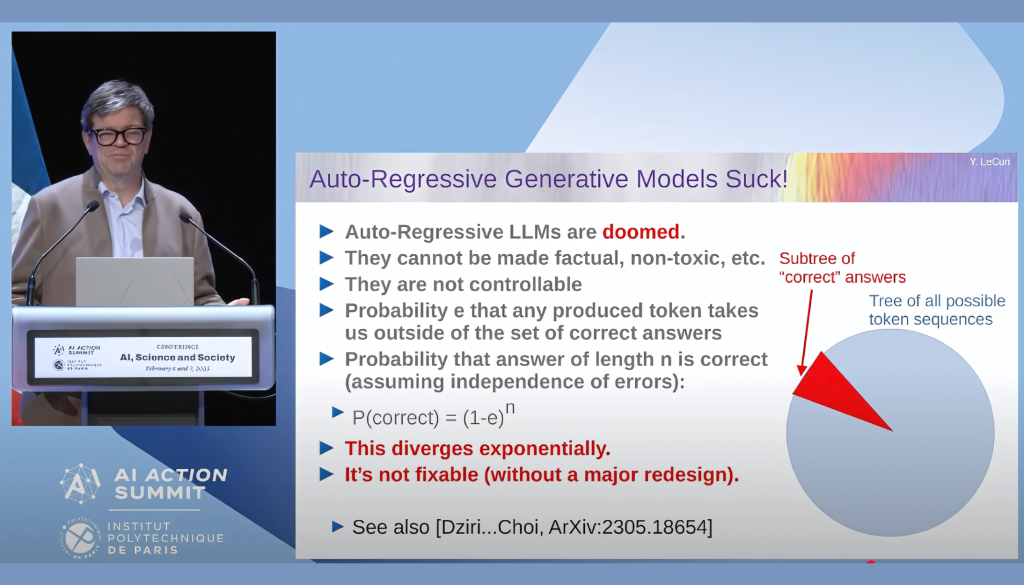

Yann LeCun, Chief AI Scientist at Meta and one of the pioneers of modern AI, recently argued that autoregressive Large Language Models (LLMs) are fundamentally flawed. According to him, the probability of generating a correct response decreases exponentially with each token, making them impractical for long-form, reliable AI interactions.

While I deeply respect LeCun’s work and approach to AI development, and agree with many of his insights, I believe this particular claim overlooks some key aspects of how LLMs function in practice. In this post, I’ll explain why autoregressive models are not inherently divergent and doomed, and how techniques like Chain-of-Thought (CoT) and Attentive Reasoning Queries (ARQs), a method we’ve developed to achieve high-accuracy customer interactions with Parlant, demonstrate otherwise in practice.

What is Autoregression?

At its core, an LLM is a probabilistic model trained to generate text one token at a time. Given an input context, the model predicts a probability distribution over the next token, selects a token from it (for example, the most likely one), appends that token to the sequence, and repeats the process until a stop condition is met. This allows the model to generate anything from short responses to entire articles.
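The loop described above can be sketched in a few lines of Python. This is a toy illustration, not how a production LLM is implemented: `TOY_MODEL`, `next_token_distribution`, and `generate` are hypothetical names, and the "model" here only looks at the last token, whereas a real LLM conditions on the whole context.

```python
import random

# Hypothetical toy "model": maps the last token to a distribution over next tokens.
# A real LLM would compute this distribution from the entire context.
TOY_MODEL = {
    "<s>": {"the": 1.0},
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 1.0},
    "dog": {"sat": 1.0},
    "sat": {"</s>": 1.0},
}


def next_token_distribution(context):
    """Return P(next token | context) for the toy model."""
    return TOY_MODEL[context[-1]]


def generate(prompt, max_new_tokens=10, greedy=True, seed=0):
    """Autoregressive loop: predict, select, append, repeat until a stop condition."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        dist = next_token_distribution(tokens)
        if greedy:
            # Pick the single most likely next token.
            token = max(dist, key=dist.get)
        else:
            # Sample a token in proportion to its probability.
            token = rng.choices(list(dist), weights=list(dist.values()))[0]
        tokens.append(token)  # feed the new token back into the context
        if token == "</s>":   # stop condition: end-of-sequence token
            break
    return tokens


print(generate(["<s>"]))
```

The key point is that every generated token becomes part of the input for the next prediction, which is what both LeCun's argument and the counterargument in this post are about.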

For a deeper dive into autoregression, check out our recent technical blog post.

Do Generation Errors Compound Exponentially?

LeCun’s argument can be unpacked as follows:

- Define C as the set of all possible completions of length N.

- Define A ⊂ C as the subset of acceptable completions, where U = C – A represents the unacceptable ones.

- Let Ci[K] be an in-progress completion of length K that is still acceptable at K, meaning it can still be extended to a full completion Ci[N] ∈ A.

- Assume a constant probability E that the next generated token is an error, one that pushes Ci irrecoverably into U.

- The probability of generating the remaining tokens while keeping Ci in A is then (1 – E)^(N – K).

This leads to LeCun’s conclusion that for sufficiently long responses, the likelihood of maintaining coherence decays exponentially toward zero, suggesting that autoregressive LLMs are inherently flawed.
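To get a feel for how quickly (1 – E)^(N – K) shrinks, here is a small sketch that evaluates it for a few lengths. The value E = 0.01 is an arbitrary illustrative choice, not a measured error rate, and `survival_probability` is a name introduced only for this example.

```python
def survival_probability(error_rate, remaining_tokens):
    """Probability of staying acceptable under the constant-E model: (1 - E)^(N - K)."""
    return (1.0 - error_rate) ** remaining_tokens


# E = 0.01 is an assumed, illustrative per-token error rate.
for remaining in (10, 100, 1000):
    print(remaining, survival_probability(0.01, remaining))
```

Under this assumed 1% per-token error rate, the probability of staying acceptable is roughly 0.90 after 10 tokens, 0.37 after 100, and about 0.00004 after 1,000. The decay is real, but only if the model's assumptions hold.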

But here’s the problem: E is not constant.

To put it simply, LeCun’s argument assumes that every new token carries the same probability of a mistake, independent of what came before, and that a mistake can never be undone. However, LLMs don’t work that way.

As an analogy, imagine you’re telling a story: if you make a mistake in one sentence, you can still correct it in the next one to keep the narrative coherent. The same applies to LLMs, especially when techniques like Chain-of-Thought (CoT) prompting guide them toward better reasoning by helping them reassess their own outputs along the way.

Why This Assumption is Flawed

LLMs exhibit self-correction properties that prevent them from spiraling into incoherence.

Take Chain-of-Thought (CoT) prompting, which encourages the model to generate intermediate reasoning steps. CoT allows the model to consider multiple perspectives, improving its ability to converge to an acceptable answer. Similarly, Chain-of-Verification (CoV) and structured feedback mechanisms like ARQs guide the model in reinforcing valid outputs and discarding erroneous ones.

A small mistake early on in the generation process doesn’t necessarily doom the final answer. Figuratively speaking, an LLM can double-check its work, backtrack, and correct errors on the go.
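One way to see why recoverability matters is a toy two-state model: at each token the completion is either on track or off track, it goes off track with probability `error_rate`, and it gets back on track with probability `recovery_rate`. This is an illustrative sketch only. The recovery rate is a hypothetical parameter, not a measured property of any LLM, and `prob_on_track` is a name made up for this example.

```python
def prob_on_track(error_rate, recovery_rate, steps):
    """Probability of being on track after `steps` tokens in a two-state toy model.

    recovery_rate = 0 reproduces the no-recovery assumption, (1 - E)^steps.
    """
    p_ok = 1.0  # start on track
    for _ in range(steps):
        # Stay on track without erring, or recover from being off track.
        p_ok = p_ok * (1.0 - error_rate) + (1.0 - p_ok) * recovery_rate
    return p_ok


# Assumed, illustrative parameters: 1% error rate, 20% chance of recovering per token.
for steps in (10, 100, 1000):
    print(steps, prob_on_track(0.01, 0.0, steps), prob_on_track(0.01, 0.2, steps))
```

With no recovery, the probability collapses toward zero as before. With the assumed 20% recovery rate, it instead levels off near `recovery_rate / (error_rate + recovery_rate)`, about 0.95 here, no matter how long the completion gets. The sketch does not show that LLMs recover at any particular rate; it only shows that the exponential collapse depends entirely on the assumption that errors are irreversible.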

Attentive Reasoning Queries (ARQs) are a Game-Changer

At Parlant, we’ve taken this principle further in our work on Attentive Reasoning Queries. A research paper describing our results is currently in the works, but the implementation pattern can be explored in our open-source codebase. ARQs introduce reasoning blueprints that help the model maintain coherence throughout long completions by dynamically refocusing attention on key instructions at strategic points in the completion process, which keeps the model from drifting into incoherence. Using them, we’ve been able to maintain a large test suite that exhibits close to 100% consistency in generating correct completions for complex tasks.

This technique allows us to achieve much higher accuracy in AI-driven reasoning and instruction-following, which has been critical for us in enabling reliable and aligned customer-facing applications.
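To make the general idea of a reasoning blueprint more concrete, here is a minimal sketch of a prompt that asks the model to restate the relevant instructions and check a draft against them before committing to a final reply. It is not Parlant's actual implementation or prompt format: `build_blueprint_prompt`, the field names, and the example instruction are all hypothetical, and no model is called.

```python
import json


def build_blueprint_prompt(instructions, user_message):
    """Build a prompt whose output format forces the model to revisit key instructions.

    Hypothetical sketch: the fields below are illustrative, not Parlant's schema.
    """
    # Ordered fields: the model must fill the earlier ones before the final reply,
    # so the key instructions are restated close to where the reply is generated.
    blueprint = {
        "active_instructions": "<restate the instructions that apply to this message>",
        "instructions_at_risk": "<which instructions could this reply violate?>",
        "draft_reply": "<write a draft reply>",
        "draft_follows_instructions": "<true or false, with a short reason>",
        "final_reply": "<the reply to send>",
    }
    lines = ["Instructions:"]
    lines += [f"{i}. {text}" for i, text in enumerate(instructions, start=1)]
    lines += [
        "",
        f"Customer message: {user_message}",
        "",
        "Fill in every field of this JSON object, in order:",
        json.dumps(blueprint, indent=2),
    ]
    return "\n".join(lines)


print(build_blueprint_prompt(["Never promise refunds."], "Can I get my money back?"))
```

The design choice being illustrated is ordering: because generation is autoregressive, whatever the model writes in the earlier fields becomes context for the later ones, so restating the instructions just before the final reply keeps them in focus.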

Autoregressive Models Are Here to Stay

We think autoregressive LLMs are far from doomed. While long-form coherence is a challenge, assuming an exponentially compounding error rate ignores key mechanisms that mitigate divergence—from Chain-of-Thought reasoning to structured reasoning like ARQs.

If you’re interested in AI alignment and increasing the accuracy of chat agents using LLMs, feel free to explore Parlant’s open-source effort. Let’s continue refining how LLMs generate and structure knowledge.

Disclaimer: The views and opinions expressed in this guest article are those of the author and do not necessarily reflect the official policy or position of Marktechpost.

The post Are Autoregressive LLMs Really Doomed? A Commentary on Yann LeCun’s Recent Keynote at AI Action Summit appeared first on MarkTechPost.
