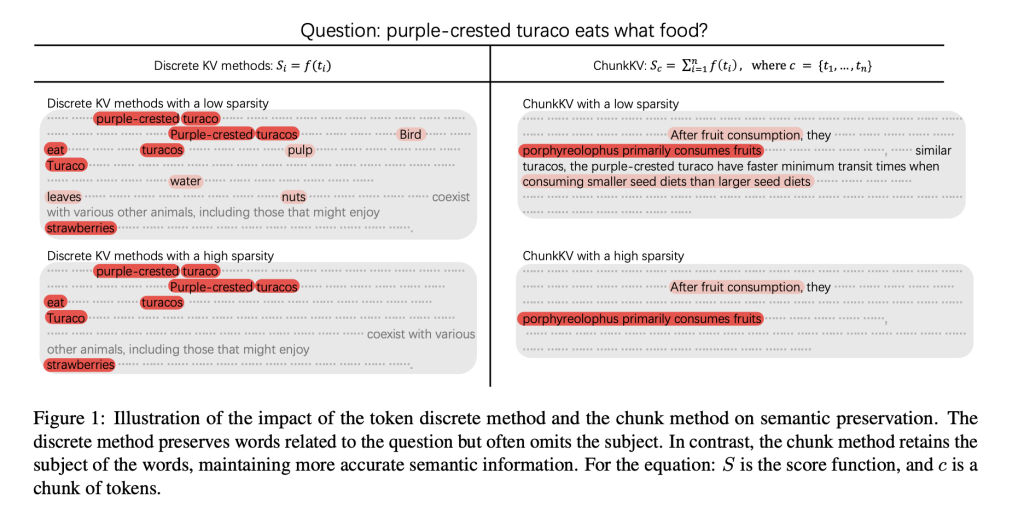

Efficient long-context inference with LLMs requires managing substantial GPU memory due to the high storage demands of key-value (KV) caching. Traditional KV cache compression techniques reduce memory usage by selectively pruning less significant tokens, often based on attention scores. However, existing methods assess token importance independently, overlooking the crucial dependencies among tokens for preserving semantic coherence. For example, a model may retain key subject-related words while discarding contextually significant terms, leading to information loss. This limitation highlights the need for a more structured approach to KV cache compression that considers token relationships and semantic integrity.

Recent research has explored dynamic KV cache compression strategies to optimize memory usage without compromising performance. Methods like H2O and SnapKV employ attention-based evaluation to selectively retain critical tokens while chunking approaches organize text into semantically meaningful segments. Chunking has been widely used in NLP for pre-training and retrieval-based tasks, ensuring contextual consistency. Additionally, layer-wise techniques such as LISA and DoLa enhance model efficiency by leveraging structural insights from different transformer layers. While these advancements improve memory efficiency, incorporating token dependency awareness in KV cache compression can further enhance long-context retention and inference quality in LLMs.

Researchers from Hong Kong University introduced ChunkKV, a KV cache compression method that groups tokens into meaningful chunks rather than evaluating them individually. This approach preserves essential semantic information while reducing memory overhead. Additionally, layer-wise index reuse further optimizes computational efficiency. Evaluated on benchmarks like LongBench, Needle-In-A-Haystack, GSM8K, and JailbreakV, ChunkKV demonstrated superior performance, improving accuracy by up to 10% under aggressive compression. Compared to existing methods, ChunkKV effectively retains contextual meaning and enhances efficiency, establishing it as a robust solution for long-context inference in large language models.

With the increasing context length of LLMs, KV cache compression is crucial for efficient inference, as it consumes substantial GPU memory. ChunkKV is an approach that retains semantically rich token chunks, reducing memory usage while preserving critical information. It segments tokens into meaningful groups and selects the most informative chunks using attention scores. A layer-wise index reuse method optimizes efficiency by sharing compressed indices across layers. Experimental results show that ChunkKV significantly improves index similarity across layers compared to previous methods like SnapKV. This structured KV retention aligns with in-context learning principles, maintaining semantic coherence while optimizing memory usage.

The study evaluates ChunkKV’s effectiveness in KV cache compression across two benchmarks: In-Context Learning (ICL) and Long-Context tasks. For ICL, the study tests GSM8K, Many-Shot GSM8K, and JailbreakV using models like LLaMA-3.1-8B-Instruct and DeepSeek-R1-Distill-Llama-8B. ChunkKV consistently outperforms other methods in maintaining accuracy across various compression ratios. For Long-Context, the study assesses LongBench and Needle-In-A-Haystack (NIAH), showing ChunkKV’s superior performance preserving crucial information. Additionally, index reuse experiments demonstrate improved efficiency, reducing latency and increasing throughput on an A40 GPU. Overall, results confirm ChunkKV’s capability to optimize KV cache compression while maintaining model effectiveness across different contexts and architectures.

In conclusion, the study examines the impact of chunk size on ChunkKV’s performance, maintaining the same experimental settings as LongBench. Results indicate minimal performance variation across chunk sizes, with 10–20 yielding the best outcomes. Extensive evaluations across LongBench and NIAH confirm that a chunk size of 10 optimally balances semantic preservation and compression efficiency. ChunkKV effectively reduces KV cache memory usage while retaining crucial information. Additionally, the layer-wise index reuse technique enhances computational efficiency, reducing latency by 20.7% and improving throughput by 26.5%. These findings establish ChunkKV as an efficient KV cache compression method for deploying LLMs.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 75k+ ML SubReddit.

The post ChunkKV: Optimizing KV Cache Compression for Efficient Long-Context Inference in LLMs appeared first on MarkTechPost.

Source: Read MoreÂ

Recommended Open-Source AI Platform: ‘IntellAgent is a An Open-Source Multi-Agent Framework to Evaluate Complex Conversational AI System’ (Promoted)

Recommended Open-Source AI Platform: ‘IntellAgent is a An Open-Source Multi-Agent Framework to Evaluate Complex Conversational AI System’ (Promoted)