Despite recent advancements, generative video models still struggle to represent motion realistically. Many existing models focus primarily on pixel-level reconstruction, often leading to inconsistencies in motion coherence. These shortcomings manifest as unrealistic physics, missing frames, or distortions in complex motion sequences. For example, models may struggle with depicting rotational movements or dynamic actions like gymnastics and object interactions. Addressing these issues is essential for improving the realism of AI-generated videos, particularly as their applications expand into creative and professional domains.

Meta AI presents VideoJAM, a framework designed to introduce a stronger motion representation in video generation models. By encouraging a joint appearance-motion representation, VideoJAM improves the consistency of generated motion. Unlike conventional approaches that treat motion as a secondary consideration, VideoJAM integrates it directly into both the training and inference processes. This framework can be incorporated into existing models with minimal modifications, offering an efficient way to enhance motion quality without altering training data.

Technical Approach and Benefits

VideoJAM consists of two primary components:

- Training Phase: An input video (x1) and its corresponding motion representation (d1) are both noised and embedded into a single joint latent representation using a linear layer (Win+). A diffusion model then processes this representation, and two linear projection layers (Wout+) predict the appearance and motion components from it. Training the model to predict both signals from one shared representation helps balance appearance fidelity with motion coherence, mitigating the trade-off common in previous models.

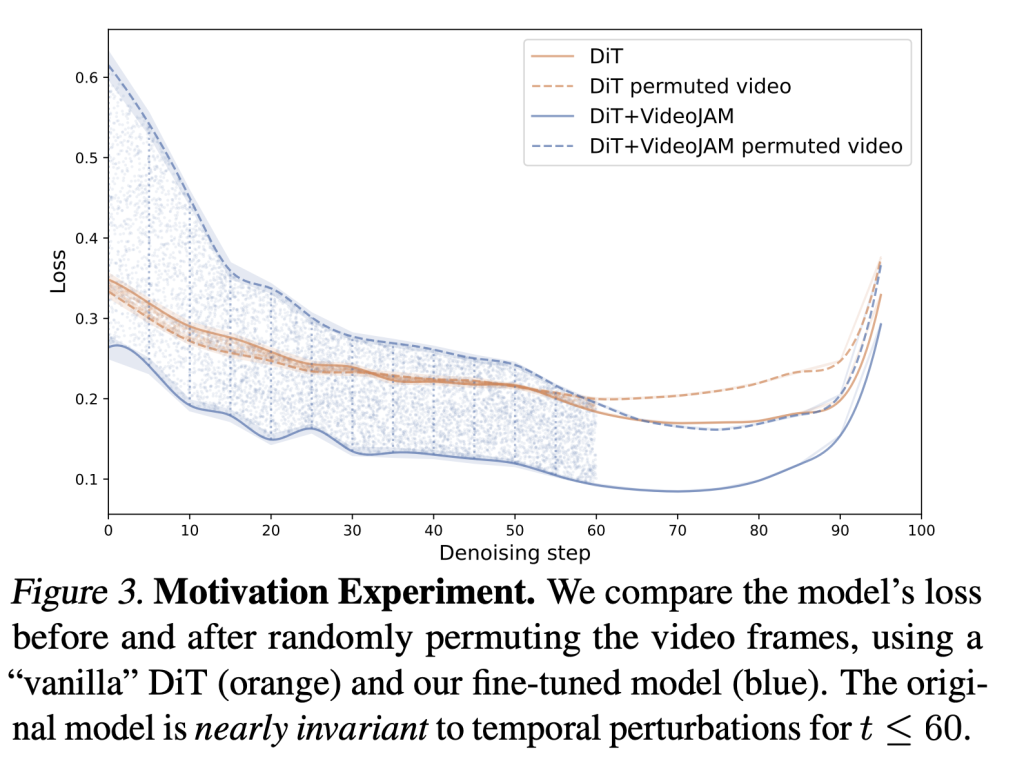

- Inference Phase (Inner-Guidance Mechanism): During inference, VideoJAM introduces Inner-Guidance, where the model utilizes its own evolving motion predictions to guide video generation. Unlike conventional techniques that rely on fixed external signals, Inner-Guidance allows the model to adjust its motion representation dynamically, leading to smoother and more natural transitions between frames.
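The Inner-Guidance idea can be illustrated with a small sketch. The article does not give the exact formula, so the combination rule below is an assumption modeled on classifier-free guidance with two conditioning signals: the text prompt and the model's own noisy motion prediction. The function name, argument names, and weights are all hypothetical, and the arrays stand in for three forward passes of the same model.

```python
import numpy as np

def inner_guidance_combine(pred_full, pred_no_text, pred_no_motion,
                           w_text, w_motion):
    """Combine three predictions in a classifier-free-guidance style.

    pred_full:      prediction with both text and motion signals present
    pred_no_text:   prediction with the text prompt dropped
    pred_no_motion: prediction with the motion signal dropped
    The rule is an assumed form, not taken from the article.
    """
    return ((1.0 + w_text + w_motion) * pred_full
            - w_text * pred_no_text
            - w_motion * pred_no_motion)

# Toy values standing in for model outputs at one sampling step.
pred_full = np.array([1.0, 2.0, 3.0])
pred_no_text = np.array([0.5, 1.5, 2.5])
pred_no_motion = np.array([0.8, 1.8, 2.8])

# Hypothetical guidance weights chosen only for illustration.
guided = inner_guidance_combine(pred_full, pred_no_text, pred_no_motion,
                                w_text=2.0, w_motion=1.0)
print(guided)
```

Because the motion signal is re-predicted by the model at every sampling step, the motion term in this combination changes as generation proceeds, which is what distinguishes it from guidance on a fixed external condition.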

Insights

Evaluations of VideoJAM indicate notable improvements in motion coherence across different types of videos. Key findings include:

- Enhanced Motion Representation: Compared to established models like Sora and Kling, VideoJAM reduces artifacts such as frame distortions and unnatural object deformations.

- Improved Motion Fidelity: VideoJAM consistently achieves higher motion coherence scores in both automated assessments and human evaluations.

- Versatility Across Models: The framework integrates effectively with various pre-trained video models, demonstrating its adaptability without requiring extensive retraining.

- Efficient Implementation: VideoJAM enhances video quality using only two additional linear layers, making it a lightweight and practical solution.
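To make the "linear layers only" point concrete, here is a minimal NumPy sketch of the joint input embedding and the appearance and motion output projections described in the training phase. All sizes, variable names, and the `np.tanh` stand-in for the diffusion backbone are assumptions made for illustration; this is not the authors' implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: tokens per clip, latent channels per token, model width.
num_tokens, latent_dim, model_dim = 16, 8, 32

# Input layer: embeds the concatenated noisy appearance and motion latents.
W_in = rng.normal(size=(2 * latent_dim, model_dim)) * 0.02
# Output projections: one head for appearance, one for motion.
W_out_appearance = rng.normal(size=(model_dim, latent_dim)) * 0.02
W_out_motion = rng.normal(size=(model_dim, latent_dim)) * 0.02

def joint_forward(x_noisy, d_noisy, backbone):
    """Embed both signals jointly, run the backbone, predict both outputs."""
    joint = np.concatenate([x_noisy, d_noisy], axis=-1)  # (tokens, 2 * latent)
    hidden = backbone(joint @ W_in)                       # (tokens, model_dim)
    appearance_pred = hidden @ W_out_appearance           # (tokens, latent)
    motion_pred = hidden @ W_out_motion                   # (tokens, latent)
    return appearance_pred, motion_pred

x_noisy = rng.normal(size=(num_tokens, latent_dim))  # noised video latent
d_noisy = rng.normal(size=(num_tokens, latent_dim))  # noised motion latent

# np.tanh is only a placeholder for the diffusion backbone.
appearance_pred, motion_pred = joint_forward(x_noisy, d_noisy, backbone=np.tanh)
print(appearance_pred.shape, motion_pred.shape)
```

The backbone itself is untouched in this sketch; only the projections around it change, which is why the approach can be attached to an existing pre-trained model.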

Conclusion

VideoJAM provides a structured approach to improving motion coherence in AI-generated videos by integrating motion as a key component rather than an afterthought. By leveraging a joint appearance-motion representation and Inner-Guidance mechanism, the framework enables models to generate videos with greater temporal consistency and realism. With minimal architectural modifications required, VideoJAM offers a practical means to refine motion quality in generative video models, making them more reliable for a range of applications.

All credit for this research goes to the researchers of this project.

The post Meta AI Introduces VideoJAM: A Novel AI Framework that Enhances Motion Coherence in AI-Generated Videos appeared first on MarkTechPost.
