Despite progress in AI-driven human animation, existing models often face limitations in motion realism, adaptability, and scalability. Many models struggle to generate fluid body movements and rely on filtered training datasets, restricting their ability to handle varied scenarios. Facial animation has seen improvements, but full-body animations remain challenging due to inconsistencies in gesture accuracy and pose alignment. Additionally, many frameworks are constrained by specific aspect ratios and body proportions, limiting their applicability across different media formats. Addressing these challenges requires a more flexible and scalable approach to motion learning.

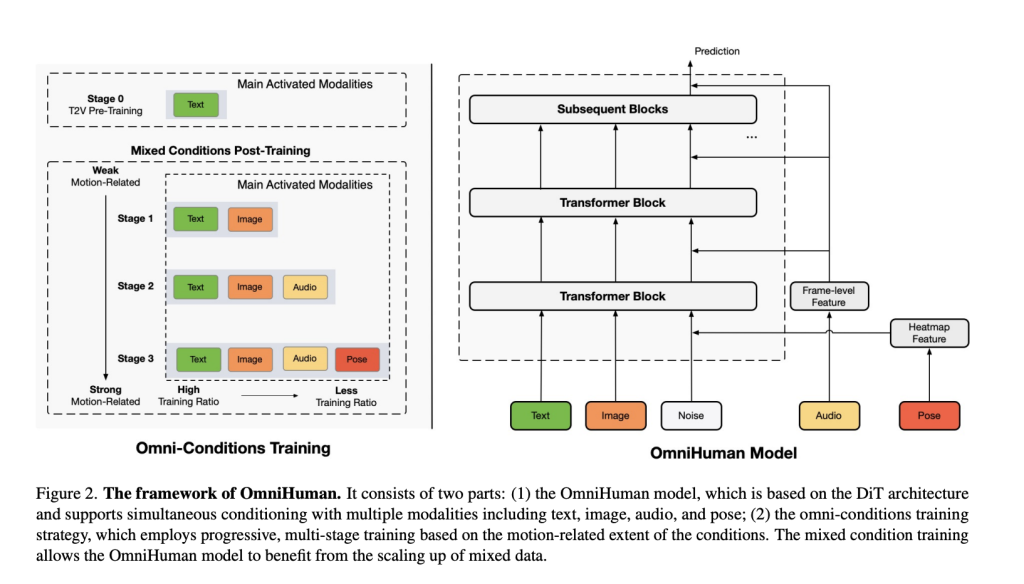

ByteDance has introduced OmniHuman-1, a Diffusion Transformer-based AI model capable of generating realistic human videos from a single image and motion signals, including audio, video, or a combination of both. Unlike previous methods that focus on portrait or static body animations, OmniHuman-1 incorporates omni-conditions training, enabling it to scale motion data effectively and improve gesture realism, body movement, and human-object interactions.

OmniHuman-1 supports multiple forms of motion input:

- Audio-driven animation, generating synchronized lip movements and gestures from speech input.

- Video-driven animation, replicating motion from a reference video.

- Multimodal fusion, combining both audio and video signals for precise control over different body parts.

Its ability to handle various aspect ratios and body proportions makes it a versatile tool for applications requiring human animation, setting it apart from prior models.

Technical Foundations and Advantages

OmniHuman-1 employs a Diffusion Transformer (DiT) architecture, integrating multiple motion-related conditions to enhance video generation. Key innovations include:

- Multimodal Motion Conditioning: Incorporating text, audio, and pose conditions during training, allowing it to generalize across different animation styles and input types.

- Scalable Training Strategy: Unlike traditional methods that discard significant data due to strict filtering, OmniHuman-1 makes use of data with both strong and weak motion conditions, so clips that fail strict filters can still contribute to training, achieving high-quality animation from minimal input.

- Omni-Conditions Training: The training strategy follows two principles:

  - Stronger conditioned tasks (e.g., pose-driven animation) leverage weaker conditioned data (e.g., text- and audio-driven motion) to improve data diversity.

  - Training ratios are adjusted so that weaker conditions receive higher emphasis, balancing generalization across modalities.

- Realistic Motion Generation: OmniHuman-1 excels at co-speech gestures, natural head movements, and detailed hand interactions, making it particularly effective for virtual avatars, AI-driven character animation, and digital storytelling.

- Versatile Style Adaptation: The model is not confined to photorealistic outputs; it supports cartoon, stylized, and anthropomorphic character animations, broadening its creative applications.
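The two omni-conditions principles can be illustrated with a small sampling routine. This is a minimal sketch under stated assumptions, not ByteDance's implementation: the ratios in `CONDITION_RATIOS`, the `Clip` schema, and the independent per-step sampling are hypothetical choices for illustration. It shows how giving stronger conditions lower ratios makes weaker conditions appear more often, and how clips lacking usable audio or pose still contribute through the conditions they do have.

```python
import random
from dataclasses import dataclass

# Hypothetical training ratios, listed from weakest to strongest condition.
# Following the stated principle: the stronger the condition, the lower its ratio.
CONDITION_RATIOS = {"text": 1.0, "audio": 0.5, "pose": 0.25}


@dataclass(frozen=True)
class Clip:
    """A training clip and the conditions it has usable data for (hypothetical schema)."""
    clip_id: str
    available: frozenset  # e.g. frozenset({"text", "audio"})


def sample_active_conditions(clip: Clip, rng: random.Random) -> set:
    """Choose which conditions to feed the model for one clip at one training step.

    A condition is used only if the clip has valid data for it and a uniform
    draw in [0, 1) falls below that condition's ratio. Clips that would fail a
    strict lip-sync or pose filter are not discarded; they still train the
    weaker conditions they do support.
    """
    return {
        name
        for name, ratio in CONDITION_RATIOS.items()
        if name in clip.available and rng.random() < ratio
    }


def condition_frequencies(clips, steps: int, seed: int = 0) -> dict:
    """Fraction of (clip, step) pairs in which each condition was active."""
    rng = random.Random(seed)
    counts = {name: 0 for name in CONDITION_RATIOS}
    for _ in range(steps):
        for clip in clips:
            for name in sample_active_conditions(clip, rng):
                counts[name] += 1
    total = steps * len(clips)
    return {name: count / total for name, count in counts.items()}


if __name__ == "__main__":
    clips = [
        Clip("full_annotations", frozenset({"text", "audio", "pose"})),
        Clip("pose_estimation_failed", frozenset({"text", "audio"})),
        Clip("poor_lip_sync", frozenset({"text"})),  # would be dropped by a strict lip-sync filter
    ]
    # Long-run expectation for these clips: text = 1.0, audio ~ 1/3, pose ~ 1/12.
    print(condition_frequencies(clips, steps=10_000))
```

With these illustrative clips, the text condition is active on every step, audio on roughly a third of clip-steps, and pose on roughly a twelfth, so the model sees weak conditions far more often while no clip is thrown away.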

Performance and Benchmarking

OmniHuman-1 has been evaluated against leading animation models, including Loopy, CyberHost, DiffTED, and DiffGest, and comes out ahead on most of the metrics below (CyberHost posts the higher lip-sync score):

- Lip-sync accuracy (higher is better):

  - OmniHuman-1: 5.255

  - Loopy: 4.814

  - CyberHost: 6.627

- Fréchet Video Distance (FVD) (lower is better):

  - OmniHuman-1: 15.906

  - Loopy: 16.134

  - DiffTED: 58.871

- Gesture expressiveness, measured by hand keypoint variance (HKV) (higher is better):

  - OmniHuman-1: 47.561

  - CyberHost: 24.733

  - DiffGest: 23.409

- Hand keypoint confidence (HKC) (higher is better):

  - OmniHuman-1: 0.898

  - CyberHost: 0.884

  - DiffTED: 0.769
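Because some of these metrics reward higher values and FVD rewards lower ones, a short script can make the comparison explicit. The sketch below reuses only the numbers listed above; the dictionary layout and helper names are illustrative, and treating a higher HKV as better is an assumption based on its use as an expressiveness measure.

```python
# Scores exactly as listed in the article: metric -> (higher_is_better, {model: score}).
# Assumption: a higher HKV (hand keypoint variance) counts as better.
RESULTS = {
    "Lip-sync accuracy": (True, {"OmniHuman-1": 5.255, "Loopy": 4.814, "CyberHost": 6.627}),
    "FVD": (False, {"OmniHuman-1": 15.906, "Loopy": 16.134, "DiffTED": 58.871}),
    "HKV": (True, {"OmniHuman-1": 47.561, "CyberHost": 24.733, "DiffGest": 23.409}),
    "HKC": (True, {"OmniHuman-1": 0.898, "CyberHost": 0.884, "DiffTED": 0.769}),
}


def best_model(higher_is_better: bool, scores: dict) -> str:
    """Return the model with the best score, respecting the metric's direction."""
    pick = max if higher_is_better else min
    return pick(scores, key=scores.get)


def relative_gain(higher_is_better: bool, ours: float, theirs: float) -> float:
    """Percent improvement of `ours` over `theirs`; negative means `ours` is worse."""
    diff = ours - theirs if higher_is_better else theirs - ours
    return 100.0 * diff / theirs


def summarize(results: dict, model: str = "OmniHuman-1") -> dict:
    """Map each metric to (best model, {baseline: percent gain of `model` over it})."""
    summary = {}
    for metric, (higher, scores) in results.items():
        gains = {
            other: relative_gain(higher, scores[model], score)
            for other, score in scores.items()
            if other != model
        }
        summary[metric] = (best_model(higher, scores), gains)
    return summary


if __name__ == "__main__":
    for metric, (best, gains) in summarize(RESULTS).items():
        gain_text = ", ".join(f"{name}: {g:+.2f}%" for name, g in gains.items())
        print(f"{metric}: best = {best}; OmniHuman-1 vs {gain_text}")
```

On the listed figures, this reports OmniHuman-1 as best on FVD, HKV, and HKC (about 1.4% lower FVD than Loopy), while CyberHost leads on lip-sync, where OmniHuman-1's score is about 20.7% lower.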

Ablation studies further confirm the importance of balancing pose, reference image, and audio conditions in training to achieve natural and expressive motion generation. The model’s ability to generalize across different body proportions and aspect ratios gives it a distinct advantage over existing approaches.

Conclusion

OmniHuman-1 represents a significant step forward in AI-driven human animation. By integrating omni-conditions training and leveraging a DiT-based architecture, ByteDance has developed a model that effectively bridges the gap between static image input and dynamic, lifelike video generation. Its capacity to animate human figures from a single image using audio, video, or both makes it a valuable tool for virtual influencers, digital avatars, game development, and AI-assisted filmmaking.

As AI-generated human videos become more sophisticated, OmniHuman-1 highlights a shift toward more flexible, scalable, and adaptable animation models. By addressing long-standing challenges in motion realism and training scalability, it lays the groundwork for further advancements in generative AI for human animation.

Check out the Paper and Project Page. All credit for this research goes to the researchers of this project.

The post ByteDance Proposes OmniHuman-1: An End-to-End Multimodality Framework Generating Human Videos based on a Single Human Image and Motion Signals appeared first on MarkTechPost.
