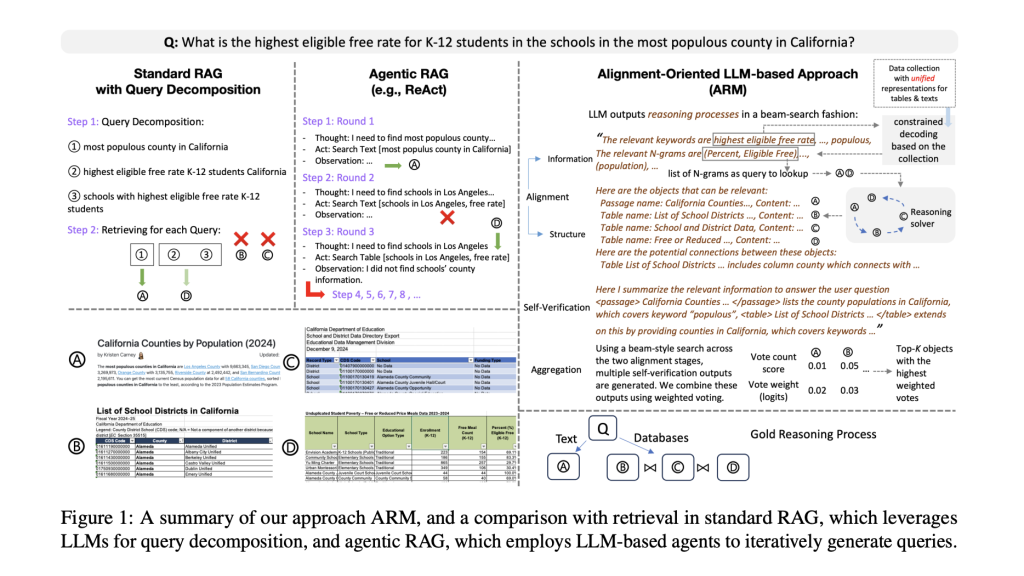

Answering open-domain questions in real-world scenarios is challenging, as relevant information is often scattered across diverse sources, including text, databases, and images. While LLMs can break down complex queries into simpler steps to improve retrieval, they usually fail to account for how data is structured, leading to suboptimal results. Agentic RAG introduces iterative retrieval, refining searches based on prior results. However, this approach is inefficient, as queries are guided by past retrievals rather than data organization. Additionally, it lacks joint optimization, making it prone to reasoning derailment, where errors in early steps cascade into incorrect decisions, increasing computational costs.

Researchers from MIT, AWS AI, and the University of Pennsylvania introduced ARM, an LLM-based retrieval method designed to enhance complex question answering by aligning queries with the structure of available data. Unlike conventional approaches, ARM explores relationships between data objects rather than relying solely on semantic matching, enabling a retrieve-all-at-once solution. Evaluated on the Bird and OTT-QA datasets, ARM outperformed standard RAG and agentic RAG by up to 5.2 and 15.9 points in execution accuracy on Bird, respectively, and by up to 5.5 and 19.3 points in F1 score on OTT-QA. The authors attribute these gains to structured reasoning and alignment verification during retrieval.

The alignment-driven LLM retrieval framework integrates retrieval and reasoning within a unified decoding process, optimizing it through beam search. Unlike conventional methods that treat retrieval and reasoning as separate steps, ARM lets the LLM dynamically retrieve relevant data objects while incorporating structured data, a reasoning solver, and self-verification. Since LLMs lack direct access to structured data, the authors frame retrieval as a generative task, where the model formulates reasoning to identify essential data objects. This process involves iterative decoding with three key components: information alignment, structure alignment, and self-verification, which together are meant to keep the retrieval logically consistent and accurate.
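To make the beam-search idea concrete, here is a minimal sketch of generic beam search over a toy transition table. It is not ARM's decoder: the `TOY_NEXT` table, the object names, and the probabilities are all hypothetical stand-ins for whatever scores an LLM would assign while decoding.

```python
import math
from typing import Callable, Dict, List, Tuple

Sequence = Tuple[str, ...]

def beam_search(
    start: str,
    expand: Callable[[Sequence], Dict[str, float]],
    beam_width: int,
    max_steps: int,
) -> List[Tuple[Sequence, float]]:
    """Keep the `beam_width` highest-scoring partial sequences at each step.

    `expand` maps a partial sequence to {next_item: probability}.
    Scores are summed log-probabilities.
    """
    beams: List[Tuple[Sequence, float]] = [((start,), 0.0)]
    for _ in range(max_steps):
        candidates: List[Tuple[Sequence, float]] = []
        for seq, score in beams:
            options = expand(seq)
            if not options:
                # Nothing left to add: carry the finished sequence forward.
                candidates.append((seq, score))
                continue
            for item, prob in options.items():
                candidates.append((seq + (item,), score + math.log(prob)))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams

# Hypothetical transition probabilities between data objects (illustration only).
TOY_NEXT: Dict[str, Dict[str, float]] = {
    "question": {"table:players": 0.6, "passage:teams": 0.4},
    "table:players": {"table:teams": 0.7, "passage:bio": 0.3},
    "passage:teams": {"table:teams": 0.9, "passage:bio": 0.1},
}

def toy_expand(seq: Sequence) -> Dict[str, float]:
    return TOY_NEXT.get(seq[-1], {})

results = beam_search("question", toy_expand, beam_width=2, max_steps=2)
for seq, score in results:
    print(" -> ".join(seq), round(math.exp(score), 2))
```

With a beam width of 2, the search keeps two competing retrieval paths alive instead of committing to the first choice, which is the property that lets a decoder recover from a locally attractive but globally worse early step.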

Textual data is indexed as N-grams and embeddings to enhance retrieval accuracy, enabling constrained beam decoding for precise alignment. Information alignment extracts key terms and retrieves relevant objects using BM25 scoring and embedding-based similarity. Structure alignment refines these objects through an optimization model, ensuring logical coherence. Finally, self-verification allows the LLM to validate and integrate selected objects within a structured reasoning framework. Multiple drafts are generated through controlled object expansion, and beam search aggregation prioritizes the most confident selections, ensuring high-quality, contextually relevant responses from diverse data sources.
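The lexical side of information alignment can be illustrated with a small, self-contained sketch of N-gram extraction and Okapi BM25 scoring. This is a textbook BM25 variant, not the paper's implementation: the serialized objects in `docs`, the keyword list, and the parameter defaults `k1=1.5` and `b=0.75` are assumptions chosen for illustration.

```python
import math
from collections import Counter
from typing import List

def ngrams(tokens: List[str], n: int) -> List[str]:
    """Return all contiguous n-grams of a token list as space-joined strings."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def bm25_scores(
    query_tokens: List[str],
    docs: List[List[str]],
    k1: float = 1.5,
    b: float = 0.75,
) -> List[float]:
    """Score each tokenized document against the query with Okapi BM25."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: in how many documents each term appears.
    df: Counter = Counter()
    for d in docs:
        df.update(set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query_tokens:
            if term not in tf:
                continue
            # The "+ 1" inside the log keeps the IDF non-negative.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

# Hypothetical serialized data objects (two tables and one passage).
docs = [
    "players table name team goals".split(),
    "teams table team city stadium".split(),
    "passage about weather in paris".split(),
]
keywords = ["city", "team", "stadium"]  # assumed to be extracted from a question

scores = bm25_scores(keywords, docs)
best = max(range(len(docs)), key=lambda i: scores[i])
print(best, [round(s, 3) for s in scores])
print(ngrams(docs[1], 2))
```

In a fuller pipeline these lexical scores would be combined with embedding similarity, as the article describes; that part is omitted here because it depends on a specific embedding model.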

The study assesses the method on open-domain question-answering tasks using the OTT-QA and Bird datasets. OTT-QA involves short-text answers drawn from passages and tables, while Bird requires generating SQL queries over multiple tables. The authors compare ARM with standard and agentic RAG baselines, including variants with query decomposition and reranking. ARM, using Llama-3.1-8B-Instruct, outperforms the baselines in recall and end-to-end accuracy while requiring fewer LLM calls. ReAct, the agentic baseline, struggles with iterative reasoning errors and often repeats searches. Across both datasets, the results indicate that ARM retrieves the essential information more precisely while keeping computational cost down.
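For readers unfamiliar with the F1 metric used for short-text answers, the sketch below computes a token-overlap F1 in the style commonly used for extractive QA benchmarks. It assumes simple lowercasing and whitespace tokenization; the exact normalization used in the paper's OTT-QA evaluation may differ.

```python
from collections import Counter

def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted and a reference answer."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred == ref)
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

print(token_f1("New York City", "New York"))
```

Here the prediction shares two of its three tokens with the two-token reference, so precision is 2/3, recall is 1, and the F1 is 0.8.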

In conclusion, effective open-domain question answering requires understanding the available data objects and their organization. Query decomposition with an off-the-shelf LLM often leads to suboptimal retrieval because the model is unaware of the data structure. While agentic RAG can interact with the data, it relies on previous retrieval results, making it inefficient and increasing LLM calls. The proposed ARM retrieval method identifies and navigates relevant data objects, even those not directly mentioned in the question. Experimental results show that ARM outperforms baselines in retrieval accuracy and efficiency, requiring fewer LLM calls while improving performance in downstream tasks.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post ARM: Enhancing Open-Domain Question Answering with Structured Retrieval and Efficient Data Alignment appeared first on MarkTechPost.
