Developing AI agents that can make decisions independently, especially across multi-step tasks, remains a significant challenge. DeepSeekAI, known for its work on large language models and reinforcement learning, focuses on enabling models to process information, predict outcomes, and adjust their actions as situations evolve, which underlines the importance of sound reasoning in dynamic settings. Its recent work combines methods from reinforcement learning, large language models, and agent-based decision-making, and targets common problems such as inconsistent decisions, weak long-term planning, and poor adaptation to changing conditions. Without a proper reasoning mechanism, an agent can take suboptimal actions or make outright errors.

Many AI training methods process data inconsistently, which leads to errors on tasks that require several rounds of decision-making. They also fail to model the environment in a way that exposes the full consequences of the agent's actions, so outcomes go unanalyzed and remain opaque. In addition, training proceeds one step at a time, which breaks up learning sequences and destabilizes reward functions, hindering the development of a sound long-term policy. The resulting decision-making and problem-solving systems are inefficient and ineffective. DeepSeekAI's approach addresses this with more integrated, streamlined training that aims to help agents make consistent, dependable decisions while adapting quickly to new environments.

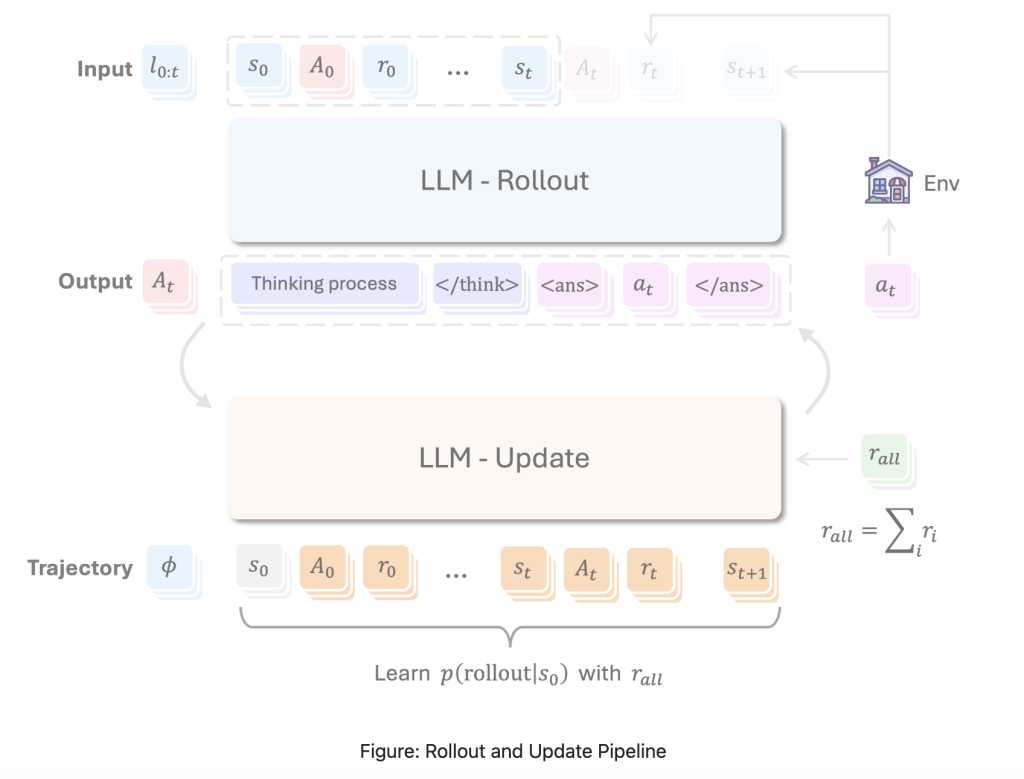

Meet RAGEN, the first reproduction of DeepSeek-R1(-Zero) methods for training agentic models, built to address the challenges of training AI agents for multi-step reasoning and real-world tasks. DeepSeekAI, known for its advancements in large language models and reinforcement learning, developed DeepSeek-R1 to enhance reasoning through structured training. Unlike methods that struggle with inconsistent batch processing, limited planning, and unstable rewards, RAGEN streamlines training with a two-phase approach. In the rollout phase, environment states and model-generated reasoning tokens are processed together. In the update phase, only the critical tokens (actions and rewards) contribute to learning, which keeps batch rollouts stable and improves decision-making. The framework avoids instability from variable sequence lengths by generating both reasoning and action tokens during rollout, executing only the actions in the environment, and reinforcing strategic planning by aggregating rewards in the update phase.

Tested on the Sokoban puzzle environment, RAGEN showed that smaller models perform comparably to larger ones and that models without explicit instructions adapt well. By reproducing DeepSeek-R1’s training methodology for sequential decision-making, RAGEN could be valuable for applications such as logistics automation and AI assistants.
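The two-phase loop described above can be illustrated with a minimal sketch. This is not RAGEN's actual code: the environment (`ToyCorridorEnv`, a one-dimensional stand-in for Sokoban), the fixed `toy_policy` that stands in for a language model, and the helper names `rollout` and `build_update_batch` are all hypothetical. The sketch only shows the data flow: reasoning and action tokens are generated together, only actions are executed, and the update batch carries a mask that selects action tokens plus an aggregated episode reward.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A policy maps state tokens to (reasoning tokens, action tokens).
Policy = Callable[[List[str]], Tuple[List[str], List[str]]]


@dataclass
class Turn:
    state_tokens: List[str]
    reasoning_tokens: List[str]
    action_tokens: List[str]
    reward: float


class ToyCorridorEnv:
    """Hypothetical 1-D stand-in for Sokoban: walk right until `goal`."""

    def __init__(self, goal: int = 3) -> None:
        self.goal = goal
        self.pos = 0

    def observe(self) -> List[str]:
        return [f"pos={self.pos}", f"goal={self.goal}"]

    def step(self, action: str) -> Tuple[float, bool]:
        if action == "right":
            self.pos += 1
        elif action == "left":
            self.pos = max(0, self.pos - 1)
        done = self.pos == self.goal
        # Assumed reward scheme: small step penalty, bonus on success.
        return (1.0 if done else -0.1), done


def toy_policy(state_tokens: List[str]) -> Tuple[List[str], List[str]]:
    """Stand-in for an LLM: emits fixed reasoning and one action."""
    reasoning = ["<think>", "goal", "is", "to", "the", "right", "</think>"]
    return reasoning, ["right"]


def rollout(env: ToyCorridorEnv, policy: Policy, max_turns: int = 5) -> List[Turn]:
    """Rollout phase: generate reasoning and actions, execute only actions."""
    turns: List[Turn] = []
    for _ in range(max_turns):
        state = env.observe()
        reasoning, actions = policy(state)
        reward, done = 0.0, False
        for action in actions:  # reasoning tokens never reach the env
            step_reward, done = env.step(action)
            reward += step_reward
            if done:
                break
        turns.append(Turn(state, reasoning, actions, reward))
        if done:
            break
    return turns


def build_update_batch(turns: List[Turn]) -> Tuple[List[str], List[int], float]:
    """Update phase: flatten the trajectory, mask in only action tokens,
    and aggregate per-turn rewards into one episode-level signal."""
    tokens: List[str] = []
    mask: List[int] = []
    for turn in turns:
        groups = (
            (turn.state_tokens, 0),
            (turn.reasoning_tokens, 0),
            (turn.action_tokens, 1),
        )
        for group, learn in groups:
            tokens.extend(group)
            mask.extend([learn] * len(group))
    episode_reward = sum(turn.reward for turn in turns)
    return tokens, mask, episode_reward
```

In a real training loop, the mask would multiply a per-token loss so that masked-out positions contribute nothing to the gradient, and the aggregated reward would feed whatever policy-gradient objective is in use. Both of those pieces are left out here because the article does not specify them.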

Ultimately, RAGEN improves the training of AI agents by targeting inconsistent decision-making, unstable rewards, and planning limitations. By following DeepSeek-R1’s approach, it aims for stable learning and better adaptability. On the Sokoban puzzle, smaller models performed comparably to larger ones, which points to the method's efficiency. As a baseline for future research, RAGEN can help refine AI training methods, improve reinforcement learning, and support advancements in general-purpose AI systems.

Check out the GitHub Page. All credit for this research goes to the researchers of this project.

The post Meet RAGEN Framework: The First Open-Source Reproduction of DeepSeek-R1 for Training Agentic Models via Reinforcement Learning appeared first on MarkTechPost.
