Guiding LLMs to think more deeply about complex problems and to use inference-time computation effectively can significantly enhance their problem-solving capabilities. Prior research has explored various strategies, including chain-of-thought reasoning, self-consistency, sequential revision with feedback, and search guided by auxiliary verifiers or evaluators. Search-based methods, particularly when paired with solution evaluators, spend additional compute to explore a broader set of candidate solutions. Techniques like Best-of-N and tree search use this wider exploration to raise the likelihood of finding a successful solution.
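As a rough illustration of how an evaluator turns extra samples into better answers, here is a minimal Best-of-N sketch. The `generate` and `score` callables are hypothetical stand-ins for an LLM sampler and a solution evaluator; the toy versions below only manipulate digit strings and are not taken from any of the cited work.

```python
import random
from typing import Callable, List, Tuple


def best_of_n(
    generate: Callable[[random.Random], str],
    score: Callable[[str], float],
    n: int,
    seed: int = 0,
) -> Tuple[str, float]:
    """Sample n independent candidates and return the highest-scoring one."""
    rng = random.Random(seed)
    candidates: List[str] = [generate(rng) for _ in range(n)]
    best = max(candidates, key=score)
    return best, score(best)


# Toy stand-ins (assumptions for illustration only):
# the "model" proposes a random 3-digit string ...
def toy_generate(rng: random.Random) -> str:
    return "".join(str(rng.randint(0, 9)) for _ in range(3))


# ... and the "evaluator" prefers digits whose sum is close to 15.
def toy_score(candidate: str) -> float:
    return -abs(sum(int(ch) for ch in candidate) - 15)


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(n, best_of_n(toy_generate, toy_score, n))
```

With a fixed seed, a larger `n` draws a superset of the candidates seen with a smaller `n`, so the best score can only stay the same or improve. The cost is that every extra candidate is another model call.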

Recent efforts have combined LLMs with evolutionary search for optimization tasks, such as numerical and combinatorial problems and natural language planning. Unlike earlier studies that required task formalization in structured spaces, these approaches evolve solutions directly in natural language, bypassing the need for expert knowledge in formalizing tasks. Evolutionary search has also been applied to prompt optimization and multi-agent system design, such as EvoAgent, which evolved agents for problem-solving. However, these approaches often achieved limited success on benchmarks like TravelPlanner, whereas Mind Evolution, introduced below and run on Gemini 1.5 Flash, demonstrates significant improvements there. Additionally, program-based evaluators integrated during evolutionary search provide reliable feedback to refine solutions, a technique widely adopted in code generation and response refinement across various domains. Learned feedback models and self-evaluators have also been explored, but they often suffer from noise and unreliability, which leaves room for future work.

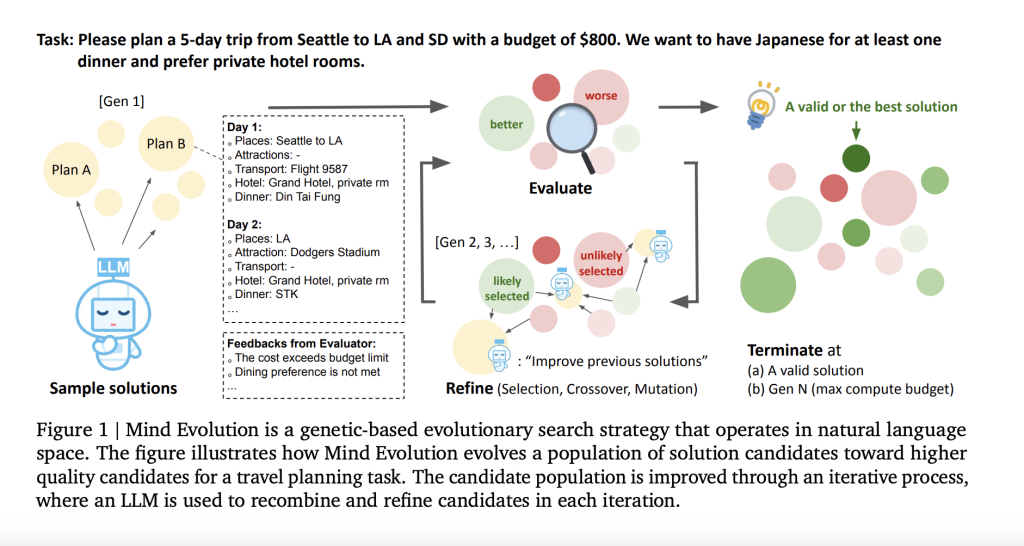

Researchers from Google DeepMind, UC San Diego, and the University of Alberta introduced Mind Evolution, an evolutionary search strategy designed to enhance inference-time computation for LLMs. Unlike previous methods like Best-of-N or sequential refinement, Mind Evolution uses a genetic approach to iteratively generate, refine, and recombine candidate solutions in natural language. It avoids formalizing tasks by relying on a solution evaluator, enabling higher success rates in natural language planning tasks like TravelPlanner and Natural Plan. Mind Evolution achieved 95.6% success on TravelPlanner and introduced new benchmarks like StegPoet, showcasing its versatility across challenging, non-formalized domains.

Mind Evolution integrates a genetic search approach with an LLM and customized prompts to efficiently address natural language planning tasks. It employs language-based genetic algorithms, where solutions are represented in natural language, enabling LLMs to facilitate key operations like crossover, mutation, and island reset. The process begins by generating initial solutions through LLM-driven prompts. Solutions are iteratively refined using a “Refinement through Critical Conversation” (RCC) process involving critic and author roles for evaluation and improvement. The framework incorporates Boltzmann tournament selection, cyclic migration between islands, and periodic island resets to sustain diversity and optimize solutions effectively.
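The search loop described above can be sketched on a toy problem. This is not the authors' implementation: the LLM-driven steps (initial generation, crossover, mutation, RCC) are replaced by hypothetical string operations, Boltzmann selection is approximated by softmax-weighted sampling over fitness, and the elitist survival rule and the reset rule are simplifying assumptions.

```python
import math
import random

TARGET = "PLAN"                      # toy goal; stands in for a valid plan
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def toy_init(rng):
    """Stand-in for an LLM proposing an initial solution."""
    return "".join(rng.choice(ALPHABET) for _ in TARGET)


def toy_fitness(s):
    """Stand-in for the solution evaluator: count of matching positions."""
    return sum(a == b for a, b in zip(s, TARGET))


def toy_recombine(a, b, rng):
    """Stand-in for LLM crossover plus occasional mutation."""
    cut = rng.randrange(1, len(a))
    child = list(a[:cut] + b[cut:])
    if rng.random() < 0.3:
        child[rng.randrange(len(child))] = rng.choice(ALPHABET)
    return "".join(child)


def boltzmann_select(population, fitness, k, temperature, rng):
    """Sample k parents with probability proportional to exp(fitness / T)."""
    scores = [fitness(p) for p in population]
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp((s - top) / temperature) for s in scores]
    return rng.choices(population, weights=weights, k=k)


def evolve_islands(init, recombine, fitness, n_islands=3, pop_size=6,
                   generations=20, migrate_every=5, reset_every=10,
                   temperature=1.0, seed=0):
    rng = random.Random(seed)
    islands = [[init(rng) for _ in range(pop_size)] for _ in range(n_islands)]

    def trim(pop):
        # Assumption: elitist survival, keep the fittest pop_size members.
        return sorted(pop, key=fitness, reverse=True)[:pop_size]

    for gen in range(1, generations + 1):
        for i, pop in enumerate(islands):
            children = []
            for _ in range(pop_size):
                a, b = boltzmann_select(pop, fitness, 2, temperature, rng)
                children.append(recombine(a, b, rng))
            islands[i] = trim(pop + children)
        if gen % migrate_every == 0:
            # Cyclic migration: island i sends its best to island i + 1.
            best = [max(pop, key=fitness) for pop in islands]
            for i in range(n_islands):
                j = (i + 1) % n_islands
                islands[j] = trim(islands[j] + [best[i]])
        if gen % reset_every == 0:
            # Simplified reset: restart the weakest island from the global
            # best plus fresh samples.
            global_best = max((s for pop in islands for s in pop), key=fitness)
            weakest = min(range(n_islands),
                          key=lambda i: max(fitness(s) for s in islands[i]))
            fresh = [init(rng) for _ in range(pop_size - 1)]
            islands[weakest] = [global_best] + fresh
    return max((s for pop in islands for s in pop), key=fitness)


if __name__ == "__main__":
    winner = evolve_islands(toy_init, toy_recombine, toy_fitness)
    print(winner, toy_fitness(winner))
```

In a real system, `toy_init` and `toy_recombine` would be prompts to the LLM, and `toy_fitness` would be the task's solution evaluator. The temperature controls how strongly selection favors high-fitness parents. Migration and resets are what keep the separate islands from converging on the same solution too early.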

The experiments evaluate Mind Evolution on three natural language planning benchmarks: TravelPlanner, plus Trip Planning and Meeting Planning from Natural Plan. Natural Plan’s Calendar Scheduling task is excluded due to its simplicity. The primary model is Gemini 1.5 Flash, and a two-stage approach falls back to Gemini 1.5 Pro only for the cases Flash leaves unsolved, which improves cost efficiency. Mind Evolution outperforms the baselines, achieving over 95% success in TravelPlanner and Trip Planning and 85% in Meeting Planning, with near-perfect results using the two-stage approach. The comparison reports success rate, number of LLM calls, token usage, and API cost, which together show how efficient Mind Evolution’s evolutionary search is relative to the baselines.
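The two-stage idea can be sketched as a simple cascade: run the cheaper model on everything, then send only the failures to the stronger model. The solver callables below are hypothetical placeholders, and returning `None` for "the evaluator rejected every candidate" is an assumption made for this sketch.

```python
from typing import Callable, Dict, Hashable, Iterable, Optional, Tuple

Solver = Callable[[Hashable], Optional[str]]


def two_stage_solve(
    problems: Iterable[Hashable],
    cheap_solver: Solver,
    strong_solver: Solver,
) -> Tuple[Dict[Hashable, Optional[str]], Dict[str, int]]:
    """Try the cheap solver first; retry only its failures with the strong one."""
    results: Dict[Hashable, Optional[str]] = {}
    calls = {"cheap": 0, "strong": 0}
    for problem in problems:
        calls["cheap"] += 1
        answer = cheap_solver(problem)
        if answer is None:  # cheap stage failed, escalate
            calls["strong"] += 1
            answer = strong_solver(problem)
        results[problem] = answer
    return results, calls


# Toy stand-ins (assumptions): the cheap solver only handles even inputs,
# the strong solver handles everything.
def toy_cheap(problem: int) -> Optional[str]:
    return f"cheap:{problem}" if problem % 2 == 0 else None


def toy_strong(problem: int) -> Optional[str]:
    return f"strong:{problem}"


if __name__ == "__main__":
    results, calls = two_stage_solve(range(10), toy_cheap, toy_strong)
    print(calls)
```

Because the stronger model is only called for the residual failures, the total cost depends on how many problems the cheap stage already solves.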

In conclusion, Mind Evolution introduces an evolutionary search strategy to enhance inference-time computation for complex natural language planning tasks, focusing on stochastic exploration and iterative refinement. Unlike methods relying on formal solvers, Mind Evolution leverages language models to generate, recombine, and refine candidate solutions, requiring only a solution evaluator. It outperforms strategies like Best-of-N and Sequential Revision in benchmarks such as TravelPlanner, Natural Plan, and the newly introduced StegPoet. Controlling for inference costs, it achieves remarkable success, solving over 98% of problem instances in TravelPlanner and Natural Plan benchmarks using Gemini 1.5 Pro, demonstrating its effectiveness without formal solver dependency.

The post Google DeepMind Introduces Mind Evolution: Enhancing Natural Language Planning with Evolutionary Search in Large Language Models appeared first on MarkTechPost.
