Artificial intelligence has made significant strides, yet challenges persist in multimodal reasoning and planning. Tasks that demand abstract reasoning, scientific understanding, and precise mathematical computation often expose the limitations of current systems. Even leading AI models struggle to integrate diverse types of data and to keep their responses logically coherent. Moreover, as AI adoption expands, there is growing demand for systems that can process very long contexts, such as documents totaling millions of tokens. Tackling these challenges is vital to unlocking AI’s full potential across education, research, and industry.

To address these issues, Google has released the Gemini 2.0 Flash Thinking model (gemini-2.0-flash-thinking-exp-01-21), an updated experimental model in its Gemini series with stronger reasoning abilities. The release builds on Google’s AI research and, according to Google, carries lessons from earlier systems such as AlphaGo into modern large language models. Available through the Gemini API, the model adds code execution, a 1-million-token context window, and better alignment between its reasoning and its final answers.
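As a rough illustration of what "available through the Gemini API" means in practice, here is a minimal Python sketch that only builds a request for the public `generateContent` REST method; it sends nothing. The endpoint path and body shape follow the v1beta REST API as we understand it, `build_generate_request` is a hypothetical helper, and the API key (normally passed in an `x-goog-api-key` header) is deliberately left out.

```python
import json

# Model identifier from the release name; base URL and body shape assume the
# public Gemini API generateContent method (v1beta REST).
MODEL_ID = "gemini-2.0-flash-thinking-exp-01-21"
BASE_URL = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_request(prompt: str, enable_code_execution: bool = False) -> tuple[str, dict]:
    """Return (url, json_body) for a generateContent call; nothing is sent."""
    url = f"{BASE_URL}/models/{MODEL_ID}:generateContent"
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    if enable_code_execution:
        # Enable the code-execution tool so the model may write and run code.
        body["tools"] = [{"code_execution": {}}]
    return url, body


url, body = build_generate_request(
    "What is the sum of the first 50 primes?", enable_code_execution=True
)
print(url)
print(json.dumps(body, indent=2))
```

POSTing `body` as JSON to `url`, with `Content-Type: application/json` and the key header, would issue the real call; Google's official SDKs wrap the same method.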

Technical Details and Benefits

At the core of the Gemini 2.0 Flash Thinking model is its improved reasoning capability, which lets the model reason across multiple modalities such as text, images, and code. Maintaining coherence and precision while integrating these different inputs marks a significant step forward. The 1-million-token context window lets the model take in very large inputs, such as long documents or large collections of documents, in a single request, which makes it particularly useful for legal analysis, scientific research, and content creation.
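To get a feel for the 1-million-token figure, the sketch below estimates whether a set of documents fits in one request. It assumes Google's rule of thumb that a Gemini token is roughly four characters; that is an approximation only, and the API's `countTokens` method gives exact counts. The helper names and the 10,000-token headroom for instructions are hypothetical.

```python
# Rule of thumb from Google's Gemini docs: one token is roughly 4 characters.
# This is only an estimate; the API's countTokens method returns exact counts.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW_TOKENS = 1_000_000  # the "1 million" figure quoted in the article


def estimate_tokens(text: str) -> int:
    """Approximate token count; ceiling division so non-empty text never rounds to 0."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], headroom_tokens: int = 10_000) -> bool:
    """True if all documents plus hypothetical headroom for instructions fit the window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + headroom_tokens <= CONTEXT_WINDOW_TOKENS


# Usage: two synthetic "documents" of 350,000 and 800,000 characters.
docs = ["clause " * 50_000, "exhibit " * 100_000]
print(sum(estimate_tokens(d) for d in docs), fits_in_context(docs))
```

Under this heuristic, 1 million tokens is roughly 4 million characters of English text. The check covers only the input side and says nothing about how long the model's answer can be.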

Another key feature is the model’s ability to execute code. This bridges the gap between abstract reasoning and practical application: the model can write and run code to carry out computations while answering, rather than estimating results in text alone. The update also addresses a common issue in earlier models by reducing contradictions between the model’s reasoning and its final responses. Together, these improvements make its performance more reliable and adaptable across a variety of use cases.
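When code execution is enabled, the API returns the generated code and its result as separate response parts. The sketch below pairs them up. It assumes the REST field names `executableCode` and `codeExecutionResult` from the Gemini API's code-execution documentation; `SAMPLE_RESPONSE`, `CodeStep`, and `extract_code_steps` are hypothetical, and the sample is hand-written rather than real model output.

```python
from dataclasses import dataclass

# Hand-written, hypothetical response (not real model output), shaped like the
# Gemini API's code-execution responses: candidates -> content -> parts.
SAMPLE_RESPONSE = {
    "candidates": [{
        "content": {
            "role": "model",
            "parts": [
                {"text": "I'll compute this with code."},
                {"executableCode": {"language": "PYTHON", "code": "print(sum(range(1, 101)))"}},
                {"codeExecutionResult": {"outcome": "OUTCOME_OK", "output": "5050\n"}},
                {"text": "The sum of 1 through 100 is 5050."},
            ],
        }
    }]
}


@dataclass
class CodeStep:
    code: str     # code the model wrote
    outcome: str  # e.g. "OUTCOME_OK"
    output: str   # stdout on success, error text otherwise


def extract_code_steps(response: dict) -> list[CodeStep]:
    """Pair each executableCode part with the codeExecutionResult that follows it."""
    parts = response["candidates"][0]["content"]["parts"]
    steps: list[CodeStep] = []
    pending_code = None
    for part in parts:
        if "executableCode" in part:
            pending_code = part["executableCode"]["code"]
        elif "codeExecutionResult" in part and pending_code is not None:
            result = part["codeExecutionResult"]
            steps.append(CodeStep(pending_code, result.get("outcome", ""), result.get("output", "")))
            pending_code = None
    return steps


for step in extract_code_steps(SAMPLE_RESPONSE):
    print(step.outcome, "|", step.code, "->", step.output.strip())
```

Keeping code and result paired makes it easy to audit exactly which computation backed a numeric answer.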

For users, these enhancements translate into faster, more accurate outputs for complex queries. The model’s ability to combine multimodal data and handle long inputs makes it a useful tool in fields ranging from advanced mathematics to long-form content generation.

Performance Insights and Benchmark Achievements

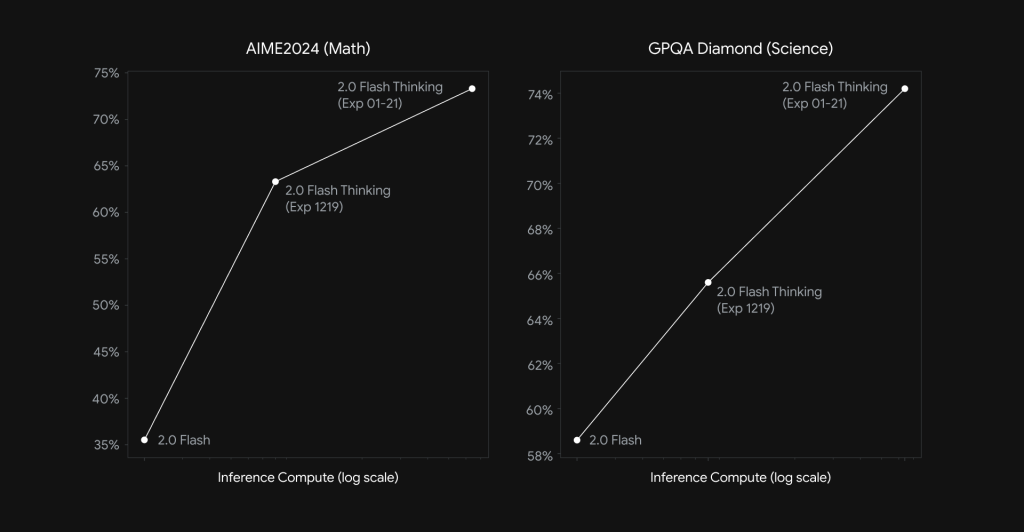

The Gemini 2.0 Flash Thinking model’s advances show in its benchmark results: it scored 73.3% on AIME (math), 74.2% on GPQA Diamond (science), and 75.4% on MMMU (Massive Multi-discipline Multimodal Understanding). These results highlight its reasoning and planning capabilities, particularly on tasks that demand precision and multi-step problem solving.

Feedback from early users has been encouraging, highlighting the model’s speed and reliability compared with its predecessor. Its ability to handle large inputs while maintaining logical consistency makes it a valuable asset in education, research, and enterprise analytics. The release arrived just a month after the previous version, reflecting Google’s commitment to continuous improvement and user-focused innovation.

Conclusion

The Gemini 2.0 Flash Thinking model represents a measured but meaningful advance in artificial intelligence. By addressing longstanding challenges in multimodal reasoning and planning, it offers practical solutions for a wide range of applications. Features such as the 1-million-token context window and integrated code execution strengthen its problem-solving capabilities, making it a versatile tool across domains.

With strong benchmark results and gains in reliability and adaptability, the Gemini 2.0 Flash Thinking model underscores Google’s leadership in AI development. As the model evolves, its impact on industry and research is likely to grow, opening new possibilities for AI-driven innovation.

Check out the Details and try the latest Flash Thinking model in Google AI Studio. All credit for this research goes to the researchers of this project.

The post Google AI Releases Gemini 2.0 Flash Thinking model (gemini-2.0-flash-thinking-exp-01-21): Scoring 73.3% on AIME (Math) and 74.2% on GPQA Diamond (Science) Benchmarks appeared first on MarkTechPost.