Mathematical reasoning has long been a significant challenge for Large Language Models (LLMs). Errors in intermediate reasoning steps can undermine both the accuracy and reliability of final outputs, which is particularly problematic for applications requiring precision, such as education and scientific computation. Traditional evaluation methods, like the Best-of-N (BoN) strategy, often fail to capture the intricacies of reasoning processes. This has led to the development of Process Reward Models (PRMs), which aim to provide detailed supervision by evaluating the correctness of intermediate steps. However, building effective PRMs remains a difficult task, primarily due to challenges in data annotation and evaluation methodologies. These obstacles highlight the need for models that better align with robust, process-driven reasoning.

The Alibaba Qwen Team recently published a paper titled ‘Lessons of Developing Process Reward Models in Mathematical Reasoning.’ Alongside this research, they introduced two PRMs with 7B and 72B parameters, part of their Qwen2.5-Math-PRM series. These models address significant limitations in existing PRM frameworks, employing innovative techniques to improve the accuracy and generalization of reasoning models.

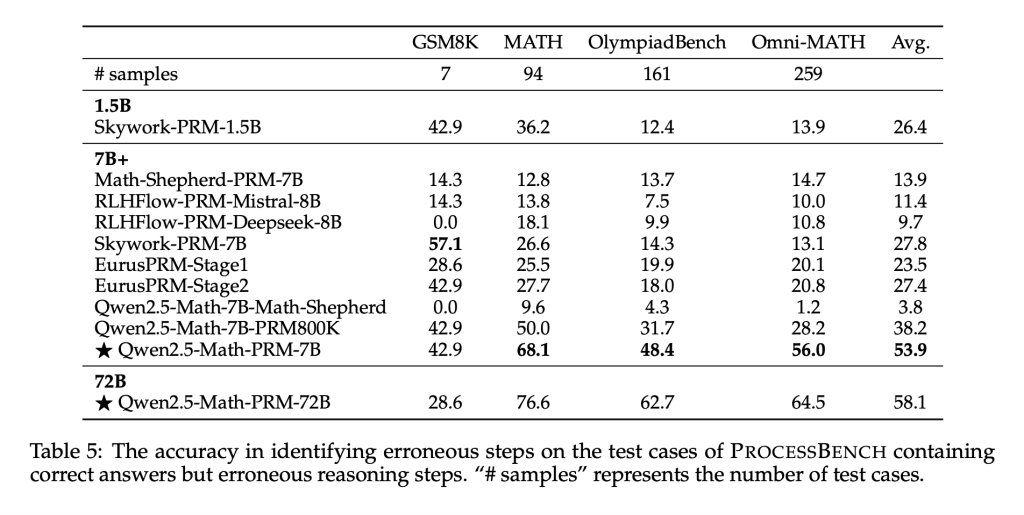

Central to their approach is a hybrid methodology that combines Monte Carlo (MC) estimation with a novel “LLM-as-a-judge” mechanism. This integration enhances the quality of step-wise annotations, making the resulting PRMs more effective in identifying and mitigating errors in mathematical reasoning. The models have demonstrated strong performance on benchmarks like PROCESSBENCH, which tests a model’s ability to pinpoint intermediate reasoning errors.

Technical Innovations and Benefits

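The Qwen team’s methodology involves generating multiple solutions for mathematical problems using fine-tuned LLMs and evaluating the correctness of each step through a dual approach: MC estimation and LLM-as-a-judge. This addresses a limitation of MC estimation used alone, which labels a step by whether completions sampled from it reach the correct final answer. Because the label depends on future outcomes rather than on the step itself, a flawed step that happens to lead to a right answer can be marked correct.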
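To make the MC estimation idea concrete, here is a minimal sketch of how step labels could be derived from rollout outcomes. It is not the Qwen team's implementation: the function `mc_step_labels`, the `rollout_is_correct` callback (a stand-in for sampling a completion from a policy model and checking its final answer), and the rollout count are all hypothetical names and values chosen for illustration.

```python
from typing import Callable, List


def mc_step_labels(
    steps: List[str],
    rollout_is_correct: Callable[[List[str]], bool],
    num_rollouts: int = 8,
) -> List[dict]:
    """Label each step from the outcomes of completions sampled after it."""
    labels = []
    for i in range(1, len(steps) + 1):
        prefix = steps[:i]
        # Count rollouts from this prefix that reach the correct final answer.
        hits = sum(rollout_is_correct(prefix) for _ in range(num_rollouts))
        labels.append({
            "step": i,
            "soft": hits / num_rollouts,  # fraction of successful rollouts
            "hard": int(hits > 0),        # 1 if at least one rollout succeeds
        })
    return labels


# Toy stand-in for a real policy model (an assumption for this sketch):
# any prefix containing a step marked "BAD" never reaches the right answer.
def toy_rollout(prefix: List[str]) -> bool:
    return not any(step.startswith("BAD") for step in prefix)


steps = ["x = 2 + 3", "BAD: x = 6", "answer = x * 2"]
labels = mc_step_labels(steps, toy_rollout, num_rollouts=4)
hard_labels = [label["hard"] for label in labels]
print(hard_labels)
```

In this toy setup the rollouts are deterministic, so the labels are clean. With a real sampler, a wrong step can still be followed by a lucky completion, which is the source of label noise described above.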

Key innovations include:

- Consensus Filtering: This mechanism retains data only when both MC estimation and LLM-as-a-judge agree on step correctness, significantly reducing noise in the training process.

- Hard Labeling: Deterministic labels, verified by both mechanisms, enhance the model’s ability to distinguish valid from invalid reasoning steps.

- Efficient Data Utilization: By combining MC estimation with LLM-as-a-judge, the consensus filtering strategy ensures high-quality data while maintaining scalability. This approach enables the development of PRMs that perform well even with smaller datasets.
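The consensus filtering step listed above can be sketched in a few lines. This is one possible reading, not the team's code: it assumes each solution already carries per-step hard labels from MC estimation and per-step verdicts from an LLM judge, and it treats "agreement" as both annotators pointing at the same first erroneous step (or both finding none). The `Annotated` class and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Annotated:
    problem_id: str
    mc_hard: List[int]  # per-step hard labels from MC estimation (1 = correct)
    judge: List[int]    # per-step verdicts from the LLM judge (1 = correct)


def first_error(labels: List[int]) -> Optional[int]:
    """Index of the first step labeled incorrect, or None if all are correct."""
    for index, label in enumerate(labels):
        if label == 0:
            return index
    return None


def consensus_filter(samples: List[Annotated]) -> List[Annotated]:
    """Keep only samples where both annotators locate the same first error."""
    return [s for s in samples if first_error(s.mc_hard) == first_error(s.judge)]


samples = [
    Annotated("p1", [1, 1, 0], [1, 1, 0]),  # both flag step index 2: keep
    Annotated("p2", [1, 1, 1], [1, 0, 1]),  # judge flags a step MC missed: drop
    Annotated("p3", [1, 1, 1], [1, 1, 1]),  # both find no error: keep
]
kept_ids = [s.problem_id for s in consensus_filter(samples)]
print(kept_ids)
```

Sample `p2` is the kind of case the filter is meant to catch: MC estimation marks every step correct because the rollouts still reached the right answer, while the judge disagrees.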

These innovations facilitate the creation of PRMs that are not only accurate but also robust, making them suitable for applications such as automated tutoring and complex problem-solving.

Results and Insights

The Qwen2.5-Math-PRM models demonstrated strong results on PROCESSBENCH and other evaluation metrics. For example, the Qwen2.5-Math-PRM-72B model achieved an F1 score of 78.3%, surpassing many open-source alternatives. In tasks requiring step-wise error identification, it outperformed proprietary models like GPT-4o-0806.
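For readers unfamiliar with how a single F1 number can summarize error identification, the sketch below assumes the benchmark's F1 is the harmonic mean of two accuracies: one on solutions that contain an error and one on fully correct solutions. That definition is an assumption in this sketch, the function name is hypothetical, and the input values are illustrative, not reported results.

```python
def harmonic_f1(acc_erroneous: float, acc_correct: float) -> float:
    """Harmonic mean of accuracy on erroneous and on correct solutions."""
    total = acc_erroneous + acc_correct
    if total == 0:
        return 0.0
    return 2 * acc_erroneous * acc_correct / total


# Illustrative values only: a model that accepts every solution as correct
# scores 1.0 on correct samples but 0.0 on erroneous ones.
always_accept = harmonic_f1(0.0, 1.0)
balanced = harmonic_f1(0.5, 1.0)
print(always_accept, round(balanced, 4))
```

The harmonic mean penalizes lopsided behavior, so a model cannot score well simply by approving every solution or by flagging every solution.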

The consensus filtering approach played a crucial role in improving training quality, reducing data noise by approximately 60%. While MC estimation alone can be helpful, it is insufficient for accurately labeling reasoning steps. Combining MC estimation with LLM-as-a-judge significantly enhanced the model’s ability to detect errors, as reflected in improved PROCESSBENCH scores.

The Qwen2.5-Math-PRM series also emphasized step-level evaluation over outcome-based BoN strategies. This shift addressed the shortcomings of earlier models, which often prioritized final answers at the expense of reasoning accuracy.
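To illustrate how step-level scores relate to BoN selection, the sketch below collapses each candidate's per-step PRM scores into one response score and picks the best candidate. The aggregation choices (product or minimum of step scores), the function names, and the numbers are assumptions for illustration, not the configuration used by the Qwen team.

```python
import math
from typing import List, Sequence


def response_score(step_scores: Sequence[float], how: str = "product") -> float:
    """Collapse per-step scores into a single score for the whole response."""
    if how == "product":
        return math.prod(step_scores)
    if how == "min":
        return min(step_scores)
    raise ValueError(f"unknown aggregation: {how}")


def best_of_n(candidates: List[Sequence[float]], how: str = "product") -> int:
    """Return the index of the candidate with the highest aggregated score."""
    scores = [response_score(c, how) for c in candidates]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical PRM step scores for three sampled solutions to one problem.
candidates = [
    [0.9, 0.9, 0.2],   # strong start, weak final step
    [0.8, 0.8, 0.8],   # consistently plausible steps
    [0.95, 0.5, 0.9],  # one doubtful middle step
]
chosen = best_of_n(candidates, how="product")
print(chosen)
```

Both aggregations favor the second candidate here, since a single low-scoring step drags down the other two. An outcome-only scorer would not see that distinction.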

Conclusion

The introduction of the Qwen2.5-Math-PRM models represents meaningful progress in mathematical reasoning for LLMs. By addressing challenges in PRM development, such as noisy data annotation and process-to-outcome biases, the Alibaba Qwen Team has provided a practical framework for improving reasoning accuracy and reliability. These models not only outperform existing alternatives but also offer valuable methodologies for future research. As PRMs continue to advance, their application in broader AI contexts promises to enhance the reliability and effectiveness of machine reasoning systems.

Check out the Paper and Models on Hugging Face. All credit for this research goes to the researchers of this project.

The post Alibaba Qwen Team just Released ‘Lessons of Developing Process Reward Models in Mathematical Reasoning’ along with a State-of-the-Art 7B and 72B PRMs appeared first on MarkTechPost.
