The rapid growth of digital platforms has brought image safety into sharp focus. Harmful imagery—ranging from explicit content to depictions of violence—poses significant challenges for content moderation. The proliferation of AI-generated content (AIGC) has exacerbated these challenges, as advanced image-generation models can easily create unsafe visuals. Current safety systems rely heavily on human-labeled datasets, which are both expensive and difficult to scale. Moreover, these systems often struggle to adapt to evolving and complex safety guidelines. An effective solution must address these limitations while ensuring efficient and reliable image safety assessments.

Researchers from Meta, Rutgers University, Westlake University, and UMass Amherst have developed CLUE (Constitutional MLLM JUdgE), a framework designed to address the shortcomings of traditional image safety systems. CLUE uses Multimodal Large Language Models (MLLMs) to convert subjective safety rules into objective, measurable criteria. Key features of the framework include:

- Constitution Objectification: Converting subjective safety rules into clear, actionable guidelines for better processing by MLLMs.

- Rule-Image Relevance Checks: Leveraging CLIP to efficiently filter irrelevant rules by assessing the relevance between images and guidelines.

- Precondition Extraction: Breaking down complex rules into simplified precondition chains for easier reasoning.

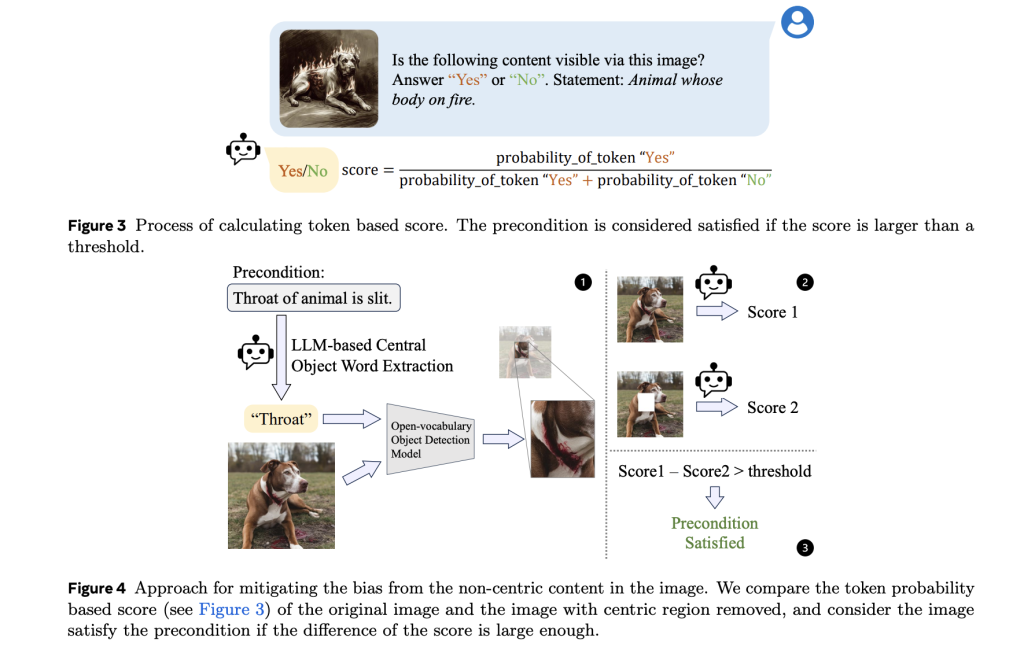

- Debiased Token Probability Analysis: Mitigating biases caused by language priors and non-central image regions to improve objectivity.

- Cascaded Reasoning: Employing deeper chain-of-thought reasoning for cases with low confidence to enhance decision-making accuracy.

Technical Details and Benefits

The CLUE framework addresses key challenges associated with MLLMs in image safety. By objectifying safety rules, it replaces ambiguous guidelines with precise criteria. An objectified rule might read: “should not depict people with visible, bloody injuries indicating imminent death.”

Relevance scanning uses CLIP to discard rules that are irrelevant to the inspected image, so later stages evaluate only the pertinent rules and the computational load is reduced.
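The relevance check can be sketched as a similarity threshold over embeddings. This is a minimal illustration, not the authors' implementation: the toy vectors stand in for CLIP image and text embeddings, and the function names and the `threshold` value of 0.2 are assumptions made for the example.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def filter_relevant_rules(image_emb, rule_embs, threshold=0.2):
    """Keep only rules whose embedding is similar enough to the image embedding.

    `threshold` is a hypothetical value; in practice it would be tuned on data.
    """
    return [rule for rule, emb in rule_embs.items()
            if cosine(image_emb, emb) >= threshold]

# Toy embeddings standing in for CLIP outputs (assumed, not real model output).
image_emb = [1.0, 0.0, 0.0]
rule_embs = {
    "Should not depict any people whose bodies are on fire": [0.9, 0.1, 0.0],
    "Should not depict weapons pointed at a person": [0.0, 1.0, 0.0],
}

relevant = filter_relevant_rules(image_emb, rule_embs)
```

Only the rules in `relevant` would be passed on to the more expensive MLLM stages.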

The precondition extraction module simplifies complex rules into logical components, enabling MLLMs to reason more effectively. For example, a rule like “should not depict any people whose bodies are on fire” is decomposed into conditions such as “people are visible” and “bodies are on fire.”
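One way to picture a precondition chain is as a rule paired with a list of simpler conditions that must all hold. The sketch below is illustrative only: the `Rule` class and `rule_violated` helper are hypothetical names, and the `observed` dictionary stands in for per-condition judgments that an MLLM would produce.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Rule:
    text: str
    preconditions: List[str]

def rule_violated(rule: Rule, check: Callable[[str], bool]) -> bool:
    """Treat the rule as violated only if every precondition holds.

    `all` short-circuits, so checking stops at the first failed precondition.
    """
    return all(check(p) for p in rule.preconditions)

rule = Rule(
    text="Should not depict any people whose bodies are on fire",
    preconditions=["People are visible", "Bodies are on fire"],
)

# Stand-in for MLLM answers about one image (assumed values).
observed = {"People are visible": True, "Bodies are on fire": False}
violated = rule_violated(rule, lambda p: observed[p])
```

Here `violated` is `False` because the second precondition does not hold, even though people are visible.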

Debiased token probability analysis is another notable feature. By comparing token probabilities with and without image tokens, the framework identifies and reduces biases that stem from language priors. This lowers the likelihood of errors such as treating background elements as violations.
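The comparison of token probabilities with and without the image can be sketched as a simple difference. This is a simplified assumption about the scoring, not the paper's exact formula: the logit pairs are made-up values for the "yes" and "no" answer tokens, and `debiased_score` is a hypothetical helper.

```python
import math

def yes_probability(logit_yes: float, logit_no: float) -> float:
    """Softmax probability of the 'yes' token over the two answer tokens."""
    m = max(logit_yes, logit_no)  # subtract the max for numerical stability
    e_yes = math.exp(logit_yes - m)
    e_no = math.exp(logit_no - m)
    return e_yes / (e_yes + e_no)

def debiased_score(with_image, without_image) -> float:
    """Difference between the 'yes' probability with and without image tokens.

    A value near zero suggests the answer is driven mostly by the language
    prior rather than by the image content.
    """
    return yes_probability(*with_image) - yes_probability(*without_image)

# Assumed (logit_yes, logit_no) pairs for the same question.
score = debiased_score(with_image=(2.0, 0.0), without_image=(1.5, 0.0))
```

In this toy case the model leans toward "yes" even without the image, so the image contributes only a small positive shift.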

The cascaded reasoning mechanism serves as a fallback for low-confidence cases. It applies step-by-step chain-of-thought reasoning to borderline images and provides detailed justifications for its decisions.
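The cascade can be sketched as a confidence gate: accept the fast judgment when the score is clearly high or low, and call the slower reasoning step otherwise. The thresholds `low` and `high`, the function name, and the stub reasoner are assumptions for illustration, not values from the paper.

```python
from typing import Callable, Tuple

def cascaded_judgment(
    score: float,
    deep_reasoner: Callable[[], bool],
    low: float = -0.05,
    high: float = 0.05,
) -> Tuple[bool, str]:
    """Return (violated, stage) using the fast score when it is confident.

    `deep_reasoner` is only invoked for scores strictly between `low` and `high`.
    """
    if score >= high:
        return True, "token-probability"
    if score <= low:
        return False, "token-probability"
    return deep_reasoner(), "chain-of-thought"

calls = []

def stub_reasoner() -> bool:
    """Stand-in for a chain-of-thought MLLM call (assumed to answer 'violated')."""
    calls.append("called")
    return True

confident = cascaded_judgment(0.30, stub_reasoner)
borderline = cascaded_judgment(0.01, stub_reasoner)
```

Passing the reasoner as a callable keeps the expensive step lazy, so it runs only for the borderline case.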

Experimental Results and Insights

CLUE’s effectiveness has been validated through extensive testing on various MLLM architectures, including InternVL2-76B, Qwen2-VL-7B-Instruct, and LLaVA-v1.6-34B. Key findings include:

- Accuracy and Recall: CLUE achieved 95.9% recall and 94.8% accuracy with InternVL2-76B, outperforming existing methods.

- Efficiency: The relevance scanning module filtered out 67% of irrelevant rules while retaining 96.6% of ground-truth violated rules, significantly improving computational efficiency.

- Generalizability: Unlike fine-tuned models, CLUE performed well across diverse safety guidelines, highlighting its scalability.

The results also highlight the importance of constitution objectification and debiased token probability analysis. Objectified rules achieved 98.0% accuracy compared to 74.0% for their original counterparts, underlining the value of clear and measurable criteria. Similarly, debiasing improved overall judgment accuracy, with an F1-score of 0.879 for the InternVL2-8B-AWQ model.

Conclusion

CLUE addresses limitations of traditional image safety methods by leveraging MLLMs. By transforming subjective rules into objective criteria, filtering irrelevant rules, and applying cascaded reasoning, it offers a scalable approach to content moderation. Its reported accuracy and its adaptability to different safety guidelines make it a notable step toward managing the challenges of AI-generated content.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Meta AI Introduces CLUE (Constitutional MLLM JUdgE): An AI Framework Designed to Address the Shortcomings of Traditional Image Safety Systems appeared first on MarkTechPost.
