Large language models (LLMs) such as GPT-4 and PaLM, along with assistants built on them such as Bard and Copilot, have had a major impact on natural language processing (NLP). They can generate text, solve problems, and carry out conversations with remarkable accuracy. However, they also come with significant challenges. These models require vast computational resources, which makes them expensive to train and deploy and keeps many smaller businesses and individual developers from fully benefiting. Their energy consumption also raises environmental concerns, and their dependence on advanced infrastructure limits accessibility further, widening the gap between well-funded organizations and others trying to innovate.

What are Small Language Models (SLMs)?

Small Language Models (SLMs) are a more practical and efficient alternative to LLMs for many applications. They typically have from a few million to a few billion parameters, compared with the hundreds of billions found in the largest models. SLMs usually target specific tasks, striking a balance between performance and resource consumption. This design makes them accessible and cost-effective, giving organizations a way to use NLP without the heavy demands of LLMs. You can explore more details in IBM’s analysis.

Technical Details and Benefits

SLMs rely on techniques such as model compression, knowledge distillation, and transfer learning to achieve their efficiency. Model compression reduces a model's size, for example by pruning weights that contribute little to its output or by storing weights at lower numeric precision. Knowledge distillation trains a smaller model (the student) to imitate the outputs of a larger one (the teacher), capturing much of the teacher's knowledge in a compact form. Transfer learning lets developers take a pre-trained SLM and fine-tune it for a specific task, cutting down on compute and data requirements.
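To make two of these techniques concrete, here is a minimal, framework-free Python sketch. `magnitude_prune` illustrates one simple form of model compression (unstructured magnitude pruning on a flat list of weights), and `distillation_loss` illustrates the soft-target loss commonly used for knowledge distillation, following the formulation in Hinton et al. (2015). The function names, the toy list-based representation, and the default `temperature` and `alpha` values are illustrative assumptions, not details of any particular SLM; real training code applies the same ideas to tensors in a deep learning framework.

```python
import math


def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    Toy unstructured magnitude pruning on a flat list standing in for a weight tensor.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Rank indices by absolute value and mark the k smallest for removal
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max to avoid overflow in exp
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Soft-target knowledge-distillation loss for a single example.

    Blends KL(teacher || student) on temperature-softened distributions
    with ordinary cross-entropy against the true label.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Hard-label cross-entropy uses the student's un-softened (T=1) distribution
    ce = -math.log(softmax(student_logits)[label])
    # Scaling the soft term by T^2 keeps its gradient magnitude comparable
    # as the temperature changes
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -0.9, 0.01, 0.2]
    print(magnitude_prune(w, 0.5))  # [0.8, 0.0, 0.3, -0.9, 0.0, 0.0]
    teacher = [3.0, 1.0, 0.2]
    student = [2.5, 1.2, 0.1]
    print(round(distillation_loss(student, teacher, label=0), 4))
```

Pruning half of the six example weights zeroes the three with the smallest magnitudes. In the distillation loss, the KL term vanishes when the student's logits match the teacher's, leaving only the hard-label cross-entropy; `alpha` controls how much the student learns from the teacher versus the true labels.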

Why Consider SLMs?

- Cost Efficiency: Lower computational needs mean reduced operational costs, making SLMs ideal for smaller budgets.

- Energy Savings: By consuming less energy, SLMs align with the push for environmentally friendly AI.

- Accessibility: They make advanced NLP capabilities available to smaller organizations and individuals.

- Focus: Because they are tailored to specific tasks, fine-tuned SLMs can match or even outperform larger general-purpose models in specialized use cases.

Examples of SLMs

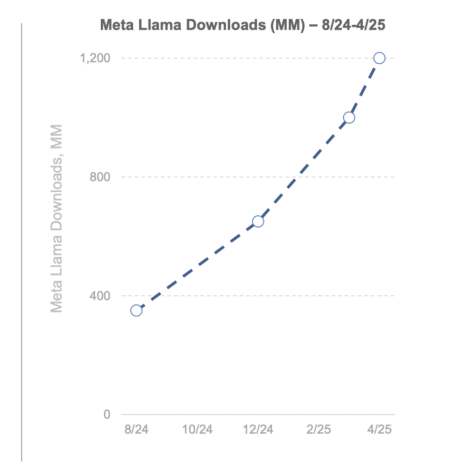

- Llama 3 8B (Meta)

- Qwen2 0.5B, 1.5B, and 7B (Alibaba)

- Gemma 2 9B (Google)

- Gemma 2B and 7B (Google)

- Mistral 7B (Mistral AI)

- Gemini Nano 1.8B and 3.25B (Google)

- OpenELM 270M, 450M, 1.1B, and 3B (Apple)

- Phi-4 (Microsoft)

- ...and many more.
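One reason smaller models are cheaper to run is simple arithmetic: the memory needed just to hold a model's weights is roughly its parameter count times the bytes stored per parameter. The sketch below applies that rule of thumb to several of the models listed above. It assumes the nominal sizes in the model names (actual parameter counts differ somewhat), adds an illustrative 200B-parameter entry as a stand-in for the much larger models, and ignores activations, the KV cache, and runtime overhead, so real memory use will be higher.

```python
# Nominal parameter counts taken from the model names (actual counts differ slightly).
# The 200B entry is a hypothetical stand-in for a large LLM, not a specific model.
MODELS = {
    "Qwen2 0.5B": 0.5e9,
    "Gemma 2B": 2e9,
    "Mistral 7B": 7e9,
    "Llama 3 8B": 8e9,
    "Gemma 2 9B": 9e9,
    "Illustrative 200B LLM": 200e9,
}

# Bytes needed to store one parameter at each numeric precision
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, precision):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Ignores activations, KV cache, and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n in MODELS.items():
        row = ", ".join(f"{p}: {weight_memory_gb(n, p):.1f} GB" for p in BYTES_PER_PARAM)
        print(f"{name:<22} {row}")
```

By this estimate, a 7B-parameter model needs about 14 GB for its weights at fp16 and about 3.5 GB at 4-bit precision, small enough for a single consumer GPU, while the illustrative 200B model needs about 400 GB at fp16 and therefore multiple data-center accelerators.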

Results, Data, and Insights

SLMs have demonstrated their value across a range of applications. In customer service, for instance, SLM-powered platforms such as those from Aisera are delivering faster, more cost-effective responses. According to a DataCamp article, SLMs can achieve up to 90% of the performance of LLMs on tasks such as text classification and sentiment analysis while using half the resources.
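As a rough worked example of what the DataCamp figures imply, take "up to 90% of the performance" and "half the resources" at face value and treat performance per unit of resource as the efficiency measure (that choice of measure is an assumption made here for illustration):

$$
\text{relative efficiency} = \frac{P_{\text{SLM}} / P_{\text{LLM}}}{R_{\text{SLM}} / R_{\text{LLM}}} = \frac{0.9}{0.5} = 1.8
$$

where $P$ is task performance and $R$ is resource use. In this best case, the SLM delivers about 1.8 times as much performance per unit of compute as the LLM it is compared with.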

In healthcare, SLMs fine-tuned on medical datasets have been particularly effective at identifying conditions from patient records. A Medium article by Nagesh Mashette highlights how SLMs streamline document summarization in industries such as law and finance, significantly reducing processing times.

SLMs are also proving useful in cybersecurity. According to Splunk’s case studies, they have been used for log analysis, providing real-time insights with minimal latency.

Conclusion

Small Language Models are proving to be an efficient and accessible alternative to their larger counterparts. They address many of the challenges posed by LLMs by being resource-efficient, more environmentally sustainable, and task-focused. Techniques such as model compression and transfer learning help these smaller models remain effective across a range of applications, from customer support to healthcare and cybersecurity. As Zapier’s blog suggests, the future of AI may well lie in optimizing smaller models rather than always building bigger ones. SLMs show that innovation does not require massive infrastructure: it can come from doing more with less.

The post What are Small Language Models (SLMs)? appeared first on MarkTechPost.