The rise of multimodal applications has highlighted the importance of instruction data for training multimodal language models (MLMs) to answer complex image-based queries. Current practices for generating such data rely on large language models (LLMs) or MLMs, which, despite their effectiveness, face several challenges: high costs, licensing restrictions, and susceptibility to hallucinations, that is, generating inaccurate or unreliable content. The generation process is also often opaque, which makes outputs hard to customize or interpret and limits both scalability and reliability. Visual instruction data is what enables MLMs to respond to user queries about input images, yet existing methods for collecting and generating it remain constrained by these issues.

Recent MLMs such as LLaVA and InstructBLIP have leveraged multimodal data to achieve strong results on vision-language tasks. However, these models often underperform on vision-specific tasks such as depth estimation and localization, largely because instruction data for such tasks is scarce. Most synthetic data methods rely on LLMs, MLMs, or diffusion models, while programmatic approaches, such as those used to build GQA and AGQA, focus primarily on evaluation. Newer approaches instead aim to generate adaptable single- and multi-image instruction data for training, addressing the limitations of existing techniques and broadening the scope of multimodal learning.

Researchers from the University of Washington, Salesforce Research, and the University of Southern California introduced PROVISION. This scalable programmatic system uses scene graphs as symbolic image representations to generate vision-centric instruction data. By combining human-written programs with automatically or manually created scene graphs, PROVISION ensures interpretability, accuracy, and scalability while avoiding hallucinations and licensing constraints common in LLM/MLM-driven methods. The system generates over 10 million data points (PROVISION-10M) from Visual Genome and DataComp, covering diverse tasks like object, attribute, and depth-based queries. This data improves MLM performance, yielding up to 8% gains on benchmarks like CVBench, QBench2, and Mantis-Eval across pretraining and fine-tuning stages.
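To make the idea of "scene graphs plus human-written programs" concrete, here is a minimal sketch. The schema (`SceneObject`, `SceneGraph`) and the `relation_questions` program are hypothetical illustrations, not PROVISION's actual code; the point is that the answer is read directly from the symbolic graph, so it is only as accurate as the graph and cannot be invented by a generative model.

```python
from dataclasses import dataclass, field


@dataclass
class SceneObject:
    """One node of a scene graph: an object category plus its attributes."""
    obj_id: int
    name: str
    attributes: list[str] = field(default_factory=list)


@dataclass
class SceneGraph:
    """Symbolic image representation: objects (nodes) and relations (edges)."""
    objects: dict[int, SceneObject]
    # Each relation is (subject_id, predicate, object_id), e.g. (0, "riding", 1).
    relations: list[tuple[int, str, int]]


def relation_questions(graph: SceneGraph) -> list[tuple[str, str]]:
    """A hypothetical hand-written program: turn each relation edge into a QA pair.

    The answer comes straight from the graph, so correctness depends only on
    the scene graph's quality, not on a model's generation.
    """
    pairs = []
    for subj_id, predicate, obj_id in graph.relations:
        subj = graph.objects[subj_id].name
        obj = graph.objects[obj_id].name
        pairs.append((f"What is the {subj} {predicate}?", obj))
    return pairs


# Toy graph for illustration only (not taken from Visual Genome).
graph = SceneGraph(
    objects={
        0: SceneObject(0, "man", ["smiling"]),
        1: SceneObject(1, "horse", ["brown"]),
    },
    relations=[(0, "riding", 1)],
)
print(relation_questions(graph))  # → [('What is the man riding?', 'horse')]
```

Because each program is ordinary code, it can be inspected, edited, or extended, which is the interpretability and customizability argument the article makes.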

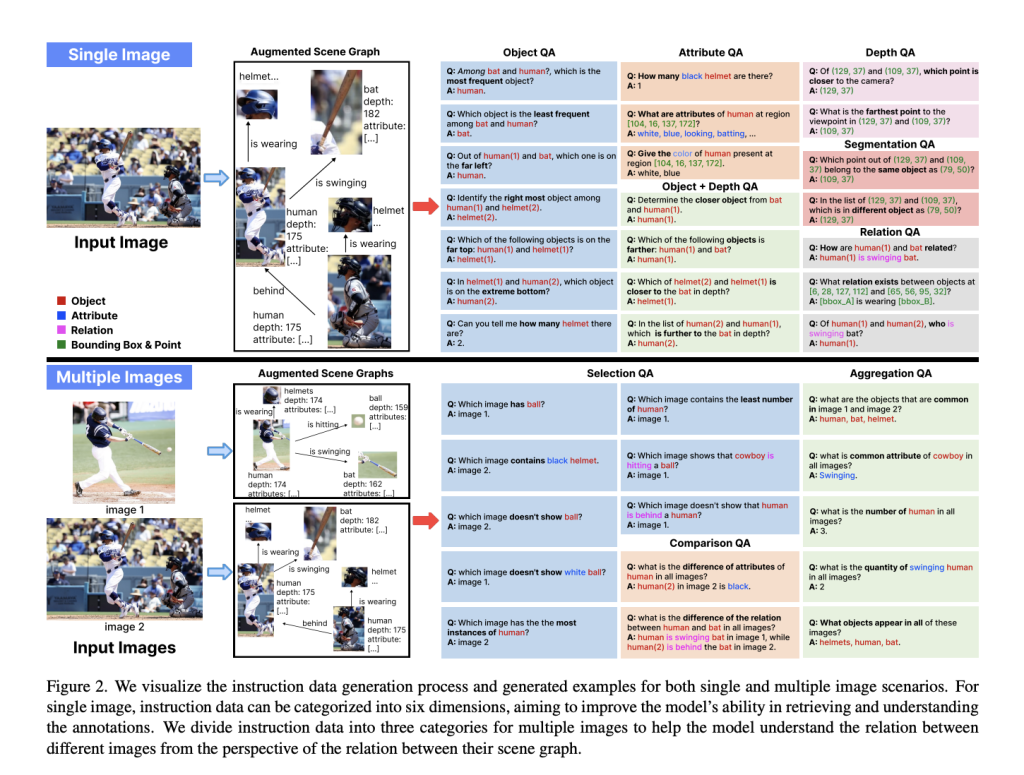

The study introduces a method for generating vision-centric instruction data from augmented scene graphs, i.e., scene graphs enriched with depth and segmentation labels. For single images, 24 generators create diverse question-answer pairs from predefined templates, covering object attributes, relations, and spatial depth. Multi-image generators support reasoning tasks such as comparison and aggregation across several scene graphs. When a scene graph has to be created automatically, the generation pipeline combines object detection (YOLO-World), segmentation (SAM-2), attribute detection (fine-tuned CoCa and LLaVA-1.5), relation extraction (Osprey), and depth estimation (Depth Anything V2). The modular framework lets users customize generators to create diverse data for visual reasoning and multimodal AI applications.
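The two generator families described above can be sketched as follows: a single-image generator that uses per-object depth labels, and a multi-image generator that aggregates counts across scene graphs. The templates, the scalar per-object depth (with smaller meaning closer), and the function names are all assumptions made for illustration; they are not the paper's actual generators.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneObject:
    """A scene-graph node augmented with a depth label."""
    name: str
    # Assumption: one scalar depth per object (for example, the mean of a
    # depth map over the object's segmentation mask); smaller means closer.
    depth: float


# Hypothetical templates; the paper's actual wording may differ.
DEPTH_TEMPLATE = "Which is closer to the camera, the {a} or the {b}?"
COUNT_TEMPLATE = "Which image contains the most {name}s?"


def depth_question(objects, rng):
    """Single-image generator: ask which of two sampled objects is closer.

    A real generator would also need to disambiguate objects sharing a name.
    """
    a, b = rng.sample(objects, 2)
    if a.depth == b.depth:
        return None  # no unique answer, so skip this sample
    answer = a.name if a.depth < b.depth else b.name
    return DEPTH_TEMPLATE.format(a=a.name, b=b.name), answer


def count_comparison_question(images, name):
    """Multi-image generator: aggregate object counts across scene graphs."""
    counts = [sum(obj.name == name for obj in objects) for objects in images]
    best = max(counts)
    if counts.count(best) > 1:
        return None  # tie across images, so skip this sample
    return COUNT_TEMPLATE.format(name=name), f"Image {counts.index(best) + 1}"


rng = random.Random(0)
image1 = [SceneObject("cup", 1.2), SceneObject("table", 2.5)]
image2 = [SceneObject("cup", 0.8), SceneObject("cup", 1.0)]
print(depth_question(image1, rng))
print(count_comparison_question([image1, image2], "cup"))
```

Returning `None` for ambiguous cases reflects a general design choice for programmatic generators: it is safer to drop a sample than to emit a question without a single correct answer.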

The experiments synthesize instruction data and measure its effect on model performance. Data built from manually annotated scene graphs outperforms data built from model-generated ones, and both data format (short answer vs. multiple choice) and data scale significantly affect outcomes. Incorporating synthesized data in both the pretraining and fine-tuning stages gives the best results. PROVISION-10M was constructed from Visual Genome's manually annotated scene graphs and from scene graphs generated for high-resolution DataComp images, producing over 10 million instruction samples. These were evaluated in augmentation and replacement settings across various benchmarks, showing that scene graphs, whether human-annotated or automatically generated, are an effective source of useful instructions.
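The data-format and data-mixing choices mentioned above can be illustrated with a small sketch. It assumes that "augmentation" means adding synthetic samples on top of an existing training set and "replacement" means swapping synthetic samples in while keeping the total size fixed; the helper names and the lettered multiple-choice layout are hypothetical, not the paper's exact setup.

```python
import random
import string


def to_multiple_choice(question, answer, distractors, rng):
    """Turn a short-answer QA pair into a lettered multiple-choice item."""
    options = [answer, *distractors]
    rng.shuffle(options)
    letters = string.ascii_uppercase[: len(options)]
    lines = [question] + [f"({letter}) {opt}" for letter, opt in zip(letters, options)]
    return "\n".join(lines), letters[options.index(answer)]


def augment(base, synthetic):
    """Assumed augmentation setting: keep all base samples, add synthetic ones."""
    return base + synthetic


def replace(base, synthetic, rng):
    """Assumed replacement setting: swap synthetic samples in, same total size."""
    k = min(len(base), len(synthetic))
    kept = rng.sample(base, len(base) - k)
    return kept + synthetic[:k]


rng = random.Random(0)
prompt, key = to_multiple_choice("What is the man riding?", "horse", ["bicycle", "car"], rng)
print(prompt)
print("answer:", key)

base = [f"base-{i}" for i in range(10)]
synthetic = [f"synth-{i}" for i in range(3)]
print(len(augment(base, synthetic)), len(replace(base, synthetic, rng)))  # 13 10
```

Keeping the total size fixed in the replacement setting separates the effect of data quality from the effect of simply training on more samples.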

In conclusion, the PROVISION system generates vision-centric instruction data for MLMs using scene graph representations and human-written programs. Applied to Visual Genome and DataComp, it creates PROVISION-10M, a dataset with over 10 million instructions that improves MLM performance during pretraining and instruction tuning. The system uses 24 single-image and 14 multi-image instruction generators, producing diverse queries about objects, attributes, and relationships. PROVISION achieves performance gains of up to 8% on benchmarks such as CVBench and Mantis-Eval. Its limitations include dependence on scene graph quality and on human-written programs; future enhancements may use LLMs to improve automation and scalability.

All credit for this research goes to the researchers of this project.

FREE UPCOMING AI WEBINAR (JAN 15, 2025): Boost LLM Accuracy with Synthetic Data and Evaluation Intelligence–Join this webinar to gain actionable insights into boosting LLM model performance and accuracy while safeguarding data privacy.


The post ProVision: A Scalable Programmatic Approach to Vision-Centric Instruction Data for Multimodal Language Models appeared first on MarkTechPost.
