Time-series forecasting plays a crucial role in various domains, including finance, healthcare, and climate science. However, achieving accurate predictions remains a significant challenge. Traditional methods like ARIMA and exponential smoothing often struggle to generalize across domains or handle the complexities of high-dimensional data. Contemporary deep learning approaches, while promising, frequently require large labeled datasets and substantial computational resources, making them inaccessible to many organizations. Additionally, these models often lack the flexibility to handle varying time granularities and forecast horizons, further limiting their applicability.

Google AI has released TimesFM-2.0, a new foundation model for time-series forecasting, now available on Hugging Face in both JAX and PyTorch implementations. The release improves accuracy and extends the maximum context length to 2,048 time points, four times that of the previous version. TimesFM-2.0 builds on its predecessor with architectural enhancements and a more diverse training corpus, both aimed at strong performance across a range of datasets.

The model’s open availability on Hugging Face underscores Google AI’s effort to support collaboration within the AI community. Researchers and developers can readily fine-tune or deploy TimesFM-2.0, facilitating advancements in time-series forecasting practices.

Technical Innovations and Benefits

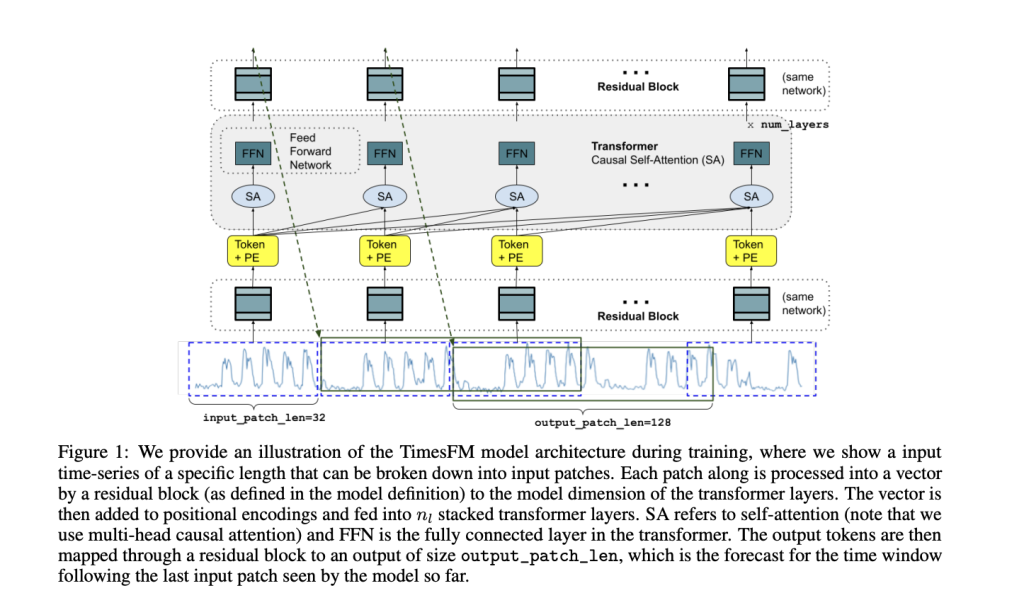

TimesFM-2.0 incorporates several advancements that enhance its forecasting capabilities. Its decoder-only architecture is designed to accommodate varying history lengths, prediction horizons, and time granularities. Techniques like input patching and patch masking enable efficient training and inference, while also supporting zero-shot forecasting—a rare feature among forecasting models.
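To make input patching and masking concrete, here is a minimal sketch in plain Python. It is not the TimesFM implementation: the function name `make_patches`, the left-padding scheme, and the patch length of 32 are illustrative assumptions. The idea it shows is that a series is cut into fixed-length patches, each of which becomes one input token, and a mask marks padded positions so that histories of any length fit the same layout.

```python
from typing import List, Tuple


def make_patches(
    series: List[float], patch_len: int = 32
) -> Tuple[List[List[float]], List[List[int]]]:
    """Split a series into fixed-length patches, left-padding the first one.

    Returns (patches, masks). A mask value of 1 marks a padded position
    that a model should ignore; 0 marks a real observation.
    patch_len=32 is an illustrative default, not a confirmed setting.
    """
    if patch_len <= 0:
        raise ValueError("patch_len must be positive")
    # Number of zeros needed so the length becomes a multiple of patch_len.
    pad = (-len(series)) % patch_len
    padded = [0.0] * pad + list(series)
    mask = [1] * pad + [0] * len(series)
    patches = [padded[i:i + patch_len] for i in range(0, len(padded), patch_len)]
    masks = [mask[i:i + patch_len] for i in range(0, len(mask), patch_len)]
    return patches, masks


# A 70-point history becomes 3 patches of 32; the first 26 slots are padding.
patches, masks = make_patches([float(t) for t in range(70)], patch_len=32)
print(len(patches), sum(masks[0]))
```

Because the model attends over patches rather than individual time points, the sequence it processes is shorter by a factor of the patch length, which is where the efficiency gain comes from.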

One of its key features is the ability to predict longer horizons by generating larger output patches, reducing the computational overhead of autoregressive decoding. The model is trained on a rich dataset comprising real-world data from sources such as Google Trends and Wikimedia pageviews, as well as synthetic datasets. This diverse training data equips the model to recognize a broad spectrum of temporal patterns. Pretraining on over 100 billion time points enables TimesFM-2.0 to deliver performance comparable to state-of-the-art supervised models, often without the need for task-specific fine-tuning.
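The saving from larger output patches is simple arithmetic: each decoding step emits one output patch, so the number of autoregressive steps is the horizon divided by the output patch length, rounded up. The sketch below illustrates this; the helper name `autoregressive_steps` and the patch lengths tried are assumptions chosen for illustration, not values confirmed by this article.

```python
import math


def autoregressive_steps(horizon: int, output_patch_len: int) -> int:
    """Number of decoding steps needed to cover `horizon` future points
    when each step produces `output_patch_len` points."""
    if horizon < 0 or output_patch_len <= 0:
        raise ValueError("horizon must be >= 0 and output_patch_len > 0")
    return math.ceil(horizon / output_patch_len)


# For a 512-step horizon, patch lengths 8, 32 and 128 need 64, 16 and 4 steps.
for patch_len in (8, 32, 128):
    print(patch_len, autoregressive_steps(512, patch_len))
```

Fewer steps also means fewer chances for errors to compound, since each step conditions on the model's own earlier outputs.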

With 500 million parameters, up from 200 million in the original TimesFM, the model balances computational efficiency and forecasting accuracy, making it practical for deployment in various scenarios.

Results and Insights

Empirical evaluations highlight the model’s strong performance. In zero-shot settings, TimesFM-2.0 consistently performs well compared to traditional and deep learning baselines across diverse datasets. For example, on the Monash archive—a collection of 30 datasets covering various granularities and domains—TimesFM-2.0 achieved superior results in terms of scaled mean absolute error (MAE), outperforming models like N-BEATS and DeepAR.
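For readers unfamiliar with the metric, the sketch below shows one common way to compute a scaled MAE. It assumes the scaling divides a model's MAE by the MAE of a naive baseline on the same dataset, and that per-dataset scores are combined with a geometric mean; the article does not spell out the exact procedure, so treat both choices and all function names as assumptions.

```python
import math
from typing import Sequence


def mae(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean absolute error between two equal-length sequences."""
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def scaled_mae(
    actual: Sequence[float],
    forecast: Sequence[float],
    baseline_forecast: Sequence[float],
) -> float:
    """Model MAE divided by a baseline's MAE; values below 1 beat the baseline."""
    return mae(actual, forecast) / mae(actual, baseline_forecast)


def geometric_mean(values: Sequence[float]) -> float:
    """Geometric mean, used here to aggregate scores across datasets."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


actual = [10.0, 12.0, 14.0, 16.0]
model_forecast = [11.0, 12.0, 13.0, 17.0]
naive_forecast = [9.0] * 4  # repeat the last observed value (assumed to be 9)
score = scaled_mae(actual, model_forecast, naive_forecast)
print(score)
```

Scaling makes errors comparable across datasets whose values differ by orders of magnitude, which matters when a single number summarizes 30 datasets.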

On the Darts benchmarks, which include univariate datasets with complex seasonal patterns, TimesFM-2.0 delivered competitive results, often matching the top-performing methods. Similarly, evaluations on Informer datasets, such as electricity transformer temperature datasets, demonstrated the model’s effectiveness in handling long horizons (e.g., 96 and 192 steps).

TimesFM-2.0 tops the GIFT-Eval leaderboard on point and probabilistic forecasting accuracy metrics.

Ablation studies underscored the impact of specific design choices. Increasing the output patch length, for instance, reduced the number of autoregressive steps, improving efficiency without sacrificing accuracy. The inclusion of synthetic data proved valuable in addressing underrepresented granularities, such as quarterly and yearly datasets, further enhancing the model’s robustness.
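As a rough picture of what synthetic data for a sparse granularity could look like, the sketch below generates a series from a linear trend, a sinusoidal seasonal component, and autoregressive noise. This is not the authors' generator: the function `synthetic_series`, its components, and every parameter value are hypothetical and chosen only for illustration.

```python
import math
import random
from typing import List


def synthetic_series(
    length: int,
    period: int,
    slope: float = 0.05,
    ar_coef: float = 0.6,
    noise_std: float = 0.2,
    seed: int = 0,
) -> List[float]:
    """Trend + seasonality + AR(1) noise; all defaults are illustrative."""
    rng = random.Random(seed)  # local generator keeps results reproducible
    series: List[float] = []
    noise = 0.0
    for t in range(length):
        # AR(1) noise: each value depends on the previous noise value.
        noise = ar_coef * noise + rng.gauss(0.0, noise_std)
        seasonal = math.sin(2.0 * math.pi * t / period)
        series.append(slope * t + seasonal + noise)
    return series


# Ten years of quarterly data: 40 points with a 4-step seasonal cycle.
quarterly = synthetic_series(length=40, period=4)
print(len(quarterly))
```

Varying the period, slope, and noise settings yields many distinct series cheaply, which is one way a training corpus can cover granularities that are scarce in real-world data.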

Conclusion

Google AI’s release of TimesFM-2.0 represents a thoughtful advance in time-series forecasting. By combining scalability, accuracy, and adaptability, the model addresses common forecasting challenges with a practical and efficient solution. Its open-source availability invites the research community to explore its potential, fostering further innovation in this domain. Whether used for financial modeling, climate predictions, or healthcare analytics, TimesFM-2.0 equips organizations to make informed decisions with confidence and precision.

Check out the Paper and Model on Hugging Face. All credit for this research goes to the researchers of this project.

The post Google AI Just Released TimesFM-2.0 (JAX and Pytorch) on Hugging Face with a Significant Boost in Accuracy and Maximum Context Length appeared first on MarkTechPost.
