Artificial intelligence research has steadily advanced toward creating systems capable of complex reasoning. Multimodal large language models (MLLMs) represent a significant development in this journey, combining the ability to process text and visual data. These systems can address intricate challenges like mathematical problems or reasoning through diagrams. By enabling AI to bridge the gap between modalities, MLLMs expand their application scope, offering new possibilities in education, science, and data analysis.

One of the primary challenges in developing these systems is integrating visual and textual reasoning seamlessly. Traditional models typically excel at processing either text or images but fall short when asked to combine the two modalities for reasoning. This limitation hinders their performance in multimodal tasks, particularly in scenarios requiring extended and deliberate thought processes, often termed “slow thinking.” Addressing this issue is crucial for advancing MLLMs toward practical applications where multimodal reasoning is essential.

Current approaches to enhancing reasoning capabilities in MLLMs are rooted in two broad strategies. The first involves using structured search methods, such as Monte Carlo tree search, guided by reward models to refine the reasoning path. The second focuses on training LLMs with long-form reasoning instructions, often structured as chains of thought (CoT). However, these methods have primarily concentrated on text-based tasks, leaving multimodal scenarios relatively underexplored. Although a few commercial systems like OpenAI’s o1 model have demonstrated promise, their proprietary nature limits access to the methodologies, creating a gap for public research.

Researchers from Renmin University of China, Baichuan AI, and BAAI have introduced Virgo, a model designed to enhance slow-thinking reasoning in multimodal contexts. Virgo was developed by fine-tuning the Qwen2-VL-72B-Instruct model, leveraging a straightforward yet innovative approach. This involved training the MLLM using textual long-thought data, an unconventional choice to transfer reasoning capabilities across modalities. This method distinguishes Virgo from prior efforts, as it focuses on the inherent reasoning strengths of the LLM backbone within the MLLM.

The methodology behind Virgo’s development is deliberate. The researchers curated a dataset comprising 5,000 long-thought instruction examples, primarily from mathematics, science, and coding. Each example was formatted to include a structured reasoning process followed by a final solution, giving the model a consistent target format during training. The researchers then fine-tuned only the parameters of the LLM and the cross-modal connectors, leaving the visual encoder untouched. This preserved the visual processing capabilities of the base model while enhancing its reasoning performance. They also explored self-distillation, using the fine-tuned model to generate visual long-thought data that could further refine Virgo’s multimodal reasoning capabilities.
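To make the two ideas in this paragraph concrete, here is a minimal Python sketch of (1) a training target that places the reasoning process before the final solution and (2) a rule that keeps the visual encoder frozen while the LLM and connector are trained. The delimiter strings, the `LongThoughtExample` class, and the parameter-name prefixes are all hypothetical; the article does not describe Virgo's actual data format or module names.

```python
from dataclasses import dataclass

# Hypothetical delimiters; the article does not specify the real markers.
THOUGHT_OPEN, THOUGHT_CLOSE = "<thought>", "</thought>"
SOLUTION_OPEN, SOLUTION_CLOSE = "<solution>", "</solution>"


@dataclass
class LongThoughtExample:
    """One text-only instruction example (illustrative structure)."""
    question: str
    thought: str
    solution: str


def format_target(example: LongThoughtExample) -> str:
    """Build the training target: reasoning process first, then the solution."""
    return (
        f"{THOUGHT_OPEN}\n{example.thought.strip()}\n{THOUGHT_CLOSE}\n"
        f"{SOLUTION_OPEN}\n{example.solution.strip()}\n{SOLUTION_CLOSE}"
    )


# Hypothetical name prefix for the component that stays frozen.
FROZEN_PREFIXES = ("visual_encoder.",)


def is_trainable(param_name: str) -> bool:
    """Train LLM and connector parameters; leave the visual encoder frozen."""
    return not param_name.startswith(FROZEN_PREFIXES)


if __name__ == "__main__":
    ex = LongThoughtExample("What is 2 + 3?", "Add the two numbers.", "5")
    print(format_target(ex))
    for name in ("llm.layers.0.weight", "connector.proj.weight",
                 "visual_encoder.blocks.0.weight"):
        print(name, "trainable" if is_trainable(name) else "frozen")
```

In a real training framework, a rule like `is_trainable` would typically be applied while iterating over the model's named parameters to decide which ones receive gradient updates.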

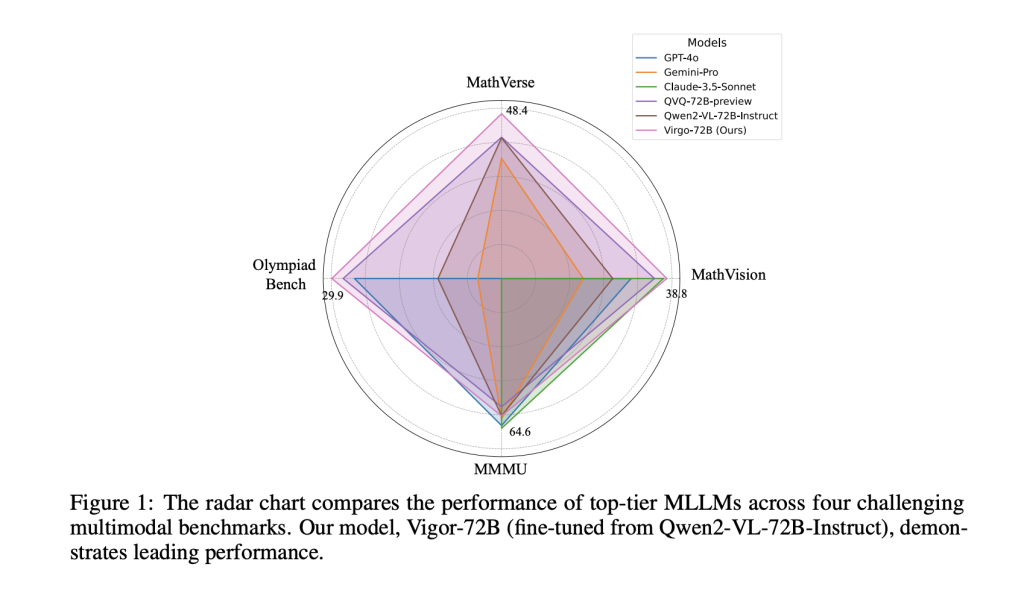

Virgo’s performance was evaluated across four challenging benchmarks: MathVerse, MathVision, OlympiadBench, and MMMU. These benchmarks included thousands of multimodal problems, testing the model’s reasoning ability over text and visual inputs. Virgo achieved strong results, outperforming several advanced models and rivaling commercial systems. For example, on MathVision, Virgo recorded 38.8% accuracy, surpassing many existing solutions. On OlympiadBench, one of the most demanding benchmarks, it achieved a 12.4% improvement over its base model, highlighting its capacity for complex reasoning. In addition, fine-tuning on textual data proved more effective at eliciting slow-thinking reasoning than fine-tuning on multimodal training data. This finding emphasizes the potential of leveraging textual instructions for enhancing multimodal systems.
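Figures like these come from simple arithmetic over per-problem correctness. The sketch below shows one way to compute benchmark accuracy and a gain over a base model. The article does not say whether the reported improvement is in absolute percentage points or relative to the base score, so the helper returns both. The function names and the sample inputs are illustrative and are not taken from the paper.

```python
def accuracy(predictions, references):
    """Percentage of predictions that exactly match the reference answers."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have equal length")
    if not references:
        raise ValueError("cannot compute accuracy on an empty benchmark")
    correct = sum(p == r for p, r in zip(predictions, references))
    return 100.0 * correct / len(references)


def improvement(base_acc, tuned_acc):
    """Return (absolute gain in points, relative gain in percent)."""
    absolute = tuned_acc - base_acc
    relative = 100.0 * absolute / base_acc if base_acc else float("inf")
    return absolute, relative


if __name__ == "__main__":
    # Made-up answers for illustration only.
    refs = ["a", "b", "c", "d"]
    preds = ["a", "b", "x", "d"]
    print(accuracy(preds, refs))
    print(improvement(20.0, 25.0))
```

The distinction matters when comparing models: a gain of 5 points on a 20% baseline is a 25% relative improvement, so the two readings of the same headline number can differ widely.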

The researchers further analyzed Virgo’s performance by breaking down results based on difficulty levels within the benchmarks. While Virgo showed consistent improvements in challenging tasks requiring extended reasoning, it experienced limited gains in simpler tasks, such as those in the MMMU benchmark. This insight underscores the importance of tailoring reasoning systems to the complexity of the problems they are designed to solve. Virgo’s results also revealed that textual reasoning data often outperformed visual reasoning instructions, suggesting that textual training can effectively transfer reasoning capabilities to multimodal domains.
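A difficulty breakdown of this kind can be sketched as a group-by over per-problem results. The example below assumes each result is a `(difficulty_label, is_correct)` pair. The labels and the records are hypothetical, and the article does not describe how the benchmarks define difficulty levels.

```python
from collections import defaultdict


def accuracy_by_difficulty(records):
    """Map each difficulty label to its accuracy in percent.

    records: iterable of (difficulty_label, is_correct) pairs.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for level, is_correct in records:
        totals[level] += 1
        hits[level] += int(is_correct)
    return {level: 100.0 * hits[level] / totals[level] for level in totals}


def gains(base, tuned):
    """Per-level gain in points for levels present in both breakdowns."""
    return {level: tuned[level] - base[level] for level in base if level in tuned}


if __name__ == "__main__":
    # Made-up results for illustration only.
    base = [("easy", True), ("easy", True), ("hard", False), ("hard", False)]
    tuned = [("easy", True), ("easy", True), ("hard", True), ("hard", False)]
    print(gains(accuracy_by_difficulty(base), accuracy_by_difficulty(tuned)))
```

Comparing the per-level gains, rather than a single aggregate score, is what reveals patterns such as larger improvements on harder problems.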

By demonstrating a practical and efficient approach to enhancing MLLMs, the researchers contributed significantly to the field of AI. Their work bridges the gap in multimodal reasoning and opens avenues for future research in refining these systems. Virgo’s success illustrates the transformative potential of leveraging long-thought textual data for training, offering a scalable solution for developing advanced reasoning models. With further refinement and exploration, this methodology could drive significant progress in multimodal AI research.

The post This AI Paper Introduces Virgo: A Multimodal Large Language Model for Enhanced Slow-Thinking Reasoning appeared first on MarkTechPost.