Large Language Models (LLMs) have significantly advanced artificial intelligence, particularly in natural language understanding and generation. However, these models encounter difficulties with complex reasoning tasks, especially those requiring multi-step, non-linear processes. While traditional Chain-of-Thought (CoT) approaches, which promote step-by-step reasoning, improve performance on simpler tasks, they often fall short in addressing more intricate problems. This shortcoming stems from CoT’s inability to fully capture the latent reasoning processes that underpin complex problem-solving.

To tackle these challenges, researchers from SynthLabs and Stanford have proposed Meta Chain-of-Thought (Meta-CoT), a framework designed to model the latent steps necessary for solving complex problems. Unlike classical CoT, which focuses on linear reasoning, Meta-CoT incorporates a structured approach inspired by cognitive science’s dual-process theory. This framework seeks to emulate deliberate, logical, and reflective thinking, often referred to as “System 2” reasoning.

Meta-CoT integrates instruction tuning, synthetic data generation, and reinforcement learning to help models internalize these reasoning processes. By doing so, it bridges the gap between conventional reasoning methods and the complexities of real-world problem-solving. The framework employs algorithms such as Monte Carlo Tree Search (MCTS) and A* search to generate synthetic data that reflects latent reasoning processes. This data, combined with process supervision, enables models to move beyond simplistic left-to-right token prediction and better approximate the true reasoning pathways required for complex tasks.
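The sketch below shows what "search-generated" training data can look like. It is a minimal sketch that assumes a toy domain in place of real math problems: reach a target integer using two hypothetical step types, `+3` and `*2`. The code runs A* and logs every node expansion. Whenever the search abandons one branch for another, it inserts a backtrack marker. The resulting linearized trace keeps the exploration and dead ends, not just the final path. That is the kind of artifact the article calls a Meta-CoT trace. The domain, the function names (`astar_trace`, `heuristic`, `OPS`) and the trace format are illustrative assumptions, not the researchers' pipeline.

```python
import heapq
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy "reasoning" domain: each step applies one of these operations.
OPS: Dict[str, Callable[[int], int]] = {"+3": lambda x: x + 3, "*2": lambda x: x * 2}


def heuristic(state: int, target: int) -> int:
    # Admissible and consistent: any non-goal state needs at least one more step.
    return 0 if state == target else 1


def astar_trace(
    start: int, target: int, max_state: int = 100
) -> Tuple[Optional[List[str]], List[str]]:
    """Run A* and return (solution_steps, linearized_search_trace)."""
    counter = 0  # tie-breaker so heapq never has to compare paths
    frontier = [(heuristic(start, target), counter, 0, start, [])]
    best_cost: Dict[int, int] = {start: 0}
    trace: List[str] = []
    prev_path: Optional[List[str]] = None

    while frontier:
        _, _, cost, state, path = heapq.heappop(frontier)
        if cost > best_cost.get(state, float("inf")):
            continue  # stale entry: a cheaper route to this state was found later
        # If this node does not extend the previously expanded path, the search backtracked.
        if prev_path is not None and path[:-1] != prev_path:
            trace.append(f"backtrack -> {' '.join(path)}")
        trace.append(f"expand {state} (g={cost})")
        prev_path = path

        if state == target:
            trace.append("goal reached")
            return path, trace

        for name, op in OPS.items():
            nxt = op(state)
            if nxt > max_state:
                continue  # prune states outside the toy search space
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                counter += 1
                f = new_cost + heuristic(nxt, target)
                heapq.heappush(frontier, (f, counter, new_cost, nxt, path + [name]))

    return None, trace


if __name__ == "__main__":
    solution, search_trace = astar_trace(1, 10)
    print("solution:", solution)
    print("\n".join(search_trace))
```

For example, `astar_trace(1, 10)` returns a three-step solution and a trace containing at least one backtrack. Under this reading, a model trained on such traces sees how the answer was searched for, not only the answer. In a realistic setting, a learned value function would replace the constant heuristic, and a model would propose the candidate steps.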

Key Components and Benefits

Meta-CoT incorporates three main components:

- Process Supervision: Models are trained on intermediate reasoning steps generated through structured search. This training provides explicit rewards for following reasoning processes, allowing iterative refinement of outputs until a correct solution is reached.

- Synthetic Data Generation: Using search algorithms like MCTS and A*, researchers generate Meta-CoT traces that mimic the hidden processes behind complex problem-solving. These traces enable models to internalize structured reasoning strategies.

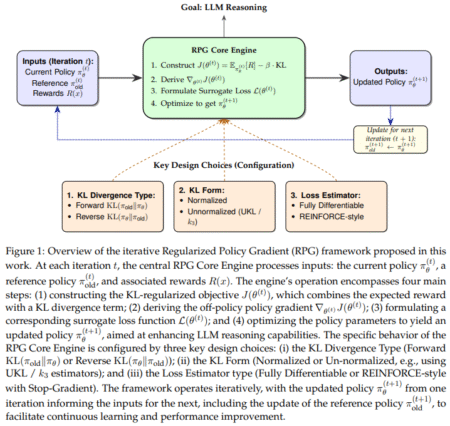

- Reinforcement Learning: After initial instruction tuning, models undergo reinforcement learning to fine-tune their ability to generate and verify Meta-CoT solutions. This stage aims to align the model's reasoning with the true data-generating process.
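Process supervision rewards individual intermediate steps, whereas outcome supervision rewards only the final answer. The toy example below contrasts the two. It is a minimal sketch under stated assumptions:

- Each step is a single arithmetic fact such as `2 * 3 = 6`.
- A rule-based checker (`verify_step`) stands in for the learned process reward model that a real system would use.
- `first_error` marks where an iterative refinement loop would resume.

All names here are hypothetical.

```python
import re
from typing import List, Optional

# Hypothetical step format: one arithmetic fact per step, e.g. "2 * 3 = 6".
STEP_RE = re.compile(r"^\s*(-?\d+)\s*([-+*])\s*(-?\d+)\s*=\s*(-?\d+)\s*$")


def verify_step(step: str) -> bool:
    """Rule-based stand-in for a learned process reward model."""
    m = STEP_RE.match(step)
    if m is None:
        return False  # unparseable steps get no credit
    a, op, b, claimed = int(m.group(1)), m.group(2), int(m.group(3)), int(m.group(4))
    actual = {"+": a + b, "-": a - b, "*": a * b}[op]
    return actual == claimed


def process_rewards(steps: List[str]) -> List[float]:
    """Dense signal: one reward per intermediate step."""
    return [1.0 if verify_step(s) else 0.0 for s in steps]


def outcome_reward(steps: List[str], expected: int) -> float:
    """Sparse signal: only checks the number claimed by the last step."""
    m = STEP_RE.match(steps[-1]) if steps else None
    return 1.0 if m is not None and int(m.group(4)) == expected else 0.0


def first_error(steps: List[str]) -> Optional[int]:
    """Index of the first failing step, i.e. where a refinement loop would resume."""
    for i, s in enumerate(steps):
        if not verify_step(s):
            return i
    return None


if __name__ == "__main__":
    # Question: what is 2 * 3 + 4?  Expected answer: 10.
    lucky = ["2 * 3 = 7", "7 + 4 = 10"]  # right final number, wrong reasoning
    print(outcome_reward(lucky, 10))  # 1.0
    print(process_rewards(lucky))     # [0.0, 0.0]
    print(first_error(lucky))         # 0
```

The "lucky" trace earns full outcome reward even though both of its steps are wrong. The step-level checks flag it immediately, and `first_error` points to the step where a retry would begin.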

This approach enables LLMs to address challenges that traditional CoT cannot, such as solving high-difficulty mathematical reasoning problems and logical puzzles. By formalizing reasoning as a latent variable process, Meta-CoT expands the range of tasks LLMs can handle.
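"Formalizing reasoning as a latent variable process" can be read as the standard latent-variable marginalization below. This is a generic formulation in our own notation, not necessarily the paper's exact equation. Here $q$ is the problem, $a$ is the written solution, and $z$ is the unobserved reasoning process that produced it, for example a search over candidate steps:

```latex
p(a \mid q) = \sum_{z} p(a \mid z, q)\, p(z \mid q)
```

Under this reading, a standard CoT solution corresponds to $a$ alone, and $z$ stays implicit. Meta-CoT, as the article describes it, aims to make $z$ explicit through search-generated traces so that the model can learn to produce it.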

Evaluation and Insights

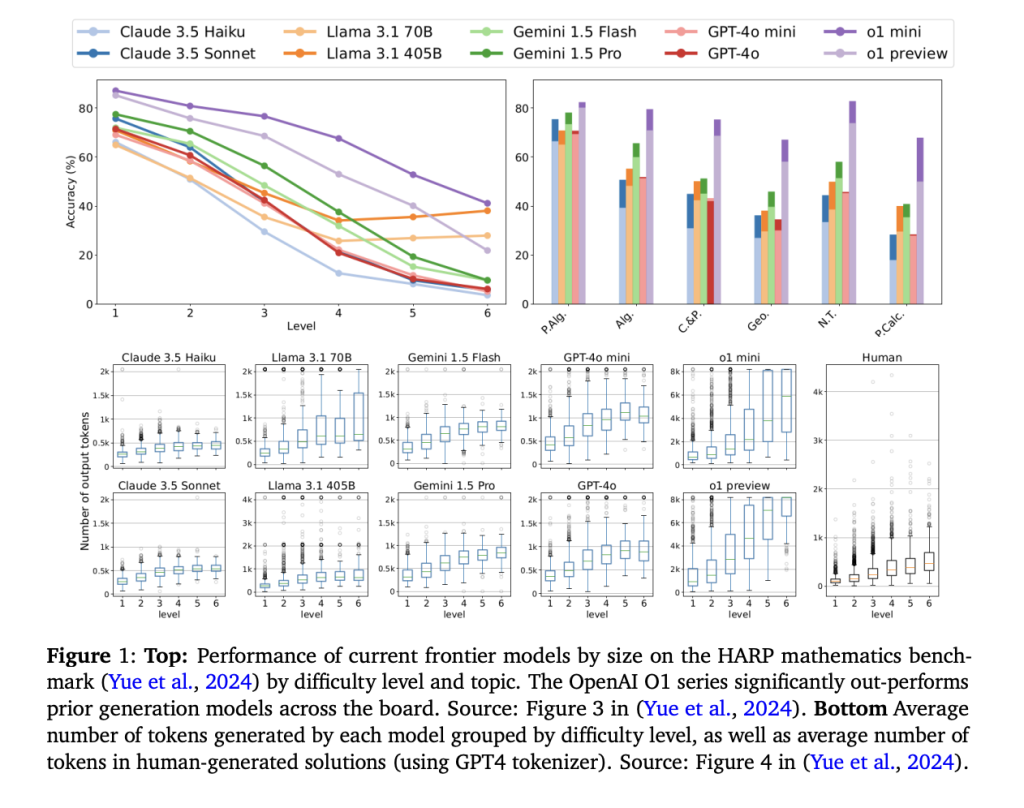

The researchers evaluated Meta-CoT on demanding benchmarks, including the Hendrycks MATH dataset and Olympiad-level reasoning tasks. The results highlight Meta-CoT’s effectiveness:

- Improved Accuracy: Models trained with Meta-CoT showed a 20-30% improvement in accuracy on advanced reasoning tasks compared to baseline CoT models.

- Scalability: As problem complexity increased, the performance gap between Meta-CoT and traditional CoT widened, demonstrating Meta-CoT’s capacity to handle computationally demanding tasks.

- Efficiency: Structured search strategies within Meta-CoT reduced inference time for complex problems, making it a practical solution for resource-constrained environments.

Experiments revealed that Meta-CoT helps LLMs internalize search processes, enabling self-correction and optimization of reasoning strategies. These capabilities mimic aspects of human problem-solving and mark a significant step forward in LLM development.

Conclusion

Meta-CoT offers a thoughtful and structured approach to enhancing the reasoning capabilities of LLMs. By modeling latent reasoning processes and incorporating advanced search techniques, it addresses the limitations of traditional CoT methods. The framework’s success in empirical evaluations underscores its potential to transform how LLMs approach complex tasks. As further refinements are made, Meta-CoT is poised to become a foundational element in developing next-generation AI systems capable of tackling intricate reasoning challenges in various domains, from mathematics to scientific discovery.

Check out the Paper. All credit for this research goes to the researchers of this project.

FREE UPCOMING AI WEBINAR (JAN 15, 2025): Boost LLM Accuracy with Synthetic Data and Evaluation Intelligence–Join this webinar to gain actionable insights into boosting LLM model performance and accuracy while safeguarding data privacy.

The post Researchers from SynthLabs and Stanford Propose Meta Chain-of-Thought (Meta-CoT): An AI Framework for Improving LLM Reasoning appeared first on MarkTechPost.
