Language-based agentic systems automate tasks such as question answering, programming, and advanced problem-solving. They are built around Large Language Models (LLMs), and their components communicate in natural language. This design reduces the engineering complexity of individual components and simplifies interaction between them, which makes it practical to execute multifaceted tasks. Despite this potential, optimizing these systems for real-world applications remains a significant challenge.

A central problem in optimizing agentic systems is assigning precise feedback to the individual components. These systems are modeled as computational graphs, and the dense interconnections among components make feedback assignment harder. Without accurate directional feedback, improving individual components is inefficient, which in turn limits how accurate and reliable the overall system can be. The lack of effective optimization methods has limited how well these systems scale to complex applications.

Existing solutions such as DSPy, TextGrad, and OptoPrime have attempted to address the optimization problem. DSPy uses prompt optimization techniques, while TextGrad and OptoPrime rely on feedback mechanisms inspired by backpropagation. However, these methods often overlook critical relationships among graph nodes or fail to incorporate neighboring node dependencies, resulting in suboptimal feedback distribution. These limitations reduce their ability to optimize agentic systems effectively, especially when dealing with intricate computational structures.

Researchers from King Abdullah University of Science and Technology (KAUST), with collaborators from SDAIA and the Swiss AI Lab IDSIA, introduced semantic backpropagation and semantic gradient descent to tackle these challenges. Semantic backpropagation generalizes reverse-mode automatic differentiation by introducing semantic gradients, which describe how each variable in the system affects overall performance. The approach emphasizes alignment between components and incorporates node relationships to make the feedback more precise.

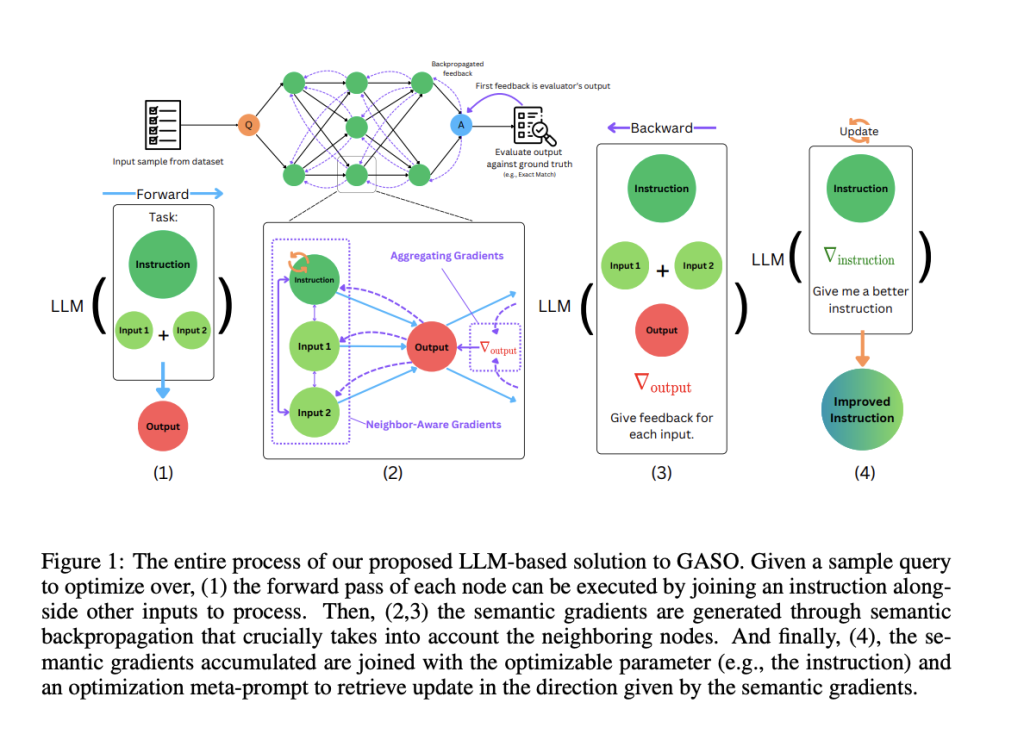

Semantic backpropagation operates on a computational graph in which semantic gradients guide the optimization of variables. These gradients extend traditional numerical gradients by capturing the semantic relationships between a node and its neighbors. They are aggregated through backward functions that follow the graph’s structure, so the feedback reflects the real dependencies between components. Semantic gradient descent then applies these gradients iteratively to update the optimizable parameters. By addressing both component-level and system-wide feedback distribution, the method targets the graph-based agentic system optimization (GASO) problem.
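To make the mechanism concrete, here is a minimal Python sketch of the idea, not the authors' implementation. It assumes a tiny three-node graph, and every name in it (`Node`, `backward_fn`, `semantic_backprop`, `semantic_gradient_step`) is hypothetical. In a real system, `backward_fn` and the update function would be LLM calls; here they are deterministic stand-ins so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    """One variable in the computational graph (hypothetical structure)."""
    name: str
    value: str
    parents: List["Node"] = field(default_factory=list)
    optimizable: bool = False
    grads: List[str] = field(default_factory=list)  # textual "semantic gradients"


def backward_fn(child: Node, child_feedback: str, target: Node) -> str:
    """Stand-in for an LLM call (assumption): produce feedback for `target`,
    given the feedback on `child` and the names of `target`'s neighbors."""
    neighbors = [p.name for p in child.parents if p is not target]
    return (
        f"To fix '{child.name}' ({child_feedback}), revise '{target.name}' "
        f"while accounting for neighbors {neighbors}."
    )


def semantic_backprop(topo_order: List[Node], loss_feedback: str) -> None:
    """Propagate textual feedback from the output node back to its ancestors."""
    topo_order[-1].grads.append(loss_feedback)
    for node in reversed(topo_order):
        if not node.grads:
            continue
        # Aggregate the feedback this node received from all of its successors.
        feedback = " | ".join(node.grads)
        for parent in node.parents:
            parent.grads.append(backward_fn(node, feedback, parent))


def semantic_gradient_step(
    nodes: List[Node], update_fn: Callable[[str, List[str]], str]
) -> None:
    """Update optimizable nodes from their gradients, then clear all gradients."""
    for node in nodes:
        if node.optimizable and node.grads:
            node.value = update_fn(node.value, node.grads)
        node.grads.clear()


# Toy graph: an optimizable prompt and a fixed question feed one answer node.
prompt = Node("prompt", "Solve the problem.", optimizable=True)
question = Node("question", "What is 2 + 3?")
answer = Node("answer", "6", parents=[prompt, question])
graph = [prompt, question, answer]  # already in topological order

semantic_backprop(graph, "the answer should be 5")
prompt_feedback = list(prompt.grads)  # keep a copy before the update clears it

# Stand-in for an LLM-based optimizer (assumption): append a fixed instruction.
semantic_gradient_step(graph, lambda value, grads: value + " Check each step.")
print(prompt_feedback[0])
print(prompt.value)
```

In this sketch the feedback sent to `prompt` mentions its neighbor `question`, which is the kind of neighborhood information the article says earlier methods tend to leave out. Only the node marked `optimizable` changes during the update step; the question text stays fixed.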

The researchers evaluated semantic gradient descent on several benchmarks. On GSM8K, a dataset of mathematical problems, the approach achieved 93.2% accuracy, compared with 78.2% for TextGrad. On BIG-Bench Hard, it reached 82.5% accuracy on natural language processing tasks and 85.6% on algorithmic tasks, outperforming other methods such as OptoPrime and COPRO. These results suggest that the approach holds up across diverse datasets. An ablation study on the LIAR dataset showed a significant performance drop when key components of semantic backpropagation were removed, which indicates that each component contributes to the overall result.

Semantic gradient descent improved performance and also reduced computational cost. By incorporating neighborhood dependencies, the method required fewer forward computations than traditional approaches. On the LIAR dataset, including neighboring node information raised classification accuracy to 71.2%, a significant increase over variants that excluded this information. These results point to semantic backpropagation as a scalable and cost-effective way to optimize agentic systems.

In conclusion, the research from the KAUST, SDAIA, and IDSIA teams offers a new approach to the optimization challenges faced by language-based agentic systems. By combining semantic backpropagation with semantic gradient descent, it addresses the limitations of existing methods described above and provides a scalable framework for future work. Its results across benchmarks indicate that it can improve the efficiency and reliability of LLM-based agentic systems.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post This AI Paper Introduces Semantic Backpropagation and Gradient Descent: Advanced Methods for Optimizing Language-Based Agentic Systems appeared first on MarkTechPost.
