Advances in neural networks have brought significant changes to domains such as natural language processing, computer vision, and scientific computing. Despite these successes, the computational cost of training such models remains a key challenge. Many neural networks use higher-order tensor weights to capture complex relationships, but these weights introduce memory inefficiencies during training. In scientific computing in particular, tensor-parameterized layers used to model multidimensional systems, such as those governed by partial differential equations (PDEs), require substantial memory for optimizer states. Flattening these tensors into matrices for optimization can discard important multidimensional information, limiting both efficiency and performance. Addressing these issues requires methods that reduce memory use without sacrificing model accuracy.

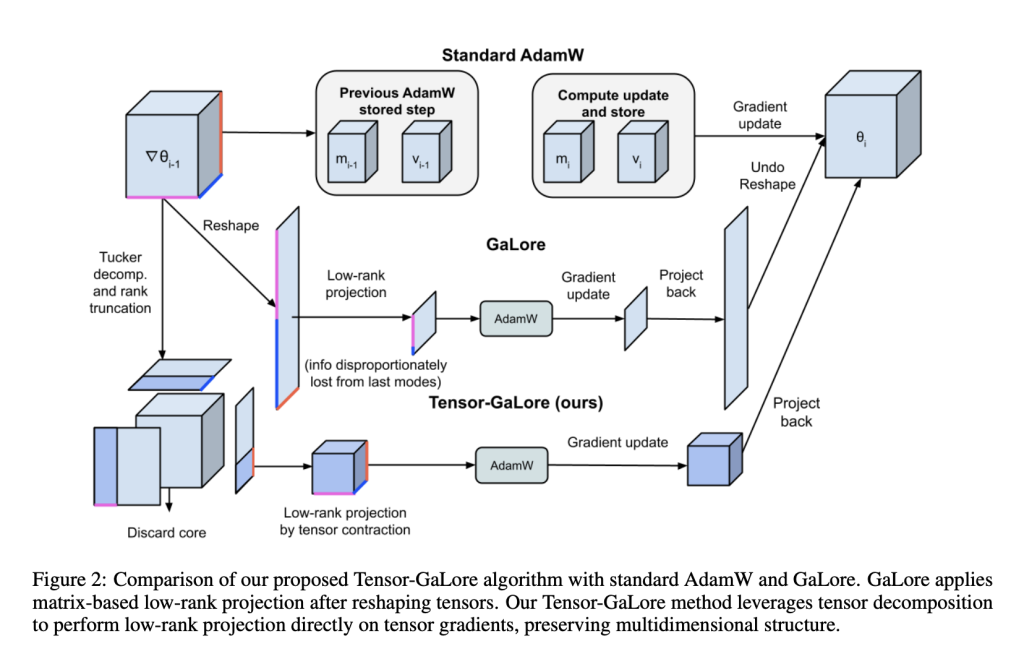

To address these challenges, researchers from Caltech, Meta FAIR, and NVIDIA AI developed Tensor-GaLore, a method for efficient neural network training with higher-order tensor weights. Tensor-GaLore operates directly in the high-order tensor space, using tensor factorization to project gradients into a compact representation during training. Unlike earlier methods such as GaLore, which rely on matrix operations via Singular Value Decomposition (SVD), Tensor-GaLore uses Tucker decomposition to project gradients into a low-rank subspace. By preserving the multidimensional structure of tensors, this approach improves memory efficiency and supports applications such as Fourier Neural Operators (FNOs).
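For contrast, the matrix-based projection that GaLore relies on can be sketched in a few lines of NumPy. This is a simplified sketch, not GaLore's implementation: the function name `galore_matrix_projection` and the example shape are hypothetical, and details such as choosing a left or right projection and periodically refreshing the subspace are omitted. It shows how a 4-mode gradient must be reshaped into a matrix before the SVD, merging three modes into a single axis.

```python
import numpy as np

def galore_matrix_projection(grad: np.ndarray, rank: int):
    """Hypothetical, simplified GaLore-style projection of a gradient.

    A higher-order gradient is first reshaped into a matrix, which merges
    all trailing tensor modes into one column axis.
    """
    # Flatten: keep the first mode as rows, merge every other mode into columns.
    mat = grad.reshape(grad.shape[0], -1)
    # The top-`rank` left singular vectors span the low-rank subspace.
    u, _, _ = np.linalg.svd(mat, full_matrices=False)
    p = u[:, :rank]          # (rows, rank) projection matrix
    low_rank = p.T @ mat     # (rank, cols): the compressed gradient an optimizer would see
    return p, low_rank

rng = np.random.default_rng(0)
grad = rng.standard_normal((16, 16, 8, 8))  # e.g. (in_ch, out_ch, modes_x, modes_y)
p, low_rank = galore_matrix_projection(grad, rank=4)
print(low_rank.shape)  # (4, 1024): the three trailing modes are merged into one axis
```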

FNOs are a class of models designed to solve PDEs. They use spectral convolution layers, parameterized by higher-order tensors, to represent mappings between function spaces. Tensor-GaLore reduces the memory overhead that Fourier coefficients and optimizer states create in FNOs, enabling efficient training on high-resolution tasks such as the Navier-Stokes and Darcy flow equations.
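To make the weight shapes concrete, here is a minimal NumPy sketch of a 2D spectral convolution in the spirit of an FNO layer. The function `spectral_conv2d` and all shapes are illustrative assumptions; for brevity it keeps only the lowest non-negative frequencies along the first spatial axis, whereas practical FNO implementations also retain negative frequencies. The learned weight is a 4-mode complex tensor (input channels × output channels × modes × modes), and an optimizer such as Adam would keep two more tensors of the same shape.

```python
import numpy as np

def spectral_conv2d(x: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Simplified (hypothetical) 2D spectral convolution, FNO-style.

    x:       real input of shape (in_ch, H, W)
    weights: complex tensor of shape (in_ch, out_ch, m1, m2), one learned
             coefficient per channel pair and retained Fourier mode
    """
    in_ch, h, w = x.shape
    _, out_ch, m1, m2 = weights.shape
    x_ft = np.fft.rfft2(x)                                  # (in_ch, H, W//2 + 1)
    out_ft = np.zeros((out_ch, h, w // 2 + 1), dtype=complex)
    # Mix channels separately for each retained low-frequency mode
    # (simplification: negative frequencies along H are dropped).
    out_ft[:, :m1, :m2] = np.einsum("ixy,ioxy->oxy", x_ft[:, :m1, :m2], weights)
    return np.fft.irfft2(out_ft, s=(h, w))                  # back to (out_ch, H, W)

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 32, 32))
w = rng.standard_normal((3, 5, 8, 8)) + 1j * rng.standard_normal((3, 5, 8, 8))
y = spectral_conv2d(x, w)
print(y.shape)  # (5, 32, 32)
```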

Technical Details and Benefits of Tensor-GaLore

Tensor-GaLore’s core innovation is applying Tucker decomposition to gradients during optimization. This decomposition factorizes a tensor into a smaller core tensor and one orthogonal factor matrix along each mode. Key benefits of this approach include:
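One standard way to compute a Tucker decomposition is the truncated higher-order SVD (HOSVD): take the leading left singular vectors of each mode unfolding as that mode's factor matrix, then project the tensor onto those factors to obtain the core. The sketch below assumes HOSVD purely for illustration; the article does not say which Tucker algorithm Tensor-GaLore uses, and the helper names are hypothetical.

```python
import numpy as np

def unfold(t: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n unfolding: `mode` becomes the rows, all other modes the columns."""
    return np.moveaxis(t, mode, 0).reshape(t.shape[mode], -1)

def mode_dot(t: np.ndarray, m: np.ndarray, mode: int) -> np.ndarray:
    """Multiply tensor `t` along `mode` by matrix `m` of shape (new_dim, t.shape[mode])."""
    return np.moveaxis(np.tensordot(m, t, axes=(1, mode)), 0, mode)

def truncated_hosvd(t: np.ndarray, ranks):
    """Tucker decomposition via truncated HOSVD: t ~ core x_1 U_1 ... x_N U_N."""
    factors = []
    for mode, r in enumerate(ranks):
        u, _, _ = np.linalg.svd(unfold(t, mode), full_matrices=False)
        factors.append(u[:, :r])          # orthonormal columns for this mode
    core = t
    for mode, u in enumerate(factors):
        core = mode_dot(core, u.T, mode)  # project onto each mode's factor
    return core, factors

def tucker_reconstruct(core: np.ndarray, factors) -> np.ndarray:
    """Map a core back to full size by multiplying with every factor matrix."""
    t = core
    for mode, u in enumerate(factors):
        t = mode_dot(t, u, mode)
    return t

rng = np.random.default_rng(0)
g = rng.standard_normal((16, 16, 8, 8))  # stand-in for a 4-mode gradient
core, factors = truncated_hosvd(g, ranks=(8, 8, 4, 4))
print(core.shape)  # (8, 8, 4, 4): still a 4-mode tensor, unlike a flattened matrix
err = np.linalg.norm(g - tucker_reconstruct(core, factors)) / np.linalg.norm(g)
```

The core keeps one axis per original mode, which is what lets the method avoid merging spatial, frequency, and channel dimensions.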

- Memory Efficiency: By projecting gradients into low-rank subspaces, Tensor-GaLore achieves memory savings of up to 75% for optimizer states.

- Preservation of Structure: Unlike matrix-based methods that collapse tensor dimensions, Tensor-GaLore retains the original tensor structure, preserving spatial, temporal, and channel-specific information.

- Implicit Regularization: The low-rank tensor approximation helps prevent overfitting and supports smoother optimization.

- Scalability: Features like per-layer weight updates and activation checkpointing reduce peak memory usage, making it feasible to train large-scale models.
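The memory and structure benefits above come from running the optimizer on the Tucker core rather than on the full gradient. The following sketch combines a truncated-HOSVD projection with Adam; `LowRankTuckerAdam`, its parameters, and the refresh schedule are hypothetical and are not the authors' code. Adam's two moment tensors have the core's shape, so for ranks (8, 8, 4, 4) on a (16, 16, 8, 8) weight they hold 16× fewer entries than full-size moments.

```python
import numpy as np

def unfold(t, mode):
    """Mode-n unfolding: `mode` becomes the rows, all other modes the columns."""
    return np.moveaxis(t, mode, 0).reshape(t.shape[mode], -1)

def mode_dot(t, m, mode):
    """Multiply tensor `t` along `mode` by matrix `m` of shape (new_dim, t.shape[mode])."""
    return np.moveaxis(np.tensordot(m, t, axes=(1, mode)), 0, mode)

class LowRankTuckerAdam:
    """Hypothetical Adam variant whose moments live in a Tucker core (sketch only)."""

    def __init__(self, ranks, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, update_every=200):
        self.ranks, self.lr, self.betas, self.eps = tuple(ranks), lr, betas, eps
        self.update_every = update_every  # how often factors are recomputed (assumed)
        self.factors = None
        self.m = self.v = None            # Adam moments, stored at core shape
        self.t = 0

    def _project(self, x, transpose):
        # transpose=True: full tensor -> core; transpose=False: core -> full tensor.
        for k, u in enumerate(self.factors):
            x = mode_dot(x, u.T if transpose else u, k)
        return x

    def step(self, weight, grad):
        if self.t % self.update_every == 0:
            # Refresh one orthonormal factor matrix per mode via truncated HOSVD.
            # Simplification: moments are kept as-is when the subspace changes.
            self.factors = [np.linalg.svd(unfold(grad, k), full_matrices=False)[0][:, :r]
                            for k, r in enumerate(self.ranks)]
        self.t += 1
        core = self._project(grad, transpose=True)
        if self.m is None:
            self.m, self.v = np.zeros_like(core), np.zeros_like(core)
        b1, b2 = self.betas
        self.m = b1 * self.m + (1 - b1) * core
        self.v = b2 * self.v + (1 - b2) * core ** 2
        m_hat = self.m / (1 - b1 ** self.t)   # bias-corrected first moment
        v_hat = self.v / (1 - b2 ** self.t)   # bias-corrected second moment
        update = m_hat / (np.sqrt(v_hat) + self.eps)
        # Map the core-shaped update back to the full weight shape and apply it.
        return weight - self.lr * self._project(update, transpose=False)

rng = np.random.default_rng(0)
w = rng.standard_normal((16, 16, 8, 8))
opt = LowRankTuckerAdam(ranks=(8, 8, 4, 4), lr=1e-2)
w_new = opt.step(w, rng.standard_normal(w.shape))
print(w_new.shape, opt.m.shape)  # (16, 16, 8, 8) (8, 8, 4, 4)
```

Only the factor matrices and the core-shaped moments persist between steps; the weight itself is still updated at full size.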

Theoretical analysis establishes Tensor-GaLore’s convergence and stability. Because the rank can be set separately for each mode, the method offers added flexibility and often outperforms traditional low-rank approximation techniques.
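Mode-specific ranks can be illustrated with a small calculation. The sketch assumes that a rank ratio means the fraction of each mode's dimension kept in the core (the paper's exact definition may differ), and it counts only Adam's two moment tensors plus the factor matrices, ignoring weights, gradients, and activations, so its numbers are not comparable to the reported savings. `tucker_ranks` and `adam_state_floats` are hypothetical helpers.

```python
import math

def tucker_ranks(shape, ratio):
    """Per-mode Tucker ranks from a scalar or per-mode rank ratio (hypothetical helper)."""
    ratios = [ratio] * len(shape) if isinstance(ratio, (int, float)) else list(ratio)
    if len(ratios) != len(shape):
        raise ValueError("need one ratio per mode")
    # Round to the nearest integer, but keep at least rank 1 on every mode.
    return tuple(max(1, round(n * r)) for n, r in zip(shape, ratios))

def adam_state_floats(shape, ranks=None):
    """Floats held by Adam's two moments, plus Tucker factors when projecting."""
    if ranks is None:
        return 2 * math.prod(shape)                    # full-size moments
    factors = sum(n * r for n, r in zip(shape, ranks)) # one (n x r) factor per mode
    return 2 * math.prod(ranks) + factors              # core-sized moments + factors

shape = (64, 64, 32, 32)                               # e.g. (in_ch, out_ch, modes, modes)
uniform = tucker_ranks(shape, 0.25)                    # same ratio on every mode
per_mode = tucker_ranks(shape, (1.0, 1.0, 0.25, 0.25)) # compress only the Fourier modes
for name, ranks in [("full", None), ("uniform", uniform), ("per-mode", per_mode)]:
    print(name, ranks, adam_state_floats(shape, ranks))
```

Keeping full rank on the channel modes while compressing only the Fourier modes is one example of the per-mode flexibility; which modes to compress is a modeling choice.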

Results and Insights

Tensor-GaLore has been tested on various PDE tasks, showing notable improvements in performance and memory efficiency:

- Navier-Stokes Equations: For tasks at 1024×1024 resolution, Tensor-GaLore reduced optimizer memory usage by 76% while maintaining performance comparable to baseline methods.

- Darcy Flow Problem: Experiments revealed a 48% improvement in test loss with a 0.25 rank ratio, alongside significant memory savings.

- Electromagnetic Wave Propagation: Tensor-GaLore improved test accuracy by 11% and reduced memory consumption, demonstrating its effectiveness on complex multidimensional data.

Conclusion

Tensor-GaLore offers a practical solution for memory-efficient training of neural networks using higher-order tensor weights. By leveraging low-rank tensor projections and preserving multidimensional relationships, it addresses key limitations in scaling models for scientific computing and other domains. Its demonstrated success with PDEs, through memory savings and performance gains, makes it a valuable tool for advancing AI-driven scientific discovery. As computational demands grow, Tensor-GaLore provides a pathway to more efficient and accessible training of complex, high-dimensional models.

Check out the Paper. All credit for this research goes to the researchers of this project.

FREE UPCOMING AI WEBINAR (JAN 15, 2025): Boost LLM Accuracy with Synthetic Data and Evaluation Intelligence–Join this webinar to gain actionable insights into boosting LLM model performance and accuracy while safeguarding data privacy.

The post Researchers from Caltech, Meta FAIR, and NVIDIA AI Introduce Tensor-GaLore: A Novel Method for Efficient Training of Neural Networks with Higher-Order Tensor Weights appeared first on MarkTechPost.

Source: Read More