Large language models (LLMs) have demonstrated promising capabilities in machine translation (MT) tasks. Depending on the use case, they are able to compete with neural translation models such as Amazon Translate. LLMs particularly stand out for their natural ability to learn from the context of the input text, which allows them to pick up on cultural cues and produce more natural sounding translations. For instance, the sentence “Did you perform well?” might be translated into French as “Avez-vous bien performé?” However, the target translation can vary widely depending on the context. If the question is asked in the context of sport, such as “Did you perform well at the soccer tournament?”, the natural French translation would be very different. It is critical for AI models to capture not only the context, but also the cultural specificities to produce a more natural sounding translation. One of LLMs’ most fascinating strengths is their inherent ability to understand context.

A number of our global customers are looking to take advantage of this capability to improve the quality of their translated content. Localization relies on both automation and humans-in-the-loop in a process called Machine Translation Post Editing (MTPE). Building solutions that help enhance translated content quality presents multiple benefits:

- Potential cost savings on MTPE activities

- Faster turnaround for localization projects

- Better experience for content consumers and readers overall with enhanced quality

LLMs have also shown gaps with regard to MT tasks, such as:

- Inconsistent quality over certain language pairs

- No standard pattern to integrate past translations knowledge, also known as translation memory (TM)

- Inherent risk of hallucination

Switching MT workloads to LLM-driven translation should be considered on a case-by-case basis. However, the industry is seeing enough potential to consider LLMs as a valuable option.

This blog post with accompanying code presents a solution to experiment with real-time machine translation using foundation models (FMs) available in Amazon Bedrock. It can help collect more data on the value of LLMs for your content translation use cases.

Steering the LLMs’ output

Translation memory and TMX files are important concepts and file formats used in the field of computer-assisted translation (CAT) tools and translation management systems (TMSs).

Translation memory

A translation memory is a database that stores previously translated text segments (typically sentences or phrases) along with their corresponding translations. The main purpose of a TM is to aid human or machine translators by providing them with suggestions for segments that have already been translated before. This can significantly improve translation efficiency and consistency, especially for projects involving repetitive content or similar subject matter.

Translation Memory eXchange (TMX) is a widely used open standard for representing and exchanging TM data. It is an XML-based file format that allows for the exchange of TMs between different CAT tools and TMSs. A typical TMX file contains a structured representation of translation units, which are groupings of the same text translated into multiple languages.
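To make the structure of a translation unit more concrete, the following Python sketch parses a small TMX document with the standard library. The sample content and the `parse_tmx` helper are illustrative assumptions, not code taken from the playground.

```python
import xml.etree.ElementTree as ET

# ElementTree exposes the xml:lang attribute under the reserved XML namespace.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"

# Minimal, made-up TMX document with a single translation unit.
SAMPLE_TMX = """<tmx version="1.4">
  <header srclang="en" datatype="plaintext" segtype="sentence"
          adminlang="en" creationtool="example" creationtoolversion="1.0"
          o-tmf="none"/>
  <body>
    <tu tuid="1">
      <tuv xml:lang="en"><seg>Hello world</seg></tuv>
      <tuv xml:lang="fr"><seg>Bonjour le monde</seg></tuv>
    </tu>
  </body>
</tmx>"""


def parse_tmx(tmx_text):
    """Return translation units as {"tuid": str, "segments": {lang: text}}."""
    root = ET.fromstring(tmx_text)
    units = []
    for index, tu in enumerate(root.iter("tu")):
        segments = {}
        for tuv in tu.findall("tuv"):
            lang = tuv.get(XML_LANG) or tuv.get("lang")
            seg = tuv.find("seg")
            if lang and seg is not None:
                # itertext() also captures text nested inside inline markup.
                segments[lang.lower()] = "".join(seg.itertext()).strip()
        # Fall back to the position in the file when no tuid attribute is set.
        units.append({"tuid": tu.get("tuid", str(index)), "segments": segments})
    return units


units = parse_tmx(SAMPLE_TMX)
print(units)
```

Each `<tu>` element becomes one dictionary that groups the same text in every language it was translated into.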

Integrating TM with LLMs

The use of TMs in combination with LLMs can be a powerful approach for improving the quality and efficiency of machine translation. The following are a few potential benefits:

- Improved accuracy and consistency – LLMs can benefit from the high-quality translations stored in TMs, which can help improve the overall accuracy and consistency of the translations produced by the LLM. The TM can provide the LLM with reliable reference translations for specific segments, reducing the chances of errors or inconsistencies.

- Domain adaptation – TMs often contain translations specific to a particular domain or subject matter. By using a domain-specific TM, the LLM can better adapt to the terminology, style, and context of that domain, leading to more accurate and natural translations.

- Efficient reuse of human translations – TMs store human-translated segments, which are typically of higher quality than machine-translated segments. By incorporating these human translations into the LLM’s training or inference process, the LLM can learn from and reuse these high-quality translations, potentially improving its overall performance.

- Reduced post-editing effort – When the LLM can accurately use the translations stored in the TM, the need for human post-editing can be reduced, leading to increased productivity and cost savings.

Another approach to integrating TM data with LLMs is to use fine-tuning in the same way you would fine-tune a model for business domain content generation, for instance. For customers operating in global industries, potentially translating to and from over 10 languages, this approach can prove to be operationally complex and costly. The solution proposed in this post relies on LLMs’ context learning capabilities and prompt engineering. It enables you to use an off-the-shelf model as is without involving machine learning operations (MLOps) activity.

Solution overview

The LLM translation playground is a sample application providing the following capabilities:

- Experiment with LLM translation capabilities using models available in Amazon Bedrock

- Create and compare various inference configurations

- Evaluate the impact of prompt engineering and Retrieval Augmented Generation (RAG) on translation with LLMs

- Configure supported language pairs

- Import, process, and test translation using your existing TMX file with multiple LLMs

- Custom terminology conversion

- Performance, quality, and usage metrics including BLEU, BERT Score, METEOR, and CHRF

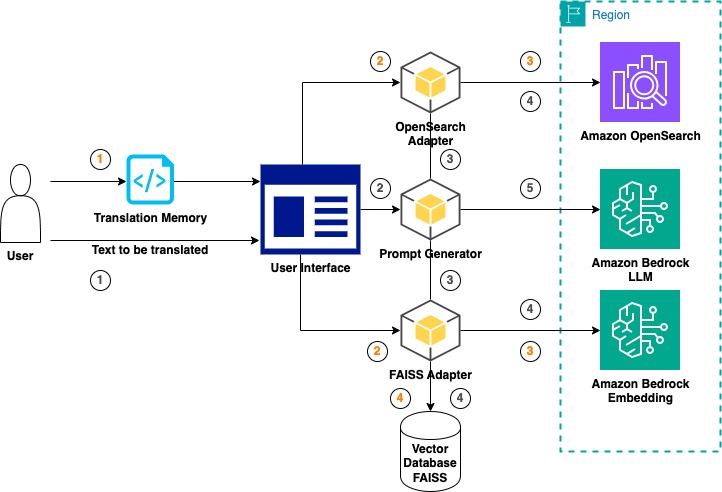

The following diagram illustrates the translation playground architecture. The numbers are color-coded to represent two flows: the translation memory ingestion flow (orange) and the text translation flow (gray). The solution offers two TM retrieval modes for users to choose from: vector and document search. This is covered in detail later in the post.

The TM ingestion flow (orange) consists of the following steps:

- The user uploads a TMX file to the playground UI.

- Depending on which retrieval mode is being used, the appropriate adapter is invoked.

- When using the Amazon OpenSearch Service adapter (document search), translation unit groupings are parsed and stored into an index dedicated to the uploaded file. When using the FAISS adapter (vector search), translation unit groupings are parsed and turned into vectors using the selected embedding model from Amazon Bedrock.

- When using the FAISS adapter, translation units are stored into a local FAISS index along with the metadata.

The text translation flow (gray) consists of the following steps:

- The user enters the text they want to translate along with source and target language.

- The request is sent to the prompt generator.

- The prompt generator invokes the appropriate knowledge base according to the selected mode.

- The prompt generator receives the relevant translation units.

- Amazon Bedrock is invoked using the generated prompt as input along with customization parameters.
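The final step of this flow can be sketched with the Amazon Bedrock Converse API in boto3. This is a minimal illustration, not the application's own code: the helper names, the default parameter values, and the Region are assumptions, and the model ID is left to the caller.

```python
def build_converse_request(prompt, model_id, max_tokens=512, temperature=0.2, top_p=0.9):
    """Assemble keyword arguments for the bedrock-runtime Converse API."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {
            "maxTokens": max_tokens,
            "temperature": temperature,
            "topP": top_p,
        },
    }


def translate(prompt, model_id, region="us-east-1"):
    """Send the prompt to Amazon Bedrock and return the generated text.

    Requires boto3, AWS credentials, and access to the chosen model.
    """
    import boto3  # imported lazily so the request builder works without AWS access

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(prompt, model_id))
    return response["output"]["message"]["content"][0]["text"]


# Building the request does not call AWS; "example-model-id" is a placeholder.
request = build_converse_request("Translate to French: Hello", "example-model-id")
print(request["inferenceConfig"])
```

Separating the request builder from the network call makes it easy to compare several inference configurations, which is what the playground lets you do from the UI.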

The translation playground could be adapted into a scalable serverless solution as represented by the following diagram using AWS Lambda, Amazon Simple Storage Service (Amazon S3), and Amazon API Gateway.

Strategy for TM knowledge base

The LLM translation playground offers two options to incorporate the translation memory into the prompt. Each option is available through its own page within the application:

- Vector store using FAISS – In this mode, the application processes the .tmx file the user uploaded, indexes it, and stores it locally into a vector store (FAISS).

- Document store using Amazon OpenSearch Serverless – Only standard document search using Amazon OpenSearch Serverless is supported. To test vector search, use the vector store option (using FAISS).

In vector store mode, the translation segments are processed as follows:

- Embed the source segment.

- Extract metadata:

- Segment language

- System generated <tu> segment unique identifier

- Store source segment vectors along with metadata and the segment itself in plain text as a document

The translation customization section allows you to select the embedding model. You can choose either Amazon Titan Text Embeddings V2 or Cohere Embed Multilingual v3. Amazon Titan Text Embeddings V2 includes multilingual support for over 100 languages in pre-training. Cohere Embed supports 108 languages.

In document store mode, the language segments are not embedded and are stored following a flat structure. Two metadata attributes are maintained across the documents:

- Segment Language

- System generated <tu> segment unique identifier
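As an illustration of this flat structure, the sketch below turns one translation unit into one document per language, each carrying the two metadata attributes. The field names (`text`, `language`, `tu_id`) are hypothetical and may differ from the ones the application uses.

```python
def flatten_translation_units(units):
    """Turn each translation unit into one flat document per language."""
    documents = []
    for unit in units:
        for language, text in unit["segments"].items():
            documents.append({
                "text": text,            # the segment itself, in plain text
                "language": language,    # metadata: segment language
                "tu_id": unit["tuid"],   # metadata: parent <tu> identifier
            })
    return documents


def find_translation(documents, tu_id, target_language):
    """Look up the segment of a given unit in the requested language."""
    for doc in documents:
        if doc["tu_id"] == tu_id and doc["language"] == target_language:
            return doc["text"]
    return None


# Made-up sample data for illustration.
sample_units = [
    {"tuid": "tu-1", "segments": {"en": "Good morning", "fr": "Bonjour"}},
    {"tuid": "tu-2", "segments": {"en": "Thank you", "fr": "Merci"}},
]
documents = flatten_translation_units(sample_units)
print(find_translation(documents, "tu-2", "fr"))
```

Keeping the unit identifier on every document is what allows a source segment to be joined back to its counterpart in the target language.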

Prompt engineering

The application uses prompt engineering techniques to incorporate several types of inputs for the inference. The following sample XML illustrates the prompt’s template structure:
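As a rough illustration of this kind of template, the following Python sketch assembles a prompt from the source text, translation memory examples, and custom terminology. The tag names and instruction wording are assumptions made for this example, not the application's actual prompt.

```python
from xml.sax.saxutils import escape


def build_prompt(source_text, source_lang, target_lang, examples=(), terminology=()):
    """Assemble an XML-tagged translation prompt (hypothetical tag names)."""
    lines = [
        f"Translate the text in <source_text> from {source_lang} to {target_lang}.",
        "Follow the style of the <examples> and apply every <term> mapping exactly.",
        "<examples>",
    ]
    for src, tgt in examples:
        lines.append(
            f"  <example><source>{escape(src)}</source>"
            f"<target>{escape(tgt)}</target></example>"
        )
    lines.append("</examples>")
    lines.append("<terminology>")
    for src, tgt in terminology:
        lines.append(
            f"  <term><source>{escape(src)}</source>"
            f"<target>{escape(tgt)}</target></term>"
        )
    lines.append("</terminology>")
    # escape() keeps characters such as & and < from breaking the tag structure.
    lines.append(f"<source_text>{escape(source_text)}</source_text>")
    return "\n".join(lines)


prompt = build_prompt(
    "Did you perform well?",
    "English",
    "French",
    examples=[("Hello world", "Bonjour le monde")],
    terminology=[("Gen AI", "Gen AI")],
)
print(prompt)
```

Wrapping each input type in its own tag makes it easier for the model to tell instructions, reference pairs, and the text to translate apart.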

Prerequisites

The project code uses the Python version of the AWS Cloud Development Kit (AWS CDK). To run the project code, make sure that you have fulfilled the AWS CDK prerequisites for Python.

The project also requires that the AWS account is bootstrapped to allow the deployment of the AWS CDK stack.

Install the UI

To deploy the solution, first install the UI (Streamlit application):

- Clone the GitHub repository using the following command:

git clone https://github.com/aws-samples/llm-translation-playground.git

- Navigate to the deployment directory:

cd llm-translation-playground

- Install and activate a Python virtual environment:

python3 -m venv .venv

source .venv/bin/activate

- Install Python libraries:

python -m pip install -r requirements.txt

Deploy the AWS CDK stack

Complete the following steps to deploy the AWS CDK stack:

- Move into the deployment folder:

cd deployment/cdk

- Configure the AWS CDK context parameters file context.json. For collection_name, use the OpenSearch Serverless collection name. For example:

"collection_name": "search-subtitles"

- Deploy the AWS CDK stack:

cdk deploy

- Validate successful deployment by reviewing the OpsServerlessSearchStack stack on the AWS CloudFormation console. The status should read CREATE_COMPLETE.

- On the Outputs tab, make note of the OpenSearchEndpoint attribute value.

Configure the solution

The stack creates an AWS Identity and Access Management (IAM) role with the right level of permission needed to run the application. The LLM translation playground assumes this role automatically on your behalf. To achieve this, modify the role or principal under which you are planning to run the application so you are allowed to assume the newly created role. You can use the pre-created policy and attach it to your role. The policy Amazon Resource Name (ARN) can be retrieved as a stack output under the key LLMTranslationPlaygroundAppRoleAssumePolicyArn, as illustrated in the preceding screenshot. You can do so from the IAM console after selecting your role and choosing Add permissions. If you prefer to use the AWS Command Line Interface (AWS CLI), refer to the following sample command line:

aws iam attach-role-policy --role-name <role-name> --policy-arn <policy-arn>

Finally, configure the .env file in the utils folder as follows:

- APP_ROLE_ARN – The ARN of the role created by the stack (stack output LLMTranslationPlaygroundAppRoleArn)

- HOST – OpenSearch Serverless collection endpoint (without https)

- REGION – AWS Region the collection was deployed into

- INGESTION_LIMIT – Maximum number of translation units (<tu> tags) indexed per TMX file you upload

Run the solution

To start the translation playground, run the following commands:

cd llm-translation-playground/source

streamlit run LLM_Translation_Home.py

Your default browser should open a new tab or window displaying the Home page.

Simple test case

Let’s run a simple translation test using the phrase mentioned earlier: “Did you perform well?”

Because we’re not using a knowledge base for this test case, we can use either a vector store or document store. For this post, we use a document store.

- Choose With Document Store.

- For Source Text, enter the text to be translated.

- Choose your source and target languages (for this post, English and French, respectively).

- You can experiment with other parameters, such as model, maximum tokens, temperature, and top-p.

- Choose Translate.

The translated text appears in the bottom section. For this example, the translated text, although accurate, is close to a literal translation, which is not a common phrasing in French.

- We can rerun the same test after slightly modifying the initial text: “Did you perform well at the soccer tournament?”

We’re now introducing some situational context in the input. The translated text should be different and closer to a more natural translation. The new output literally means “Did you play well at the soccer tournament?”, which is consistent with the initial intent of the question.

Also note the completion metrics on the left pane, displaying latency, input/output tokens, and quality scores.

This example highlights the ability of LLMs to naturally adapt the translation to the context.

Adding translation memory

Let’s test the impact of using a translation memory TMX file on the translation quality.

- Copy the text contained within test/source_text.txt and paste it into the Source text field.

- Choose French as the target language and run the translation.

- Copy the text contained within test/target_text.txt and paste it into the reference translation field.

- Choose Evaluate and notice the quality scores on the left.

- In the Translation Customization section, choose Browse files and choose the file test/subtitles_memory.tmx.

This will index the translation memory into the OpenSearch Service collection previously created. The indexing process can take a few minutes.

- When the indexing is complete, select the created index from the index dropdown.

- Rerun the translation.

You should see a noticeable increase in the quality score. For instance, we’ve seen up to 20 percentage points improvement in BLEU score with the preceding test case. Using prompt engineering, we were able to steer the model’s output by providing sample phrases directly pulled from the TMX file. Feel free to explore the generated prompt for more details on how the translation pairs were introduced.
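To give an intuition for what a BLEU-style score measures, the following sketch computes a simplified sentence-level variant: modified n-gram precision up to bigrams, combined with a brevity penalty. This is not the scoring code used by the application, and production evaluations typically rely on established metric libraries. The sample sentences are made up.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, reference, max_n=2):
    """Simplified BLEU: geometric mean of clipped n-gram precisions times brevity penalty."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each n-gram count by how often it appears in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # no smoothing in this simplified version
        log_precisions.append(math.log(overlap / total))
    # Penalize candidates that are shorter than the reference.
    brevity_penalty = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


reference = "the cat sat on the mat"
close = simple_bleu("the cat is on the mat", reference)
far = simple_bleu("a cat is on a mat", reference)
print(close > far)
```

A translation that reuses more of the reference's wording and word order scores higher, which is why injecting matching TM segments into the prompt tends to move this kind of metric.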

You can replicate a similar test case with Amazon Translate by launching an asynchronous job customized using parallel data.

Here we took a simplistic retrieval approach, which consists of loading all of the samples as part of the same TMX file, matching the source and target language. You can enhance this technique by using metadata-driven filtering to collect the relevant pairs according to the source text. For example, you can classify the documents by theme or business domain, and use category tags to select language pairs relevant to the text and desired output.
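The metadata-driven filtering idea can be sketched as follows. The `tags` field and the `select_pairs` helper are hypothetical. TMX files do not necessarily carry such tags, so they would have to be added during ingestion.

```python
def select_pairs(memory, source_lang, target_lang, domain=None, limit=5):
    """Pick translation pairs for a language pair, optionally restricted to a domain tag."""
    selected = []
    for unit in memory:
        segments = unit["segments"]
        if source_lang not in segments or target_lang not in segments:
            continue  # unit does not cover the requested language pair
        if domain is not None and domain not in unit.get("tags", ()):
            continue  # unit belongs to another theme or business domain
        selected.append((segments[source_lang], segments[target_lang]))
        if len(selected) == limit:
            break
    return selected


# Made-up translation memory with category tags.
memory = [
    {"segments": {"en": "Kick-off is at noon", "fr": "Le coup d'envoi est à midi"},
     "tags": ["sports"]},
    {"segments": {"en": "Your invoice is ready", "fr": "Votre facture est prête"},
     "tags": ["billing"]},
    {"segments": {"en": "Final score", "de": "Endstand"}, "tags": ["sports"]},
]
sports_pairs = select_pairs(memory, "en", "fr", domain="sports")
print(sports_pairs)
```

Only pairs that match both the language pair and the requested category end up in the prompt, which keeps the examples relevant and the prompt short.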

Semantic similarity for translation memory selection

In vector store mode, the application allows you to upload a TMX and create a local index that uses semantic similarity to select the translation memory segments. First, we retrieve the segment with the highest similarity score based on the text to be translated and the source language. Then we retrieve the corresponding segment matching the target language and parent translation unit ID.
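The two-step lookup described above can be sketched in plain Python. To keep the example self-contained, a bag-of-words vector and a linear scan stand in for the Amazon Bedrock embedding model and the FAISS index. Both substitutions, the helper names, and the sample data are assumptions for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(units):
    """Store one document per segment with its vector, language, and unit ID."""
    return [
        {"text": text, "vector": embed(text), "language": lang, "tu_id": unit["tuid"]}
        for unit in units
        for lang, text in unit["segments"].items()
    ]


def retrieve_pair(index, query, source_lang, target_lang):
    """Step 1: closest source segment. Step 2: its sibling in the target language."""
    candidates = [doc for doc in index if doc["language"] == source_lang]
    if not candidates:
        return None
    query_vector = embed(query)
    best = max(candidates, key=lambda doc: cosine(query_vector, doc["vector"]))
    for doc in index:
        if doc["tu_id"] == best["tu_id"] and doc["language"] == target_lang:
            return best["text"], doc["text"]
    return None


index = build_index([
    {"tuid": "1", "segments": {"en": "the match starts at noon",
                               "fr": "le match commence à midi"}},
    {"tuid": "2", "segments": {"en": "please fasten your seat belt",
                               "fr": "veuillez attacher votre ceinture"}},
])
pair = retrieve_pair(index, "when does the match start", "en", "fr")
print(pair)
```

With a real embedding model, the ranking reflects meaning rather than shared words, but the join on the parent translation unit ID works the same way.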

To try it out, upload the file in the same way as shown earlier. Depending on the size of the file, this can take a few minutes. There is a maximum limit of 200 MB. You can use the sample file as in the previous example or one of the other samples provided in the code repository.

This approach differs from the static index search because it’s assumed that the source text is semantically close to segments representative enough of the expected style and tone.

Adding custom terminology

Custom terminology allows you to make sure that your brand names, character names, model names, and other unique content get translated to the desired result. Given that LLMs are pre-trained on massive amounts of data, they can likely already identify unique names and render them accurately in the output. If there are names for which you want to enforce a strict and literal translation, you can try the custom terminology feature of this translation playground. Simply provide the source and target language pairs separated by a semicolon in the Translation Customization section. For instance, if you want to keep the phrase “Gen AI” untranslated regardless of the language, you can configure the custom terminology as illustrated in the following screenshot.
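As a sketch of how such semicolon-separated pairs might be handled, the following code parses them and checks whether a translation honored each term. It assumes one source;target pair per line, which may not match the exact input format the application expects. Both helper names are hypothetical.

```python
def parse_terminology(text):
    """Parse lines of the form 'source term;target term' into pairs."""
    pairs = []
    for line in text.splitlines():
        if ";" not in line:
            continue
        source, _, target = line.partition(";")
        source, target = source.strip(), target.strip()
        if source and target:
            pairs.append((source, target))
    return pairs


def missing_terms(pairs, source_text, translation):
    """Return target terms that should appear in the translation but do not."""
    return [
        target
        for source, target in pairs
        if source.lower() in source_text.lower()
        and target.lower() not in translation.lower()
    ]


terms = parse_terminology("Gen AI;Gen AI\nAcme Cloud ; Acme Cloud")
print(terms)
print(missing_terms(terms, "Gen AI is everywhere", "L'IA générative est partout"))
```

A check like `missing_terms` can flag outputs where the model translated a protected term anyway, so they can be retried or sent for review.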

Clean up

To delete the stack, navigate to the deployment folder and run:

cdk destroy

Further considerations

Using existing TMX files with generative AI-based translation systems can potentially improve the quality and consistency of translations. The following are some steps to use TMX files for generative AI translations:

- TMX data pipeline – TMX files contain structured translation units, but the format might need to be preprocessed to extract the source and target text segments in a format that can be consumed by the generative AI model. This involves extract, transform, and load (ETL) pipelines able to parse the XML structure, handle encoding issues, and add metadata.

- Incorporate quality estimation and human review – Although generative AI models can produce high-quality translations, it is recommended to incorporate quality estimation techniques and human review processes. You can use automated quality estimation models to flag potentially low-quality translations, which can then be reviewed and corrected by human translators.

- Iterate and refine – Translation projects often involve iterative cycles of translation, review, and improvement. You can periodically retrain or fine-tune the generative AI model with the updated TMX file, creating a virtuous cycle of continuous improvement.

Conclusion

The LLM translation playground presented in this post enables you to evaluate the use of LLMs for your machine translation needs. The key features of this solution include:

- Ability to use translation memory – The solution allows you to integrate your existing TM data, stored in the industry-standard TMX format, directly into the LLM translation process. This helps improve the accuracy and consistency of the translations by using high-quality human-translated content.

- Prompt engineering capabilities – The solution showcases the power of prompt engineering, demonstrating how LLMs can be steered to produce more natural and contextual translations by carefully crafting the input prompts. This includes the ability to incorporate custom terminology and domain-specific knowledge.

- Evaluation metrics – The solution includes standard translation quality evaluation metrics, such as BLEU, BERT Score, METEOR, and CHRF, to help you assess the quality and effectiveness of the LLM-powered translations compared to your existing machine translation workflows.

As the industry continues to explore the use of LLMs, this solution can help you gain valuable insights and data to determine if LLMs can become a viable and valuable option for your content translation and localization workloads.

To dive deeper into the fast-moving field of LLM-based machine translation on AWS, check out the following resources:

- How 123RF saved over 90% of their translation costs by switching to Amazon Bedrock

- Video auto-dubbing using Amazon Translate, Amazon Bedrock, and Amazon Polly

- Multi-Model agentic & reflective translation workflow in Amazon Bedrock

About the Authors

Narcisse Zekpa is a Sr. Solutions Architect based in Boston. He helps customers in the Northeast U.S. accelerate their business transformation through innovative and scalable solutions on the AWS Cloud. He is passionate about enabling organizations to transform their business using advanced analytics and AI. When Narcisse is not building, he enjoys spending time with his family, traveling, running, cooking, and playing basketball.

Ajeeb Peter is a Principal Solutions Architect with Amazon Web Services based in Charlotte, North Carolina, where he guides global financial services customers to build highly secure, scalable, reliable, and cost-efficient applications on the cloud. He brings over 20 years of technology experience in software development, architecture, and analytics from industries such as finance and telecom.
