Multimodal foundation models are increasingly important in artificial intelligence because they let systems process and integrate multiple forms of data, such as images, text, and audio, to address diverse tasks. However, these systems face significant challenges. Existing models often struggle to generalize across a wide range of modalities and tasks because they are trained on limited datasets and a small set of modalities. In addition, many current architectures suffer from negative transfer, where performance on some tasks deteriorates as new modalities are added. These challenges limit scalability and consistency, underscoring the need for frameworks that unify diverse data representations while preserving task performance.

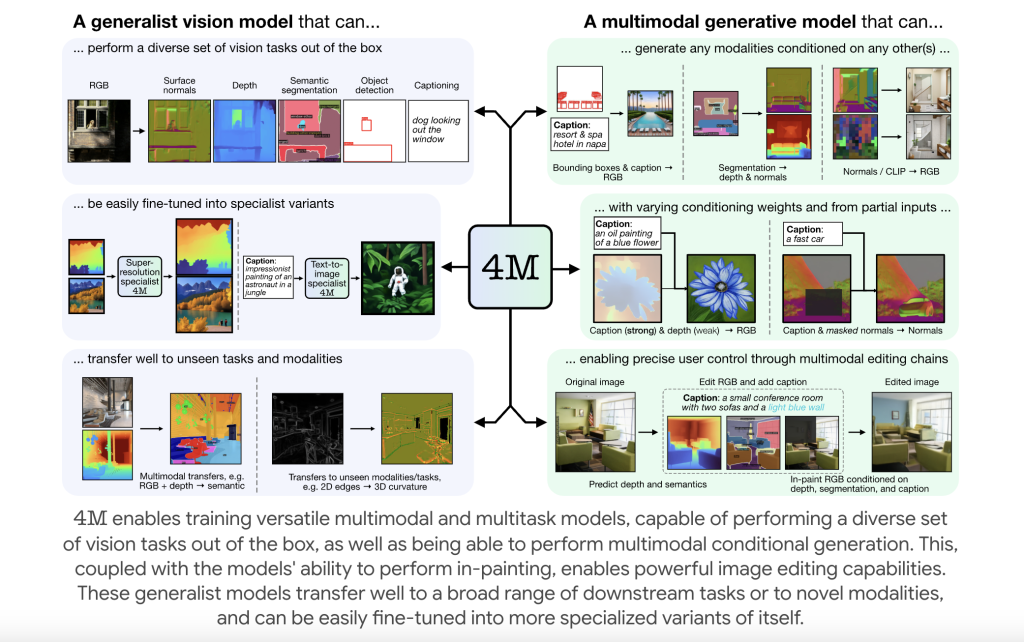

Researchers at EPFL have introduced 4M, an open-source framework designed to train versatile and scalable multimodal foundation models that extend beyond language. 4M addresses the limitations of existing approaches by enabling predictions across diverse modalities, integrating data from sources such as images, text, semantic features, and geometric metadata. Unlike traditional frameworks that cater to a narrow set of tasks, 4M expands to support 21 modalities, three times more than many of its predecessors.

A core innovation of 4M is its use of discrete tokenization, which converts diverse modalities into a unified sequence of tokens. This unified representation allows the model to leverage a Transformer-based architecture for joint training across multiple data types. By simplifying the training process and removing the need for task-specific components, 4M achieves a balance between scalability and efficiency. As an open-source project, it is accessible to the broader research community, fostering collaboration and further development.
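To make the idea of a unified token sequence concrete, here is a minimal sketch. It is not 4M's actual code: the `Token` record, the `to_unified_sequence` helper, and the token ids are all hypothetical, and the sketch assumes each modality has already been turned into a list of integer ids by its own tokenizer.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Token:
    """One entry in the unified sequence (hypothetical layout, not 4M's)."""
    modality: str  # which modality the token came from
    index: int     # id within that modality's own vocabulary
    position: int  # position within that modality's token list


def to_unified_sequence(tokenized: Dict[str, List[int]]) -> List[Token]:
    """Flatten per-modality token ids into one list of tagged tokens."""
    sequence = []
    for modality, ids in tokenized.items():
        for position, index in enumerate(ids):
            sequence.append(Token(modality, index, position))
    return sequence


# Made-up ids standing in for the output of modality-specific tokenizers.
example = {
    "rgb": [17, 402, 9, 88],
    "caption": [5, 23, 7],
    "depth": [301, 12],
}
unified = to_unified_sequence(example)
print(len(unified), unified[4])
```

Tagging each token with its modality and position is one simple way to let a single Transformer tell the sources apart; the real framework may encode this information differently.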

Technical Details and Advantages

The 4M framework uses an encoder-decoder Transformer architecture tailored for multimodal masked modeling. During training, each modality is tokenized with a tokenizer suited to its data type. For instance, images are tokenized with spatial discrete VAEs, while text and structured metadata are processed with a WordPiece tokenizer. Because every modality ends up as a sequence of discrete tokens, the same model can consume and predict all of them.
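The masked-modeling setup can be pictured as follows: from all tokens of a sample, a random subset is shown to the encoder and a disjoint subset is held out for the decoder to predict. The sketch below illustrates only that sampling step. The function name, the `(modality, position, id)` tuples, and the budget sizes are assumptions for illustration, not values or APIs from 4M.

```python
import random


def sample_masked_example(tokens, num_inputs, num_targets, seed=None):
    """Split tokens into visible inputs and disjoint prediction targets."""
    if num_inputs + num_targets > len(tokens):
        raise ValueError("input and target budgets exceed the number of tokens")
    rng = random.Random(seed)
    order = list(range(len(tokens)))
    rng.shuffle(order)
    # The first slice becomes encoder inputs, the next slice decoder targets.
    input_idx = sorted(order[:num_inputs])
    target_idx = sorted(order[num_inputs:num_inputs + num_targets])
    inputs = [tokens[i] for i in input_idx]
    targets = [tokens[i] for i in target_idx]
    return inputs, targets


# Hypothetical (modality, position, token_id) tuples for one training sample.
tokens = [
    ("rgb", 0, 17), ("rgb", 1, 402), ("rgb", 2, 9), ("rgb", 3, 88),
    ("caption", 0, 5), ("caption", 1, 23), ("caption", 2, 7),
    ("depth", 0, 301), ("depth", 1, 12),
]
inputs, targets = sample_masked_example(tokens, num_inputs=4, num_targets=3, seed=0)
print("encoder sees:", inputs)
print("decoder predicts:", targets)
```

Because inputs and targets are drawn from all modalities at once, any modality can end up as conditioning or as a prediction target, which is what allows a single model to map between them.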

One notable feature of 4M is its capability for fine-grained and controllable data generation. By conditioning outputs on specific modalities, such as human poses or metadata, the model provides a high degree of control over the generated content. Additionally, 4M’s cross-modal retrieval capabilities allow for queries in one modality (e.g., text) to retrieve relevant information in another (e.g., images).
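Cross-modal retrieval is commonly implemented by embedding the query and the candidates in a shared vector space and ranking candidates by similarity. The sketch below assumes that setup; the article does not say how 4M computes its embeddings, so the vectors, file names, and the `retrieve` helper are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, candidates, top_k=1):
    """Return the names of the top_k candidates most similar to the query."""
    ranked = sorted(
        candidates.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


# Hypothetical embeddings: a text query and three images in a shared space.
text_query = [0.9, 0.1, 0.0]
image_embeddings = {
    "beach.jpg": [1.0, 0.0, 0.1],
    "forest.jpg": [0.0, 1.0, 0.2],
    "city.jpg": [0.1, 0.2, 1.0],
}
print(retrieve(text_query, image_embeddings, top_k=2))  # ['beach.jpg', 'city.jpg']
```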

The framework’s scalability is another strength. Trained on large datasets such as COYO-700M and CC12M, 4M incorporates more than 0.5 billion samples and scales up to three billion parameters. By compressing dense data into sparse token sequences, it reduces memory and compute requirements, making it a practical choice for complex multimodal tasks.

Results and Insights

In evaluations, 4M performed robustly across 21 modalities without sacrificing results relative to specialized models. For instance, 4M’s XL model achieved a semantic segmentation mIoU score of 48.1, matching or exceeding benchmarks while handling three times as many tasks as earlier models.
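For readers unfamiliar with the metric, mean intersection over union (mIoU) averages, over classes, the overlap between predicted and ground-truth pixels divided by their union. The sketch below shows the standard definition on flat label lists. The exact evaluation protocol behind the reported 48.1 (dataset, ignored labels, resolution) is not given in this article, so this is illustrative only; scores like 48.1 are typically the value below multiplied by 100.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over classes that appear in the prediction or the target."""
    ious = []
    for c in range(num_classes):
        intersection = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both prediction and target
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example: six "pixels", three classes.
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
# Per-class IoU is 1/3, 2/3 and 1/2, so the mean is about 0.5.
print(round(mean_iou(pred, target, num_classes=3), 3))
```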

The framework also excels in transfer learning. Tests on downstream tasks, such as 3D object detection and multimodal semantic segmentation, show that 4M’s pretrained encoders maintain high accuracy across both familiar and novel tasks. These results highlight its potential for applications in areas like autonomous systems and healthcare, where integrating multimodal data is critical.

Conclusion

The 4M framework marks a significant step forward in the development of multimodal foundation models. By tackling scalability and cross-modal integration challenges, EPFL’s contribution sets the stage for more flexible and efficient AI systems. Its open-source release encourages the research community to build on this work, pushing the boundaries of what multimodal AI can achieve. As the field evolves, frameworks like 4M will play a crucial role in enabling new applications and advancing the capabilities of AI.

Check out the Paper, Project Page, GitHub Page, Demo, and Blog. All credit for this research goes to the researchers of this project.

The post EPFL Researchers Releases 4M: An Open-Source Training Framework to Advance Multimodal AI appeared first on MarkTechPost.
