Imagine this familiar scene: it’s Friday evening, and your team is prepping a hot-fix release. The code passes unit tests, the sprint board is almost empty, and you’re already tasting weekend freedom. Suddenly, a support ticket pings: “Screen-reader users can’t reach the checkout button. The focus keeps looping back to the promo banner.” The clock is ticking, stress levels spike, and what should have been a routine push turns into a scramble.

Five years ago, issues like this were inconvenient. Today, they’re brand-critical. Lawsuits over inaccessible sites keep climbing, and social media “name-and-shame” threads can tank brand trust overnight.

That’s where AI in Accessibility Testing enters the picture. Modern machine-learning engines can crawl thousands of pages in minutes, flagging low-contrast text, missing alt attributes, or keyboard traps long before your human QA team could click through them all. More importantly, these tools rank issues by severity, so you fix what matters most first. Accessibility testing is no longer a nice-to-have; it’s a critical part of your release pipeline.

However, and this is key, AI isn’t magic pixie dust. Algorithms still miss context, nuance, and the lived experience of real people with disabilities. The smartest teams pair automated scans with human insight, creating a hybrid workflow that’s fast and empathetic. In this guide, you’ll learn how to strike that balance. We’ll explore leading AI tools, walk through implementation steps, share real-world wins and pitfalls, and answer the questions most leaders ask when they start this journey. By the end, you’ll have a clear roadmap for building an accessibility program that scales with your release velocity and your values.

Accessibility in 2025: The Stakes Keep Rising

Why the Pressure Is Peaking

- Regulators have sharpened their teeth.

  - European Accessibility Act (applies from 28 June 2025): Covers digital products and services across all EU member states; its harmonised standard, EN 301 549, builds on WCAG 2.1 AA.

  - U.S. DOJ ADA Title II Rule (April 2024): Adopts WCAG 2.1 Level AA as the technical standard for state and local government websites and mobile apps, with compliance deadlines in 2026 and 2027.

  - India’s RPwD Rules 2025 update: Mandates quarterly accessibility statements for any government-linked site or app.

- Legal actions have accelerated. UsableNet’s 2024 Litigation Report shows U.S. digital-accessibility lawsuits rose 15 % YoY, averaging one new case every working hour. Parallel class actions are now emerging in Canada, Australia, and Brazil.

- Users are voting with their wallets. A 2025 survey from the UK charity Scope found 52 % of disabled shoppers abandoned an online purchase in the past month due to barriers, representing £17 billion in lost spend for UK retailers alone.

- Inclusive design is proving its ROI. Microsoft telemetry reveals accessibility-first features like dark mode and live captions drive some of the highest net-promoter scores across all user segments.

Quick Reality Check

- Tougher regulations, higher penalties: financial fines routinely hit six figures, and reputation damage can cost even more.

- User expectations have skyrocketed: 79 % of homepages still fail contrast checks, and 71 % of disabled visitors bounce after a single bad experience.

- Competitive edge: teams that embed accessibility from sprint 0 enjoy faster page loads, stronger SEO, and measurable brand lift.

Takeaway: Annual manual audits are like locking your doors but leaving the windows open. AI-assisted testing offers 24/7 surveillance, provided you still invite people with lived experience to validate real-world usability.

From Manual to Machine: How AI Has Reshaped Testing

| Sno | Era | Typical Workflow | Pain Points | AI Upgrade |
|---|---|---|---|---|
| 1 | Purely Manual (pre-2018) | Expert testers run WCAG checklists page by page. | Slow, costly, inconsistent. | — |
| 2 | Rule-Based Automation | Linters and static analyzers scan code for known patterns. | Catch ~30 % of issues; misses anything contextual. | Adds early alerts but still noisy. |
| 3 | AI-Assisted (2023-present) | ML models evaluate visual contrast, generate alt text, and predict keyboard flow. | Needs human validation for edge cases. | Real-time remediation and smarter prioritization. |

Independent studies show fully automated tools still miss about 70 % of user-blocking barriers. That’s why the winning strategy is hybrid testing: let algorithms cover the broad surface area, then let people verify real-life usability.

What AI Can and Can’t Catch

AI’s Sweet Spots

- Structural errors: missing form labels, empty buttons, incorrect ARIA roles.

- Visual contrast violations: color ratios below 4.5 : 1 pop up instantly.

- Keyboard traps: focus indicators and tab order problems appear in seconds.

- Alt-text gaps: bulk-identify images without descriptions.
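To show what a structural check boils down to, here is a deliberately simplified, rule-based sketch. The function name findStructuralGaps and its rule labels are hypothetical; real engines such as axe-core also resolve aria-labelledby, title attributes, images nested inside buttons, and much more.

```javascript
// Simplified rule-based checks for two common structural errors.
// Hypothetical helper; real engines handle many more naming sources.
function findStructuralGaps(root) {
  const issues = [];
  for (const img of root.querySelectorAll('img')) {
    // alt="" is valid for decorative images; only a missing attribute is an error
    if (!img.hasAttribute('alt')) issues.push({ rule: 'img-missing-alt', el: img });
  }
  for (const btn of root.querySelectorAll('button')) {
    // Accessible name approximated as aria-label, else visible text
    const name = (btn.getAttribute('aria-label') || btn.textContent || '').trim();
    if (!name) issues.push({ rule: 'button-empty-name', el: btn });
  }
  return issues;
}

// In a browser console, summarise the findings for the current page.
if (typeof document !== 'undefined') {
  console.table(findStructuralGaps(document).map(i => ({
    rule: i.rule,
    html: i.el.outerHTML.slice(0, 80),
  })));
}
```

Because the helper only calls querySelectorAll, hasAttribute, getAttribute, and textContent, it can also run against any DOM-like object in a unit test.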

AI’s Blind Spots

- Contextual meaning: Alt text that reads “image1234” technically passes but tells the user nothing.

- Logical UX flows: AI can’t always tell if a modal interrupts user tasks.

- Cultural nuance: Memes or slang may require human cultural insight.

Consequently, think of AI as a high-speed scout: it maps the terrain quickly, but you still need seasoned guides to navigate tricky passes.
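The “image1234” problem is easy to reproduce: a rule that only checks whether alt exists will pass it. Below is a minimal heuristic sketch (the function name looksMeaningless and its patterns are hypothetical, chosen for illustration) that flags alt text resembling file names, IDs, or generic placeholders. It narrows the pile for a human reviewer; it cannot judge whether a description is actually accurate.

```javascript
// Hypothetical heuristic: flag alt text that exists but probably says nothing.
// Catches obvious placeholders only; a human still judges real meaning.
const GENERIC_WORDS = new Set(['image', 'img', 'photo', 'picture', 'graphic', 'icon', 'banner']);

function looksMeaningless(alt) {
  if (alt === null || alt === undefined) return false; // missing alt is a separate rule
  const text = alt.trim().toLowerCase();
  if (text === '') return false; // alt="" is valid for decorative images
  if (/\.(png|jpe?g|gif|svg|webp)$/.test(text)) return true; // file name, e.g. "hero.jpg"
  if (/^(img|image|dsc|photo)[-_ ]?\d+$/.test(text)) return true; // IDs, e.g. "image1234"
  if (/^\d+$/.test(text)) return true; // bare numbers
  return GENERIC_WORDS.has(text); // a single generic word, e.g. "photo"
}

// In a browser console, list suspicious images on the current page.
if (typeof document !== 'undefined') {
  for (const img of document.querySelectorAll('img[alt]')) {
    if (looksMeaningless(img.getAttribute('alt'))) console.warn('Suspicious alt text:', img);
  }
}
```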

Spotlight on Leading AI Accessibility Tools (2025 Edition)

| Sno | Tool | Best For | Signature AI Feature | Ballpark Pricing* |
|---|---|---|---|---|
| 1 | axe DevTools | Dev teams in CI/CD | “Intelligent Guided Tests” ask context-aware questions during scans. | Free core, paid Pro. |
| 2 | Siteimprove | Enterprise websites | “Accessibility Code Checker” blocks merges with WCAG errors. | Quote-based. |
| 3 | EqualWeb | Quick overlays + audits | Instant widget fixes common WCAG 2.2 issues. | From $39/mo. |
| 4 | accessiBe | SMBs needing hands-off fixes | 24-hour rescans plus keyboard-navigation tuning. | From $49/mo. |
| 5 | UserWay | Large multilingual sites | Over 100 AI improvements in 50 languages. | Freemium tiers. |
| 6 | Allyable | Dev-workflow integration | Pre-deploy scans and caption generation. | Demo, tiered pricing. |
| 7 | Google Lighthouse | Quick page snapshots | Rule-based audits powered by axe-core (not ML); open-source CLI and Chrome DevTools integration. | Free. |
| 8 | Microsoft Accessibility Insights | Windows & web apps | “Ask Accessibility” AI assistant explains guidelines in plain English. | Free. |

*Pricing reflects public tiers as of August 2025.

Real-life Example: When a SaaS retailer plugged Siteimprove into its GitHub Actions pipeline, accessibility errors on mainline branches dropped by 45 % within one quarter. Developers loved the instant feedback, and the legal team felt calmer overnight.

Step‑by‑Step: Embedding AI into Your Workflow

Below, each phase notes where automation, and in some tools machine learning, does the heavy lifting.

Step 1: Run a Baseline Audit

- Launch axe DevTools or Lighthouse; both run rule-based checks (Lighthouse’s accessibility audits are powered by axe-core) that flag structural issues such as missing labels and low-contrast text.

- Export the JSON/HTML report; axe-core tags each violation with an impact level (minor, moderate, serious, or critical), so you know what to fix first.
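If you export axe-core’s JSON results, each entry in the violations array carries an id, an impact level, a short help text, and the list of affected nodes. The sketch below (prioritize is a hypothetical helper, not part of axe) sorts that list most severe first, then by how many elements each rule affects.

```javascript
// Rank axe-core violations: most severe impact first, then most affected elements.
// Assumes an axe-core results object: { violations: [{ id, impact, help, nodes }] }.
const IMPACT_RANK = { critical: 0, serious: 1, moderate: 2, minor: 3 };

function prioritize(results) {
  return results.violations
    .map(v => ({
      id: v.id,
      impact: v.impact ?? 'minor', // treat a missing impact as lowest priority
      help: v.help,
      count: v.nodes.length,
    }))
    .sort((a, b) =>
      (IMPACT_RANK[a.impact] - IMPACT_RANK[b.impact]) || (b.count - a.count));
}
```

In Node, you could load the report with JSON.parse(fs.readFileSync(path, 'utf8')) and print the ranked list with console.table().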

Step 2: Set Up Continuous Monitoring

- Choose Siteimprove, EqualWeb, UserWay, or Allyable.

- These platforms crawl your site with computer-vision and NLP models that detect new WCAG violations soon after content changes.

- Schedule daily or weekly crawls.

- Turn on email/Slack alerts that use AI triage to group similar issues so your inbox isn’t flooded.
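Vendors implement their own alert triage, some of it ML-based. As a rough, non-ML illustration of the idea, this sketch collapses per-page findings into one line per rule. The { url, ruleId } finding shape and the buildDigest name are assumptions for the example, not any vendor’s format.

```javascript
// Hypothetical digest builder: collapse many per-page findings into one
// line per rule, so a noisy crawl becomes a short Slack/email summary.
// Finding shape { url, ruleId } is an assumption, not a vendor format.
function buildDigest(findings) {
  const byRule = new Map(); // ruleId -> Set of affected URLs
  for (const { url, ruleId } of findings) {
    if (!byRule.has(ruleId)) byRule.set(ruleId, new Set());
    byRule.get(ruleId).add(url); // Set ignores repeat hits on the same page
  }
  return [...byRule]
    .sort((a, b) => b[1].size - a[1].size) // widest-spread rule first
    .map(([ruleId, urls]) => `${ruleId}: ${urls.size} page(s), e.g. ${[...urls][0]}`)
    .join('\n');
}
```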

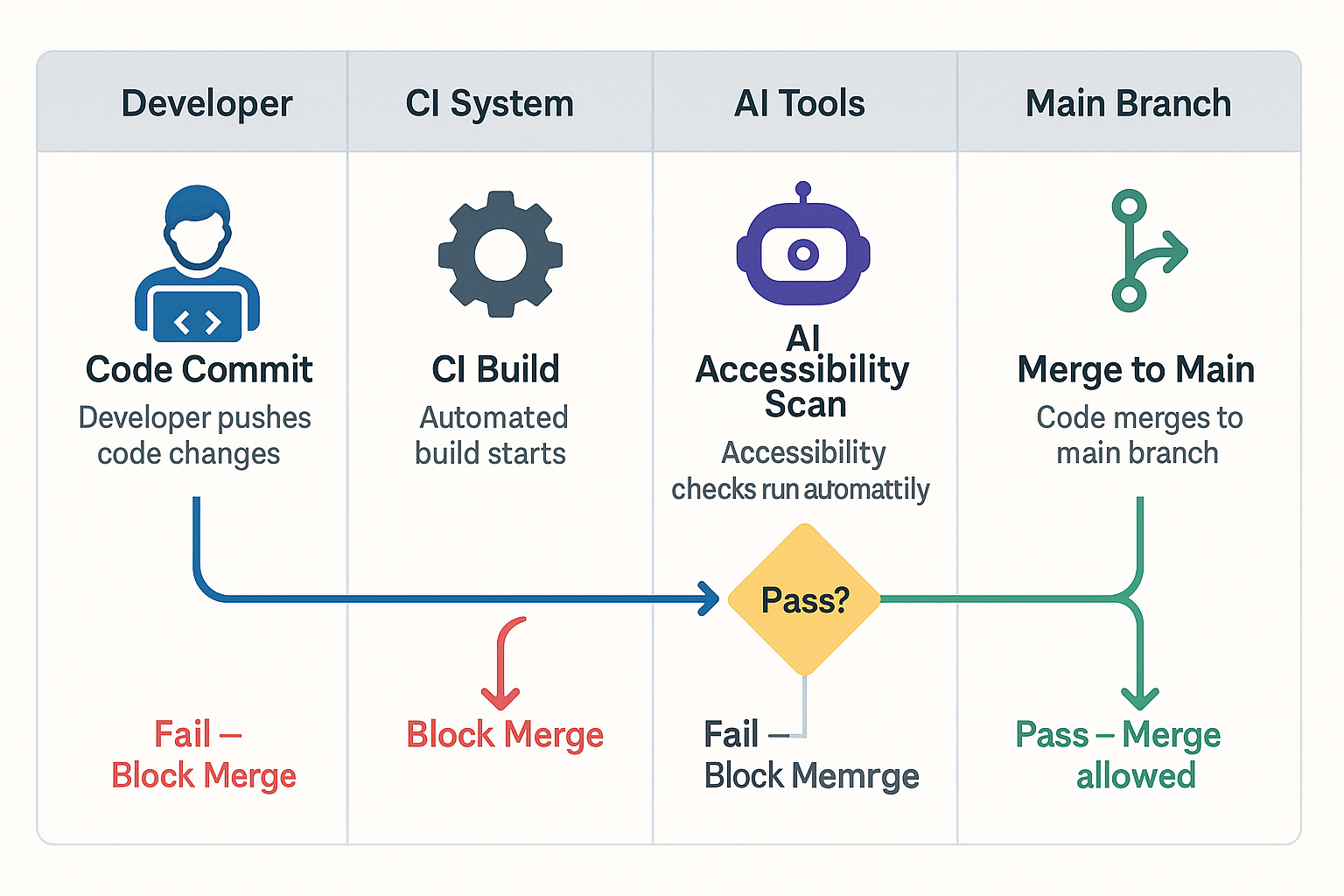

Step 3: Add an Accessibility Gate to CI/CD

- Install the CLI for your chosen tool (e.g., @axe-core/cli for axe-core).

- During each pull request, the CLI scans the rendered DOM in a headless browser; if it finds critical violations, the build fails automatically.
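If your CLI only reports violations, a small script can decide which ones should block the merge. This sketch assumes a single axe-core results object (if your tool writes an array of them, check each) and a hypothetical blockingViolations helper; the failOn threshold is a team choice, not an axe default.

```javascript
// Minimal CI gate sketch: block the merge when any violation meets or exceeds
// a chosen impact level. Assumes an axe-core results object:
// { violations: [{ id, impact, nodes }] }. Helper name is hypothetical.
const SEVERITY = ['minor', 'moderate', 'serious', 'critical'];

function blockingViolations(results, failOn = 'serious') {
  const min = SEVERITY.indexOf(failOn);
  if (min === -1) throw new Error(`Unknown impact level: ${failOn}`);
  // A null or unknown impact maps to -1 and never blocks
  return results.violations.filter(v => SEVERITY.indexOf(v.impact) >= min);
}

// Example wiring in a Node CI step (report path is a placeholder):
//   const results = JSON.parse(require('fs').readFileSync('axe-results.json', 'utf8'));
//   const blocking = blockingViolations(results);
//   if (blocking.length > 0) {
//     console.error('Blocking violations:', blocking.map(v => v.id).join(', '));
//     process.exitCode = 1; // non-zero exit fails the pipeline step
//   }
```

Starting at serious and tightening later keeps the gate from blocking every PR on day one.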

Step 4: Apply Temporary Overlays (Optional)

- Deploy an overlay widget containing on-page machine-learning scripts that:

  - Auto-generate alt text (via computer vision)

  - Reflow layouts for better keyboard focus

  - Offer on-the-fly colour-contrast adjustments
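Under the hood, a contrast adjustment can be as simple as nudging the text colour until it meets the ratio. This is a sketch under stated assumptions (a light background, colours as [r, g, b] arrays, hypothetical function names), not how any particular overlay works; fixing the source CSS remains the better long-term remedy.

```javascript
// Sketch of a contrast "auto-fix": blend the text colour toward black
// until it reaches the target ratio. Function names are illustrative.
function relLuminance([r, g, b]) {
  // WCAG relative luminance: linearise each sRGB channel, then weight
  const lin = v => {
    v /= 255;
    return v <= 0.03928 ? v / 12.92 : ((v + 0.055) / 1.055) ** 2.4;
  };
  return 0.2126 * lin(r) + 0.7152 * lin(g) + 0.0722 * lin(b);
}

function ratio(fg, bg) {
  const [hi, lo] = [relLuminance(fg), relLuminance(bg)].sort((a, b) => b - a);
  return (hi + 0.05) / (lo + 0.05);
}

function fixContrast(fg, bg, target = 4.5) {
  // Assumes a light background; on dark backgrounds you would lighten instead.
  for (let step = 0; step <= 20; step++) {
    const t = step / 20; // 0%, 5%, ... 100% blend toward black
    const candidate = fg.map(c => Math.round(c * (1 - t)));
    if (ratio(candidate, bg) >= target) return candidate; // smallest change that passes
  }
  return [0, 0, 0]; // black is the darkest option available
}
```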

Step 5: Conduct Monthly Manual Verification

- Use a tool like Microsoft Accessibility Insights. Its AI “Ask Accessibility” assistant guides human testers with context-aware prompts such as “Did this modal trap focus for you?”, reducing guesswork.

- Pair at least two testers who rely on screen readers; the tool’s speech‑to‑text AI can transcribe their feedback live into your ticketing system.

Step 6: Report Progress and Iterate

- Dashboards in Siteimprove or Allyable apply machine‑learning trend analysis to show which components most frequently cause issues.

- Predictive insights highlight pages likely to fail next sprint, letting you act before users ever see the problem.
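Commercial dashboards use their own trend models. A naive stand-in, shown here only to make the idea concrete, ranks components by total issues over recent scans and marks those whose latest count went up. The history format and the trendReport name are hypothetical.

```javascript
// Hypothetical trend report: rank components by total issues across recent
// scans and flag those whose count is rising. A naive heuristic, not ML.
// history: { componentName: [issueCountScan1, issueCountScan2, ...] }
function trendReport(history) {
  return Object.entries(history)
    .map(([component, counts]) => ({
      component,
      total: counts.reduce((sum, n) => sum + n, 0),
      // "Rising" = latest scan found more issues than the one before it
      rising: counts.length >= 2 && counts[counts.length - 1] > counts[counts.length - 2],
    }))
    .sort((a, b) => b.total - a.total);
}
```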

Benefits Table: AI vs. Manual vs. Hybrid

| Benefit | Manual Only | AI Only | Hybrid (Recommended) |
|---|---|---|---|
| Scan speed | Hours → Weeks | Seconds → Minutes | Minutes |
| Issue coverage | Broad, but limited by tester time | Machine-detectable issues only | Broadest |
| Context accuracy | High | Moderate | High |
| Cost efficiency | Low at scale | High | Highest |
| User trust | Moderate | Variable | High |

Takeaway: Hybrid testing keeps you fast without losing empathy or accuracy.

Real-World Wins: AI Improving Everyday Accessibility

- Netflix captions & audio descriptions now spin up in multiple languages long before a series drops, thanks to AI translation pipelines.

- Microsoft Windows 11 Live Captions converts any system audio into real-time on-screen captions, which is hugely helpful for Deaf and hard-of-hearing users.

- E-commerce brand CaseStudy.co saw a 12 % increase in mobile conversions after fixing keyboard navigation flagged by an AI scan.

Common Pitfalls & Ethical Watch-outs

- False sense of security. Overlays may mask code-level barriers without fixing them, leaving you open to lawsuits.

- Data bias. Models trained on limited datasets might miss edge cases; always test with diverse user groups.

- Opaque algorithms. Ask vendors how their AI makes decisions; you deserve transparency.

- Privacy concerns. If a tool captures real user data (e.g., screen reader telemetry), confirm it’s anonymized.

The Road Ahead: Predictive & Personalized Accessibility

- Generative UIs that reshape layouts based on user preferences in real time.

- Predictive testing: AI suggests component fixes while designers sketch wireframes.

- Voice-first interactions: Large language models respond conversationally, making sites more usable for people with motor impairments.

Sample Code Snippet: Quick Contrast Checker in JavaScript

Before You Paste the Script: 4 Quick Prep Steps

- Load the page you want to audit in Chrome, Edge, or any Chromium-based browser; make sure dynamic content has finished loading.

- Open Developer Tools by pressing F12 (or Cmd+Opt+I on macOS) and switch to the Console tab.

- Scope the test if needed (optional). If the content lives in an iframe, pick that frame from the JavaScript context drop-down at the top of the Console; in an SPA, navigate to the view you want to audit first.

- Clear existing logs with Ctrl+L (Cmd+K on macOS) so you can focus on fresh contrast warnings.

Now paste the script below and hit Enter to watch low-contrast elements appear in real time.

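```javascript
// Flag text elements that fail WCAG contrast thresholds (paste into the DevTools Console)
(() => {
  const AA_MIN = 4.5;   // WCAG AA, normal text (use 7 for AAA)
  const SEVERE_MIN = 3; // below this, report as a severe UX blocker
  const maxErrors = 50; // performance guard for very large pages

  // Off-screen 1x1 canvas: the browser normalises any CSS colour for us
  const ctx = Object.assign(document.createElement('canvas'), { width: 1, height: 1 })
    .getContext('2d', { willReadFrequently: true });
  function parseColor(css) {
    ctx.clearRect(0, 0, 1, 1);
    ctx.fillStyle = css;
    ctx.fillRect(0, 0, 1, 1);
    const [r, g, b, a] = ctx.getImageData(0, 0, 1, 1).data;
    return { r, g, b, a: a / 255 };
  }

  // Most elements have a transparent background, so use the nearest opaque ancestor
  function effectiveBackground(el) {
    for (let node = el; node; node = node.parentElement) {
      const bg = parseColor(getComputedStyle(node).backgroundColor);
      if (bg.a > 0) return bg;
    }
    return { r: 255, g: 255, b: 255, a: 1 }; // fall back to a white page
  }

  // WCAG relative luminance (sRGB transfer curve, then channel weights)
  function luminance({ r, g, b }) {
    const [R, G, B] = [r, g, b].map(v => {
      v /= 255;
      return v <= 0.03928 ? v / 12.92 : ((v + 0.055) / 1.055) ** 2.4;
    });
    return 0.2126 * R + 0.7152 * G + 0.0722 * B;
  }
  function contrast(c1, c2) {
    const [hi, lo] = [luminance(c1), luminance(c2)].sort((x, y) => y - x);
    return (hi + 0.05) / (lo + 0.05);
  }

  let errors = 0;
  for (const el of document.querySelectorAll('body *')) {
    if (errors >= maxErrors) break;
    // Skip hidden elements and elements without their own text
    const hasText = [...el.childNodes].some(n => n.nodeType === Node.TEXT_NODE && n.textContent.trim());
    if (!hasText || el.getClientRects().length === 0) continue;
    const ratio = contrast(parseColor(getComputedStyle(el).color), effectiveBackground(el));
    if (ratio >= AA_MIN) continue;
    errors++;
    console.groupCollapsed(`${ratio < SEVERE_MIN ? 'Severe' : 'AA failure'}: ${ratio.toFixed(2)}:1`);
    console.log(el);
    console.groupEnd();
  }
  console.info(`Contrast scan finished: ${errors} issue(s) logged (cap: ${maxErrors}).`);
})();
```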

Drop this script into your dev console for a quick gut-check, or wrap it in a Lighthouse custom audit to automate feedback.

Under the Hood: How This Script Works

- Colour parsing: The helper parseColor() hands any CSS colour (hex, rgb(), rgba(), named colours) to an off-screen canvas element so the browser normalises it. This avoids fragile regex hacks and accepts any colour syntax the browser’s canvas understands.

- Contrast math: WCAG uses relative luminance. We calculate that via the sRGB transfer curve, then compare foreground and background to get a single ratio.

- Severity levels: The script flags anything below 4.5 : 1 as a WCAG AA failure and anything below 3 : 1 as a severe UX blocker. Adjust those thresholds if you target AAA (7 : 1).

- Performance guard: A maxErrors parameter stops the scan after 50 hits, preventing dev-console overload on very large pages. Tweak or remove as needed.

- Console UX: console.groupCollapsed() keeps the output tidy by tucking each failing element into an expandable log group. You see the error list without drowning in noise.
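For reference, the contrast math follows the WCAG 2.x definition: each 8-bit sRGB channel is linearised, the channels are weighted into a relative luminance L, and the lighter luminance is divided by the darker, each offset by 0.05. Black text on white works out to the maximum of 21 : 1.

```latex
c = \frac{C_{8\text{bit}}}{255}, \qquad
c_{\text{lin}} =
\begin{cases}
  \dfrac{c}{12.92} & c \le 0.03928 \\[4pt]
  \left(\dfrac{c + 0.055}{1.055}\right)^{2.4} & c > 0.03928
\end{cases}

L = 0.2126\,R_{\text{lin}} + 0.7152\,G_{\text{lin}} + 0.0722\,B_{\text{lin}}

\text{CR} = \frac{L_{1} + 0.05}{L_{2} + 0.05}, \qquad L_{1} \ge L_{2}

\text{Black on white: } \text{CR} = \frac{1 + 0.05}{0 + 0.05} = 21
```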

Adapting for Other Environments

| S. No | Environment | What to Change | Why |
|---|---|---|---|
| 1 | Puppeteer CI | Run the scanner inside the page with page.evaluate() and return the results to Node; DOM and canvas APIs don’t exist in the Node context. | Enables headless Chrome scans in pipelines. |
| 2 | Jest Unit Test | Export the pure helpers (luminance(), contrast()) and assert on the returned ratios instead of console logs; jsdom has no layout engine or canvas pixel reads, so keep full-page scans in a real browser. | Makes failures visible in the test reporter. |
| 3 | Storybook Add-on | Wrap the scanner in a decorator that watches rendered components. | Flags contrast issues during component review. |

Conclusion

AI won’t single-handedly solve accessibility, yet it offers a turbo-boost in speed and scale that manual testing alone can’t match. By blending high-coverage scans with empathetic human validation, you’ll ship inclusive features sooner, avoid legal headaches, and most importantly, welcome millions of users who are too often left out.

Feeling inspired? Book a free 30-minute AI-augmented accessibility audit with our experts, and receive a personalized action plan full of quick wins and long-term strategy.

Frequently Asked Questions

Can AI fully replace manual accessibility testing?

In a word, no. AI catches the bulk of technical issues, but nuanced user flows still need human eyes and ears.

What accessibility problems does AI find fastest?

Structural markup errors, missing alt text, color‑contrast fails, and basic keyboard traps are usually flagged within seconds.

Is AI accessibility testing compliant with India’s accessibility laws?

Yes, most tools align with WCAG 2.2 and India’s Rights of Persons with Disabilities Act. Just remember to schedule periodic manual audits for regional nuances.

How often should I run AI scans?

Automated checks should run on every pull request and at least weekly in production to catch CMS changes.

Do overlay widgets make a site “fully accessible”?

Overlays can patch surface issues quickly, but they don’t always fix underlying code. Think of them as band‑aids, not cures.

The post AI in Accessibility Testing: The Future Awaits appeared first on Codoid.
