In today’s fast-paced development world, AI agents for automation testing are no longer science fiction; they’re transforming how teams ensure software quality. Imagine giving an intelligent “digital coworker” plain English instructions and having it automatically generate, execute, and even adapt test cases across your application. This blog explains what AI agents in testing are, how they differ from traditional automation, and why tech leads and QA engineers are excited about them. We’ll cover real-world examples (including SmolAgent from Hugging Face), beginner-friendly analogies, and the key benefits of AI-driven test automation. Whether you’re a test lead or an automation engineer, this post gives you a deep dive into the trend of using AI agents for automation testing. Let’s explore how these smart assistants take over the routine grind of regression and functional checks, freeing testers to focus on creative problem-solving.

What Is an AI Agent in Test Automation?

An AI testing agent is essentially an intelligent software entity dedicated to running and improving tests. Think of it as a “digital coworker” that can examine your app’s UI or API, spot bugs, and even adapt its testing strategy on the fly. Unlike a fixed script that only does exactly what it’s told, a true agent can decide what to test next based on what it learns. It combines AI technologies (like machine learning, natural language processing, or computer vision) under one umbrella to analyze the application and make testing decisions.

- Digital coworker analogy: As one guide notes, AI agents are “a digital coworker…with the power to examine your application, spot issues, and adapt testing scenarios on the fly”. In other words, they free human testers from repetitive tasks, allowing the team to focus on creative, high-value work.

- Intelligent automation: These agents can read the app (using tools like vision models or APIs), generate test cases, execute them, and analyze the results. Over time, they learn from outcomes to suggest better tests.

- Not a replacement, but a partner: AI agents aren’t meant to replace QA engineers. Instead, they handle the grunt work (regression suites, performance checks, etc.), while humans handle exploratory testing, test design, and complex scenarios.

In short, an AI agent in automation testing is an autonomous or semi-autonomous system that performs software testing tasks on its own or under human guidance. It uses ML models and AI logic to go beyond simple record-and-playback scripts, continuously learning and adapting as the app changes. The result is smarter, faster testing. What distinguishes it from traditional automation tools is the agentic part: its ability to make decisions and adapt.

How AI Agents Work in Practice

AI agents in testing operate in a loop: sense, decide, act, and learn. Here’s a simplified breakdown of how they function:

- Perception (Sense): The agent gathers information about the application under test. For a UI, this might involve using computer vision to identify buttons or menus. For APIs, it reads endpoints and data models. Essentially, the agent uses AI (vision, NLP, data analysis) to understand the app’s state, much like a human tester looking at a screen.

- Decision-Making (Plan): Based on what it sees, the agent chooses what to do next. For example, it may decide to click a “Submit” button or enter a certain data value. Unlike scripted tests, this decision is not pre-encoded; the agent evaluates possible actions and selects the one it predicts will be most informative.

- Action (Execute): The agent performs the chosen test actions. It might run a Selenium click, send an HTTP request, or invoke other tools. This step is how the agent actually exercises the application. Because it’s driven by AI logic, the same agent can test very different features without rewriting code.

- Analysis & Learning: After acting, the agent analyzes the results. Did the app respond correctly? Did any errors or anomalies occur? A true agent uses this feedback to learn and adapt future tests. For example, it might add a new test case if it finds a new form, or reduce redundant tests over time. This continuous loop of sensing, acting, and learning is what differentiates an agent from a simple automation script.
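
To make the loop concrete, here is a deliberately tiny, dependency-free sketch. It assumes a simulated application (a Python dictionary of pages and actions, with two planted bugs). In the “decide” step, a simple least-tried heuristic stands in for the ML or LLM model a real agent would use. All names are hypothetical. The sketch illustrates the sense, decide, act, learn cycle; it is not any particular tool’s implementation.

```python
from collections import defaultdict

# Hypothetical application under test: each page maps the actions it exposes
# to the page they lead to. "ERROR" marks two planted bugs.
APP = {
    "home": {"open_login": "login", "open_search": "search"},
    "login": {"submit_valid": "dashboard", "submit_empty": "ERROR"},
    "search": {"search_text": "results", "search_emoji": "ERROR"},
    "dashboard": {"logout": "home"},
    "results": {"back": "home"},
}


class ToyTestAgent:
    """A minimal sense-decide-act-learn loop over a simulated app."""

    def __init__(self, app):
        self.app = app
        self.tries = defaultdict(int)  # how often each (page, action) was exercised
        self.failures = set()          # (page, action) pairs that led to an error

    def sense(self, page):
        # Perception: list the actions available on the current page.
        return list(self.app.get(page, {}))

    def decide(self, page, actions):
        # Decision: prefer the least-exercised action (a crude novelty heuristic);
        # ties are broken alphabetically so runs are reproducible.
        return min(actions, key=lambda a: (self.tries.get((page, a), 0), a))

    def act(self, page, action):
        # Action: "execute" the step by following the simulated transition.
        return self.app[page][action]

    def learn(self, page, action, outcome):
        # Learning: update coverage counts and remember failures.
        self.tries[(page, action)] += 1
        if outcome == "ERROR":
            self.failures.add((page, action))

    def run(self, start="home", steps=20):
        page = start
        for _ in range(steps):
            actions = self.sense(page)
            if not actions:  # dead end: start a fresh session
                page = start
                continue
            action = self.decide(page, actions)
            outcome = self.act(page, action)
            self.learn(page, action, outcome)
            # After an error, restart from the home page, like a new session.
            page = start if outcome == "ERROR" else outcome
        return self.failures
```

Calling `ToyTestAgent(APP).run()` returns the set of (page, action) pairs that led to errors. The heuristic favors untried actions, so both planted bugs surface within the first few steps and every action gets exercised at least once.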

In practice, many so-called “AI agents” today may be simpler (often just advanced scripts with AI flair). But the goal is to move toward fully autonomous agents that can build, maintain, and improve test suites on their own. For example, an agent can “actively decide what tasks to perform based on its understanding of the app,” spotting likely failure points (like edge-case inputs) without being explicitly programmed to do so. It can then adapt if the app changes, updating its strategy without human intervention.

AI Agents vs. Traditional Test Automation

It helps to compare traditional automation with AI agent-driven testing. Traditional test automation relies on pre-written scripts that play back the same fixed actions (click here, enter that) on every run. Imagine a loyal robot following an old instruction manual: it’s fast and tireless, but it won’t notice if the UI changes or try new paths on its own. In contrast, AI agents behave more like a smart helper that learns and adapts.

- Script vs. Smarts: Traditional tools run pre-defined scripts only. AI agents learn from data and evolve their approach.

- Manual updates vs. Self-healing: Conventional automation breaks when the app changes (say, a button moves). AI agents can “self-heal” tests: they detect UI changes and adjust on the fly.

- Reactive vs. Proactive: Classic tests only do what they’re told. AI-driven tests can proactively spot anomalies or suggest new tests by recognizing patterns and trends.

- Human effort: Hand-coded test creation requires skilled programmers. With AI agents, testers can often work in natural language or high-level specs. For instance, one project lets testers write instructions in plain English, which the agent converts into Selenium code.

- Coverage: Pre-scripted tests cover only what’s been coded. AI agents can generate additional test cases automatically, using techniques like analyzing requirements or generating tests from user stories.
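
Self-healing is easier to picture with a small example. One common approach is to record several traits of an element (tag, visible text, attributes) when a test is written. If the primary locator later stops matching, the test falls back to the element that still shares the most of those traits. The sketch below applies this idea to a simulated DOM made of plain Python objects, not Selenium `WebElement`s. The scoring rule and the `min_score` threshold are illustrative assumptions. Commercial tools typically use richer signals or trained models.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """A simulated DOM element (not a Selenium WebElement)."""
    tag: str
    text: str = ""
    attrs: dict = field(default_factory=dict)


def similarity(el, recorded):
    # One point for each recorded trait the candidate still shares (the id is ignored).
    points = int(el.tag == recorded.tag) + int(el.text == recorded.text)
    points += sum(el.attrs.get(k) == v for k, v in recorded.attrs.items() if k != "id")
    return points


def find_with_healing(dom, recorded, min_score=2):
    """Return (element, healed). Try the recorded id first; if it no longer
    exists, fall back to the most similar element if it is similar enough."""
    wanted_id = recorded.attrs.get("id")
    for el in dom:
        if wanted_id is not None and el.attrs.get("id") == wanted_id:
            return el, False  # primary locator still works
    best = max(dom, key=lambda el: similarity(el, recorded), default=None)
    if best is not None and similarity(best, recorded) >= min_score:
        return best, True  # "healed": found via the fallback strategy
    raise LookupError("no element resembles the recorded one; flag for human review")


# The test was recorded against this button...
recorded = Element("button", "Submit", {"id": "submit-btn", "type": "submit", "class": "primary"})
# ...but a redesign renamed its id and added a class.
new_dom = [
    Element("a", "Help", {"id": "help"}),
    Element("button", "Submit", {"id": "btn-send", "type": "submit", "class": "primary cta"}),
]
element, healed = find_with_healing(new_dom, recorded)
print(element.attrs["id"], healed)  # btn-send True
```

The threshold, and the error raised when nothing is similar enough, matter. Silently “healing” onto the wrong element would hide a real regression, so low-confidence matches are better routed to a human.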

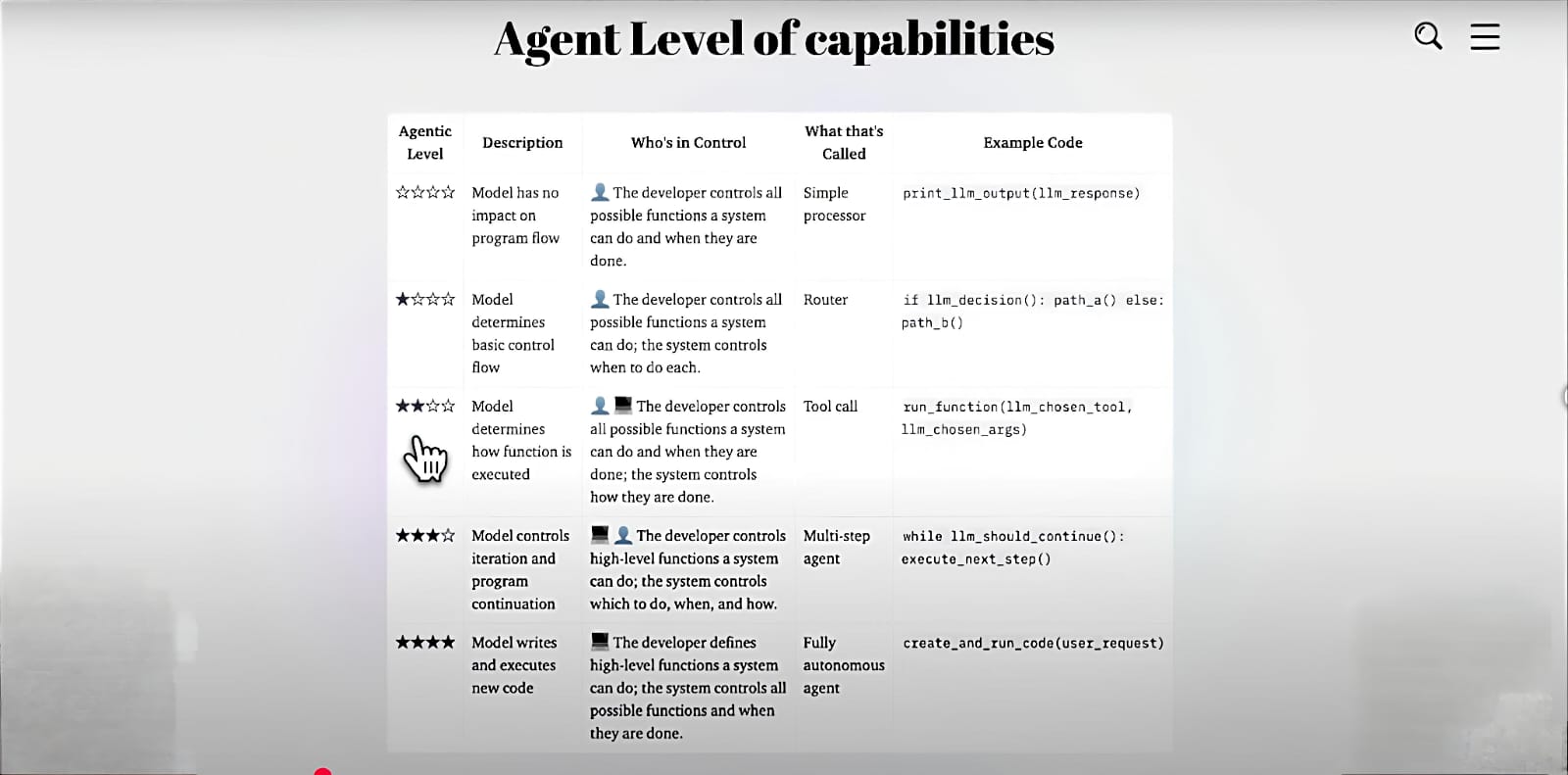

A handy way to see this is in a comparison table:

| S. No | Aspect | Traditional Automation | AI Agent Automation |
|---|---|---|---|
| 1 | Test Creation | Manual scripting with code (e.g. Selenium scripts) | Generated by the agent (often from high-level input or AI insights) |
| 2 | Maintenance | High: scripts break when UI or logic changes | Low: agents can self-heal tests and adapt to app changes |
| 3 | Adaptability | Static (fixed actions) | Dynamic: can choose new actions based on context |
| 4 | Learning | None: each run is independent | Continuous: the agent refines its strategy from past runs |
| 5 | Coverage | Limited by manual effort | Broader: agents can generate additional cases and explore edge cases |
| 6 | Required Skills | Automation coding (Java, Python, etc.) | Often just domain knowledge or natural-language inputs |
| 7 | Error Handling | Fails on any mismatch; requires a manual fix | Spots anomalies and adjusts (e.g. finds alternate paths) |
| 8 | Speed | Fast for repetitive runs, but test design is time-consuming | Can quickly create and run many tests, accelerating cycle time |

This table illustrates why many teams view AI agents as the “future of testing.” Agents can substantially reduce the manual overhead of test creation and maintenance while providing smarter coverage and resilience. One article puts it this way: traditional automation is like a robot following an instruction manual, whereas AI automation “actively learns and evolves,” which lets it upgrade tests on the fly as it learns from results.

Key Benefits of AI Agents in Automation Testing

Integrating AI agents into your QA process can yield powerful advantages. Here are some of the top benefits emphasized by industry experts and recent research:

- Drastically Reduced Manual Effort: AI agents can automate repetitive tasks (regression runs, data entry, etc.), freeing testers to focus on new features and exploration. They tackle the “tedious, repetitive tasks” so human testers can apply their creativity where it matters.

- Fewer Human Errors: By taking over routine scripting, agents reduce the mistakes that slip in during manual test coding. This leads to more reliable test runs and faster releases.

- Improved Test Coverage: Agents can automatically generate new test cases. They analyze app requirements or UI flows to cover scenarios that manual testers might miss. This wider net catches more bugs.

- Self-Healing Tests: One of the most-cited perks is the ability to self-adjust. For example, if a UI element’s position or name changes, an AI agent can often find and use the new element rather than failing outright. This cuts down on maintenance downtime.

- Continuous Learning: AI agents improve over time by learning from previous test runs and user interactions. As a result, test quality keeps getting better: the agent can refine its approach for higher accuracy in future cycles.

- Faster Time-to-Market: With agents generating tests and adapting quickly, development cycles speed up. Teams can execute comprehensive tests in minutes that might take hours manually, leading to quicker, confident releases.

- Proactive Defect Detection: Agents can act like vigilant watchdogs. They continuously scan for anomalies and predict likely failures by analyzing patterns in data. This foresight helps teams catch issues earlier and reduce costly late-stage defects.

- Better Tester Focus: With routine checks handled by AI, QA engineers and test leads can dedicate more effort to strategic testing (like exploratory or usability testing) that truly requires human judgment.
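
“Learning from previous runs” can start very simply. One low-tech form is risk-based test prioritization: run the tests most likely to fail first, so problems surface early in a CI cycle. The sketch below estimates each test’s failure rate from past results. The add-one smoothing and the test names are assumptions for illustration. Real agents may weigh far richer signals, such as code changes, logs, or model predictions.

```python
from collections import Counter


def prioritize(history, tests):
    """Order tests so that historically failure-prone ones run first.

    history: (test_name, passed) tuples collected from earlier CI runs.
    Uses add-one (Laplace) smoothing, so a test with no history gets a
    neutral estimate of 0.5 instead of being ignored.
    """
    runs, fails = Counter(), Counter()
    for name, passed in history:
        runs[name] += 1
        if not passed:
            fails[name] += 1

    def failure_rate(name):
        return (fails[name] + 1) / (runs[name] + 2)

    # Highest estimated failure rate first; ties broken by name for stable output.
    return sorted(tests, key=lambda t: (-failure_rate(t), t))


history = [("test_login", True)] * 9 + [("test_login", False)]
history += [("test_checkout", False)] * 3 + [("test_checkout", True)]
history += [("test_search", True)] * 5
print(prioritize(history, ["test_search", "test_login", "test_new_feature", "test_checkout"]))
# ['test_checkout', 'test_new_feature', 'test_login', 'test_search']
```

Appending each CI run’s results to `history` is what makes the ordering “learn”: a test that starts failing moves up on the next run, and a stable one gradually drifts down.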

These benefits often translate into higher product quality and significant ROI. As Kobiton’s guide notes, by 2025 AI testing agents will be “far more integrated, context-aware, and even self-healing,” helping CI/CD pipelines reach the next level. Ultimately, leveraging AI agents is about working smarter, not harder, in software quality assurance.

AI Agent Tools and Real-World Examples

Hugging Face’s SmolAgent in Action

A great example of AI agents in testing is Hugging Face’s SmolAgents framework, an open-source Python library that makes it simple to build and run AI agents with minimal code. For QA, a SmolAgents agent can be given tools that drive Selenium or Playwright to automate real user interactions on a website.

- English-to-Test Automation: One use case lets a tester simply write instructions in plain English, which SmolAgent translates into Selenium actions. For instance, a tester could type “log in with admin credentials and verify dashboard loads.” The agent interprets this, launches the browser, enters the data, and checks the result. This democratizes test writing, allowing even non-programmers to create tests.

- SmolAgent Project: There’s even a GitHub project titled “Automated Testing with Hugging Face SmolAgent”, which shows SmolAgent generating and executing tests across Selenium, PyTest, and Playwright. This real-world codebase demonstrates the concept: the agent writes the code to test UI flows, so nobody has to hand-craft each test.

- API Workflow Automation: Beyond UIs, SmolAgents can handle APIs too. In one demo, an agent used the API toolset to automatically create a sequence of API calls (even likened to a “Postman killer” in a recent video). It read API documentation or specs, then orchestrated calls to test endpoints. This means complex workflows (like user signup + order placement) can be tested by an agent without manual scripting.

- Vision and Multimodal Agents: SmolAgent supports vision models and multi-step reasoning. For example, an agent can “see” elements on a page (via computer vision) and decide to click or type. It can call external search tools or databases if needed. This makes it very flexible for end-to-end testing tasks.
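
Under the hood, frameworks like SmolAgents give a language model a set of named tools and then execute whatever calls the model decides to make. SmolAgents’ own API (for example, its `@tool` decorator and `CodeAgent` class) needs a live model. The sketch below therefore leaves the library out and shows only the mechanics, using the signup-plus-order workflow mentioned above. It makes three simplifications:

- The model’s reply is hardcoded as a JSON plan.
- The tools act on a fake in-memory backend instead of a real API or browser.
- Every name, including the `$<index>.<field>` reference syntax, is hypothetical.

```python
import json

# Hypothetical in-memory backend standing in for the real API under test.
USERS = {}
ORDERS = []


def signup(email: str) -> dict:
    """Tool: create a user and return its id."""
    user_id = len(USERS) + 1
    USERS[user_id] = email
    return {"user_id": user_id}


def place_order(user_id: int, item: str) -> dict:
    """Tool: place an order for an existing user."""
    if user_id not in USERS:
        return {"status": 404}
    ORDERS.append((user_id, item))
    return {"status": 201, "order_id": len(ORDERS)}


TOOLS = {"signup": signup, "place_order": place_order}

# What a model *might* reply to: "Sign up a new user, order a book for them,
# and check the order was created." Hardcoded here instead of calling an LLM.
# "$0.user_id" means "the user_id field of tool call 0's result".
PLAN = json.loads("""
[
  {"tool": "signup", "args": {"email": "qa@example.com"}},
  {"tool": "place_order", "args": {"user_id": "$0.user_id", "item": "book"}},
  {"assert": {"call": 1, "field": "status", "equals": 201}}
]
""")


def resolve(value, results):
    # Replace "$<call>.<field>" references with data from earlier tool results.
    if isinstance(value, str) and value.startswith("$"):
        call, key = value[1:].split(".", 1)
        return results[int(call)][key]
    return value


def execute(plan):
    """Run tool calls in order and evaluate the plan's checks."""
    results, verdicts = [], []
    for step in plan:
        if "tool" in step:
            args = {k: resolve(v, results) for k, v in step["args"].items()}
            results.append(TOOLS[step["tool"]](**args))
        else:
            check = step["assert"]
            verdicts.append(results[check["call"]][check["field"]] == check["equals"])
    return results, verdicts


results, verdicts = execute(PLAN)
print(verdicts)  # [True]
```

This design keeps the model away from side effects. The model only proposes steps; plain code resolves references, calls the tools, and evaluates the checks. That executor is also where logging or sandboxing would naturally go.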

In short, SmolAgent illustrates how an AI agent can act as a one-stop testing assistant. Instead of manually writing dozens of Selenium tests, a few natural-language prompts can produce a working starting suite.

Emerging AI Testing Tools

The ecosystem of AI-agent tools for QA is rapidly growing. Recent breakthroughs include specialized frameworks and services:

- UI Testing Agents: Tools like UI-TARS and Skyvern use vision-language models to handle web UI tests. For example, UI-TARS can take high-level test scenarios and visualize multi-step workflows, while Skyvern is designed for modern single-page apps (SPAs) without relying on DOM structure.

- Gherkin-to-Test Automation: Hercules is a tool that converts Gherkin-style test scenarios (plain English specs) into executable UI or API tests. This blurs the line between manual test cases and automation, letting business analysts write scenarios that the AI then automates.

- Natural Language to Code: Browser-Use and APITestGenie allow writing tests in simple English. Browser-Use can transform English instructions into Playwright code using GPT models. APITestGenie focuses on API tests, letting testers describe API calls in natural language and having the agent execute them.

- Open-Source Agents: Beyond SmolAgent, companies are exploring open frameworks. An example is a project that uses SmolAgent along with tools4AI and Docker to sandbox test execution. Such projects show it’s practical to integrate large language models, web drivers, and CI pipelines into a coherent agentic testing system.
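
It helps to see which part of a Gherkin-to-test pipeline the AI actually replaces. Classic BDD runners match each step against hand-written step-definition patterns, while an AI tool like Hercules interprets the step text with a model instead. The sketch below implements only the classic half. It parses a scenario, resolves `And`/`But` to the preceding keyword, and compiles matched steps into action tuples. Steps that no pattern matches are collected separately; these are the ones an agent would have to interpret. The scenario, step definitions, and action tuples are hypothetical.

```python
import re

SCENARIO = """
Scenario: Search and filter products
  Given I am on the home page
  When I search for "laptop"
  And I filter by brand "Acme"
  Then I see at least 1 result
  But I do not see an error banner
"""


def parse_steps(text):
    """Turn Gherkin lines into (keyword, step_text); And/But inherit the previous keyword."""
    steps, last = [], None
    for line in text.strip().splitlines():
        m = re.match(r"(Given|When|Then|And|But)\s+(.*)", line.strip())
        if not m:
            continue  # skip the "Scenario:" line and anything else that is not a step
        keyword, body = m.groups()
        if keyword in ("And", "But"):
            keyword = last
        steps.append((keyword, body))
        last = keyword
    return steps


# Hypothetical step definitions: regex pattern -> function building an action tuple.
STEP_DEFS = [
    (r"I am on the home page", lambda: ("open", "/")),
    (r'I search for "(.+)"', lambda query: ("type", "#search", query)),
    (r"I see at least (\d+) results?", lambda n: ("assert_min_results", int(n))),
]


def compile_steps(steps):
    """Map each step to an action; return (plan, unmatched_step_texts)."""
    plan, unmatched = [], []
    for _keyword, body in steps:
        for pattern, make_action in STEP_DEFS:
            m = re.fullmatch(pattern, body)
            if m:
                plan.append(make_action(*m.groups()))
                break
        else:
            unmatched.append(body)  # an AI agent would interpret these instead
    return plan, unmatched


plan, unmatched = compile_steps(parse_steps(SCENARIO))
print(plan)       # [('open', '/'), ('type', '#search', 'laptop'), ('assert_min_results', 1)]
print(unmatched)  # ['I filter by brand "Acme"', 'I do not see an error banner']
```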

Analogies and Beginner-friendly Example

If AI agents are still an abstract idea, consider this analogy: A smart assistant in the kitchen. Traditional automation is like a cook following a rigid cookbook. AI agents are like an experienced sous-chef who understands the cuisine, improvises when an ingredient is missing, and learns a new recipe by observing. You might say, “Set the table for a family dinner,” and the smart sous-chef arranges plates, pours water, and even tweaks the salad dressing recipe on-the-fly as more guests arrive. In testing terms, the AI agent reads requirements (the recipe), arranges tests (the table), and adapts to changes (adds more forks if the family size grows), all without human micromanagement.

Or think of autopilot in planes: the pilot (QA engineer) still oversees the flight, but the autopilot (AI agent) handles routine controls, leaving the pilot to focus on strategy. If turbulence hits (a UI change), the autopilot may adjust the flight path (self-heal the test) rather than giving up (failing the test). Over time, the system learns which routes (test scenarios) are most efficient.

These analogies highlight that AI agents are assistive, adaptive partners in the testing process, capable of both following instructions and going beyond them when needed.

How to Get Started with AI Agents in Your Testing

Adopting AI agents for test automation involves strategy as much as technology. Here are some steps and tips:

- Choose the Right Tools: Explore AI-agent frameworks like SmolAgents, LangChain, or vendor solutions (Webo.AI, etc.) that support test automation. Many can integrate with Selenium, Cypress, Playwright, or API testing tools. For instance, SmolAgents is a Python library whose agents can drive browsers through tools you give them.

- Define Clear Objectives: Decide what you want the agent to do. Start with a narrow use case (e.g. automate regression tests for a key workflow) rather than “test everything”.

- Feed Data to the Agent: AI agents learn from examples. Provide them with user stories, documentation, or existing test cases. For example, feeding an agent your acceptance criteria (like “user can search and filter products”) can guide it to generate tests for those features.

- Use Natural Language Prompts: If the agent supports it, describe tests in plain English or high-level pseudocode. As one developer did, you could write “Go to login page, enter valid credentials, and verify dashboard” and let the agent translate this into actual Selenium commands.

- Set Up Continuous Feedback: Run your agent in a CI/CD pipeline. When a test fails, examine why and refine the agent. Some advanced agents offer “telemetry” to monitor how they make decisions (for example, Hugging Face’s SmolAgent can log its reasoning steps).

- Gradually Expand Scope: Once comfortable, let the agent explore new areas. Encourage it to try edge cases or alternative paths it hasn’t seen. Many agents can use strategies like fuzzing inputs or crawling the UI to find hidden bugs.

- Monitor and Review: Always have a human in the loop, especially early on. Review the tests the agent creates to ensure they make sense. Over time, the agent’s proposals can become a trusted part of your testing suite.
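
Fuzzing, mentioned above as a way to probe edge cases, can be illustrated without any AI at all. The sketch below is a minimal mutation-based fuzzer. It tries a list of classic edge-case inputs plus random mutations of valid seeds, and it reports any input that violates the function’s contract instead of being rejected cleanly. The function under test, its planted bug, and the edge-case list are hypothetical. Agent tools may generate such inputs with an LLM or a dedicated fuzzing engine instead.

```python
import random


def parse_quantity(text: str) -> int:
    """Hypothetical function under test: should accept "1".."99" and raise
    ValueError otherwise. Planted bug: it never checks the lower bound."""
    value = int(text)  # int() raises ValueError for non-numeric text
    if value > 99:
        raise ValueError("quantity too large")
    return value


EDGE_CASES = ["", " ", "0", "-1", "99", "100", "1e3", "9" * 50, "1.5", "+5"]


def mutate(seed: str, rng: random.Random) -> str:
    # Simple mutations: insert a printable ASCII char, delete a char, or negate.
    choice = rng.randrange(3)
    if choice == 0:
        pos = rng.randrange(len(seed) + 1)
        return seed[:pos] + chr(rng.randrange(32, 127)) + seed[pos:]
    if choice == 1 and seed:
        pos = rng.randrange(len(seed))
        return seed[:pos] + seed[pos + 1:]
    return "-" + seed


def fuzz(func, seeds, rounds=200, seed=0):
    """Return (input, problem) pairs that break the contract: a result
    outside 1..99, or any exception other than ValueError."""
    rng = random.Random(seed)  # fixed seed keeps findings reproducible
    inputs = EDGE_CASES + [mutate(rng.choice(seeds), rng) for _ in range(rounds)]
    bugs = []
    for text in inputs:
        try:
            result = func(text)
        except ValueError:
            continue  # rejecting bad input is the expected behaviour
        except Exception as exc:  # anything else is a crash worth reporting
            bugs.append((text, type(exc).__name__))
            continue
        if not 1 <= result <= 99:
            bugs.append((text, f"returned {result}"))
    return bugs
```

Here `fuzz(parse_quantity, ["5", "42"])` reports inputs such as `"0"` and `"-1"`, which the planted bug lets through. A version that also checks the lower bound produces an empty report. That before/after comparison is a useful way to review fuzz findings an agent hands back.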

Throughout this process, think of the AI agent as a collaborator. It should relieve workload, not take over completely. For example, you might let an agent handle all regression testing, while your team designs exploratory test charters. By iterating and sharing knowledge (e.g., enriching the agent’s “toolbox” with specific functions like logging in or data cleanup), you’ll improve its effectiveness.

Take Action: Elevate Your Testing with AI Agents

AI agents are transforming test automation into a faster, smarter, and more adaptive process. The question is: are you ready to harness this power for your team? Start small: evaluate tools like SmolAgent, LangChain, or UI-TARS by assigning them a few simple test scenarios. Write those scenarios in plain English, let the agent generate and execute the tests, and measure the results. How much time did you save? What new bugs were uncovered?

You can also experiment with integrating AI agents into your DevOps pipeline or test out a platform like Webo.AI to see intelligent automation in action. Want expert support to accelerate your success? Our AI QA specialists can help you pilot AI-driven testing in your environment. We’ll demonstrate how an AI agent can boost your release velocity, reduce manual effort, and deliver better quality with every build.

Don’t wait for the future; start transforming your QA today.

Frequently Asked Questions

What exactly is an “AI agent” in testing?

An AI testing agent is an intelligent system (often LLM-based) that can autonomously perform testing tasks. It reads or “understands” parts of the application (UI elements, API responses, docs) and decides what tests to run next. The agent generates and executes tests, analyzes results, and learns from them, unlike a fixed automation script.

How are AI agents different from existing test automation tools?

Traditional tools require you to write and maintain code for each test. AI agents aim to learn and adapt: they can auto-generate test cases from high-level input, self-heal when the app changes, and continuously improve from past runs. In practice, agents often leverage the same underlying frameworks (e.g., Selenium or Playwright) but with a layer of AI intelligence controlling them.

Do AI agents replace human testers or automation engineers?

No. AI agents are meant to be assistants, not replacements. They handle repetitive, well-defined tasks and data-heavy testing. Human testers still define goals, review results, and perform exploratory and usability testing. As Kobiton’s guide emphasizes, agents let testers focus on “creative, high-value work” while the agent covers the tedious stuff.

Can anyone use AI agents, or do I need special skills?

Many AI agent tools are designed to be user-friendly. Some let you use natural language (English) for test instructions. However, understanding basic test design and being able to review the agent’s output is important. Tech leads should guide the process, and developers and QA engineers should oversee the integration and troubleshooting.

What’s a good beginner project with an AI agent?

Try giving the agent a simple web app and a natural-language test case. For example, have it test a login workflow. Provide it with the page URL and the goal (“log in as a user and verify the welcome message”). See how it sets up the Selenium steps on its own. The SmolAgent GitHub project is a great starting point for experimenting with code examples.

Are there limitations or challenges?

Yes. AI agents still need good guidance and data. They can sometimes make mistakes or produce nonsensical steps if not properly constrained. The quality of results depends on the AI model and the training data or examples you give it. Monitoring and continuous improvement are key. Security is also a concern, since running code-generating agents requires sandboxing. But the technology is rapidly improving, and many solutions include safeguards (like Hugging Face’s sandbox environments).

What’s the future of AI agents in QA?

Analysts predict AI agents will become more context-aware and even self-healing by 2025. We’ll likely see deeper integration into DevOps pipelines, with multi-agent systems coordinating to cover complex test suites. As one expert puts it, AI agents are not just automating yesterday’s tests; they’re “exploring new frontiers” in how we think about software testing.

The post AI Agents for Automation Testing: Revolutionizing Software QA appeared first on Codoid.
