Automation testing has revolutionized the way software teams deliver high-quality applications. By automating repetitive and critical test scenarios, QA teams achieve faster release cycles, fewer manual errors, and greater test coverage. But as these automation frameworks scale, so does the risk of accumulating technical debt in the form of flaky tests, poor structure, and inconsistent logic. Enter the code review, an essential quality gate that ensures your automation efforts remain efficient, maintainable, and aligned with engineering standards.

While code reviews are a well-established practice in software development, their value in automation testing is often underestimated. A thoughtful code review process helps catch potential bugs, enforce coding best practices, and share domain knowledge across teams. More importantly, it protects the integrity of your test suite by keeping scripts clean, robust, and scalable.

This comprehensive guide will help you unlock the full potential of automation code reviews. We’ll walk through 12 actionable best practices, highlight common mistakes to avoid, and explain how to integrate reviews into your existing workflows. Whether you’re a QA engineer, test automation architect, or team lead, these insights will help you elevate your testing strategy and deliver better software, faster.

Why Code Reviews Matter in Automation Testing

Code reviews are more than just a quality checkpoint; they’re a collaborative activity that drives continuous improvement. In automation testing, they serve several critical purposes:

- Ensure Reliability: Catch flaky or poorly written tests before they impact CI/CD pipelines.

- Improve Readability: Make test scripts easier to understand, maintain, and extend.

- Maintain Consistency: Align with design patterns like the Page Object Model (POM).

- Enhance Test Accuracy: Validate assertion logic and test coverage.

- Promote Reusability: Encourage shared components and utility methods.

- Prevent Redundancy: Eliminate duplicate or unnecessary test logic.

- Foster Collaboration: Facilitate cross-functional knowledge sharing.

Let’s now explore the best practices that ensure effective code reviews in an automation context.

Best Practices for Reviewing Test Automation Code

To ensure your automation tests are reliable and easy to maintain, code reviews should follow clear and consistent practices. These best practices help teams catch issues early, improve code quality, and make scripts easier to understand and reuse. Here are the key things to look for when reviewing automation test code.

1. Standardize the Folder Structure

Structure directly influences test suite maintainability. A clean and consistent directory layout helps team members locate and manage tests efficiently.

Example structure:

/tests
    /login
    /dashboard
/pages
/utils
/testdata

Apply consistent file naming conventions as well, such as test_login.py, HomePage.java, or user_flow_spec.js.

2. Enforce Descriptive Naming Conventions

Clear, meaningful names for tests and variables improve readability.

# Good

def test_user_can_login_with_valid_credentials():

# Bad

def test1():

Stick to camelCase or snake_case based on language standards, and avoid vague abbreviations.

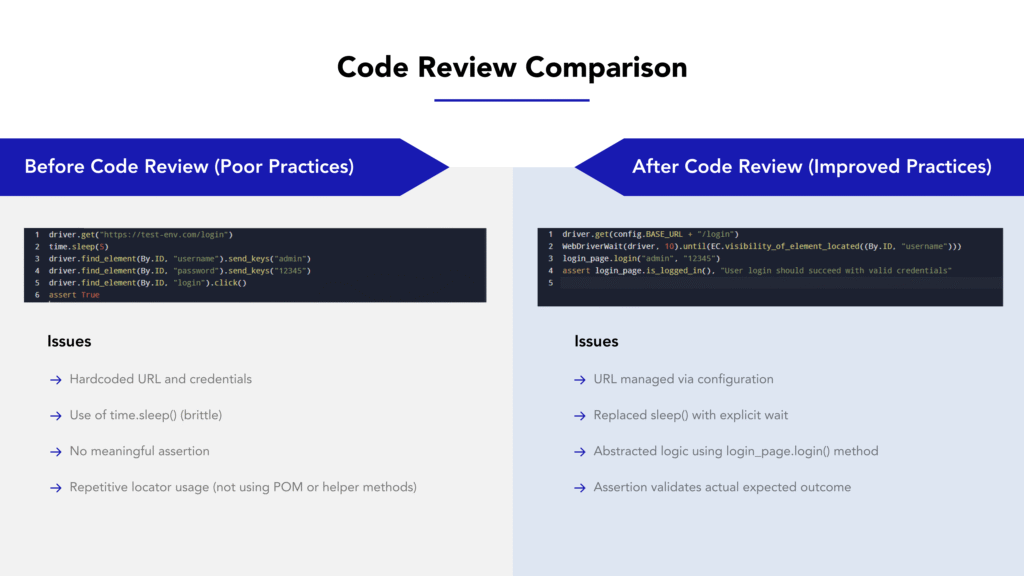

3. Eliminate Hard-Coded Values

Hard-coded inputs increase maintenance effort and reduce flexibility: every environment change means editing test code.

# Bad

driver.get("https://qa.example.com")

# Good

driver.get(config.BASE_URL)

Use config files, environment variables, or data-driven frameworks for flexibility and security.
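As a minimal sketch of the environment-variable approach, the module below reads its settings once and falls back to local defaults. The names `Settings`, `load_config`, and `TIMEOUT_SECONDS` are assumptions made for illustration, not part of any particular framework.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    """Values that tests read instead of hard-coding them."""
    base_url: str
    timeout_seconds: float


def load_config(env=None) -> Settings:
    """Build settings from environment variables, with local defaults."""
    # `env` can be any mapping, which makes the loader easy to test.
    env = os.environ if env is None else env
    return Settings(
        base_url=env.get("BASE_URL", "https://qa.example.com"),
        timeout_seconds=float(env.get("TIMEOUT_SECONDS", "10")),
    )


# A test would then call something like: driver.get(load_config().base_url)
```

Switching environments then becomes a matter of exporting a different BASE_URL in the CI job rather than editing test code.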

4. Validate Assertions for Precision

Assertions are your test verdicts, so make them count.

- Use descriptive messages.

- Avoid overly generic or redundant checks.

- Test both success and failure paths.

assert login_page.is_logged_in(), "User should be successfully logged in"
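To illustrate what a reviewer might look for, here is a small sketch that checks both the success and the failure path with specific assertions and descriptive messages. The `login` function and `LoginResult` class are hypothetical stand-ins for the application under test.

```python
from dataclasses import dataclass


@dataclass
class LoginResult:
    logged_in: bool
    error: str = ""


def login(username: str, password: str) -> LoginResult:
    """Hypothetical stand-in for the application under test."""
    if username == "alice" and password == "correct-password":
        return LoginResult(logged_in=True)
    return LoginResult(logged_in=False, error="Invalid username or password")


def test_valid_credentials_log_the_user_in():
    result = login("alice", "correct-password")
    assert result.logged_in, "User should be logged in with valid credentials"
    assert result.error == "", f"Expected no error, got: {result.error!r}"


def test_wrong_password_is_rejected_with_message():
    result = login("alice", "wrong-password")
    assert not result.logged_in, "User must not be logged in with a wrong password"
    assert result.error == "Invalid username or password", (
        f"Unexpected error text: {result.error!r}"
    )
```

Each assertion names one concrete outcome, so a failure message points straight at what went wrong.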

5. Promote Code Reusability

DRY (Don’t Repeat Yourself) is a golden rule in automation.

Refactor repetitive actions into:

- Page Object Methods

- Helper functions

- Custom utilities

This improves maintainability and scalability.
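A rough sketch of a Page Object method is shown below. To keep it runnable without a browser, it uses a hypothetical `FakeDriver` that only records actions; the locators and method names are likewise assumptions, and a real suite would wrap its own driver API.

```python
class FakeDriver:
    """Hypothetical stand-in for a browser driver; it records actions only."""

    def __init__(self):
        self.actions = []

    def type_text(self, locator, text):
        self.actions.append(("type", locator, text))

    def click(self, locator):
        self.actions.append(("click", locator))


class LoginPage:
    """Page Object: locators and the login steps live in one place."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#login-button"

    def __init__(self, driver):
        self.driver = driver

    def login(self, username, password):
        # Tests call this one method instead of repeating three steps each.
        self.driver.type_text(self.USERNAME, username)
        self.driver.type_text(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
```

If a locator changes, only `LoginPage` needs an update, and every test that calls `login()` keeps working.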

6. Handle Synchronization Properly

Flaky tests often stem from poor wait strategies.

Avoid: Thread.sleep(5000).

Prefer: Explicit waits like WebDriverWait or Playwright’s waitForSelector()

// Selenium 4: the timeout is passed as a java.time.Duration
new WebDriverWait(driver, Duration.ofSeconds(10)).until(ExpectedConditions.visibilityOfElementLocated(By.id("profile")));
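The idea behind an explicit wait can be sketched in a few lines of plain Python: poll a condition until it holds or a deadline passes. The helper name `wait_until` and its defaults are assumptions for illustration; in a real Selenium or Playwright suite you would normally rely on the wait utilities those libraries provide.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Condition not met within {timeout} seconds")
        # Sleep briefly between polls instead of blocking for a fixed period.
        time.sleep(interval)
```

Unlike a fixed sleep, this returns as soon as the condition is met and fails with a clear error when it never is.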

7. Ensure Test Independence

Each test should stand alone. Avoid dependencies on test order or shared state.

Use setup/teardown methods like @BeforeEach, @AfterEach, or fixtures to prepare and reset the environment.
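In Python's built-in unittest module, the equivalent hooks are `setUp` and `tearDown`. The sketch below assumes a hypothetical `ShoppingCart` object; the point is that each test gets a fresh instance and passes regardless of execution order.

```python
import unittest


class ShoppingCart:
    """Hypothetical object under test."""

    def __init__(self):
        self.items = []

    def add(self, item):
        self.items.append(item)


class CartTests(unittest.TestCase):
    def setUp(self):
        # A fresh cart for every test, so no test sees another test's items.
        self.cart = ShoppingCart()

    def tearDown(self):
        # Reset state even though setUp rebuilds it, to make cleanup explicit.
        self.cart.items.clear()

    def test_new_cart_is_empty(self):
        self.assertEqual(self.cart.items, [])

    def test_adding_an_item_stores_it(self):
        self.cart.add("book")
        self.assertEqual(self.cart.items, ["book"])
```

A quick check during review: would every test still pass if it were run alone or in reverse order?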

8. Review for Comprehensive Test Coverage

Confirm that the test:

- Covers the user story or requirement

- Validates both positive and negative paths

- Handles edge cases like empty fields or invalid input

Use tools like code coverage reports to back your review.
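One way to make coverage of edge cases visible in a review is a table of cases, sketched below. The validation rule in `is_valid_username` is a made-up example, not a requirement from any real product.

```python
def is_valid_username(value: str) -> bool:
    """Hypothetical rule: 3 to 20 characters; letters, digits, or underscores."""
    return 3 <= len(value) <= 20 and all(ch.isalnum() or ch == "_" for ch in value)


# Each row: input, expected result, and a label used in the failure message.
CASES = [
    ("alice_01", True, "typical valid name"),
    ("", False, "empty field"),
    ("ab", False, "below minimum length"),
    ("a" * 21, False, "above maximum length"),
    ("bad name!", False, "invalid characters"),
]


def test_username_validation_cases():
    for value, expected, label in CASES:
        assert is_valid_username(value) is expected, f"Failed case: {label}"
```

A reviewer can scan the table and spot a missing positive, negative, or boundary case at a glance.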

9. Use Linters and Formatters

Automated tools can catch many style issues before a human review.

Recommended tools:

- Python: flake8, black

- Java: Checkstyle, PMD

- JavaScript: ESLint

Integrate these into CI pipelines to reduce manual overhead.

10. Check Logging and Reporting Practices

Effective logging helps in root-cause analysis when tests fail.

Ensure:

- Meaningful log messages are included.

- Reporting tools like Allure or ExtentReports are integrated.

- Logs are structured (e.g., JSON format for parsing in CI tools).
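Structured logs can be produced with Python's standard logging module alone. The following is a minimal sketch; the `JsonFormatter` class, the `ui-tests` logger name, and the `test_name` field are assumptions chosen for illustration.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical extra field: the test that emitted the message.
        if hasattr(record, "test_name"):
            payload["test_name"] = record.test_name
        return json.dumps(payload)


def build_logger(name="ui-tests", stream=None):
    """Return a logger whose only handler writes JSON lines to `stream`."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False
    return logger
```

A test could then call `build_logger().info("Login failed", extra={"test_name": "test_login"})`, and a CI tool can parse each line as JSON.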

11. Verify Teardown and Cleanup Logic

Without proper cleanup, tests can pollute environments and cause false positives/negatives.

Check for:

- Browser closure

- State reset

- Test data cleanup

Use teardown hooks (@AfterTest, tearDown()) or automation fixtures.
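In Python, one way to guarantee cleanup is a context manager with `try`/`finally`, sketched below. `FakeBrowser` is a hypothetical stand-in for a real browser session so the example runs without any driver installed.

```python
from contextlib import contextmanager


class FakeBrowser:
    """Hypothetical stand-in for a real browser session."""

    def __init__(self):
        self.is_open = True

    def quit(self):
        self.is_open = False


@contextmanager
def browser_session():
    """Open a browser for one test and always close it afterwards."""
    browser = FakeBrowser()
    try:
        yield browser
    finally:
        # Runs whether the test body passed or raised.
        browser.quit()
```

When reviewing, check that cleanup sits in a `finally` block or a teardown hook, not at the end of the test body where a failed assertion would skip it.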

12. Review for Secure Credential Handling

Sensitive data should never be hard-coded.

Best practices include:

- Using environment variables

- Pulling secrets from vaults

- Masking credentials in logs

export TEST_USER_PASSWORD=secure_token_123
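On the test side, reading and masking such a variable might look like the sketch below. The helper names `get_secret` and `mask` are assumptions for illustration; teams that use a secrets vault would swap the lookup for their vault client.

```python
import os


def get_secret(name, env=None):
    """Read a required secret from the environment; fail fast if it is missing."""
    env = os.environ if env is None else env
    value = env.get(name)
    if not value:
        raise RuntimeError(f"Required environment variable {name} is not set")
    return value


def mask(value, visible=2):
    """Return a log-safe version of a secret, keeping only the last characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


# Example: log mask(get_secret("TEST_USER_PASSWORD")), never the raw value.
```

Failing fast on a missing variable gives a clearer error than a login test that fails later with an empty password.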

Who Should Participate in Code Reviews?

Effective automation code reviews require diverse perspectives:

- QA Engineers: Focus on test logic and coverage.

- SDETs or Automation Architects: Ensure framework alignment and reusability.

- Developers (occasionally): Validate business logic alignment.

- Tech Leads: Approve architecture-level improvements.

Encourage rotating reviewers to share knowledge and avoid bottlenecks.

Code Review Summary Table

| S. No | Area | Poor Practice | Best Practice |
|---|---|---|---|
| 1 | Folder Structure | All tests in one directory | Modular folders (tests, pages, etc.) |
| 2 | Assertion Logic | assertTrue(true) | Assert specific, meaningful outcomes |
| 3 | Naming | test1(), x, btn | test_login_valid(), login_button |
| 4 | Wait Strategies | Thread.sleep() | Explicit/Fluent waits |
| 5 | Data Handling | Hardcoded values | Config files or test data files |
| 6 | Credentials | Passwords in code | Use secure storage |

Common Challenges in Code Reviews for Automation Testing

Despite their benefits, automation test code reviews can face real-world obstacles that slow down processes or reduce their effectiveness. Understanding and addressing these challenges is crucial for making reviews both efficient and impactful.

1. Lack of Reviewer Expertise in Test Automation

Challenge: Developers or even fellow QA team members may lack experience in test automation frameworks or scripting practices, leading to shallow reviews or missed issues.

Solution:

- Pair junior reviewers with experienced SDETs or test leads.

- Offer periodic workshops or lunch-and-learns focused on reviewing test automation code.

- Use documentation and review checklists to guide less experienced reviewers.

2. Inconsistent Review Standards

Challenge: Without a shared understanding of what to look for, different reviewers focus on different things: some on formatting, others on logic, and some may approve changes with minimal scrutiny.

Solution:

- Establish a standardized review checklist specific to automation (e.g., assertions, synchronization, reusability).

- Automate style and lint checks using CI tools so human reviewers can focus on logic and maintainability.

3. Time Constraints and Review Fatigue

Challenge: In fast-paced sprints, code reviews can feel like a bottleneck. Reviewers may rush or skip steps due to workload or deadlines.

Solution:

- Set expectations for review timelines (e.g., review within 24 hours).

- Use batch review sessions for larger pull requests.

- Encourage smaller, frequent PRs that are easier to review quickly.

4. Flaky Test Logic Not Spotted Early

Challenge: A test might pass today but fail tomorrow due to timing or environment issues. These flakiness sources often go unnoticed in a code review.

Solution:

- Add comments in reviews specifically asking reviewers to verify wait strategies and test independence.

- Use pre-merge test runs in CI pipelines to catch instability.

5. Overly Large Pull Requests

Challenge: Reviewing 500 lines of code is daunting and leads to reviewer fatigue or oversights.

Solution:

- Enforce a limit on PR size (e.g., under 300 lines).

- Break changes into logical chunks—one for login tests, another for utilities, etc.

- Use “draft PRs” for early feedback before the full code is ready.
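A size limit can be checked automatically before review. The sketch below sums the added and deleted lines reported by `git diff --numstat`, which prints tab-separated added, deleted, and path columns and uses `-` for binary files. The function names and the 300-line default are assumptions taken from the example limit above.

```python
def changed_lines(numstat_output: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # Skip blank or malformed lines.
        added, deleted = parts[0], parts[1]
        if added == "-" or deleted == "-":
            continue  # Binary files have no line counts.
        total += int(added) + int(deleted)
    return total


def pr_within_limit(numstat_output: str, limit: int = 300) -> bool:
    """Return True when the change is under `limit` lines."""
    return changed_lines(numstat_output) < limit
```

A CI step could feed this the output of `git diff --numstat` against the target branch and post a warning rather than block the merge, since some large changes are legitimate.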

Conclusion

A strong source code review process is the cornerstone of sustainable automation testing. By focusing on code quality, readability, maintainability, and security, teams can build test suites that scale with the product and reduce future technical debt. Good reviews not only improve test reliability but also foster collaboration, enforce consistency, and accelerate learning across the QA and DevOps lifecycle. The investment in well-reviewed automation code pays dividends through fewer false positives, faster releases, and higher confidence in test results. Adopting these best practices helps teams move from reactive to proactive QA, ensuring that automation testing becomes a strategic asset rather than a maintenance burden.

Frequently Asked Questions

- Why are source code reviews important in automation testing?

They help identify issues early, ensure code quality, and promote best practices, leading to more reliable and maintainable test suites.

- How often should code reviews be conducted?

Ideally, code reviews should be part of the development process, conducted for every significant change or addition to the test codebase.

- Who should be involved in the code review process?

Involve experienced QA engineers, developers, and other stakeholders who can provide valuable insights and feedback.

- What tools can assist in code reviews?

Tools like GitHub, GitLab, Bitbucket, and code linters like pylint or flake8 can facilitate effective code reviews.

- Can I automate part of the code review process?

Yes. Use CI tools for linting, formatting, and running unit tests. Reserve manual reviews for test logic, assertions, and maintainability.

- How do I handle disagreements in reviews?

Focus on the shared goal: code quality. Back your opinions with documentation or metrics.

The post Code Review Best Practices for Automation Testing appeared first on Codoid.
