Ensuring high-quality software requires strong testing processes, and test automation is a central part of them. Automation test coverage metrics show how much of an application's testing is handled by automated tests. This measurement matters to any development team that relies on test automation. When teams measure and analyze automation test coverage, they learn how well their testing efforts are working, which helps them make informed choices that improve software quality.

Understanding Automation Test Coverage

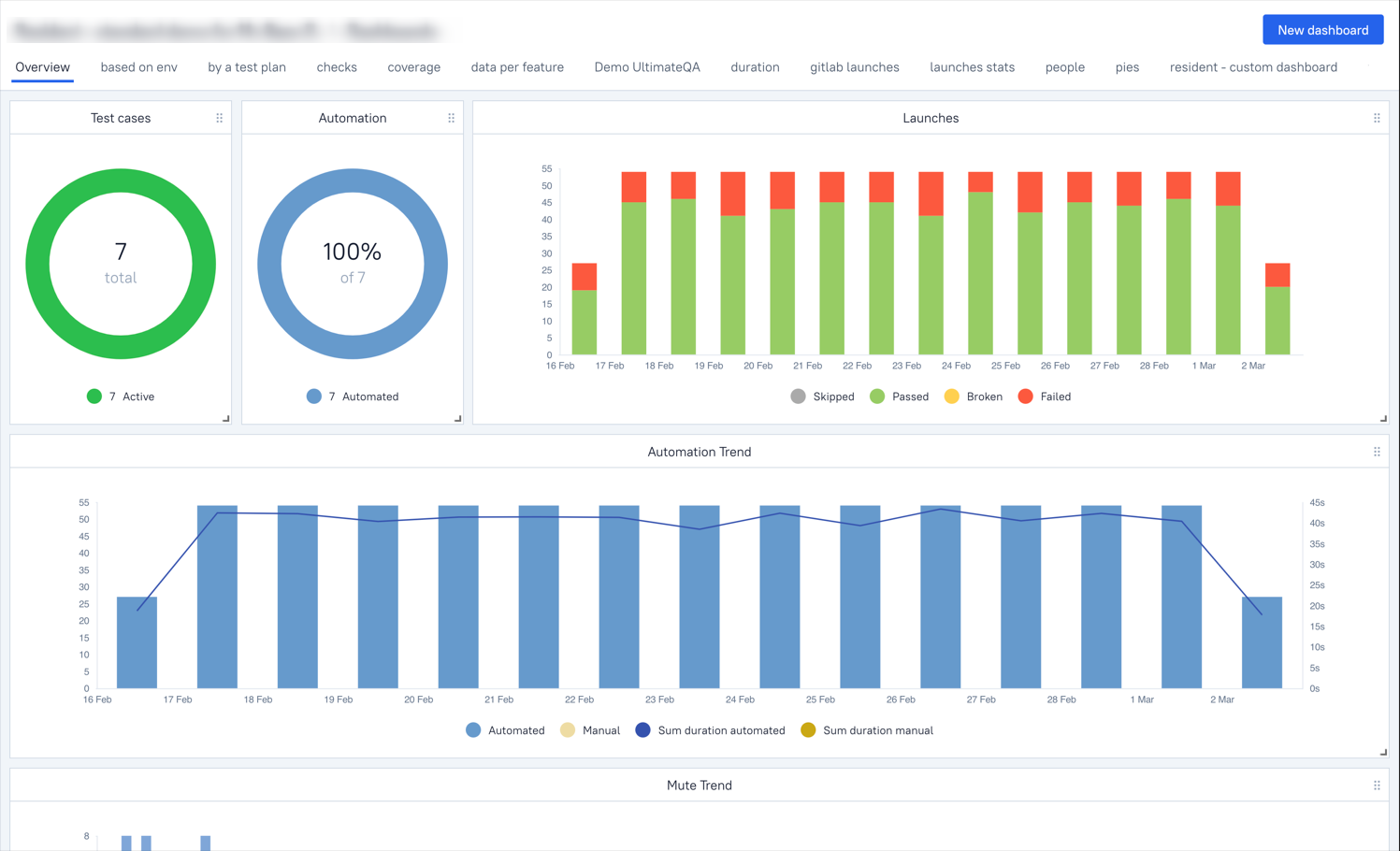

Automation test coverage shows the percentage of a software application’s code that is exercised by automated tests. It gives a clear idea of how well these tests check the software’s functionality, performance, and reliability. High automation test coverage is important because it helps cut testing time and costs, which supports a stable, high-quality product.

Still, it’s key to remember that automation test coverage alone does not define software quality. While having high coverage is good, it’s vital not to sacrifice test quality. You need a well-designed and meaningful test suite of automated tests that focus on the important parts of the application.

Key Metrics to Measure Automation Test Coverage

Measuring automation test coverage is very important for making sure your testing efforts are effective. These metrics give you useful information about how complete your automated tests are. They also help you find areas that need improvement. By watching and analyzing these metrics closely, QA teams can improve their automation strategies. This leads to higher test coverage and better software quality.

1. Automatable Test Cases

This metric measures the percentage of test cases that can be automated relative to the total number of test cases in a suite. It plays a crucial role in prioritizing automation efforts and identifying scenarios that require manual testing due to complexity. By understanding the proportion of automatable test cases, teams can create a balanced testing strategy that integrates both manual and automated testing. It also helps in recognizing test cases that are not suitable for automation, which improves resource allocation. Some scenarios, such as visual testing, CAPTCHA validation, complex hardware interactions, and dynamically changing UI elements, may be difficult or impractical to automate and need manual intervention to ensure comprehensive test coverage.

The formula to calculate test automation coverage for automatable test cases is:

Automatable Test Cases (%) = (Automatable Test Cases ÷ Total Test Cases) × 100

For example, if a project consists of 600 test cases, out of which 400 can be automated, the automatable test case coverage would be 66.67%.
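The calculation above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the `CaseRecord` type, its `automatable` flag, and the function name are hypothetical, and real suites would usually pull this flag from a test management tool.

```python
from dataclasses import dataclass


@dataclass
class CaseRecord:
    """Hypothetical record for one test case in the suite."""
    name: str
    automatable: bool


def automatable_percentage(cases: list[CaseRecord]) -> float:
    """Return automatable test cases as a percentage of all test cases."""
    if not cases:
        raise ValueError("the suite has no test cases")
    automatable = sum(1 for case in cases if case.automatable)
    return automatable * 100 / len(cases)


# 600 test cases, the first 400 of which are marked automatable,
# matching the worked example in the text.
suite = [CaseRecord(f"tc-{i}", automatable=i < 400) for i in range(600)]
print(f"{automatable_percentage(suite):.2f}%")  # prints "66.67%"
```

Keeping the flag on each test case, rather than storing only the two totals, makes it easy to list the cases that still need manual testing.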

A best practice for maximizing automation effectiveness is to prioritize test cases that are repetitive, time-consuming, and have a high business impact. By focusing on these, teams can enhance efficiency and ensure that automation efforts yield the best possible return on investment.

2. Automation Pass Rate

Automation pass rate measures the percentage of automated test cases that pass during execution. It is a straightforward metric for assessing the reliability and stability of automated test scripts. A consistently high failure rate may indicate flaky tests, unstable automation logic, or environmental issues. Analyzing the failures behind this metric also helps distinguish whether they are caused by application defects or by problems within the test scripts themselves.

The formula to calculate automation pass rate is:

Automation Pass Rate (%) = (Passed Test Cases ÷ Executed Test Cases) × 100

For example, if a testing team executes 500 automated test cases and 450 of them pass successfully, the automation pass rate is:

(450 ÷ 500) × 100 = 90%

This means 90% of the automated tests ran successfully, while the remaining 10% either failed or were inconclusive. A low pass rate could indicate issues with automation scripts, environmental instability, or application defects that require further investigation.
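As a rough sketch, the pass rate can be derived from a list of per-test statuses. The status strings and the 40/10 split between failed and inconclusive tests below are assumptions made for illustration; the text only states that the remaining 10% failed or were inconclusive.

```python
from collections import Counter


def pass_rate(statuses: list[str]) -> float:
    """Return passed tests as a percentage of executed tests."""
    executed = len(statuses)
    if executed == 0:
        raise ValueError("no tests were executed")
    return Counter(statuses)["passed"] * 100 / executed


# 500 executed tests, 450 passed. The split of the remaining 50 is hypothetical.
results = ["passed"] * 450 + ["failed"] * 40 + ["inconclusive"] * 10
print(pass_rate(results))  # prints 90.0
print(Counter(results))    # per-status breakdown for further investigation
```

The per-status breakdown is the starting point for deciding whether failures come from scripts, the environment, or the application.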

A best practice to improve this metric is to analyze frequent failures and determine whether they stem from script issues, test environment instability, or genuine defects in the application.

3. Automation Execution Time

Automation execution time measures the total duration required for automated test cases to run from start to finish. This metric is crucial in evaluating whether automation provides a time advantage over manual testing. Long execution times can delay deployments and impact release schedules, making it essential to optimize test execution for efficiency. By analyzing automation execution time, teams can identify areas for improvement, such as implementing parallel execution or optimizing test scripts.

One way to improve automation execution time and increase test automation ROI is by using parallel execution, which allows multiple tests to run simultaneously, significantly reducing the total test duration. Additionally, optimizing test scripts by removing redundant steps and leveraging cloud-based test grids to execute tests on multiple devices and browsers can further enhance efficiency.

For example, if the original automation execution time is 4 hours and parallel testing reduces it to 1.5 hours, it demonstrates a significant improvement in test efficiency.
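To put a number on that improvement, one option is to report both the percentage reduction and the speed-up factor. The helper below is a small sketch with a hypothetical name; it only does the arithmetic and does not run tests in parallel itself.

```python
def time_reduction(before_hours: float, after_hours: float) -> tuple[float, float]:
    """Return (percentage reduction, speed-up factor) for a test run."""
    if before_hours <= 0 or after_hours <= 0:
        raise ValueError("durations must be positive")
    reduction = (before_hours - after_hours) * 100 / before_hours
    speedup = before_hours / after_hours
    return reduction, speedup


# 4 hours sequentially versus 1.5 hours with parallel execution.
reduction, speedup = time_reduction(4.0, 1.5)
print(f"{reduction:.1f}% shorter, {speedup:.2f}x faster")
# prints "62.5% shorter, 2.67x faster"
```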

A best practice is to aim for an execution time that aligns with sprint cycles, ensuring that testing does not delay releases. By continuously refining automation strategies, teams can maximize the benefits of test automation while maintaining rapid and reliable software delivery.

4. Code Coverage Metrics

Code coverage measures how much of the application’s codebase is tested through automation.

Key Code Coverage Metrics:

- Statement Coverage: Measures executed statements in the source code.

- Branch Coverage: Ensures all decision branches (if-else conditions) are tested.

- Function Coverage: Determines how many functions or methods are tested.

- Line Coverage: Ensures each line of code runs at least once.

- Path Coverage: Verifies different execution paths are tested.

Code Coverage (%) = (Covered Code Lines ÷ Total Code Lines) × 100

For example, if a project has 5,000 lines of code and tests execute 4,000 of them, the coverage is 80%.

Best Practice: Aim for 80%+ code coverage, but complement it with exploratory and usability testing.
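The difference between statement coverage and branch coverage is easiest to see on a tiny function. The `shipping_fee` function below is a hypothetical example, and the coverage figures in the comments are reasoned by hand rather than produced by a coverage tool.

```python
def shipping_fee(is_member: bool) -> float:
    """Return the shipping fee; members ship for free."""
    fee = 5.0
    if is_member:  # one decision with two outcomes: True and False
        fee = 0.0
    return fee


# This single check runs every statement in shipping_fee, so statement
# coverage is 100%. The False outcome of the `if` is never taken, so only
# 1 of 2 branch outcomes is covered.
assert shipping_fee(is_member=True) == 0.0

# Adding the second case exercises the remaining branch outcome.
assert shipping_fee(is_member=False) == 5.0
```

This is one reason a high line or statement percentage on its own can hide untested behavior.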

5. Requirement Coverage

Requirement coverage ensures that automation tests align with business requirements and user stories, helping teams validate that all critical functionalities are tested. This metric is essential for assessing how well automated tests support business needs and whether any gaps exist in test coverage.

The formula to calculate requirement coverage is:

Requirement Coverage (%) = (Tested Requirements ÷ Total Number of Requirements) × 100

For example, if a project has 60 requirements and automation tests cover 50, the requirement coverage would be:

(50 ÷ 60) × 100 = 83.3%

A best practice for improving requirement coverage is to use test case traceability matrices to map test cases to requirements. This ensures that all business-critical functionalities are adequately tested and reduces the risk of missing key features during automation testing.
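A traceability matrix can be as simple as a mapping from each requirement to the tests that cover it. The sketch below assumes that shape; the requirement IDs and test names are invented for illustration.

```python
def requirement_coverage(matrix: dict[str, list[str]]) -> tuple[float, list[str]]:
    """Return (coverage percentage, requirements with no mapped tests)."""
    if not matrix:
        raise ValueError("the traceability matrix is empty")
    uncovered = sorted(req for req, tests in matrix.items() if not tests)
    covered = len(matrix) - len(uncovered)
    return covered * 100 / len(matrix), uncovered


# Hypothetical matrix: requirement -> automated tests mapped to it.
matrix = {
    "REQ-1 login": ["test_login_ok", "test_login_bad_password"],
    "REQ-2 checkout": ["test_checkout_card"],
    "REQ-3 export report": [],
}
pct, gaps = requirement_coverage(matrix)
print(f"{pct:.1f}% covered; untested: {gaps}")
# prints "66.7% covered; untested: ['REQ-3 export report']"
```

Returning the list of uncovered requirements alongside the percentage shows where the gaps are, not just how large they are.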

6. Test Execution Coverage Across Environments

This metric tracks whether automated tests run across different browsers, devices, and operating system configurations. It plays a critical role in validating application stability across platforms and identifying cross-browser and cross-device compatibility issues. By tracking test execution coverage with a test management tool, teams can optimize their cloud-based test execution strategies and ensure a seamless user experience across various environments.

The formula to calculate test execution coverage is:

Test Execution Coverage (%) = (Tests Run Across Different Environments ÷ Total Test Scenarios) × 100

For example, if a project runs 100 tests on Chrome, Firefox, and Edge but only 80 on Safari, then Safari’s execution coverage would be:

(80 ÷ 100) × 100 = 80%
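The same calculation can be applied per environment to spot the weakest platform. This is an illustrative sketch; the function name and the dictionary layout are assumptions, and the counts come from the example above.

```python
def execution_coverage(tests_run: dict[str, int], total_scenarios: int) -> dict[str, float]:
    """Return execution coverage (%) for each environment."""
    if total_scenarios <= 0:
        raise ValueError("total_scenarios must be positive")
    return {env: run * 100 / total_scenarios for env, run in tests_run.items()}


# Tests executed per browser out of 100 scenarios.
runs = {"Chrome": 100, "Firefox": 100, "Edge": 100, "Safari": 80}
coverage = execution_coverage(runs, total_scenarios=100)
print(coverage["Safari"])               # prints 80.0
print(min(coverage, key=coverage.get))  # prints "Safari"
```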

A best practice to improve execution coverage is to leverage cloud-based testing platforms like BrowserStack, Sauce Labs, or LambdaTest. These tools enable teams to efficiently run tests across multiple devices and browsers, ensuring broader coverage and faster execution.

7. Return on Investment (ROI) of Test Automation

The ROI of test automation helps assess the overall value gained from investing in automation compared to manual testing. This metric is crucial for justifying the cost of automation tools and resources, measuring cost savings and efficiency improvements, and guiding future automation investment decisions.

The formula to calculate automation ROI is:

Automation ROI (%) = [(Manual Effort Savings – Automation Cost) ÷ Automation Cost] × 100

For example, if automation saves $50,000 in manual effort and costs $20,000 to implement, the ROI would be:

[(50,000 – 20,000) ÷ 20,000] × 100 = 150%
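A minimal sketch of the ROI formula in Python follows. The function and parameter names are hypothetical, and both amounts are assumed to be in the same currency and to cover the same period.

```python
def automation_roi(manual_effort_savings: float, automation_cost: float) -> float:
    """Return automation ROI as a percentage of the automation cost."""
    if automation_cost <= 0:
        raise ValueError("automation_cost must be positive")
    return (manual_effort_savings - automation_cost) * 100 / automation_cost


print(automation_roi(50_000, 20_000))  # prints 150.0
```

A negative result means the automation has so far cost more than the manual effort it saved.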

A best practice is to continuously evaluate ROI to refine the automation strategy and maximize cost efficiency. By regularly assessing returns, teams can ensure that automation efforts remain both effective and financially viable.

Conclusion

In conclusion, automation test coverage metrics are important for making sure products are good quality and work well in today’s QA practices. By looking at key metrics, such as the share of automatable test cases, pass rate, execution time, and code and requirement coverage, teams can improve how they test and spot issues in automation scripts. This helps boost overall coverage. Using smart methods, like prioritizing test cases based on risk and applying continuous integration and deployment, can increase automation coverage. Regularly checking and acting on automation test coverage metrics is necessary for improving quality assurance processes. Codoid, a leading software testing company, helps businesses improve automation coverage with expert solutions in Selenium, Playwright, and AI-driven testing. Their services optimize test execution, reduce maintenance efforts, and ensure high-quality software.

Frequently Asked Questions

- What is the ideal percentage for automation test coverage?

There isn’t a perfect percentage that works for every situation. The best level of automation test coverage depends on the project’s complexity, how much risk you can tolerate, and how efficient you want your tests to be. Still, aiming for 80% or more is usually seen as a good goal for quality assurance.

- How often should test coverage metrics be reviewed?

You should review test coverage metrics often. This is an important part of the quality assurance and test management process, and it keeps team members aware of progress. Ideally, monitor the metrics continuously, and also hold more formal reviews at the end of each sprint or development cycle so the team can make adjustments and improvements.

- Can automation test coverage improve manual testing processes?

Yes, automation test coverage can help improve manual testing processes. When we automate critical tasks that happen over and over, it allows testers to spend more time on exploratory testing and handling edge cases. This can lead to better testing processes, greater efficiency, and higher overall quality.

The post Automation Test Coverage Metrics for QA and Product Managers appeared first on Codoid.
