How many times have you spent months evaluating automation projects – enduring multiple vendor assessments, navigating lengthy RFPs, and managing complex procurement cycles – only to face underwhelming results or outright failure? You’re not alone.

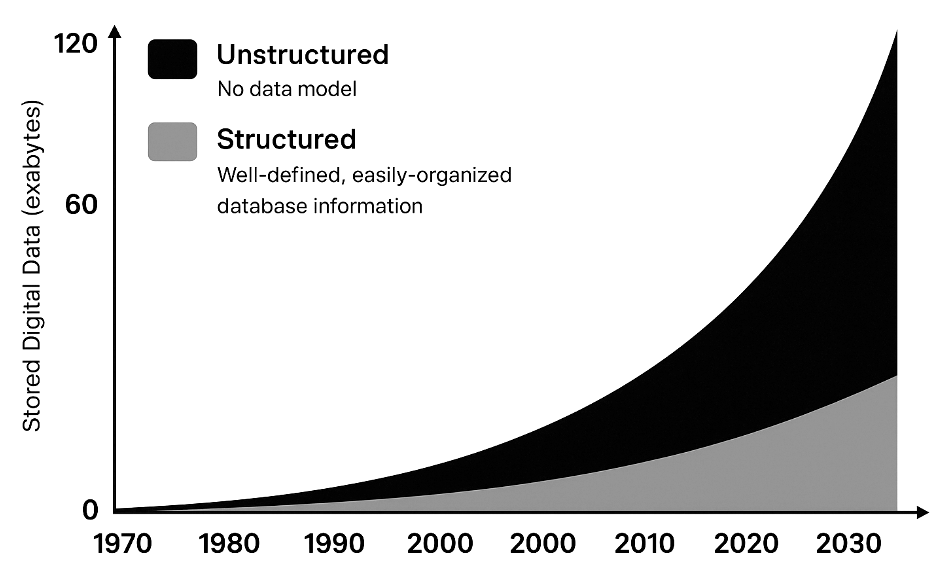

Many enterprises struggle to scale automation, not due to a lack of tools, but because their data isn’t ready. In theory, AI agents and RPA bots could handle countless tasks; in practice, they fail when fed messy or unstructured inputs. Studies show that 80%-90% of all enterprise data is unstructured – think of emails, PDFs, invoices, images, audio, etc. This pervasive unstructured data is the real bottleneck. No matter how advanced your automation platform, it can’t reliably process what it cannot properly read or understand. In short, low automation levels are usually a data problem, not a tool problem.

Why Agents and RPA Require Structured Data

Automation tools like Robotic Process Automation (RPA) excel with structured, predictable data – neatly arranged in databases, spreadsheets, or standardized forms. They falter with unstructured inputs. A typical RPA bot is essentially a rules-based engine (“digital worker”) that follows explicit instructions. If the input is a scanned document or a free-form text field, the bot doesn’t inherently know how to interpret it. RPA is unable to directly manage unstructured datasets; the data must first be converted into structured form using additional methods. In other words, an RPA bot needs a clean table of data, not a pile of documents.

“RPA is most effective when processes involve structured, predictable data. In practice, many business documents such as invoices are unstructured or semi-structured, making automated processing difficult”. Unstructured data now accounts for ~80% of enterprise data, underscoring why many RPA initiatives stall.

The same holds true for AI agents and workflow automation: they only perform as well as the data they receive. If an AI customer service agent is drawing answers from disorganized logs and unlabeled files, it will likely give wrong answers. The foundation of any successful automation or AI agent is “AI-ready” data that is clean, well-organized, and preferably structured. This is why organizations that invest heavily in tools but neglect data preparation often see disappointing automation ROI.

Challenges with Traditional Data Structuring Methods

If unstructured data is the issue, why not just convert it to structured form? This is easier said than done. Traditional methods to structure data like OCR, ICR, and ETL have significant challenges:

- OCR and ICR: OCR and ICR have long been used to digitize documents, but they crumble in real-world scenarios. Classic OCR is just pattern-matching, it struggles with varied fonts, layouts, tables, images, or signatures. Even top engines hit only 80 – 90% accuracy on semi-structured docs, creating 1,000 – 2,000 errors per 10,000 documents and forcing manual review on 60%+ of files. Handwriting makes it worse, ICR barely manages 65 – 75% accuracy on cursive. Most systems are also template-based, demanding endless rule updates for every new invoice or form format.OCR/ICR can pull text, but it can’t understand context or structure at scale, making them unreliable for enterprise automation.

- Conventional ETL Pipelines: ETL works great for structured databases but falls apart with unstructured data. No fixed schema, high variability, and messy inputs mean traditional ETL tools need heavy custom scripting to parse natural language or images. The result? Errors, duplicates, and inconsistencies pile up, forcing data engineers to spend 80% of their time cleaning and prepping data—leaving only 20% for actual analysis or AI modeling. ETL was built for rows and columns, not for today’s messy, unstructured data lakes—slowing automation and AI adoption significantly.

- Rule-Based Approaches: Older automation solutions often tried to handle unstructured info with brute-force rules, e.g. using regex patterns to find key terms in text, or setting up decision rules for certain document layouts. These approaches are extremely brittle. The moment the input varies from what was expected, the rules fail. As a result, companies end up with fragile pipelines that break whenever a vendor changes an invoice format or a new text pattern appears. Maintenance of these rule systems becomes a heavy burden.

All these factors contribute to why so many organizations still rely on armies of data entry staff or manual review. McKinsey observes that current document extraction tools are often “cumbersome to set up” and fail to yield high accuracy over time, forcing companies to invest heavily in manual exception handling. In other words, despite using OCR or ETL, you end up with people in the loop to fix all the things the automation couldn’t figure out. This not only cuts into the efficiency gains but also dampens employee enthusiasm (since workers are stuck correcting machine errors or doing low-value data clean-up). It’s a frustrating status quo: automation tech exists, but without clean, structured data, its potential is never realized.

Foundational LLMs Are Not a Silver Bullet for Unstructured Data

With the rise of large language models, one might hope that they could simply “read” all the unstructured data and magically output structured info. Indeed, modern foundation models (like GPT-4) are very good at understanding language and even interpreting images. However, general-purpose LLMs are not purpose-built to solve the enterprise unstructured data problem of scale, accuracy, and integration. There are several reasons for this:

- Scale Limitations: Out-of-the-box LLMs cannot ingest millions of documents or entire data lakes in one go. Enterprise data often spans terabytes, far beyond an LLM’s capacity at any given time. Chunking the data into smaller pieces helps, but then the model loses the “big picture” and can easily mix up or miss details. LLMs are also relatively slow and computationally expensive for processing very large volumes of text. Using them naively to parse every document can become cost-prohibitive and latency-prone.

- Lack of Reliability and Structure: LLMs generate outputs probabilistically, which means they may “hallucinate” information or fill in gaps with plausible-sounding but incorrect data. For critical fields (like an invoice total or a date), you need 100% precision, a made-up value is unacceptable. Foundational LLMs don’t guarantee consistent, structured output unless heavily constrained. They don’t inherently know which parts of a document are important or correspond to which field labels (unless trained or prompted in a very specific way). As one research study noted, “sole reliance on LLMs is not viable for many RPA use cases” because they are expensive to train, require lots of data, and are prone to errors/hallucinations without human oversight. In essence, a chatty general AI might summarize an email for you, but trusting it to extract every invoice line item with perfect accuracy, every time, is risky.

- Not Trained on Your Data: By default, foundation models learn from internet-scale text (books, web pages, etc.), not from your company’s proprietary forms and vocabulary. They may not understand specific jargon on a form, or the layout conventions of your industry’s documents. Fine-tuning them on your data is possible but costly and complex, and even then, they remain generalists, not specialists in document processing. As a Forbes Tech Council insight put it, an LLM on its own “doesn’t know your company’s data” and lacks the context of internal records. You often need additional systems (like retrieval-augmented generation, knowledge graphs, etc.) to ground the LLM in your actual data, effectively adding back a structured layer.

In summary, foundation models are powerful, but they are not a plug-and-play solution for parsing all enterprise unstructured data into neat rows and columns. They augment but do not replace the need for intelligent data pipelines. Gartner analysts have also cautioned that many organizations aren’t even ready to leverage GenAI on their unstructured data due to governance and quality issues, using LLMs without fixing the underlying data is putting the cart before the horse.

Structuring Unstructured Data, Why Purpose-Built Models are the answer

Today, Gartner and other leading analysts indicate a clear shift: traditional IDP, OCR, and ICR solutions are becoming obsolete, replaced by advanced large language models (LLMs) that are fine-tuned specifically for data extraction tasks. Unlike their predecessors, these purpose-built LLMs excel at interpreting the context of varied and complex documents without the constraints of static templates or limited pattern matching.

Fine-tuned, data-extraction-focused LLMs leverage deep learning to understand document context, recognize subtle variations in structure, and consistently output high-quality, structured data. They can classify documents, extract specific fields—such as contract numbers, customer names, policy details, dates, and transaction amounts—and validate extracted data with high accuracy, even from handwriting, low-quality scans, or unfamiliar layouts. Crucially, these models continually learn and improve through processing more examples, significantly reducing the need for ongoing human intervention.

McKinsey notes that organizations adopting these LLM-driven solutions see substantial improvements in accuracy, scalability, and operational efficiency compared to traditional OCR/ICR methods. By integrating seamlessly into enterprise workflows, these advanced LLM-based extraction systems allow RPA bots, AI agents, and automation pipelines to function effectively on the previously inaccessible 80% of unstructured enterprise data.

As a result, industry leaders emphasize that enterprises must pivot toward fine-tuned, extraction-optimized LLMs as a central pillar of their data strategy. Treating unstructured data with the same rigor as structured data through these advanced models unlocks significant value, finally enabling true end-to-end automation and realizing the full potential of GenAI technologies.

Real-World Examples: Enterprises Tackling Unstructured Data with Nanonets

How are leading enterprises solving their unstructured data challenges today? A number of forward-thinking companies have deployed AI-driven document processing platforms like Nanonets to great success. These examples illustrate that with the right tools (and data mindset), even legacy, paper-heavy processes can become streamlined and autonomous:

- Asian Paints (Manufacturing): One of the largest paint companies in the world, Asian Paints dealt with thousands of vendor invoices and purchase orders. They used Nanonets to automate their invoice processing workflow, achieving a 90% reduction in processing time for Accounts Payable. This translated to freeing up about 192 hours of manual work per month for their finance team. The AI model extracts all key fields from invoices and integrates with their ERP, so staff no longer spend time typing in details or correcting errors.

- JTI (Japan Tobacco International) – Ukraine operations: JTI’s regional team faced a very long tax refund claim process that involved shuffling large amounts of paperwork between departments and government portals. After implementing Nanonets, they brought the turnaround time down from 24 weeks to just 1 week, a 96% improvement in efficiency. What used to be a multi-month ordeal of data entry and verification became a largely automated pipeline, dramatically speeding up cash flow from tax refunds.

- Suzano (Pulp & Paper Industry): Suzano, a global pulp and paper producer, processes purchase orders from various international clients. By integrating Nanonets into their order management, they reduced the time taken per purchase order from about 8 minutes to 48 seconds, roughly a 90% time reduction in handling each order. This was achieved by automatically reading incoming purchase documents (which arrive in different formats) and populating their system with the needed data. The result is faster order fulfillment and less manual workload.

- SaltPay (Fintech): SaltPay needed to manage a vast network of 100,000+ vendors, each submitting invoices in different formats. Nanonets allowed SaltPay to simplify vendor invoice management, reportedly saving 99% of the time previously spent on this process. What was once an overwhelming, error-prone task is now handled by AI with minimal oversight.

These cases underscore a common theme: organizations that leverage AI-driven data extraction can supercharge their automation efforts. They not only save time and labor costs but also improve accuracy (e.g. one case noted 99% accuracy achieved in data extraction) and scalability. Employees can be redeployed to more strategic work instead of typing or verifying data all day. The technology (tools) wasn’t the differentiator here, the key was getting the data pipeline in order with the help of specialized AI models. Once the data became accessible and clean, the existing automation tools (workflows, RPA bots, analytics, etc.) could finally deliver full value.

Clean Data Pipelines: The Foundation of the Autonomous Enterprise

In the pursuit of a “truly autonomous enterprise”, where processes run with minimal human intervention – having a clean, well-structured data pipeline is absolutely critical. A “truly autonomous enterprise” doesn’t just need better tools—it needs better data. Automation and AI are only as good as the information they consume, and when that fuel is messy or unstructured, the engine sputters. Garbage in, garbage out is the single biggest reason automation projects underdeliver.

Forward-thinking leaders now treat data readiness as a prerequisite, not an afterthought. Many enterprises spend 2 – 3 months upfront cleaning and organizing data before AI projects because skipping this step leads to poor outcomes. A clean data pipeline—where raw inputs like documents, sensor feeds, and customer queries are systematically collected, cleansed, and transformed into a single source of truth—is the foundation that allows automation to scale seamlessly. Once this is in place, new use cases can plug into existing data streams without reinventing the wheel.

In contrast, organizations with siloed, inconsistent data remain trapped in partial automation, constantly relying on humans to patch gaps and fix errors. True autonomy requires clean, consistent, and accessible data across the enterprise—much like self-driving cars need proper roads before they can operate at scale.

The takeaway: The tools for automation are more powerful than ever, but it’s the data that determines success. AI and RPA don’t fail due to lack of capability; they fail due to lack of clean, structured data. Solve that, and the path to the autonomous enterprise—and the next wave of productivity—opens up.

Sources:

- https://medium.com/@sanjeeva.bora/the-definitive-guide-to-ocr-accuracy-benchmarks-and-best-practices-for-2025-8116609655da

- https://research.aimultiple.com/ocr-accuracy/

- https://primerecognition.com/ocr-software-accuracy-comparison/

- https://en.wikipedia.org/wiki/Optical_character_recognition

- https://www.mckinsey.com/capabilities/operations/our-insights/fueling-digital-operations-with-analog-data

- https://www.gartner.com/en/articles/data-centric-approach-to-ai

- https://www.bizdata360.com/intelligent-document-processing-idp-ultimate-guide-2025/

- https://benelux.nttdata.com/insights/blog/what-is-unstructured-data

- https://www.sealevel.com/what-is-dark-data-the-upside-down-of-the-data-revolution

- https://www.mckinsey.com/~/media/mckinsey/business%20functions/operations/our%20insights/digital%20service%20excellence/digital-service-excellence–scaling-the-next-generation-operating-model.pdf

- https://indicodata.ai/blog/gartner-report-highlights-the-power-of-unstructured-data-analytics-indico-blog

Source: Read MoreÂ