Top News

Google DeepMind’s new AI models help robots perform physical tasks, even without training

Google DeepMind is introducing two new AI models, Gemini Robotics and Gemini Robotics-ER, aimed at improving how robots perform real-world tasks. Gemini Robotics, built on Google’s flagship AI model Gemini 2.0, is a vision-language-action model that can understand and adapt to new situations, even ones it has not been trained on. It improves robots’ generality, interactivity, and dexterity, enabling them to perform precise physical tasks and interact better with their environment. Gemini Robotics-ER is an advanced vision-language model that helps robots understand complex and dynamic environments, aiding them in tasks like packing a lunchbox. Google DeepMind is also developing a layered approach to safety, training the Gemini Robotics-ER models to evaluate the safety of potential actions in given scenarios.

Google calls Gemma 3 the most powerful AI model you can run on one GPU

Google has announced the release of Gemma 3, an updated version of its open AI models, which it claims is the “world’s best single-accelerator model”. The model is designed for developers creating AI applications that can run on various platforms, from phones to workstations, and supports over 35 languages. It can analyze text, images, and short videos, and has been optimized for running on Nvidia’s GPUs and dedicated AI hardware. The company continues to promote Gemma with Google Cloud credits and the Gemma 3 Academic program, which offers academic researchers $10,000 worth of credits to accelerate their research.

Inside Google’s Investment in the A.I. Start-Up Anthropic

Google has a 14% stake in the AI start-up Anthropic, as revealed by court documents obtained by The New York Times. Despite this significant investment, Google has no control over the company, holding no voting rights, board seats, or observer rights. Separately, Google is set to invest an additional $750 million in Anthropic in September through convertible debt, a type of loan that can be converted into equity.

Sesame, the startup behind the viral virtual assistant Maya, releases its base AI model

AI startup Sesame has made its base model, CSM-1B, publicly available under an Apache 2.0 license. The model, which underpins the company’s viral voice assistant Maya, has 1 billion parameters and generates “RVQ audio codes” from text and audio inputs. RVQ, or residual vector quantization, is a method of encoding audio into discrete tokens, a technique also used in Google’s SoundStream and Meta’s Encodec. While the model can produce a variety of voices, it has not been fine-tuned on any specific voice or for non-English languages. The company has urged developers not to misuse the model for voice mimicry without consent, misleading content, or other harmful activities, but it has no real safeguards in place to prevent such misuse.
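
To make the RVQ idea above concrete, here is a minimal toy sketch of residual vector quantization in plain Python. It is not Sesame’s code or the codec CSM-1B uses: the codebooks, the sample vector, and the function names (`rvq_encode`, `rvq_decode`) are hypothetical and chosen only for illustration. Real audio codecs learn their codebooks and quantize encoder outputs rather than hand-picked 2-D vectors.

```python
from typing import List, Sequence

Vector = Sequence[float]
Codebook = Sequence[Vector]


def nearest_index(codebook: Codebook, v: Vector) -> int:
    """Return the index of the codeword closest to v (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(codebook[i], v)),
    )


def rvq_encode(v: Vector, codebooks: Sequence[Codebook]) -> List[int]:
    """Quantize v in stages; each stage encodes what the previous stages missed."""
    residual = list(v)
    codes = []
    for codebook in codebooks:
        idx = nearest_index(codebook, residual)
        codes.append(idx)
        # Subtract the chosen codeword so the next stage sees only the leftover error.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes: Sequence[int], codebooks: Sequence[Codebook]) -> List[float]:
    """Reconstruct the vector by summing the selected codeword from every stage."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Hypothetical hand-picked codebooks: a coarse stage followed by a finer one.
coarse = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0], [1.0, -1.0]]
fine = [[0.0, 0.0], [0.25, 0.0], [0.0, 0.25], [-0.25, -0.25]]
codebooks = [coarse, fine]

frame = [1.2, 0.9]  # stand-in for one frame of audio features
codes = rvq_encode(frame, codebooks)
reconstruction = rvq_decode(codes, codebooks)
print(codes, reconstruction)
```

With these toy values the frame is encoded as the discrete codes `[1, 1]` and decoded to `[1.25, 1.0]`. The second stage reduces the reconstruction error left by the first, which is why stacking several small codebooks can represent a signal more precisely than a single one of the same size.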

Other News

Tools

You can now test Gemini 2.0 Flash’s native image output – Gemini 2.0 Flash now offers wider access to its native image output feature, enabling conversational image editing and multimodal capabilities for developers and users through Google AI Studio and the Gemini API.

OpenAI launches new tools to help developers build AI agents – OpenAI’s new Responses API and Agents SDK provide developers with foundational tools to create AI agents capable of web searching, file analysis, and computer task automation, enhancing the ability to build complex, industry-specific solutions.

Allen Institute for AI (AI2) Releases OLMo 32B: A Fully Open Model to Beat GPT 3.5 and GPT-4o mini on a Suite of Multi-Skill Benchmarks – OLMo 2 32B, released by the Allen Institute for AI, is a fully open model that surpasses GPT-3.5 Turbo and GPT-4o mini in multi-skill benchmarks while promoting accessibility and collaboration in AI research.

Reka AI Open Sourced Reka Flash 3: A 21B General-Purpose Reasoning Model that was Trained from Scratch – Reka Flash 3 is a versatile and resource-efficient AI model designed for general-purpose reasoning, offering features like a 32k token context window and a budget forcing mechanism, making it suitable for on-device deployments and low-latency applications.

Google is officially dumping Assistant for Gemini – Google is transitioning users from Google Assistant to Gemini, which will replace the classic Assistant on most devices and introduce new experiences across various platforms.

Alibaba launches new version of AI assistant tool as competition heats up – Alibaba’s new AI assistant app, powered by its Qwen AI reasoning model, aims to enhance its competitive edge in the global AI race, integrating advanced features like chatbots and task execution while planning significant investments in AI infrastructure.

Snap introduces AI Video Lenses powered by its in-house generative model – Snapchat is launching its first video generative AI Lenses, powered by its proprietary model, available exclusively to Snapchat Platinum subscribers, as part of its strategy to enhance user experience and maintain competitiveness in the AI and AR space.

Moonvalley releases a video generator it claims was trained on licensed content – Moonvalley’s new AI video generator, Marey, is designed to respect copyright laws by using only licensed data, offering nuanced control over video creation while providing legal safeguards for users and creators.

Adobe’s new AI feature lets you edit stock images on the fly – no Photoshop needed – Adobe’s new AI-powered “Customize” feature in Adobe Stock allows users to make quick edits and generate image variations directly on the platform, enhancing creative control without needing Photoshop.

Sudowrite Launches Muse AI Model That Can Generate Narrative-Driven Fiction – Sudowrite’s Muse AI model specializes in generating unique, narrative-driven fiction by avoiding clichés and offering high creativity, with users able to try it using free credits before opting for a subscription.

Business

Waymo is now offering 24/7 robotaxi rides in Silicon Valley – Waymo is expanding its robotaxi service in Silicon Valley to be available 24/7 for select customers, with plans to gradually increase access within a 27-square-mile area.

OpenAI to pay CoreWeave $11.9 billion over five years for AI data centers, services – OpenAI’s five-year, $11.9 billion deal with CoreWeave gives OpenAI a $350 million equity stake in the company, which is preparing for its Nasdaq debut and has rapidly expanded its data center operations with significant backing from Nvidia.

Meta is reportedly testing in-house chips for AI training – Meta is testing a custom AI training chip developed with TSMC to potentially reduce its dependency on Nvidia and cut capital expenditure costs.

Superintelligence startup Reflection AI launches with $130M in funding – Reflection AI Inc., a new startup led by former Google DeepMind researchers, has launched with $130 million in early-stage funding raised over two rounds. The first, a $25 million seed investment, was led by Sequoia Capital and CRV.

Insilico Medicine scores $110M for AI-enabled drug discovery – Insilico Medicine plans to use the $110 million from its Series E funding to enhance its AI-driven drug discovery platform, expand its drug pipeline, and foster industry collaborations.

Cartesia Raises $64M to Advance Real-Time Voice AI with Sonic 2.0 – Cartesia’s $64 million funding will enhance its Sonic 2.0 voice AI model, known for its low latency and advanced voice cloning, to improve real-time applications and expand its market presence.

AI agent Manus partners with Alibaba’s Qwen to develop Chinese version – Manus is collaborating with Alibaba’s Qwen team to adapt its AI agent for Chinese users by ensuring compatibility with domestic models and computing platforms.

Sony is experimenting with AI-powered PlayStation characters – Sony is developing AI-powered versions of PlayStation characters, like Aloy from Horizon Forbidden West, using advanced AI technologies for speech and facial animation, sparking discussions about AI’s role in gaming.

Research

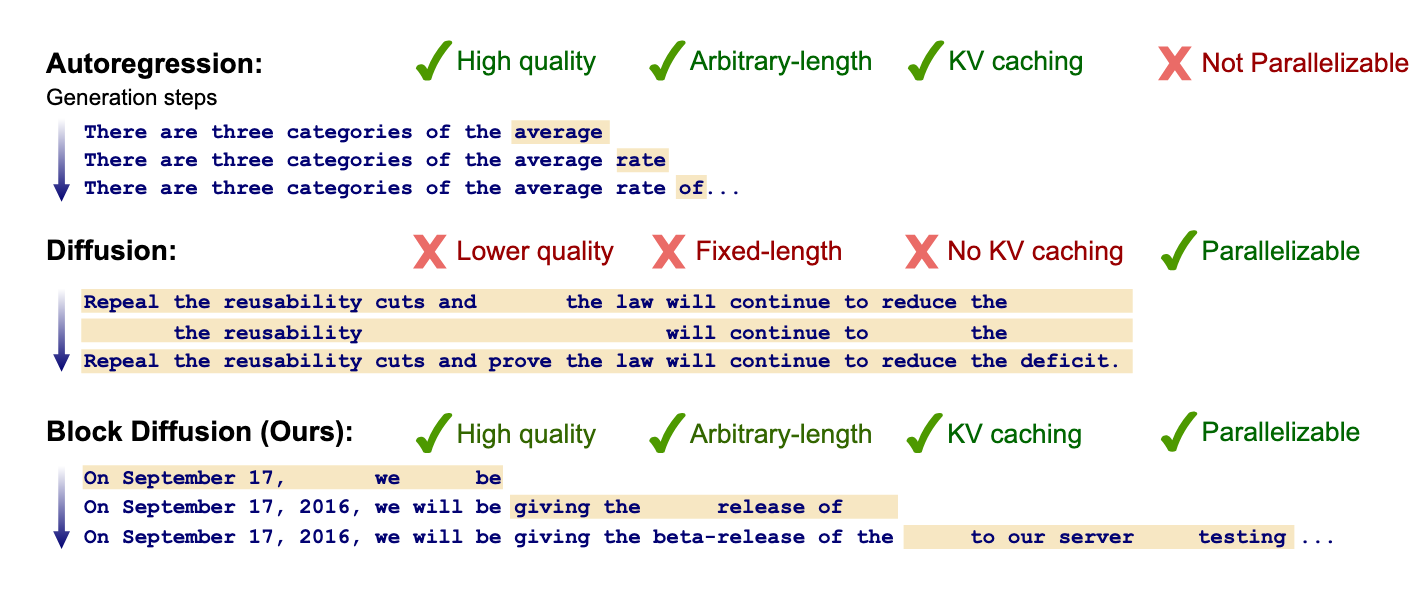

Block Diffusion: Interpolating Between Autoregressive and Diffusion Language Models – Block diffusion language models combine the strengths of discrete denoising diffusion and autoregressive models to enable flexible-length generation and improved inference efficiency, setting a new performance standard in language modeling benchmarks.

An Application of Reinforcement Learning with Verifiable Reward (RLVR) to an Omni-Multimodal Large Language Model – R1-Omni, developed by Alibaba researchers, uses Reinforcement Learning with Verifiable Reward to improve multimodal emotion recognition by integrating visual and auditory data, providing accurate predictions and clear reasoning explanations.

Inductive Moment Matching – Inductive Moment Matching (IMM) offers a stable, efficient alternative to diffusion models by enabling high-quality, few-step sampling without the need for pre-training or extensive tuning, achieving impressive results on ImageNet and CIFAR-10.

Transformers without Normalization – Dynamic Tanh (DyT) is introduced as a simple, efficient alternative to normalization layers in Transformers, offering stable training and high performance without the need for activation statistics.

START: Self-taught Reasoner with Tools – START, a novel tool-integrated reasoning model, enhances reasoning capabilities by leveraging external tools and a self-learning framework, achieving high accuracy on various benchmarks.
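
The Dynamic Tanh (DyT) item above describes replacing normalization layers with a simple element-wise operation. As a rough illustration, the sketch below assumes the form DyT(x) = γ · tanh(αx) + β, with a scalar α and per-channel γ and β that would be learned during training. The function name `dyt` and all sample values are hypothetical, and a real implementation would be a trainable layer in a deep learning framework rather than plain Python.

```python
import math
from typing import List, Sequence


def dyt(
    x: Sequence[float],
    alpha: float,
    gamma: Sequence[float],
    beta: Sequence[float],
) -> List[float]:
    """Apply gamma * tanh(alpha * x) + beta to each channel of x.

    Unlike layer normalization, no mean or variance of the activations
    is computed: every output depends only on its own input value.
    """
    if not (len(x) == len(gamma) == len(beta)):
        raise ValueError("x, gamma and beta must have the same length")
    return [g * math.tanh(alpha * xi) + b for xi, g, b in zip(x, gamma, beta)]


# Illustrative values only; in training, alpha, gamma and beta are learned.
activations = [-1000.0, -1.0, 0.0, 1.0, 1000.0]
gamma = [1.0] * len(activations)
beta = [0.0] * len(activations)

outputs = dyt(activations, alpha=0.5, gamma=gamma, beta=beta)
print(outputs)
```

The extreme inputs are squashed into the range [-1, 1] while values near zero pass through almost linearly, which is the behavior the approach relies on instead of computing activation statistics.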

Concerns

‘Open’ model licenses often carry concerning restrictions – Custom, non-standard licenses for AI models like Google’s Gemma 3 and Meta’s Llama create legal uncertainties and hinder commercial adoption, prompting calls for alignment with established open source principles.

Policy

Judge allows authors’ AI copyright lawsuit against Meta to move forward – A federal judge has allowed authors’ copyright infringement claims against Meta to proceed, while dismissing claims under the California Comprehensive Computer Data Access and Fraud Act.

Expert Opinions

On OpenAI’s Safety and Alignment Philosophy – The article critiques OpenAI’s safety and alignment strategies, challenging their assumptions about AI remaining a mere tool, economic normalcy, and the absence of abrupt phase changes, while emphasizing the need for coordination and scalable safety methods.
