As companies expand globally, they must be able to architect highly available and fault-tolerant systems across multiple AWS Regions. At that scale, designing a caching layer that spans the multi-Region infrastructure becomes one of the challenges they face.

In this post, we dive deep into how to use Amazon ElastiCache for Valkey, a fully managed in-memory data store with Redis OSS and Valkey compatibility, and the Amazon ElastiCache for Valkey Global Datastore feature set.

This solution provides application servers with a unified database caching layer and secure cross-Region replication. We discuss how it can solve the challenges of operating in multiple Regions while also enabling a disaster recovery strategy that meets strict business requirements.

ElastiCache Global Datastore

With ElastiCache Global Datastore, you can write to your ElastiCache cluster in one Region and have the data available to be read from two other cross-Region replica clusters, thereby enabling low-latency reads and disaster recovery across Regions.

We recently launched Amazon MemoryDB multi-Region capabilities. Amazon MemoryDB is a Valkey- and Redis OSS-compatible, durable, in-memory database service that delivers ultra-fast performance. MemoryDB multi-Region offers active-active replication so you can serve reads and writes locally from the Regions closest to your customers with microsecond read and single-digit millisecond write latency. In this post, we focus on the ElastiCache Global Datastore feature that implements an active-passive replication and is more suitable for use cases where you can perform writes in a single primary Region and eventually consistent reads in a secondary Region.

Evolution of a caching architecture

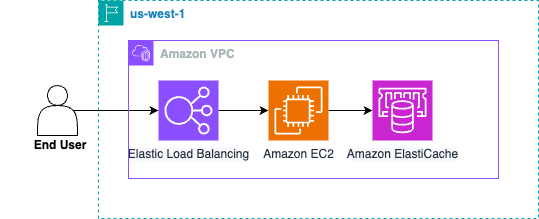

Our example starts with an application stack in a single Region (us-west-1) with a typical setup. The following diagram shows the original stack, consisting of Amazon Virtual Private Cloud (Amazon VPC), Application Load Balancer (ALB), target groups, Amazon Elastic Compute Cloud (Amazon EC2) instances, and ElastiCache for Valkey for user session management.

This setup works well, but let’s introduce new business and regulatory requirements. We have to make the infrastructure multi-Region for read capabilities in multiple Regions and disaster recovery capacity to minimize business impact during a single Region failure. The same infrastructure has to be duplicated to a second Region (us-west-2) where different users would connect. This is a fairly painless task and a great opportunity to implement AWS Global Accelerator to optimize connectivity to the ALBs in the two Regions. The following diagram shows the updated architecture with two Regions.

Global Accelerator automatically routes traffic to a healthy endpoint nearest to the user and is designed to load balance traffic. This means that we can’t deterministically route a given user to a specific destination behind the accelerator. For this reason, we want each user’s session to be replicated in both Regions so that either Region can handle that user’s requests.

Challenges of a cross-Region caching layer

We have to find a solution for this challenge or undo all of the new multi-Region configuration. We need a way to share the dataset across both Regions. In short, both us-west-1 and us-west-2 Regions have to accept logins and provide all users with their session data regardless of the location. This would be best accomplished with a unified dataset between us-west-1 and us-west-2.

Because we are already using ElastiCache, adopting the Global Datastore feature is the logical next step.

A complete multi-Region architecture

ElastiCache for Valkey Global Datastore provides fully managed, fast, reliable, and secure cross-Region replication.

Implementing Global Datastore automatically enables the application servers to read from ElastiCache regardless of the Region. Because Global Datastore provides failover capability between Regions, ElastiCache also meets our requirement for disaster recovery. The following diagram shows the updated architecture with two Regions using Global Datastore.

The cross-Region operations

For the two Regions to be fully operational, we have to implement a solution to enable write operations from all Regions to the Global Datastore primary cluster. To do so, we can configure both Regions using VPC peering, so the application servers running on Amazon EC2 in the secondary Global Datastore Region have the necessary cross-Region connectivity to access the cluster in the primary Region. This allows the application in the secondary Region to write in the primary cluster.

An alternative to VPC peering is AWS Transit Gateway. A VPC peering connection is a networking connection between two VPCs that routes traffic between them using private IPv4 or IPv6 addresses. VPC peering doesn’t support transitive routing, so a direct peering connection is required between each pair of VPCs that must communicate. Transit Gateway instead connects VPCs to a single Transit Gateway instance, which consolidates an organization’s entire AWS routing configuration in one place. It supports transitive routing: traffic is routed among all the connected networks by using route tables. Transit Gateway has additional costs, whereas VPC peering doesn’t (only the regular data-transfer costs apply). Our recommendation is to use VPC peering if you connect up to 10 VPCs; otherwise, consider Transit Gateway.

To prevent code modification after a global datastore failover, you can create an Amazon Route 53 custom DNS record to resolve the endpoint of the primary cluster, then add this custom DNS record in the code of the application in both us-west-1 and us-west-2. In case of failover, the endpoint modification can be done at the DNS level.
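As a rough illustration of this split, the sketch below models the two endpoints an application server would use: one shared custom DNS record for writes and one Region-local endpoint for reads. The host names, the `CacheEndpoints` class, and the `endpoints_for` helper are hypothetical and only serve the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheEndpoints:
    """Where one Region's application servers send cache traffic."""
    write_host: str  # custom DNS record, identical in every Region
    read_host: str   # endpoint of the cluster local to this Region
    port: int = 6379


# Hypothetical names, for illustration only.
WRITE_CNAME = "cache-primary.example.internal"
LOCAL_READ_ENDPOINTS = {
    "us-west-1": "sessions-usw1.example.internal",
    "us-west-2": "sessions-usw2.example.internal",
}


def endpoints_for(region: str) -> CacheEndpoints:
    """Writes follow the custom DNS record; reads stay in the local Region."""
    return CacheEndpoints(
        write_host=WRITE_CNAME,
        read_host=LOCAL_READ_ENDPOINTS[region],
    )
```

Because only the DNS record changes on failover, the application configuration in both Regions can stay the same before and after the promotion.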

The DNS automation

In this section, we introduce an additional functionality for automation.

You can implement an AWS Lambda function to automatically update the custom DNS record in the Route 53 private zone upon global datastore failover. The Lambda function uses an Amazon Simple Notification Service (Amazon SNS) notification of a global datastore failover as a trigger to update the DNS record. From there, the application in the secondary cluster Region resumes cross-Region write operations through the peering connection. At the same time, the application in the primary cluster Region operates locally. The details of the Lambda function are available later in this post.

You can see the updated architecture with the automation in the following diagram. The core elements of the DNS automation are Amazon SNS and Lambda, which changes the value of the Route 53 DNS record upon the ElastiCache cluster primary failover. Those core elements will also be part of the AWS CloudFormation template provided later in this post.

To validate the failover automation, you can make the secondary cluster a primary cluster that can accept write operations and test this functionality. For more details, see Promoting the secondary cluster to primary.
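If you prefer to script this test rather than use the console, the promotion can be requested through the ElastiCache API. The following is a minimal sketch that assumes you pass in a boto3 ElastiCache client; the `promote_secondary` wrapper and its argument names are hypothetical, while `failover_global_replication_group` is the boto3 call it forwards to.

```python
def promote_secondary(elasticache_client, global_datastore_id, region, replication_group_id):
    """Ask ElastiCache to make the given secondary cluster the new primary.

    elasticache_client is expected to be a boto3 ElastiCache client, for
    example boto3.client("elasticache").
    """
    return elasticache_client.failover_global_replication_group(
        GlobalReplicationGroupId=global_datastore_id,
        PrimaryRegion=region,
        PrimaryReplicationGroupId=replication_group_id,
    )
```

After the call completes, the promotion event should reach the SNS topic of the promoted cluster, which is what drives the DNS update.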

AWS services used

In this section, we dive deeper into the services used in this solution. We also provide a CloudFormation template in the accompanying GitHub repository that deploys the necessary AWS resources in both Regions to have an end-to-end working solution.

Amazon ElastiCache for Valkey

ElastiCache is a fully managed, Valkey-, Memcached-, and Redis OSS-compatible caching service that delivers real-time, cost-optimized performance for modern applications. ElastiCache scales to millions of operations per second with microsecond response time, and offers enterprise-grade security and reliability.

Valkey is an open source, in-memory key-value data store. It is a drop-in replacement for Redis OSS. It is stewarded by the Linux Foundation and rapidly improving with contributions from a vibrant developer community. AWS is actively contributing to Valkey; to learn more about AWS contributions for Valkey, see Amazon ElastiCache and Amazon MemoryDB announce support for Valkey.

With the ElastiCache for Valkey Global Datastore feature, you can write to your ElastiCache for Valkey cluster in one Region (primary) and have the data available to be read from two other cross-Region secondary clusters. This enables low-latency reads and disaster recovery across Regions. The clusters—primary and secondary—in your global datastore should have the same number of primary nodes, node type, engine version, and number of shards (in case of cluster mode enabled). Each cluster in your global datastore can have a different number of read replicas to accommodate the read traffic local to that cluster.

For this solution, the VPC for the primary ElastiCache for Valkey cluster and the VPC for the secondary cluster must use a different network CIDR.
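A quick way to check this before creating the peering connection is to test the two CIDR blocks for overlap. The sketch below uses only the Python standard library; the CIDR values in the usage lines are made-up examples.

```python
import ipaddress


def cidrs_overlap(cidr_a: str, cidr_b: str) -> bool:
    """Return True if the two CIDR blocks share any addresses."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


# Example values only: the first pair could be peered, the second could not.
print(cidrs_overlap("10.0.0.0/16", "10.1.0.0/16"))
print(cidrs_overlap("10.0.0.0/16", "10.0.128.0/17"))
```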

Amazon Route 53

Route 53 is a highly available and scalable cloud DNS web service. To reduce application code changes after a failover, this solution uses a DNS private zone accessible by the two VPCs connected with VPC peering. For ElastiCache cluster mode disabled, a DNS record (CNAME) is created in this DNS private zone to resolve the primary endpoint of the primary cluster in the global datastore.

If the global datastore is based on ElastiCache for Valkey clusters with cluster mode enabled, the CNAME must resolve the configuration endpoint of the primary cluster.
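The choice between the two endpoints can be sketched as a small helper. It assumes its input is one entry from the `ReplicationGroups` list returned by the boto3 `describe_replication_groups` call; the `write_endpoint` function name is hypothetical.

```python
def write_endpoint(replication_group: dict) -> str:
    """Pick the address the CNAME should point to for this cluster."""
    config = replication_group.get("ConfigurationEndpoint")
    if config:
        # Cluster mode enabled: clients discover shards through this endpoint.
        return config["Address"]
    # Cluster mode disabled: a single node group with one primary endpoint.
    return replication_group["NodeGroups"][0]["PrimaryEndpoint"]["Address"]
```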

Amazon SNS

Amazon SNS is a fully managed messaging service for both application-to-application and application-to-person communication. In this solution, ElastiCache is configured to publish events in an SNS topic. For more details, see Managing ElastiCache Amazon SNS notifications. When a Region is promoted to the role of primary in the global datastore, the following event is published to its associated SNS topic:

ElastiCache:ReplicationGroupPromotedAsPrimary : $ClusterName
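A subscriber has to tell this event apart from the other notifications on the same topic. The sketch below assumes the SNS message body is a JSON object keyed by the event name with the cluster name as its value; that layout is an assumption to verify against a real notification, and `promoted_cluster` is a hypothetical helper.

```python
import json

PROMOTED_EVENT = "ElastiCache:ReplicationGroupPromotedAsPrimary"


def promoted_cluster(sns_message: str):
    """Return the promoted cluster name, or None for any other message."""
    try:
        body = json.loads(sns_message)
    except ValueError:
        return None  # not JSON, so not in the layout assumed here
    if isinstance(body, dict):
        return body.get(PROMOTED_EVENT)
    return None
```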

AWS Lambda

Lambda is a serverless compute service that lets you run code without provisioning or managing servers, creating workload-aware cluster scaling logic, maintaining event integrations, or managing runtimes.

If a failover is manually triggered in the global datastore, the related notification is published in the SNS topic.

This SNS topic is used as a trigger to run a Lambda function. The code in the function updates the CNAME in the DNS private zone to replace the previous endpoint with the endpoint of the new primary cluster. This automation allows the application in both the primary and secondary Region to reconnect to the primary cluster for the write operations.

Prerequisites

To implement this solution, you need an AWS account with the necessary permissions.

Create the Lambda function and IAM policies

This section walks you through creating the Lambda function and the required AWS Identity and Access Management (IAM) policies. If you are using the CloudFormation template provided in the post, the Lambda function will already be created in your account.

- Create a new IAM policy called ElastiCache_Route53 with the following content:

- Create an IAM role for the Lambda function with the following policies:

  - AmazonElastiCacheReadOnlyAccess

  - AWSLambdaBasicExecutionRole

  - ElastiCache_Route53

- Create the Lambda function in each Region with the following configuration:

  - Runtime Python 3.12

  - Set the trigger for Amazon SNS (choose the topic of the local cluster)

  - Increase function timeout to 10 seconds

  - Lambda variables:

    - cname – Custom DNS record to update in the DNS private zone

    - endpoint – Primary (or configuration) endpoint of the local cluster

    - zone_id – Route 53 private zone ID
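For the ElastiCache_Route53 policy in the first step, one possible shape is a single statement that lets the function change records in the private zone only. This is a sketch under that assumption, not necessarily the policy shipped with the CloudFormation template; the `route53_update_policy` helper and the zone ID are hypothetical.

```python
import json


def route53_update_policy(zone_id: str) -> dict:
    """Build an IAM policy document scoped to one Route 53 hosted zone."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["route53:ChangeResourceRecordSets"],
                "Resource": f"arn:aws:route53:::hostedzone/{zone_id}",
            }
        ],
    }


# Placeholder zone ID; print the JSON to paste into the IAM policy editor.
print(json.dumps(route53_update_policy("Z0EXAMPLE"), indent=2))
```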

Use the following code:

After you promote the secondary cluster to primary, the SNS notification triggers the Lambda function, which updates the custom DNS record in Route 53 with the endpoint of the new primary cluster.
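A minimal sketch of a handler with this behavior is shown below. It reads the `cname`, `endpoint`, and `zone_id` variables described earlier and issues a Route 53 UPSERT. The 60-second TTL, the `build_change_batch` helper, the optional `route53_client` parameter (added so the logic can be exercised without AWS access), and the substring match on the event name are all assumptions of this sketch.

```python
import os

PROMOTED_EVENT = "ElastiCache:ReplicationGroupPromotedAsPrimary"


def build_change_batch(cname: str, endpoint: str, ttl: int = 60) -> dict:
    """Route 53 change batch that points the custom record at the endpoint."""
    return {
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": cname,
                    "Type": "CNAME",
                    "TTL": ttl,  # assumed value; tune to your failover target
                    "ResourceRecords": [{"Value": endpoint}],
                },
            }
        ]
    }


def lambda_handler(event, context, route53_client=None):
    # React only to the promotion event; ignore other ElastiCache notifications.
    messages = [record["Sns"]["Message"] for record in event.get("Records", [])]
    if not any(PROMOTED_EVENT in message for message in messages):
        return {"updated": False}

    if route53_client is None:
        import boto3  # imported lazily so the code above runs without AWS access
        route53_client = boto3.client("route53")

    # The local cluster was promoted, so point the record at its endpoint.
    route53_client.change_resource_record_sets(
        HostedZoneId=os.environ["zone_id"],
        ChangeBatch=build_change_batch(os.environ["cname"], os.environ["endpoint"]),
    )
    return {"updated": True}
```

Because each Region runs its own copy of the function, subscribed to the local cluster's topic and configured with the local endpoint, whichever cluster is promoted ends up as the target of the record.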

Clean up

To avoid future charges after you have verified the solution, you should delete the CloudFormation stacks in both Regions using the AWS CloudFormation console.

Summary

ElastiCache for Valkey Global Datastore provides fully managed, fast, reliable, and secure cross-Region replication. You can write to your ElastiCache for Valkey cluster in one Region and have the data replicated to up to two other Regions, typically with a latency of under one second. This enables low-latency reads and disaster recovery across Regions. The feature is available in 36 AWS Regions, 15 of which were recently launched.

In this post, we showed how to implement a multi-Region session store with ElastiCache for Valkey Global Datastore and transitioned from a single Region to a multi-Region architecture according to specific business requirements.

To get started with ElastiCache for Valkey Global Datastore, check out AWS What’s Next: ElastiCache Global Datastore Launch with AWS product expert Ruchita Arora and Replication across AWS Regions using global datastores.

About the Authors

Eran Balan is an AWS Senior Solutions Architect based in EMEA. He works with AWS enterprise customers to provide them with architectural guidance for building scalable architecture in AWS environments.

Ben Fields is an AWS Senior Solutions Architect based out of Seattle, Washington. His interests and experience include databases, containers, AI/ML, and full-stack software development. You can often find him out climbing at the nearest climbing gym, playing ice hockey at the closest rink, or enjoying the warmth of home with a good game.

Yann Richard is an AWS ElastiCache Solutions Architect. On a more personal side, his goal is to make data transit in less than 4 hours and run a marathon in sub-milliseconds, or the opposite.
