In today’s data-driven environments, where information is spread across numerous sources, translating complex problem statements into precise SQL code can be a significant challenge. The growth of large language models (LLMs) has helped simplify data access. Text-to-SQL can automatically transform analytical questions into executable SQL code for enhanced data accessibility and streamlined data exploration, from analyzing sales data and monitoring performance metrics to assessing customer feedback.

In this post, we explore how to use Amazon Relational Database Service (Amazon RDS) for PostgreSQL and Amazon Bedrock to build a generative AI text-to-SQL chatbot application using Retrieval Augmented Generation (RAG). We’ll also see how we can use Amazon MemoryDB with vector search to provide semantic caching to further accelerate this solution.

We use Amazon RDS for PostgreSQL as our database to store structured data, while leveraging Amazon Bedrock’s foundation models (FMs) for the generative AI capabilities. You can store both your data and embeddings (vector representations of text, images, and audio) in Amazon RDS for PostgreSQL, and use Amazon Bedrock to generate embeddings and translate natural language prompts into SQL queries that interact with your stored data.

Amazon Bedrock is a fully managed service that offers a choice of high-performing FMs from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities to help you build generative AI applications. We will integrate Amazon Bedrock with RDS for PostgreSQL database and use Amazon Bedrock to write SQL queries for the text prompts we provide as input. We use a combination of Streamlit and Amazon API gateway up front to invoke Amazon Bedrock using AWS Lambda.

Best practices for effective text-to-SQL

To deliver the most effective solution tailored to real-world use cases, this solution incorporates text-to-SQL best practices for performance optimization and security. This includes using Amazon MemoryDB with vector search capabilities and the Valkey engine to use the semantic caching technique, which can improve performance and reduce calls to a foundation model. In addition to a semantic cache, this solution will also leverage parameterized SQL, a read only database role for security, and table augmented generation techniques.

Semantic caching strategy

Semantic caching stores data based on its contextual meaning, which means that two queries with the same meaning will return the same result. This can be useful for complex queries involving multiple tables or data sources.

Semantically caching the text-to-SQL query responses when using LLMs enables performance and cost optimization. By semantically caching the query results, the system can retrieve and reuse the previously generated LLM responses when appropriate, significantly improving the overall response times. Furthermore, minimizing the number of LLM inferences through Amazon Bedrock not only improves the response speed but also reduces the operational cost with fewer LLM interactions. This approach allows for improved scalability of the application without a proportional cost increase.

When implementing semantic caching for this solution, our process follows two steps:

- First, we check for an exact textual match in the cache.

- If no exact match exists, we perform a semantic similarity search to find closely related cached queries.

This semantic caching strategy provides an efficient and cost-effective approach to using LLMs for text-to-SQL conversions.

Parameterized SQL

Because we’re dealing with user inputs that use Amazon Bedrock to convert user inputs to a SQL query, it’s crucial to create safeguards against SQL injection to prevent unauthorized access. For reference, see the Open Worldwide Application security project (OWASP) critical security risks to web applications.

In this solution, we use a parameterized query in which the parameter values are separated from the SQL syntax. We achieve this by requesting a parameterized SQL statement in the prompt to the LLM alongside the user input.

Without using parameterized queries, a third party could gain unauthorized access through user input that is executed by the database. By using parameterized queries, the database can first parse and plan the SQL query, and then execute the statement with the provided parameter values. This way, any SQL code in the user input is treated as regular data, not as executable code. Parameterized queries are considered a best practice to avoid SQL injection regardless of which database server or driver you are using.

As an additional layer of protection in this solution, we use a database role for the integration that only has select permissions on the tables in the database.

Table Augmented Generation

Implementing a centralized data catalog with Table Augmented Generation (TAG) approaches offer organizations a powerful tool for enhancing text-to-SQL responses from LLMs. In this solution, we create embeddings of database metadata, including tables, columns, and relationships, and use PostgreSQL’s pgvector functionality to generate searchable embeddings. When a user submits a query, the system uses the embeddings table to identify the most relevant database elements, effectively augmenting the user’s input with crucial structural context. This augmented query is then passed to the LLM, enabling it to generate SQL queries with an understanding of the specific data landscape. By incorporating this table-aware context into the generation process, LLMs can produce highly accurate, relevant, and tailored SQL responses that align precisely with the organization’s data infrastructure. This method not only improves SQL generation or synthesis, but also enhances the system’s ability to describe query results in natural language, providing a more comprehensive and user-friendly data interaction experience.

Solution overview

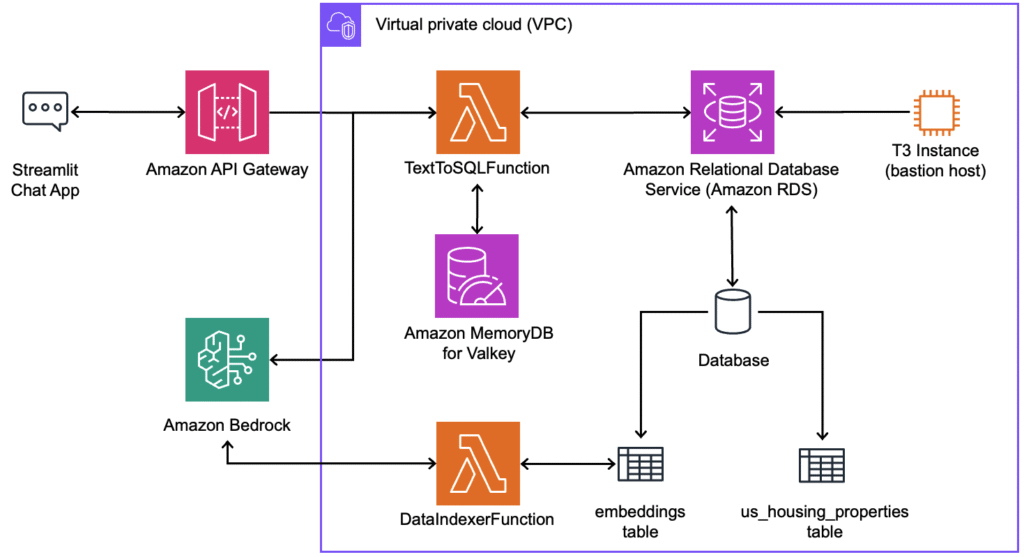

The solution presented in this post uses a chatbot application that uses the following architecture.

This architecture workflow includes the following steps:

- A database is created on an Amazon RDS for PostgreSQL database instance that contains a target dataset. For this example, we’re using US Housing Data (see prerequisites) represented by a single table in PostgreSQL.

- Using a Streamlit chat application, a user submits their query to an API Gateway endpoint with a Lambda function backend.

- The

TextToSQLFunctionchecks Amazon MemoryDB for Valkey to see if a similar semantic cache entry exists for the user’s prompt. If there’s a cache miss, the Lambda function performs a semantic search against the embeddings table (based on a text representation of database tables, columns, and relationships) using pgvector for similar tables to the prompt. - The

TextToSQLFunctionconstructs the prompt using the table information from the previous step, along with relevant instructions for constructing SQL. - The

TextToSQLFunctiontakes the SQL it generated in a previous step and invokes a query against the PostgreSQL database using a role that only has select permissions. The function then uses the query results to again prompt Amazon Bedrock for an interpretation of the results based on the user query.

There’s also a separate DataIndexerFunction that’s created to achieve the TAG design.

- A Lambda function, the

DataIndexerFunction, reads from the information_schemain PostgreSQL. - The

DataIndexerFunctioncreates a text representation of the tables and relationships within the database. - The

DataIndexerFunctionuses the text representation from the previous step to generate the embeddings using the Amazon Titan Embeddings G1 – Text model in Amazon Bedrock. - The embeddings are stored in an embeddings table in the RDS for PostgreSQL database, and retrieved using the pgvector extension, in the event of a cache miss.

Prerequisites

The following are needed to proceed with this post:

- An AWS account

- A Git client to clone the source code provided

- Docker installed and running on the local host or laptop

- Install AWS CDK

- The AWS Command Line Interface (AWS CLI)

- The AWS Systems Manager Session Manager plugin

- Amazon Bedrock model access enabled for Anthropic’s Claude 3.5 Sonnet v1 and Amazon Titan Embeddings G1 – Text in the us-west-2 AWS Region

- Python 3.12 with the pip package manager

- 500,000+ US Homes Data (For Sale Properties)

- License – CC0: Public Domain

Download the dataset and upload to Amazon S3

- Navigate to the 500,000+ US Homes Data dataset, and download the dataset to your local machine.

- Unzip the zip file, which will expand into a file called 600k US Housing Properties.csv.

- Upload this file to an Amazon Simple Storage Service (Amazon S3) bucket of your choice in the AWS account where you’ll be deploying the AWS Cloud Development Kit (AWS CDK) Replace

<bucket-name>with the bucket in your account.

Deploy the solution

- Clone the repository from GitHub.

- Setup the Python environment and install dependencies.

- Deploy the CDK application and specify the S3 bucket name used in the previous section as a parameter. It will take about 20-30 minutes to deploy the MemoryDB and RDS instances. Replace

<account-id>and<bucket-name>with your AWS account ID and bucket name from the previous section.

Note: if you receive an error at this step, ensure Docker is running on the local host or laptop, docker –-version verifies if the service is running locally.

- Use the py script to access the bastion host.

To perform these steps, you must have the Session Manager plugin for the AWS CLI installed as per the prerequisites. You can verify the Session Manager plugin installation by following the steps to Verify the Session Manager plugin installation.

Run the command provided by the script to use Session Manager, a capability of AWS Systems Manager, to access the bastion host. This will also set the SECRET_NAME environment variable for the session to configure the RDS database.

- On the bastion host, install required dependencies.

- Copy the artifact file from the S3 bucket name used in the previous section (Download the dataset and upload to Amazon S3) to the bastion host.

- On the same bastion host terminal session, create the following Python code by copying and pasting the code into your terminal then press enter.

- Run the Python code to input data into the RDS instance from the spreadsheet.

- After the script completes, navigate to the AWS Lambda console from your browser.

- Search for the function named

DataIndexerStack-DataIndexerFunction. - Open the function and navigate to the Test Choose Test. This will populate the embeddings table with database schema information.

- Next, also from the Lambda console, search for the function named

AppStack-TextToSQLFunction. - Open the function, and navigate to the Test Edit the Event JSON with the following:

- Choose Test to trigger the Lambda function to query the RDS instance.

Note: if you receive an error in the initial test execution, the index in MemoryDB for Valkey may still be under construction. Choose Test again to retry, and the attempt should be successful.

Test the text-to-sql chatbot application

To run the Streamlit app, perform the following steps from your local host or laptop. In this section, we generate a Streamlit secrets file to store the API key and capture the API endpoint from the deployed CDK application.

- Use the py script to set up Streamlit on your local machine:

The output will look similar to the following:

- Follow the instructions provided by the setup_helper script, with the four steps to:

- Retrieve the API key for the API Gateway deployment

- Create a

./streamlit/.streamlit/secrets.tomlfile with theapi_keyandapi_endpointvalues - Install Streamlit

- Run the Streamlit app

Try some of the following questions to see the responses from the solution:

- What are the top 5 most expensive properties in San Francisco, CA?

- What key factors influence home prices in the 90210 zip code?

- List top homes in the Bay Area under $700 per sq ft and over 1000 sq ft, sorted by price

Example output for: “What are the top homes in WA where avg sq ft is > $700 and sq ft is greater than 1000”

Example output for: “What are properties in Seattle that are under $600 per sq ft and over 1000 sq ft?”

In rare cases, you may experience a Gateway Timeout (HTTP code 504), which means the app was not able to respond within the 29-second timeout. Recommended actions:

- Retry your prompt, as the result may have already been cached

- Request an increase in the integration timeout for API Gateway

While this demonstration uses a single housing data table, the underlying text-to-SQL application is capable of supporting complex JOINs across multiple tables in more advanced implementations.

Clean up

To clean up the resources, you can use cdk destroy --all. For the sake of infrastructure management best practices, we recommend deleting the resources created in this demonstration.

Conclusion

In this post, we demonstrate a solution that allows you to use natural language to generate complex SQL queries with a variety of resources enabled by Amazon RDS for PostgreSQL and Amazon Bedrock. We also increased the accuracy of the generated SQL queries through effective best practices such as caching, parameterized SQL, and table augmented generation processes. Additionally, we used the metadata in the PostgreSQL’s pgvector to consider the table names asked in the query through the RAG framework. Finally, we then tested the solution in various realistic scenarios with different query complexity levels.

To get started, open the Amazon Bedrock console or review the new APIs in the Amazon Bedrock SDK, and start creating your prompts and flows today.

To learn more about using Amazon RDS for PostgreSQL and pgvector for AI and ML workloads, see Amazon RDS for PostgreSQL support for pgvector for simplified ML model integration.

For customers whose data is stored in Amazon Redshift or Amazon Sagemaker Lakehouse, Amazon Bedrock Knowledge Bases now supports structured data retrieval. More information can be found here Amazon Bedrock Knowledge Bases now supports structured data retrieval.

About the Authors

Frank Dallezotte is a Senior Solutions Architect at AWS and is passionate about working with independent software vendors to design and build scalable applications on AWS. He has experience creating software, implementing build pipelines, and deploying these solutions in the cloud.

Archana Srinivasan is an Enterprise Support Lead within Enterprise Support at Amazon Web Services. Archana provides strategic technical guidance for independent software vendors (ISVs) to innovate and operate their workloads efficiently on AWS cloud.

Source: Read More