This is a guest post co-written with Khurram Naseem, Senior Director of Engineering at Careem and Haziq Masud, Senior Software Engineer at Careem

Careem powers rides, deliveries, and payments across the Middle East, North Africa and South Asia. Their massive platform serves 50 million users in 100 cities using a robust, cloud-centered infrastructure built entirely on Amazon Web Services (AWS).

As Careem grew, so did its data infrastructure challenges. Their monolithic 270 TB Amazon Relational Database Service (Amazon RDS) for MySQL database (DB) consisting of one writer and five read replicas— experienced performance issues due to increased storage utilization, slow queries, high replica lag, and increased Amazon RDS cost.

To ensure efficient data purging process, we focused on the following goals:

- Purge 24 TB of data with minimal downtime

- Maintain system performance and scalability to support business-critical applications

- Optimize costs by reducing storage utilization

In this post, we provide a step-by-step breakdown of how Careem successfully implemented a phased data purging strategy, improving DB performance while addressing key technical challenges. This large-scale optimization reduced the overall Amazon RDS for MySQL storage footprint from 270 TB to 78 TB, significantly improving database efficiency.

Solution overview

We needed to delete 24 TB of historical data from our RDS for MySQL DB without impacting the performance of the current writer DB instance.

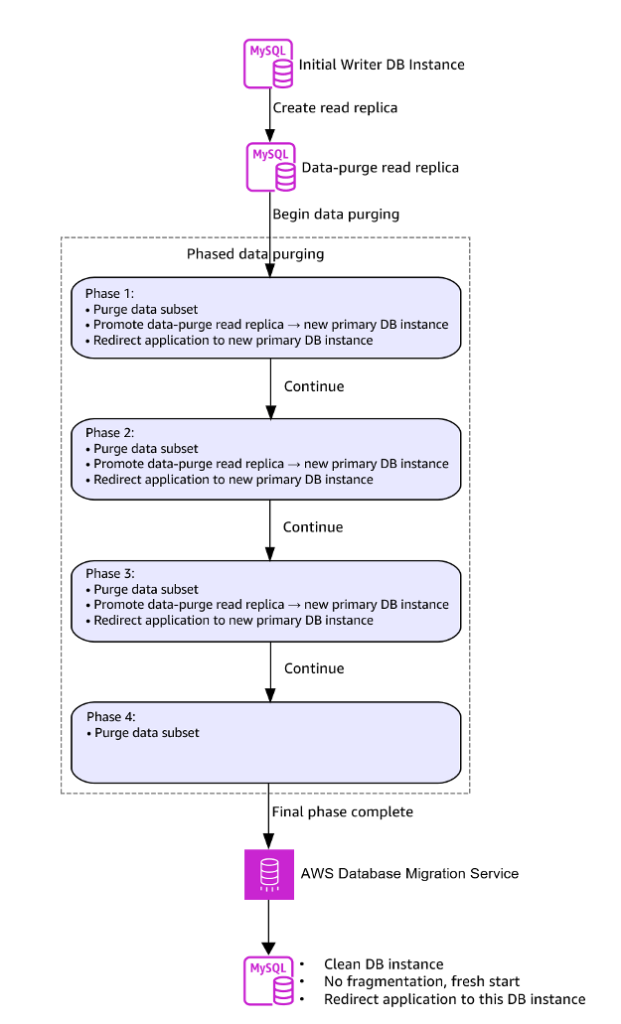

Additionally, we aimed to resolve table fragmentation, improve performance and optimize storage utilization to reduce overall Amazon RDS costs. The following diagram shows the high-level steps flow involved in Careem’s RDS for MySQL optimization journey:

This post outlines Careem’s Amazon RDS for MySQL optimization journey in two key phases:

- Part 1: Strategic data decomposition – We explore a structured, methodical approach of breaking down and purging data in manageable chunks, while making sure the production RDS for MySQL DB instance remained fully operational and unaffected.

- Part 2: DB transformation – We detail how we used AWS Database Migration Service (AWS DMS) Serverless to migrate our purged RDS for MySQL read replica to a new RDS for MySQL DB instance, enhancing performance, scalability, and cost-efficiency.

Careem environment

Before we dive into the migration process, let’s clarify the key DB instances involved in this process:

- Original production primary DB instance – This refers to the source RDS for MySQL DB that handles write operations in the current production environment.

- Original production read replicas – These are the source RDS for MySQL read replicas (numbered 1–5) that handle read operations in the current production environment.

- Data-purge read replicas – This is a series of intermediate read replicas used during each phase of the data purge process.

- New production primary DB instance (Phase 1–4) – These are the target RDS for MySQL DBs that become new production writers after each phase of data deletion.

- New production read replicas (Phase 1–5) – These are the target RDS for MySQL read replicas that handle read operations after each phase of data deletion.

- New primary DB instance – This is the new production DB instance created as part of the migration.

Part 1: Strategic data purging

To efficiently manage historical data, we implemented a phased data purging process, consisting of four iterations. This iterative approach was necessary because of varying data density.

Each iteration followed a structured seven-step process to provide a controlled and safe data purge. At the end of the process, we successfully removed 24 TB of obsolete data from the primary DB instance. This progressive purging significantly reduced the DB storage footprint, enhanced performance, and reduced Amazon RDS for MySQL deployment cost.

At a high level, our Amazon RDS for MySQL deployment consists of a writer DB instance and five read replicas, each provisioned with 45 TB of IO2 EBS storage and 22,000 provisioned IOPS, as illustrated in the following diagram.

To mitigate risk to the production environment, we initiated the process by creating a dedicated read replica for data purging operations. This read replica will be opened into read/write mode to perform data purging.

Each phase of the data purge followed a structured sequence to implement a safe and efficient deletion process on the data-purge read replica:

- Identify data for purging.

- Create and prepare the data-purge read replica.

- Enable

ON DELETE CASCADEfor foreign keys. - Execute data deletion.

- Reset foreign key constraints.

- Conduct thorough testing at the data-purge read replica.

- Promote the data-purge read replica to production.

We walk through these steps in more detail in the following sections.

Step 1: Identify data for purge

The first step in the purging process is to determine which data should be deleted. This involves the following:

- Defining clear purging criteria – Establish specific rules for identifying data eligible for purging.

- Identifying tables for data purge – Select the DB tables for purging. In our case, we identified 43 tables, with the largest table being approximately 2 TB in size.

Step 2: Create and prepare a data-purge read replica

To minimize the impact on the production environment, we first created a data-purge read replica and prepared it for data deletion. This process involves the following steps:

- Create a data-purge read replica to offload the data purging process from the primary DB instance.

- Monitor the

ReplicaLagmetric in Amazon CloudWatch and wait untilReplicaLagreaches0. - Associate a new parameter group with the data-purge read replica:

- On the Amazon RDS console, choose Databases in the navigation pane.

- Select the read replica and choose Modify.

- Choose the updated parameter group.

- Apply the changes and reboot the instance without failover after the status changes to Available and Pending-reboot.

- Convert the data-purge read replica into read/write mode by turning off read-only settings:

- On the Amazon RDS console, choose Parameter groups in the navigation pane.

- Select the relevant parameter group and choose Edit.

- Set

read_only = 0, then save the changes.

At this stage, the primary DB instance continued to replicate. We still had not performed data purge.

- Wait until

ReplicaLagreaches0and then stop replication using the following SQL command:

At this point, the primary DB instance stopped replicating the data change to the data-purge read replica.

Step 3: Enable ON DELETE CASCADE on foreign keys

In our data-purge read replica, several child tables had foreign keys without the ON DELETE CASCADE option, according to our business requirements. However, these tables were part of the data purge process, and we needed to make sure that deleting records from primary tables also removed the corresponding child tables’ records automatically.

Activating ON DELETE CASCADE consisted of the following steps:

- Retrieve the existing foreign key constraint using the

SHOW CREATE TABLEcommand:Look for the

CONSTRAINTdefinition in the output to identify the foreign key name. - Remove the current constraint before adding a new one:

Add ON DELETE CASCADE option to the foreign key constraints to automate deletion of child table records when their parent records are deleted.

Step 4: Execute data deletion

Our objective was to remove historical data from the data-purge read replica without causing referential conflicts. Since this data was static and no longer actively updated on the primary database instance, we could safely proceed with deletion on the read replica.

To manage this cleanup efficiently, we set up a data purge job using Go Programming Language (Go) running on an Amazon Elastic Compute Cloud (Amazon EC2) instance. Go was selected because it offers native capabilities for running multithreaded applications. The job used SQL queries to remove old records in batches. This approach allowed us to:

- Minimize impact on the system

- Gradually remove data

- Track progress with monitoring, logging, and error handling

Here’s the high-level logic behind the deletion:

Data purge visual flow:

[Date Range] ── [Fetch IDs Daily] ──

[Fetch IDs Daily] ── [Split Into Batches] ──

[Split Into Batches] ── [Delete Each Batch]

[Delete Each Batch]

(1 quarter) (such as, 2000 IDs) (such as, 2 x 1000) (SQL delete)

Step 5: Reset foreign key constraints

MySQL replication from the original writer DB instance to the data-purge read replica requires the schemas of both DBs to be fully aligned. Because we had temporarily enabled ON DELETE CASCADE in Step 3, we reverted this change to restore the original foreign key constraints using the following SQL command:

Step 6: Prepare the data-purge read replica for promotion to new primary writer DB instance

We resumed replication at the data-purge read replica by executing the following SQL command:

The replication catch-up time varied based on the data gap, but in our case, it took approximately 7 hours for the data-purge read replica to fully synchronize with the production writer DB instance.

We first assigned the data-purge read replica to the same parameter group as the current production writer DB instance.

To assess the read performance of the data-purge replica, we gradually shifted 5% of the read traffic from the production read replicas to the data-purge read replica. Throughout this process, we closely monitored the following:

- Application performance of the production application.

- Read latency metrics to comply with application read-focused SLAs. The application SLA for read latency was 1 millisecond. We achieved an average latency of 0.52 millisecond, as shown in the following figure.

Because the data-purge read replica was still in read-only mode, we were unable to test write latency at this stage. However, based on successful read latency validation, we proceeded to the next phase.

After confirming the stability and performance of the data-purge read replica, we reverted the read traffic back to the production DB. This incremental testing approach provided a low-risk validation of the data-purge replica without impacting the live production environment.

Step 7: Promote the data-purge read replica to the new production writer DB instance

After successfully completing testing, we promoted the data-purge read replica to become the new production writer DB instance by following a structured approach:

- Convert the single-Availability Zone (AZ) data-purge read replica to a Multi-AZ deployment to enhance fault tolerance.

- Create five nested read replicas using the data-purge read replica as the source, as shown in the following diagram.

- Confirm the data-purge read replica is at 100% sync status and temporarily stop the application during off-peak business hours.

- Promote the data-purge read replica to new primary DB instance

- Redirect the application to the newly promoted writer DB instance.

- Restart the application and conduct comprehensive health checks to validate system stability.

- Take an Amazon RDS snapshot of the previous writer DB instance for backup and compliance.

- Retire the former RDS for MySQL instances, optimizing costs by eliminating redundant infrastructure.

we purged 1.5 TB of data. The following table illustrates how each iteration progressively purged data and transitioned to a new writer DB instance.

| Iteration | Data purged | Previous writer | New writer |

| 1 | 1.5 TB | Original primary writer DB instance | Data-purge read replica (Phase 1) |

| 2 | 4 TB | Data-purge read replica (Phase 1) | Data-purge read replica (Phase 2) |

| 3 | 6 TB | Data-purge read replica (Phase 2) | Data-purge read replica (Phase 3) |

| 4 | 12.5 TB | Data-purge read replica (Phase 3) | Data-purge read replica (Phase 4) |

At this stage, disk space had not been reclaimed at the EBS volumes across both the writer and read replica instances. The data purging process focused strictly on deleting data from the DB while maintaining infrastructure integrity.

However, the project faced a significant challenge during Phase 4 post-data purge completion.

Phase 4 challenges

Although Phase 4 successfully decommissioned 12.5 TB of data at the data-purge read replica, making it the largest single-phase purge, it also introduced critical performance issues that impacted system stability.

We observed a sharp increase in both read and write latency, exceeding 20 milliseconds, far beyond the business application SLA requirement of 1 millisecond. Despite deploying db.r7g.16xlarge instance with optimized settings – innodb_purge_threads=32 and innodb_purge_batch_size=5000 – the data-purge read replica experienced severe performance degradation, even without an active workload.

The following figure illustrates the data-purge read replica (Phase 4) MySQL InnoDB history list length (HLL).

The performance degradation was traced to MySQL InnoDB’s handling of rows marked for deletion in the undo log. The InnoDB purge process involves reading deleted rows into the buffer pool, cleaning and purging them, and finally flushing them back to storage.

The intensive I/O requirements of the purge operation necessitated scaling our io2 EBS provisioned IOPS from 22,000 to 30,000, and subsequently to 50,000 IOPS when the initial increase proved insufficient.

Additionally, the data deletion operation generated approximately 5.7 TB of undo logs, creating further complications. Managing undo log file rotation was important to reduce IO2 EBS storage costs, and we aimed to optimize the undo file size to 30 GB.

Addressing these challenges required careful resource scaling and tuning to stabilize DB performance and achieve a smooth transition to the final production state.

Evaluating solutions: Overcoming InnoDB HLL bottlenecks

While assessing potential fixes, we considered natural MySQL InnoDB HLL reduction. However, this method was impractical, because it would take approximately 3.5 months to reduce HLL to 100,000 entries from 5.87 billion, far exceeding our project deadlines. Because of the unacceptable 20-millisecond read latency of application’s queries, we couldn’t promote the data-purge read replica (Phase 4) to the new production writer DB instance (Phase 4) for production use.

To summarize, at this step we still faced the following challenges:

- 5.87 billion MySQL InnoDB HLL, causing severe performance degradation

- 24 TB table-level fragmentation, impacting query performance

- 5.7 TB of undo log files, adding significant storage overhead

- EBS disk space still un-reclaimed, increasing storage costs

Given these constraints, we needed to re-evaluate our strategy and improve performance before proceeding with the final cutover.

Part 2: DB transformation

After a thorough evaluation in partnership with AWS, we determined that the most efficient path forward was to create a new RDS for MySQL instance (new writer DB instance) and migrate all data from the data-purge read replica (Phase 4) using AWS DMS Serverless. This approach effectively addressed the three critical challenges we faced:

- 24 TB of table fragmentation

- 5.87 billion MySQL InnoDB HLL entries

- Reduce the 5.7 TB undo file

- Reduce RDS storage size

At this stage, the data-purge read replica contained approximately 9 TB of tables’ data, yet it was using 45 TB of IO2 EBS storage. The following table shows current tables’ data and storage size with each DB instance:

| DB Instance | Tables’ Data Size | DB Instance Storage Size |

| Current Wrier DB Instance | 21.5 TB | 45 TB |

| Each Read Replica | 21.5 TB | 45 TB |

| Data Purge Read Replica | 9 TB | 45 TB |

We walk through the steps to implement this solution in the following sections.

Step 1: Reset foreign key constraints

At phase 4 data-purge read replica we had temporarily enabled ON DELETE CASCADE in Step 3 of Part1, we reverted this change to restore the original foreign key constraints using the following SQL command:

Step 2: Set up a new primary writer DB instance

Setting up a new writer DB instance consisted of the following steps:

- We created a new writer Single-AZ DB instance with 13 TB of IO2 EBS storage, significantly smaller than the 45 TB used by the data-purge read replica (Phase 4).

- The application-related schema was copied from the new production read replica (Phase 3) and used to create an empty DB at the new writer DB instance.

- Schema export from production replica:

- Schema import to new writer DB instance:

This step created all tables, indexes, constraints, and structure in the newly created RDS for MySQL DB instance without data.

- To facilitate replication, we configured the current production writer DB instance to retain binlogs for 7 days (the maximum limit). This made sure that enough long duration binlog files were available when needed for external replication to the new writer DB instance. This was achieved using the following SQL command:

- The replication process on the data-purge read replica was stopped manually, and the last synchronization point with the writer DB instance was recorded using the following command:

From the output of the preceding command, we captured

Relay_Master_Log_File, which shows the binlog file name from the writer DB instance that the data-purge read replica is currently processing, andExec_Master_Log_Pos, which represents the position within the current writer’s binlog file that the data-purge read replica has applied. This was key information that we used in the next few steps. - The recorded binlog file and position that we captured served as the starting point for initiating replication to the new writer DB instance, avoiding the loss of business transaction data from the current writer DB instance to the new writer DB instance.

Note: We are only focusing on the current primary DB instance, data-purge read replica, and the new primary DB instance, so we omitted the current production read replicas for read simplicity:

The following diagram illustrates the AWS DMS Serverless replication to the newly created RDS for MySQL DB instance from the data-purge read replica.

Step 3: Data transfer with AWS DMS

To migrate data efficiently, we used AWS DMS Serverless to perform a full data load from the data-purge read replica to the new writer DB instance, as shown in the preceding diagram. This approach made sure that the data-purge read replica and new writer DB instance had identical data.

When the AWS DMS full load task for data migration was complete, database user accounts from the production read replica were also copied to the new RDS for MySQL instance using a custom script.

Step 4: Establish MySQL external replication to the newly created MySQL DB instance

One of the most critical steps in this migration approach was setting up MySQL external replication from the production writer DB instance to the newly created standalone RDS for MySQL DB instance. Using the binlog file and position captured earlier, we configured replication with the following command:

After the configuration was complete, we initiated replication using the following command:

With this setup, the new RDS for MySQL DB instance became a new replica of the production writer DB instance, but with significant improvements:

- Eliminated table fragmentation

- Alleviated excessive MySQL InnoDB HLL

- Reduced undo log file to approximately 5.7 GB

- Reduced RDS storage size

The following diagram illustrates the setup for MySQL external replication from the production writer DB instance to the newly created RDS for MySQL DB instance.

The newly created RDS for MySQL instance took approximately 11 hours to catch up with the production writer DB instance by applying 3 days’ worth of differential binlogs.

Step 5: Rigorous testing at the new writer DB instance

To validate the performance and efficiency of the new writer DB instance, we conducted thorough testing before finalizing the migration:

- Configuration consistency – We made sure that the new writer DB instance used the same parameter group as the production writer DB instance, maintaining identical settings for seamless compatibility.

- Gradual read traffic shift – To assess real-world performance, we initially routed 5% of the production read traffic to the newly created new writer DB instance. After observing stable results, we gradually increased the read traffic, monitoring for anomalies.

- Performance monitoring – We closely tracked application service health metrics and DB performance, targeting a 1-millisecond read response time. Impressively, the new writer DB instance, deployed as a Single-AZ instance, achieved an even lower read latency of 0.01 milliseconds.

- Traffic rollback and final validation – After successfully validating the performance, we routed all read traffic back to the production replica, achieving a smooth and controlled rollback.

Step 6: Readiness of the new writer DB instance

It was important that the new writer DB instance follow the same DB topology as the current production RDS for MySQL deployment in terms of Multi-AZ of the writer DB instance and having five more read replicas, as shown in the following diagram. This includes the following steps:

- Upgrade to Multi-AZ for high availability – The new writer DB instance was first converted into a Multi-AZ instance, providing high availability and fault tolerance

- Scale the read replicas – To match the existing production DB setup, we created five single-AZ nested read replicas of the new writer DB instance, maintaining the same DB structure as before

Step 7: Cut over to the new writer DB instance

To perform the cut over, we followed a carefully planned process during off-peak business hours:

- Before initiating the switchover, we validated that the new writer DB instance was fully in sync with the current production writer.

- To prevent data inconsistencies during the switch, we temporarily stopped the application during the least busy hours.

- With the application paused, we performed a final check to confirm there was no replication lag between the current production writer DB instance and the new writer DB instance.

- When synchronization was confirmed, we stopped MySQL external replication between the current production writer DB instance and the new writer DB instance, effectively making the new writer DB instance the new source of truth.

- We updated the application configurations to point to the new writer DB instance, making sure future DB transactions were directed to the new production writer DB instance.

- With the new setup in place, we restarted the application and performed a comprehensive application health check.

The following diagram illustrates the process of promoting the new writer DB instance to become the new production writer DB instance.

Step 8: Clean up

Cleanup consisted of the following steps:

- We dropped the old RDS for MySQL writer instance and its five read replicas.

- We took a snapshot of the previous writer DB instance for backup and recovery purposes.

- After the transition was validated, the decommission of the old production environment, reducing operational costs.

Outcome

This solution yielded the following results:

- Successfully purged 24 TB of historical data

- Reduced DB footprint from 270 TB to 78 TB (spanned over one writer DB instance and five read replicas)

- Reduced Provisioned IOPS from 50,000 to 12,000

- Achieved read latency of 0.54 milliseconds (better than 1-millisecond SLA)

- Experienced almost zero downtime during migration

- Resolved application table fragmentation and MySQL InnoDB HLL issues

We also achieved the following key benefits:

- Significant cost reduction through optimized storage and infrastructure

- Improved system performance and stability

- Better scalability for future growth

- Enhanced operational efficiency

- More efficient resource utilization with right-sized infrastructure

- Simplified DB management and maintenance

Key observations and challenges

As part of building this solution, we gained the following takeaways:

- Impact of data volume on InnoDB HLL:

- Observation – Initial small-scale deletions had minimal effect on InnoDB HLL, whereas later large-scale deletions (several TB) caused InnoDB’s HLL to surge to 5.87 billion.

- Explanation – InnoDB HLL tracks transactional changes. Large-scale deletions generate excessive undo logs, significantly increasing HLL.

- Lesson learned – The scale of data deletion directly affects InnoDB HLL. Strategic planning is required to manage large purges effectively.

- High InnoDB HLL and increased read and write latency:

- Observation – Read latency increased from 1 millisecond to 20 milliseconds when InnoDB HLL reached 5.87 billion on the data-purge read replica.

- Explanation – A high HLL increases the workload on the InnoDB storage engine, creating an I/O bottleneck during the purge process.

- Lesson learned – HLL should be actively monitored and controlled to prevent performance degradation.

- Provisioned IOPS scaling limitations:

- Observation – Scaling Provisioned IOPS from 22,000 to 30,000 to 50,000 helped but didn’t fully resolve latency issues.

- Explanation – Although increasing Provisioned IOPS improved throughput, InnoDB’s purge process remained the bottleneck because of long HLL still required to be cleaned up

- Lesson learned – Performance optimization must target root causes, such as the efficiency of the purge process.

- Time constraints for purging high InnoDB HLL:

- Observation – Estimated 3.5 months to reduce InnoDB’ HLL was unacceptable due to project deadlines.

- Explanation – InnoDB purge operations scale linearly with HLL size, making large data purges time-consuming.

- Lesson learned – Proactive planning is necessary to mitigate delays in large-scale data decommissioning.

- Binlog retention risks and data synchronization:

- Observation – The 7-day binlog retention limit on the writer DB instance risked creating a large replication gap.

- Explanation – If the read replica fell too far behind, replication to the reader instance would fail, due to missing binlogs.

- Lesson learned – Binlog retention strategies must align with long-running data purges to avoid synchronization issues.

- Undo log file size and EBS storage reclamation:

- Observation – The undo file unexpectedly grew to 5.7 TB (far beyond the planned 20 GB), and tables became severely fragmented, consuming 24 TB of space.

- Explanation – Large-scale deletions generate massive undo logs, and table fragmentation leaves unused storage blocks for InnoDB purge thread cleanup.

- Lesson learned – Performing routine table maintenance helps optimize both storage usage and DB performance.

- Read replica becomes unusable due to high latency:

- Observation – Due to MySQL InnoDB HLL purge operations application query latency increased and rendered the read replica unusable for business applications..

- Explanation – Business applications require low-latency responses. Increased latency can degrade user experience and impact performance SLAs.

- Lesson learned – Thorough performance testing is important before promotion to production.

Future plans

We’re transitioning from monolithic RDS deployments to a service-based DB architecture, while implementing table partitioning. This modernization will enhance our scalability, performance, cost efficiency, and operational excellence.

Conclusion

In this post, we demonstrated how Careem successfully optimized a 270 TB RDS for MySQL deployment, spread across one writer and five read replicas, using a strategic data purge approach and AWS services, reducing storage by 162 TB (27 TB * 6). Our journey highlights the importance of strategic data management and using cloud technologies.

Share your feedback in the comments section.

About the Authors

Kanhaiya Lal is a Senior Database Specialist TAM at AWS, with deep expertise in Amazon Aurora and RDS for PostgreSQL and MySQL. He works closely with enterprise customers, providing strategic guidance on operational excellence, workload optimization, and database best practices. Kanhaiya is passionate about helping organizations unlock the full potential of their AWS database solutions.

Kanhaiya Lal is a Senior Database Specialist TAM at AWS, with deep expertise in Amazon Aurora and RDS for PostgreSQL and MySQL. He works closely with enterprise customers, providing strategic guidance on operational excellence, workload optimization, and database best practices. Kanhaiya is passionate about helping organizations unlock the full potential of their AWS database solutions.

Khurram Naseem is a seasoned technology leader with over 25 years of experience in engineering and management. He currently serves as Senior Director of Engineering at Careem, where he drives digital transformation, team leadership, and technological innovation. Khurram has a proven track record of building scalable systems, fostering high- performance teams, and delivering enterprise-grade solutions across diverse industries.

Khurram Naseem is a seasoned technology leader with over 25 years of experience in engineering and management. He currently serves as Senior Director of Engineering at Careem, where he drives digital transformation, team leadership, and technological innovation. Khurram has a proven track record of building scalable systems, fostering high- performance teams, and delivering enterprise-grade solutions across diverse industries.

Imane ZEROUAL is Senior Cloud Operations Architect at Amazon Web Services. With long industry experience, including roles in networking, Telco, cloud operations, and systems engineering. In her current role, she helps AWS customers run resilient, secure, sustainable, and cost-efficient workloads on AWS.

Imane ZEROUAL is Senior Cloud Operations Architect at Amazon Web Services. With long industry experience, including roles in networking, Telco, cloud operations, and systems engineering. In her current role, she helps AWS customers run resilient, secure, sustainable, and cost-efficient workloads on AWS.

Haziq Masud is a Senior Software Engineer specializing in database management at Careem. With three years of experience on the Storage Team, he focuses on AWS RDS optimization and automation, ensuring robust database performance while facilitating seamless developer workflows.

Haziq Masud is a Senior Software Engineer specializing in database management at Careem. With three years of experience on the Storage Team, he focuses on AWS RDS optimization and automation, ensuring robust database performance while facilitating seamless developer workflows.

Source: Read More