In this post, we explore a streamlined solution that uses AWS Lambda and Python to read and ingest CSV data into an existing Amazon DynamoDB table. This approach adheres to organizational security restrictions, supports infrastructure as code (IaC) for table management, and provides an event-driven process for ingesting CSV datasets into DynamoDB.

For many organizations operating in highly regulated environments, maintaining comprehensive audit trails of data processing is not just beneficial—it’s often mandatory for compliance. This solution addresses that need by automatically documenting both successful transactions and failed records, providing the transparency required for regulatory validation and reconciliation activities. By creating distinct outputs for processed and unprocessed items, the system provides the evidence necessary to satisfy auditors across financial services, healthcare, and other highly regulated industries where data handling documentation may be required.

Key requirements this solution addresses include:

- Programmatically ingest CSV data into DynamoDB using an extract, transform, and load (ETL) pipeline

- Continuously append data to an existing DynamoDB table

- Extend the solution to on-premises environments, not just AWS

- Use an event-driven approach as new data becomes available for ingestion

- Reduce dependence on the AWS Management Console and manual processes

- Use audit trails for point-in-time snapshots of the transformed data

This solution is ideal for small to medium-sized datasets (1K to 1M+ rows per file). If you need to resume interrupted import tasks, ingest larger datasets, or run for longer than 15 minutes per execution, consider using an AWS Glue ETL job instead of AWS Lambda.

Solution Overview

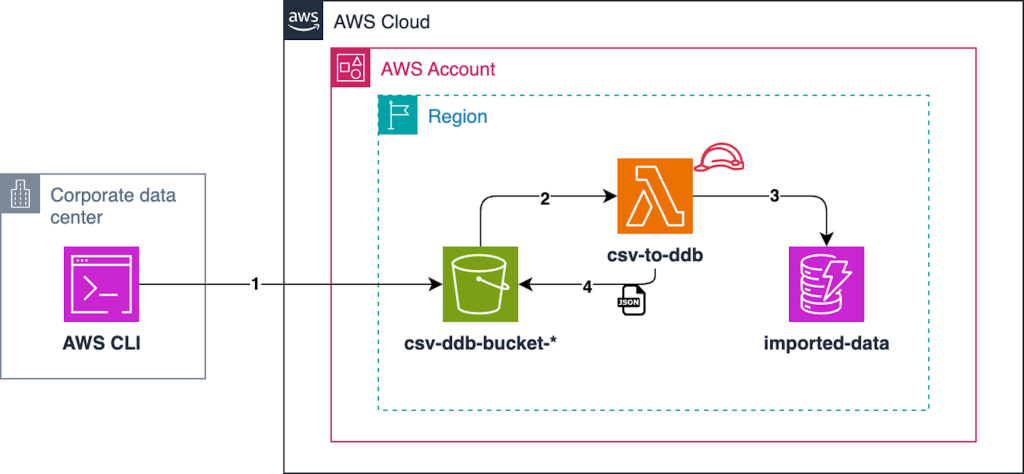

The data ingestion workflow is as follows:

- Use the AWS Command Line Interface (AWS CLI) or schedule a file transfer to upload CSV data to an Amazon Simple Storage Service (Amazon S3) bucket.

- Amazon S3 Event Notifications triggers a Lambda function.

- The Lambda function reads the CSV file from the S3 bucket, appends the data to an existing DynamoDB table, and persists transformed data to a JSON object in the original bucket.

The preceding diagram shows the end-to-end data ingestion workflow.
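As a minimal sketch of the second and third workflow steps, the following Python shows how a handler might pull the bucket name and object key out of an S3 event notification. The function name `extract_s3_objects` and the sample bucket and key are hypothetical; the post's actual Lambda code may structure this differently.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record.get("s3", {})
        bucket = s3_info.get("bucket", {}).get("name")
        key = s3_info.get("object", {}).get("key")
        if bucket and key:
            # Object keys arrive URL-encoded in S3 events (spaces become "+").
            objects.append((bucket, unquote_plus(key)))
    return objects


# Hypothetical event, trimmed to the fields this sketch reads.
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "example-import-bucket"},
                "object": {"key": "incoming/my+data.csv"},
            }
        }
    ]
}
print(extract_s3_objects(sample_event))
```

Decoding the key matters because a file uploaded as `my data.csv` would otherwise be requested under the wrong name.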

To set up the solution, use the following high-level steps:

- Create an AWS Identity and Access Management (IAM) role.

- Create a Lambda function and upload the Python code.

- Create an S3 bucket and event notification to trigger the Lambda function.

- Create a DynamoDB table.

- Generate a sample CSV file.

- Upload to the S3 bucket to import the CSV file to the DynamoDB table.

- Explore the DynamoDB table items.

In this example, we are using small resource sizing. As you scale the dataset size, consider the following:

- Increase Lambda memory up to the 10 GB (10,240 MB) maximum. This also increases CPU allocation and network bandwidth.

- Set the Lambda timeout to a maximum of 15 minutes (900 seconds).

- Increase Lambda ephemeral storage up to 10 GB if the function temporarily stages large CSV files.

During testing, this solution ingested a CSV file containing 1 million rows in 9 minutes with 5,120 MB of memory configured.
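The sizing guidance above can be sketched as a small helper that validates the values and builds the arguments for the Lambda `update_function_configuration` API call. The helper name and the function name `csv-import-function` are hypothetical; the limits are the Lambda quotas quoted in this section.

```python
LAMBDA_MAX_MEMORY_MB = 10240      # 10 GB memory ceiling
LAMBDA_MAX_TIMEOUT_S = 900        # 15 minutes
LAMBDA_MAX_EPHEMERAL_MB = 10240   # 10 GB of /tmp storage


def build_lambda_sizing(function_name, memory_mb, timeout_s, ephemeral_mb):
    """Validate sizing values and return kwargs for update_function_configuration."""
    if not 128 <= memory_mb <= LAMBDA_MAX_MEMORY_MB:
        raise ValueError("memory_mb must be between 128 and 10240")
    if not 1 <= timeout_s <= LAMBDA_MAX_TIMEOUT_S:
        raise ValueError("timeout_s must be between 1 and 900")
    if not 512 <= ephemeral_mb <= LAMBDA_MAX_EPHEMERAL_MB:
        raise ValueError("ephemeral_mb must be between 512 and 10240")
    return {
        "FunctionName": function_name,
        "MemorySize": memory_mb,
        "Timeout": timeout_s,
        "EphemeralStorage": {"Size": ephemeral_mb},
    }


# Roughly the configuration used for the 1-million-row test run.
# The function name is a placeholder; ephemeral storage stays at its default.
print(build_lambda_sizing("csv-import-function", 5120, 900, 512))
```

With boto3 installed and credentials configured, the returned dictionary could be passed as `boto3.client("lambda").update_function_configuration(**params)`.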

If your files are too large to process within 15 minutes, require complex transformations, or need resumable jobs, use an AWS Glue ETL job.

Prerequisites

To deploy this solution, you can use a development computer or AWS CloudShell. The following must be installed:

- At a minimum, AWS CLI 2.23.12. For instructions, see Getting started with the AWS CLI.

- At a minimum, Python 3.13.1.

- This walkthrough uses Linux operating system syntax and commands. You will need to translate the commands to PowerShell/Microsoft Windows.

- Set your default AWS Region in the AWS CLI:

You will need an IAM role with the appropriate permissions to configure Lambda, Amazon S3, IAM, and DynamoDB.

Create an IAM role

To create a project folder on your development computer, run the following code:

To create an IAM role, follow these steps:

- Gather and set the environment variables. Be sure to replace your DynamoDB table name and desired Lambda function name:

- Create a trust policy for the Lambda execution role:

- Create the IAM role using the trust policy:

- Run the following commands to create and attach a least-privilege policy to the Lambda execution role. This policy is scoped down to only the permissions needed to read from Amazon S3 and write to DynamoDB.
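For illustration, the two policy documents described in these steps might look like the following when built in Python. This is a sketch under assumptions, not the post's exact policy: the action list (including `s3:PutObject` for the JSON output and the CloudWatch Logs actions) and all resource names are inferred from the workflow description.

```python
import json


def build_trust_policy():
    """Trust policy that lets the Lambda service assume the execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def build_execution_policy(bucket, table_name, region, account_id):
    """Scoped-down permissions policy (assumed action list, see lead-in)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read the CSV and write the JSON audit output.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {   # Batch writes to the single target table only.
                "Effect": "Allow",
                "Action": ["dynamodb:BatchWriteItem"],
                "Resource": f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}",
            },
            {   # Function logging.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": f"arn:aws:logs:{region}:{account_id}:*",
            },
        ],
    }


print(json.dumps(build_trust_policy(), indent=2))
```

Scoping each statement to one bucket and one table keeps the role from touching other resources in the account.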

Create a Lambda function and upload the Python code

To create the Lambda function and upload the Python code, follow these steps:

- Define environment variables with the newly created IAM role’s Amazon Resource Name (ARN). This will be used when creating your Lambda function.

- Using your development computer, make a project folder and run the following code snippet to create your Python script. The Lambda function runs this code to process a CSV file uploaded to an S3 bucket: it converts the file into a list of dictionaries, writes the data to a DynamoDB table, and then uploads a JSON representation of the data back to the S3 bucket. The function is triggered by an S3 event and operates in an event-driven manner:

This Lambda function implements an ETL pipeline triggered by CSV file uploads to Amazon S3. The function extracts data from the triggering CSV file using the Amazon S3 client, then transforms it by parsing the CSV content with the DictReader class and sanitizing it through removal of empty values and null fields. It then executes a parallel load operation as it iterates through the rows of your CSV file and writes them to your table in batches of 25. If any writes fail, they are retried up to three more times using an exponential backoff strategy; items that still fail are written to S3 as a log of unprocessed items. The implementation uses AWS SDK batch operations for DynamoDB writes to optimize throughput, incorporates error handling with logging, and maintains a fully serverless architecture pattern by using environment variables for configuration management. The code demonstrates serverless integration patterns by chaining AWS services (Amazon S3 to Lambda to DynamoDB to Amazon S3) and implementing a dual-destination data pipeline.

- Zip your Python code so you can create your Lambda function with it:

- Create your function using the zip file that contains your Python code:

- Add environment variables to your Lambda function:
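The parsing, batching, and retry behavior described in the function walkthrough above can be sketched as follows. This is not the post's original script: the function names are hypothetical, every attribute is stored as a DynamoDB string (`S`) for simplicity, and `client` is assumed to be a boto3 DynamoDB client (or any object with a compatible `batch_write_item` method).

```python
import csv
import io
import time


def parse_csv(text):
    """Parse CSV text into dictionaries, dropping empty or missing values."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        cleaned = {
            key: value.strip()
            for key, value in row.items()
            if key and value is not None and value.strip()
        }
        if cleaned:
            rows.append(cleaned)
    return rows


def chunked(items, size=25):
    """Yield slices of at most `size` items (25 is the BatchWriteItem limit)."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def write_with_retries(client, table_name, rows, max_retries=3,
                       base_delay=0.5, sleep=time.sleep):
    """Write rows in batches; return the requests still unprocessed after retries."""
    failed = []
    for batch in chunked(rows):
        # Assumption: all attributes are written as strings.
        requests = [
            {"PutRequest": {"Item": {k: {"S": v} for k, v in row.items()}}}
            for row in batch
        ]
        for attempt in range(max_retries + 1):
            response = client.batch_write_item(RequestItems={table_name: requests})
            requests = response.get("UnprocessedItems", {}).get(table_name, [])
            if not requests:
                break
            if attempt < max_retries:
                # Exponential backoff: 0.5 s, 1 s, 2 s with the defaults.
                sleep(base_delay * (2 ** attempt))
        failed.extend(requests)
    return failed
```

Anything returned by `write_with_retries` would be what the function serializes to the unprocessed-items object in S3, while the successfully parsed rows feed the processed-items output.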

Create an S3 bucket and event notification to trigger the Lambda function

To create an S3 bucket and event notification to trigger the Lambda function, follow these steps:

- Create the S3 bucket for importing your CSV data:

- Add permissions to the Lambda function to be invoked by the S3 bucket ARN:

- Create the S3 bucket notification configuration:
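As a hedged sketch of the last two steps, the following builds the arguments for the Lambda `add_permission` call and the S3 `put_bucket_notification_configuration` call. The statement ID, the `.csv` suffix filter, and the helper names are assumptions; the post's actual configuration may differ.

```python
def build_invoke_permission(function_name, bucket, account_id):
    """Kwargs for lambda add_permission so the bucket can invoke the function."""
    return {
        "FunctionName": function_name,
        "StatementId": "s3-invoke-csv-import",  # hypothetical identifier
        "Action": "lambda:InvokeFunction",
        "Principal": "s3.amazonaws.com",
        "SourceArn": f"arn:aws:s3:::{bucket}",
        "SourceAccount": account_id,
    }


def build_notification_config(function_arn, suffix=".csv"):
    """NotificationConfiguration that fires only for objects ending in `suffix`."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
                },
            }
        ]
    }


# Placeholder ARN for illustration only.
config = build_notification_config(
    "arn:aws:lambda:us-east-1:123456789012:function:csv-import-function"
)
print(config["LambdaFunctionConfigurations"][0]["Filter"])
```

Because the function writes its JSON output back to the same bucket, restricting the trigger to `.csv` objects is one way to keep that output from invoking the function again.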

Create a DynamoDB table

To create a DynamoDB table, use your development computer with the necessary prerequisites installed to run the following command:

This command works on Linux operating systems. For Windows PowerShell, replace the backslash line-continuation character with a backtick (`).

The command creates an on-demand table with a partition key named account and a sort key named offer_id. Primary keys in DynamoDB can be either simple (partition key only) or composite (partition key and sort key). The primary key must be unique for each record and should distribute activity evenly across the partition keys in a table. For our use case, we use the account number and offer ID, because together they give each item a unique identifier that can be queried.
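The table definition described above can be expressed as arguments to the DynamoDB `create_table` API. This is a sketch: the key names come from the post, but the attribute types are an assumption (strings are used here, which matches the string-only writes of a simple CSV import).

```python
def build_create_table_params(table_name):
    """Kwargs for create_table: composite key, on-demand billing."""
    return {
        "TableName": table_name,
        "AttributeDefinitions": [
            # Assumption: both key attributes are stored as strings.
            {"AttributeName": "account", "AttributeType": "S"},
            {"AttributeName": "offer_id", "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": "account", "KeyType": "HASH"},    # partition key
            {"AttributeName": "offer_id", "KeyType": "RANGE"},  # sort key
        ],
        "BillingMode": "PAY_PER_REQUEST",  # on-demand capacity mode
    }


# Placeholder table name for illustration.
print(build_create_table_params("example-offers-table"))
```

Only key attributes are declared; DynamoDB is schemaless for the remaining CSV columns, so they need no definition up front.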

Because this is a batch use case, an on-demand table lets you pay only for the requests that you make during processing. On-demand tables scale automatically to accommodate the levels of traffic that you need, so you don’t have to worry about managing scaling policies or planning capacity. By default, a new on-demand table can support up to 4,000 writes and 12,000 reads per second. If you plan on exceeding these values from the start, you can explore using warm throughput as a solution.
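To check whether an import fits within those defaults, a rough estimate helps. The sketch below assumes the standard DynamoDB rule of one write unit per 1 KB of item size (rounded up) and a hypothetical average item size of 200 bytes; the row count and duration are the test figures reported earlier in this post.

```python
import math


def write_units_per_item(item_size_bytes):
    """A standard write consumes one write unit per 1 KB, rounded up."""
    return max(1, math.ceil(item_size_bytes / 1024))


def required_write_rate(rows, seconds, item_size_bytes):
    """Average write units per second needed to load `rows` in `seconds`."""
    return rows / seconds * write_units_per_item(item_size_bytes)


# 1 million rows in 9 minutes, assuming ~200-byte items (hypothetical size).
rate = required_write_rate(1_000_000, 9 * 60, 200)
print(round(rate))  # 1852
```

At roughly 1,852 writes per second, that test run stays well under the 4,000 figure; larger items or faster loads would change the picture.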

Generate a sample CSV file

To generate a sample data.csv file, follow these steps:

- Using your development computer, create the following Python script:

- Run the following Python script:

A file called data.csv with 1,500 rows will be created in your project folder. The following code shows an example:

account,offer_id,catalog_id,account_type_code,offer_type_id,created,expire,risk

43469653,626503640435,649413151048141733,GE,07052721,2024-07-22T16:23:06.771968+00:00,2025-04-15T16:23:06.771968+00:00,high
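A generator producing rows in this shape might look like the following. It is a sketch rather than the post's original script: the column names and formats follow the sample row above, while the value ranges, the `low` and `medium` risk levels, and the function names are assumptions.

```python
import csv
import io
import random
import string
from datetime import datetime, timedelta, timezone

FIELDS = ["account", "offer_id", "catalog_id", "account_type_code",
          "offer_type_id", "created", "expire", "risk"]


def digits(rng, length):
    """Return a random numeric string; leading zeros are allowed."""
    return "".join(rng.choice(string.digits) for _ in range(length))


def make_row(rng, now):
    created = now - timedelta(days=rng.randint(0, 365))
    expire = created + timedelta(days=rng.randint(30, 365))
    return {
        "account": digits(rng, 8),
        "offer_id": digits(rng, 12),
        "catalog_id": digits(rng, 18),
        "account_type_code": "".join(rng.choice(string.ascii_uppercase) for _ in range(2)),
        "offer_type_id": digits(rng, 8),
        "created": created.isoformat(),
        "expire": expire.isoformat(),
        "risk": rng.choice(["low", "medium", "high"]),  # assumed value set
    }


def generate_csv(row_count=1500, seed=None):
    """Return CSV text with a header line plus `row_count` random rows."""
    rng = random.Random(seed)
    now = datetime.now(timezone.utc)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for _ in range(row_count):
        writer.writerow(make_row(rng, now))
    return buffer.getvalue()


def write_csv(path="data.csv", row_count=1500):
    """Write the generated CSV text to `path`."""
    with open(path, "w", newline="") as handle:
        handle.write(generate_csv(row_count))
```

Calling `write_csv()` would create data.csv in the current folder. With 8-digit and 12-digit random keys, duplicate (account, offer_id) pairs are very unlikely at this row count, but the sketch does not enforce uniqueness.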

Upload to the S3 bucket to import the CSV file to the DynamoDB table

To upload to the S3 bucket to import the CSV file to the DynamoDB table, run the following command:

To explore the table items, use these two CLI commands. These will return a count of all of the items inserted as well as the first 10 items from your table:

The following screenshots show an example of the table queries.
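The same two checks can be sketched in Python. The helpers below assume `client` is a boto3 DynamoDB client (for example, `boto3.client("dynamodb")`); the function names are hypothetical.

```python
def count_items(client, table_name):
    """Count all items by paginating a scan that returns counts only."""
    total = 0
    kwargs = {"TableName": table_name, "Select": "COUNT"}
    while True:
        page = client.scan(**kwargs)
        total += page.get("Count", 0)
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return total
        # A scan page is capped at 1 MB, so continue from where it stopped.
        kwargs["ExclusiveStartKey"] = last_key


def first_items(client, table_name, limit=10):
    """Return up to `limit` items from the start of a scan."""
    return client.scan(TableName=table_name, Limit=limit).get("Items", [])
```

Both helpers use scans, which read the whole table (or the first page of it), so they suit spot checks on a sample import rather than routine queries on a large table.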

You have just built a serverless ETL pipeline that automatically imports CSV data into DynamoDB. The solution uses Lambda and Amazon S3 event triggers to create a zero-maintenance data ingestion workflow.

Monitoring and Optimization

Once you have provisioned all of the resources, it is important to monitor the performance of both your DynamoDB table and your ETL Lambda function. Use CloudWatch metrics to monitor table-level metrics such as consumed RCUs and WCUs, latency, and throttled requests, and use CloudWatch Logs to debug your function through the error logs it stores. Together, these provide insight into how your DynamoDB table and Lambda function perform while the ETL process is running.
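As one way to pull those table metrics programmatically, the sketch below builds arguments for the CloudWatch `get_metric_statistics` API. The helper name, the 15-minute window, and the table name are assumptions; `ConsumedWriteCapacityUnits` and `WriteThrottleEvents` are standard metrics in the `AWS/DynamoDB` namespace.

```python
from datetime import datetime, timedelta, timezone


def build_metric_query(table_name, metric_name, minutes=15, now=None):
    """Kwargs for get_metric_statistics: per-minute sums over a recent window."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/DynamoDB",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "TableName", "Value": table_name}],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,            # one datapoint per minute
        "Statistics": ["Sum"],
    }


# Placeholder table name; query both consumption and throttling.
for metric in ("ConsumedWriteCapacityUnits", "WriteThrottleEvents"):
    print(build_metric_query("example-offers-table", metric)["MetricName"])
```

A nonzero `WriteThrottleEvents` sum during an import is a signal to revisit the batch retry settings or the table's throughput.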

As the size of your input file grows, it is important to also scale the memory and ephemeral storage allotted to your Lambda function accordingly. This ensures that the Lambda function will run consistently and efficiently. When sizing your Lambda function, you can use the AWS Lambda Power Tuning tool to test different memory configurations to optimize for both performance and cost.

Clean up

When you’ve finished, clean up the resources associated with the example deployment to avoid incurring unwanted charges:

Conclusion

In this post, we presented a solution combining the power of Python for data manipulation and Lambda for interacting with DynamoDB that enables data ingestion from CSV sources into a DynamoDB table. We also demonstrated how to programmatically ingest and store structured data in DynamoDB.

You can use this solution to integrate data pipelines from on-premises environments into an AWS environment for either migration or continuous delivery purposes.

About the Authors

Bill Pfeiffer is a Sr. Solutions Architect at AWS, focused on helping customers design, implement, and evolve secure and cost optimized cloud infrastructure. He is passionate about solving business challenges with technical solutions. Outside of work, Bill enjoys traveling the US with his family in their RV and exploring the outdoors.

Mike Wells is a Solutions Architect at AWS who works with financial services customers. He helps organizations design resilient, secure cloud solutions that meet strict regulatory requirements while maximizing AWS benefits. When not architecting cloud solutions, Mike is an avid runner and enjoys spending time with family and friends.
