As organizations seek to modernize their database infrastructure, many migrate their workloads from Oracle to PostgreSQL. However, migrations involve understanding the relationships between the different capabilities of each system, which may require taking additional steps to complete the transaction. This can slow down the migration. One example is the Oracle nested table feature, which supports multi-valued attributes through sub-tables. The differences between Oracle and PostgreSQL in their handling of user-defined types (UDTs) and associated member functions require careful consideration during the migration process due to conceptual differences.

Oracle offers 3 different ways to implement UDTs; we’ll explore two of them in this blog post. A single-level UDT is a basic data structure with no nested parts, making its migration straightforward. You can migrate a single-level UDT using the open source ora2pg tool; refer to Migrating user-defined types from Oracle to PostgreSQL. Migrating a multi-nested UDT is more complex because of its hierarchical structure, where one UDT can contain other UDTs as attributes. This nesting creates multiple layers of data that must be navigated and understood during migration.

In Oracle, UDTs can have member functions written in PL/SQL that are integrated directly into the UDT. In contrast, PostgreSQL currently doesn’t allow member functions within UDTs. As a result, when migrating UDTs from Oracle to PostgreSQL, you must separately handle these member functions and implement their functionality using different methods in PostgreSQL.

In this post, we dive deep into these differences and provide guidance for a smooth migration, helping ensure that the integrity of your data models is maintained throughout the process. We will also walk you through the details of converting complex member type functions in the multi-nested UDT from Oracle to PostgreSQL. After conversion is complete, we show how you can use AWS Database Migration Service (AWS DMS) to migrate the data for the tables that refer to the UDTs.

Prerequisites

To implement this solution, you will need the following prerequisites:

While these resources are necessary for hands-on implementation, you can still follow along with the conceptual steps and processes described in this guide without having the actual environment set up.

In the following sections, we discuss two use cases with single layer nested and multiple layers of nested UDTs and show how to migrate the tables from Oracle to PostgreSQL.

- Scenario 1 – Entertainment company managing movie data

- Structure – Movie synopses with a nested

movie_synopses_list_t

- Scenario 2 – Movie artists data

- Structure – The Artists table has roles which in turn have a nested

artist_role_t and artist_role_list_t UDTs and location attributes. location attributes have nested artist_local_t and artist_local_list_t

Scenario 1 – Single layer nested UDT

In this scenario, we see how the single layer nested UDT data is extracted and transformed before migrating into PostgresSQL database table.

Table MOVIE_SYNOPSES_TBL has column SYNOPSES, which is user defined data type. The following is the definition of the type and table involved.

Oracle definition of the UDT and table:

CREATE OR REPLACE TYPE movie_synopsis_list_t

AS TABLE OF movie_synopsis_t;

CREATE OR REPLACE TYPE movie_synopsis_t

AS OBJECT (

"seqNo" number,

"language" varchar2(20),

"region" char(2),

"synopsis" varchar2(2000),

map member function get_string

return varchar2

);

CREATE TABLE movie_synopsis_tbl

( movie_spec VARCHAR2(20 BYTE),

Release_key NUMBER,

hash NUMBER,

created DATE DEFAULT sysdate,

synopses movie_synopsis_list_t,

CONSTRAINT movie_synopsis_pk PRIMARY KEY (movie_spec, release_key, hash)

) NESTED TABLE synopses STORE AS movie_synopsis;

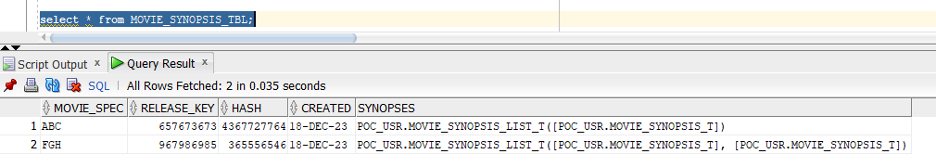

The following screenshot shows the data stored in the MOVIE_SYNOPSIS_TBL in Oracle.

The following screenshot shows the data stored in the table column type MOVIE_SYNOPSIS_LIST_T.

SQL query:

SELECT * FROM movie_synopsis_tbl;

Output:

| MOVIE_SPEC |

RELEASE_KEY |

HASH |

CREATED |

SYNOPSES (seqNo, language, region, synopsis) |

| ABC |

657673673 |

4367727764 |

18-DEC-23 |

MOVIE_SYNOPSIS_LIST_T(MOVIE_SYNOPSIS_T(1, ‘en’, ‘US’, ‘In a vast desert, explorers discover a hidden oasis. Mysterious ruins hold the key to ancient secrets. As they unravel the past, a malevolent force awakens. The oasis transforms into a perilous battleground. Teamwork is crucial as they face deadly traps and supernatural foes. Racing against time, they must find the legendary artifact, a beacon of hope. “Lost Oasis” is an adrenaline-fueled adventure, blending mystery, danger, and teamwork in a race against ancient forces.’)) |

| FGH |

967986985 |

365556546 |

18-DEC-23 |

MOVIE_SYNOPSIS_LIST_T(MOVIE_SYNOPSIS_T(1, ‘en’, ‘US’, ‘In a secluded town, a family moves into an old Victorian mansion, hoping to make a fresh start after a tragic event in their past. As they settle in, they’re unnerved by mysterious whispers and eerie shadows that seem to dance in the corners. Uncovering the mansion’s dark history, they find themselves entangled with a malevolent force that preys on their deepest fears. The once-loving home transforms into a nightmarish battleground, testing the family’s strength and unity. Desperate to unravel the mystery and save their family, they delve into the mansion’s grim past, uncovering a series of gruesome events that connect them to the malevolent force. In a race against time, they must confront their darkest fears and find a way to break the curse before it consumes them entirely.”The Haunting Shadows” is a chilling tale of suspense, psychological terror, and supernatural horror, making it a spine-tingling experience for those who dare to enter its twisted corridors.’), MOVIE_SYNOPSIS_T(2, ‘fr’, ‘FR’, ‘Dans une ville isolΘe, une famille emmΘnage dans un vieux manoir victorien, espΘrant faire un nouveau dΘpart aprΦs un ΘvΘnement tragique dans leur passΘ. Alors qu”ils s”installent, ils sont perturbΘs par des chuchotements mystΘrieux et des ombres inquiΘtantes qui semblent danser dans les coins. En dΘcouvrant l”histoire sombre du manoir, ils se retrouvent mΩlΘs α une force malveillante qui se nourrit de leurs plus grandes peurs. La maison autrefois chaleureuse se transforme en un champ de bataille cauchemardesque, mettant α l”Θpreuve la force et l”unitΘ de la famille.DΘsespΘrΘs de dΘmΩler le mystΦre et de sauver leur famille, ils plongent dans le pass Θ sinistre du manoir, dΘcouvrant une sΘrie d”ΘvΘnements macabres qui les lient α la force malveillante. Dans une course contre la montre, ils doivent affronter leurs plus sombres peurs et trouver unmoyen de rompre la malΘdiction avant qu”elle ne les consume entiΦrement. “Les Ombres HantΘes” est un rΘcit glaτant de suspense, de terreur psychologique et d”horreur surnaturelle, en faisant une expΘrience α donner des frissons α ceux qui osent pΘnΘtrer dans ses couloirs

tordus.’))

|

We use AWS Schema Conversion Tool (AWS SCT) to convert existing schema objects from one engine to another, which in this case is from Oracle to PostgreSQL. In Scenario 1, we convert the tables and UDT using AWS SCT. AWS SCT ignores member functions as it currently doesn’t support converting those when PostgreSQL is target.

The following screenshot shows the SQL Code after SCT Conversion.

The following screenshot is for the table conversion using SCT.

After conversion, the Data Definition Language (DDL) looks like the following:

CREATE TABLE IF NOT EXISTS movie_synopsis_tbl

(

movie_spec character varying(20) COLLATE pg_catalog."default" NOT NULL,

release_key bigint NOT NULL,

hash bigint NOT NULL,

created timestamp(0) without time zone DEFAULT (clock_timestamp())::timestamp(0) without time zone,

synopses movie_synopsis_list_t,

CONSTRAINT movie_synopsis_ pk PRIMARY KEY (movie_spec_type, release_key, hash)

);

From the table definition, notice that synopses is a text field in the table movie_synopsis_tbl and contains a brief summary of the movie that includes punctuation. Because of the punctuation in the values, it’s difficult to extract this data as array type with comma separated values, which is traditionally done using tools such as ora2pg. To extract the data, we can customize the data extraction using a combination of characters such as $^$ as a separator.

Use the following procedure to process the data from source table into intermediate table.

Create the stored procedure called MOVIE_SYNOPSIS_LOAD_V1 to extract the data from base nested table and transform into array and loaded to staging tables.

CREATE OR REPLACE PROCEDURE MOVIE_SYNOPSIS_LOAD_V1

IS

l_movie_spec MOVIE_SYNOPSIS_TBL.MOVIE_SPEC%TYPE;

l_release_key MOVIE_SYNOPSIS_TBL.RELEASE_KEY%TYPE;

l_hash MOVIE_SYNOPSIS_TBL."HASH"%TYPE;

l_created MOVIE_SYNOPSIS_TBL.CREATED%TYPE;

nested_obj_array MOVIE_SYNOPSIS_TBL.SYNOPSES%TYPE := MOVIE_SYNOPSIS_LIST_T();

extracted_data VARCHAR2(32767);

processed_data VARCHAR2(32767);

processed_data2 VARCHAR2(32767);

type extracted_array is table of varchar2(32767);

extracted extracted_array := extracted_array();

arr_len NUMBER;

CURSOR c1 is

select MOVIE_SPEC, RELEASE_KEY, "HASH", CREATED, synopses

from MOVIE_SYNOPSIS_TBL;

BEGIN

open c1;

LOOP

fetch c1 into l_movie_spec, l_release_key, l_hash, l_created, nested_obj_array;

EXIT WHEN c1%NOTFOUND;

arr_len := nested_obj_array.count;

extracted := extracted_array();

processed_data := '';

processed_data2 := '';

IF arr_len = 0 THEN

processed_data2 := null;

elsif arr_len = 1 THEN

processed_data := '';

processed_data2 := '';

DBMS_OUTPUT.put_line('Array length: ' || arr_len);

For i in 1..nested_obj_array.count LOOP

processed_data := nested_obj_array(i)."seqNo" ||'#$%^"'||nested_obj_array(i)."language"||'"#$%^"'||nested_obj_array(i)."region"||'"#$%^"'||nested_obj_array(i)."synopsis" ||'"';

processed_data2 := '(' || REGEXP_REPLACE(REGEXP_REPLACE(processed_data, '"', '\"'), ',', '\,') || ')';

END LOOP;

elsif arr_len > 1 THEN

processed_data := '';

processed_data2 := '';

DBMS_OUTPUT.put_line('Array length: ' || arr_len);

For i in 1..nested_obj_array.count LOOP

extracted.extend;

extracted(extracted.count) := nested_obj_array(i)."seqNo" ||'#$%^"'||nested_obj_array(i)."language"||'"#$%^"'||nested_obj_array(i)."region"||'"#$%^"'||nested_obj_array(i)."synopsis" ||'"';

END LOOP;

For i in extracted.first..extracted.last LOOP

processed_data := REGEXP_REPLACE(REGEXP_REPLACE(processed_data,'"', '\"'), ',', '\,') || '$#$' || '(' || extracted(i) || ')';

processed_data2 := REGEXP_REPLACE(processed_data, '$#$', '', 1, 1);

END LOOP;

END IF;

DBMS_OUTPUT.put_line(l_movie_spec || ';' || l_release_key || ';' || l_hash || ';' || l_created || ';' || processed_data2);

insert into MOVIE_SYNOPSIS_INT_TBL values (l_movie_spec, l_release_key, l_hash, l_created, processed_data2);

processed_data2 := '';

END LOOP;

close c1;

commit;

END;

This procedure processes records from the MOVIE_SYNOPSIS_TBL table, extracts and formats nested data (synopsis), and inserts the processed data into a staging table (MOVIE_SYNOPSIS_INT_TBL).

It handles cases for empty arrays, single-element arrays, and multi-element arrays differently, ensuring the data is appropriately formatted before insertion.

Special characters like quotes and commas are escaped using REGEXP_REPLACE to avoid issues during insertion.

Scenario 2 – Multiple layers of nested UDT

In this scenario, we look at extracting data from multi nested UDTs and loading into PostgreSQL table.

- SQL to create

movie_artist_t object type:

CREATE OR REPLACE TYPE movie_artist_t AS OBJECT (

"name" varchar2(1536),

"memberType" varchar2(255),

"roles" artist_role_list_t,

"locationattr" artist_local_list_t,

"clubMember" char(1),

"seqNo" number,

"isMember" char(1),

constructor function movie_artist_t (

p_name varchar2,

p_member_type varchar2,

p_club_member char,

p_is_member char,

p_seq_no number,

p_main_artist_in char,

p_member_type_ky number,

p_movie_ky number,

p_performance_ky number,

p_language_keys nkeys,

p_genre varchar2,

p_release_spec_type varchar2,

p_release_spec_version varchar2

) return self as result,

map member function get_string

return varchar2

);

- SQL to create a table type for

movie_artist_t:

CREATE OR REPLACE TYPE movie_artist_list_t

AS TABLE OF movie_artist_t;

The following type has more columns, roles, and location attributes, which are further nested UDTs.

- SQL to create

artist_role_t object type:

CREATE OR REPLACE TYPE artist_role_t AS OBJECT (

"name" varchar2(255),

"experienced" char(1),

"type" varchar2(255),

map member function get_string

return varchar2

);

- SQL to create table type for artist_role_t:

CREATE OR REPLACE TYPE artist_role_list_t

AS TABLE OF artist_role_t;

- SQL to create

artist_local_t object type:

CREATE OR REPLACE TYPE artist_local_t AS OBJECT (

"language" varchar2(20),

"studios" varchar2(1536),

"funding" varchar2(1536),

"location" varchar2(1536),

map member function get_string

return varchar2

);

- SQL to create table type for

artist_local_t:

CREATE OR REPLACE TYPE artist_local_list_t

AS TABLE OF artist_local_t;

Table DDL:

CREATE TABLE MOVIE_ARTIST_TBL

( "ARTIST_SPEC_TYPE" VARCHAR2(20 BYTE) ,

"ARTIST_KEY" NUMBER,

"HASH" NUMBER,

"CREATED" DATE DEFAULT sysdate,

"ARTISTS" MOVIE_ARTIST_LIST_T,

"ARTIST_FEATURE_ID" NUMBER,

"PLAY_ROLE_ID" NUMBER,

CONSTRAINT "MOVIE_ARTIST_TBL_PK" PRIMARY KEY ("ARTIST_SPEC_TYPE", "ARTIST_KEY", "HASH")

USING INDEX PCTFREE 10 INITRANS 2 MAXTRANS 255 COMPUTE STATISTICS NOLOGGING

STORAGE(INITIAL 65536 NEXT 1048576 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "USERS" ENABLE NOVALIDATE

) SEGMENT CREATION IMMEDIATE

PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255

NOCOMPRESS LOGGING

STORAGE(INITIAL 65536 NEXT 1048576 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "USERS"

NESTED TABLE "ARTISTS" STORE AS "ARTIST_TBL"

(( PRIMARY KEY ("NESTED_TABLE_ID", "seqNo") ENABLE)

ORGANIZATION INDEX PCTTHRESHOLD 50

COMPRESS 1 PCTFREE 10 INITRANS 2 MAXTRANS 255 LOGGING

STORAGE(INITIAL 65536 NEXT 1048576 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "USERS"

NESTED TABLE "roles" STORE AS "ARTISTS_ROLE"

(( PRIMARY KEY ("NESTED_TABLE_ID", "name") ENABLE)

ORGANIZATION INDEX PCTTHRESHOLD 50

COMPRESS 1 PCTFREE 10 INITRANS 2 MAXTRANS 255 LOGGING

STORAGE(INITIAL 65536 NEXT 1048576 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "USERS" ) RETURN AS VALUE

NESTED TABLE "locationattr" STORE AS "ARTISTS_LOCAL"

(( PRIMARY KEY ("NESTED_TABLE_ID", "language") ENABLE)

ORGANIZATION INDEX PCTTHRESHOLD 50

COMPRESS 1 PCTFREE 10 INITRANS 2 MAXTRANS 255 LOGGING

STORAGE(INITIAL 65536 NEXT 1048576 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "USERS" ) RETURN AS VALUE) RETURN AS VALUE;

The following screenshot shows data stored in the table MOVIE_ARTIST_TBL in Oracle.

The following screenshot shows data stored in the field ARTISTS, which is defined as a custom data type.

The following screenshot shows the SQL Code after SCT conversion.

The following screenshot shows the SQL code for table conversion.

To create stored procedure called MOVIE_ARTISTS_INT_TBL_LOAD_V1 which processes data from a table called MOVIE_ARTIST_TBL and inserts transformed data into a staging table called MOVIE_ARTIST_INT_TBL use the following code.

CREATE OR REPLACE PROCEDURE MOVIE_ARTISTS_INT_TBL_LOAD_V1

IS

-- Define variables to store values from MOVIE_ARTIST_TBL

l_artist_spec_type MOVIE_ARTIST_TBL.ARTIST_SPEC_TYPE%TYPE;

l_artist_key MOVIE_ARTIST_TBL.ARTIST_KEY%TYPE;

l_hash MOVIE_ARTIST_TBL."HASH"%TYPE;

l_created MOVIE_ARTIST_TBL.CREATED%TYPE;

l_artist_feature_id MOVIE_ARTIST_TBL.ARTIST_FEATURE_ID%TYPE;

l_play_role_id MOVIE_ARTIST_TBL.PLAY_ROLE_ID%TYPE;

nested_obj_array MOVIE_ARTIST_TBL.ARTISTS%TYPE := MOVIE_ARTIST_LIST_T();

extracted_data VARCHAR2(32767);

processed_data VARCHAR2(32767);

processed_data2 VARCHAR2(32767);

typ2processed_data VARCHAR2(32767);

typ2processed_data2 VARCHAR2(32767);

f_processed VARCHAR2(32767);

f_processed2 VARCHAR2(32767);

f_processed3 VARCHAR2(32767);

processed_roles VARCHAR2(32767);

processed_loc_names VARCHAR2(32767);

arr_len_roles NUMBER;

arr_len_loc_names NUMBER;

-- Define a type and array to hold extracted data

TYPE extracted_array IS TABLE OF VARCHAR2(32767);

extracted extracted_array := extracted_array();

-- Define variables for nested table elements

l_roles ARTIST_ROLE_T;

l_loc_names ARTIST_LOCAL_T;

cur_main MOVIE_ARTIST_TBL.ARTISTS%TYPE;

cur_type1 ARTIST_ROLE_T;

-- Cursor to select all records from MOVIE_ARTIST_TBL

CURSOR c1 IS SELECT * FROM MOVIE_ARTIST_TBL;

BEGIN

OPEN c1;

LOOP

FETCH c1 INTO l_artist_spec_type, l_artist_key, l_hash, l_created, nested_obj_array, l_artist_feature_id, l_play_role_id;

EXIT WHEN c1%NOTFOUND;

-- Initialize variables for each record

processed_data := '';

processed_data2 := '';

typ2processed_data := '';

typ2processed_data2 := '';

l_roles := NULL;

l_loc_names := NULL;

f_processed := '';

f_processed2 := '';

f_processed3 := '';

-- Loop through nested ARTISTS array

FOR i IN nested_obj_array.FIRST .. nested_obj_array.LAST LOOP

arr_len_roles := nested_obj_array(i)."roles".COUNT;

arr_len_loc_names := nested_obj_array(i)."locationattr".COUNT;

extracted := extracted_array();

-- Process roles

IF arr_len_roles = 0 THEN

processed_data2 := NULL;

ELSIF arr_len_roles = 1 THEN

processed_data := '';

processed_data2 := '';

FOR j IN 1..nested_obj_array(i)."roles".COUNT LOOP

l_roles := nested_obj_array(i)."roles"(j);

processed_data := REGEXP_REPLACE(l_roles."name", '([[:punct:]])', '\1') || '#$' || l_roles."experienced" || '#$' || REGEXP_REPLACE(l_roles."type", '([[:punct:]])', '\1');

processed_data2 := '[{' || processed_data || '}]';

END LOOP;

ELSIF arr_len_roles > 1 THEN

processed_data := '';

processed_data2 := '';

FOR j IN 1..nested_obj_array(i)."roles".COUNT LOOP

l_roles := nested_obj_array(i)."roles"(j);

extracted.EXTEND;

extracted(extracted.COUNT) := REGEXP_REPLACE(l_roles."name", '([[:punct:]])', '\1') || '#$' || l_roles."experienced" || '#$' || REGEXP_REPLACE(l_roles."type", '([[:punct:]])', '\1');

END LOOP;

FOR k IN extracted.FIRST..extracted.LAST LOOP

processed_data := processed_data || '$#' || '{' || extracted(k) || '}';

processed_data2 := '[' || REGEXP_REPLACE(processed_data, '$#', '', 1, 1) || ']';

END LOOP;

END IF;

extracted := extracted_array();

-- Process location attributes

IF arr_len_loc_names = 0 THEN

typ2processed_data2 := NULL;

ELSIF arr_len_loc_names = 1 THEN

typ2processed_data := '';

typ2processed_data2 := '';

FOR l IN 1..nested_obj_array(i)."locationattr".COUNT LOOP

l_loc_names := nested_obj_array(i)."locationattr"(l);

typ2processed_data := REGEXP_REPLACE(l_loc_names."language", '([[:punct:]])', '\1') || '#$' || REGEXP_REPLACE(l_loc_names."studios", '([[:punct:]])', '\1') || '#$' || NVL(REGEXP_REPLACE(l_loc_names."funding", '([[:punct:]])', '\1'), 'NULL') || '#$' || NVL(REGEXP_REPLACE(l_loc_names."location", '([[:punct:]])', '\1'), 'NULL');

typ2processed_data2 := '[{' || typ2processed_data || '}]';

END LOOP;

ELSIF arr_len_loc_names > 1 THEN

typ2processed_data := '';

typ2processed_data2 := '';

FOR l IN 1..nested_obj_array(i)."locationattr".COUNT LOOP

l_loc_names := nested_obj_array(i)."locationattr"(l);

extracted.EXTEND;

extracted(extracted.COUNT) := REGEXP_REPLACE(l_loc_names."language", '([[:punct:]])', '\1') || '#$' || REGEXP_REPLACE(l_loc_names."studios", '([[:punct:]])', '\1') || '#$' || NVL(REGEXP_REPLACE(l_loc_names."funding", '([[:punct:]])', '\1'), 'NULL') || '#$' || NVL(REGEXP_REPLACE(l_loc_names."location", '([[:punct:]])', '\1'), 'NULL');

END LOOP;

FOR m IN extracted.FIRST..extracted.LAST LOOP

typ2processed_data := typ2processed_data || '$#' || '{' || extracted(m) || '}';

typ2processed_data2 := '[' || REGEXP_REPLACE(typ2processed_data, '$#', '', 1, 1) || ']';

END LOOP;

END IF;

-- Format and concatenate processed data

f_processed := '(' || REGEXP_REPLACE(nested_obj_array(i)."name", '([[:punct:]])', '\1') || ',' || REGEXP_REPLACE(nested_obj_array(i)."memberType", '([[:punct:]])', '\1') || ',' || processed_data2 || ',' || typ2processed_data2 || ',' || nested_obj_array(i)."clubMember" || ',' || nested_obj_array(i)."seqNo" || ',' || nested_obj_array(i)."isMember" || ')';

f_processed2 := f_processed2 || '$#$' || f_processed;

f_processed3 := REGEXP_REPLACE(f_processed2, '$#$', '', 1, 1);

END LOOP;

-- Insert transformed data into the staging table

INSERT INTO MOVIE_ARTIST_INT_TBL VALUES (l_artist_spec_type, l_artist_key, l_hash, l_created, f_processed3, l_artist_feature_id, l_play_role_id);

END LOOP;

CLOSE c1;

COMMIT;

END;

Migrate data extracted using AWS DMS

Now, the extracted data from the intermediate staging table needs to be migrated to PostgreSQL tables.

We use AWS DMS to migrate the data as-is to a PostgreSQL database staging table, which is then further processed using the string_to_array function and moved into the PostgreSQL main tables.

The following screenshots show the table data in the intermediate tables in the source after transformation using PL/SQL.

MOVIE_SYNOPSIS_INT_TBL

MOVIE_ARTIST_INT_TBL

Data in these tables is migrated using AWS DMS. Here is a sample JSON for the AWS DMS migration task setting.

{

"rules": [

{

"rule-type": "transformation", transformation rules

"rule-id": "458315677",

"rule-name": "458315677",

"rule-target": "column",

"object-locator": {

"schema-name": "POC_USR",

"table-name": "MOVIE_SYNOPSIS_INT_TBL",

"column-name": "%"

},

"parallel-load": null,

"rule-action": "convert-lowercase",

"value": null,

"old-value": null

},

{

"rule-type": "transformation",

"rule-id": "458299409",

"rule-name": "458299409",

"rule-target": "table",

"object-locator": {

"schema-name": "POC_USR",

"table-name": "MOVIE_SYNOPSIS_INT_TBL"

},

"parallel-load": null,

"rule-action": "convert-lowercase",

"value": null,

"old-value": null

},

{

"rule-type": "transformation",

"rule-id": "458278494",

"rule-name": "458278494",

"rule-target": "schema",

"object-locator": {

"schema-name": "POC_USR"

},

"parallel-load": null,

"rule-action": "convert-lowercase",

"value": null,

"old-value": null

},

{

"rule-type": "selection", selection rule

"rule-id": "458252722",

"rule-name": "458252722",

"object-locator": {

"schema-name": "POC_USR",

"table-name": "MOVIE_SYNOPSIS_INT_TBL"

},

"rule-action": "include",

"filters": [],

"parallel-load": null,

"isAutoSegmentationChecked": false

}

]

}

After the data is moved to the staging tables through AWS DMS, it’s then loaded into the PostgreSQL database using string_to_array to cast the type as a custom data type as shown in the following.

Do

$$

declare

v_rec record;

v_synopses varchar;

v_element varchar;

v_arr poc_usr.movie_synopsis_list_t;

v_arr_txt varchar[];

begin

for v_rec in select * from poc_usr.movie_synopsis_int_tbl

loop

v_arr_txt:= string_to_array(v_rec.synopses,'$#$');

for i in 1..coalesce(array_length(v_arr_txt,1),0)

loop

v_synopses := rtrim(ltrim(trim(v_arr_txt[i]::text),'('),')');

v_arr:= array_append(v_arr,

(row(split_part(v_synopses,'#$%^',1),

split_part(v_synopses,'#$%^',2),

split_part(v_synopses,'#$%^',3),

split_part(v_synopses,'#$%^',4))

)::poc_usr.movie_synopsis_t);

end loop;

insert into poc_usr.movie_synopsis_tbl

select v_rec.movie_spec,v_rec.release_key,v_rec.hash, v_rec.created,

v_arr;

end loop;

end;

$$

language plpgsql;

For more complex nested types, we extract the data from the staging table into array format and then load it into the target table using exclusive type casting.

DO

$$

DECLARE

C1 CURSOR FOR SELECT * from poc_usr.movie_artist_int_tbl;

v_rec record;

v_arr poc_usr.movie_artist_list_t;

v_artist_type_text poc_usr.movie_artist_t_text;

v_artist_type poc_usr.movie_artist_t;

v_arr1 poc_usr.movie_artist_t_text[];

v_arrin1 poc_usr.artist_role_list_t;

v_arrin2 poc_usr.artist_local_list_t;

BEGIN

OPEN C1;

LOOP

FETCH C1 INTO v_rec;

EXIT WHEN NOT FOUND;

IF v_rec.ARTISTS is not null THEN

raise notice 'v_rec.ARTISTS %',v_rec.ARTISTS;

SELECT string_to_array(v_rec.ARTISTS,'$#$')::poc_usr.movie_artist_t_text[]

INTO v_arr1

FROM poc_usr.movie_artist_int_tbl as tab

WHERE tab.artist_spec_type=v_rec.artist_spec_type

and tab.artist_key=v_rec.artist_key

and tab.hash = v_rec.hash;

raise notice 'v_arr1: %',v_arr1;

IF array_length(v_arr1,1)>0 THEN

FOR i in COALESCE(array_lower(v_arr1,1),1).. array_upper(v_arr1,1)

LOOP

v_artist_type_text:=v_arr1[i];

IF v_artist_type_text.roles is not null THEN

v_artist_type_text.roles := replace(replace(replace(v_artist_type_text.roles,'{','('),'}',')'),'#$',',');

v_artist_type_text.roles := trim(trim(v_artist_type_text.roles,']'),'[');

SELECT string_to_array(v_artist_type_text.roles,'$#')::poc_usr.artist_role_list_t INTO v_arrin1;

raise notice 'v_arr1: %',v_arr1;

END IF;

IF v_artist_type_text.locationattr is not null AND v_artist_type_text.locationattr <> '' THEN

v_artist_type_text.locationattr := replace(replace(replace(v_artist_type_text.locationattr,'{','('),'}',')'),'#$',',');

v_artist_type_text.locationattr := trim(trim(v_artist_type_text.locationattr,']'),'[');

SELECT string_to_array(v_artist_type_text.locationattr,'$#')::poc_usr.artist_local_list_t INTO v_arrin2;

END IF;

IF array_length(v_arr,1)>0 THEN

v_arr := ARRAY_APPEND(v_arr,NULL);

FOR i in coalesce(array_lower(v_arrin1,1),1)..coalesce(array_upper(v_arrin1,1),0)

loop

v_arrin1[i].name:=coalesce(v_arrin1[i].name,'');

v_arrin1[i].experienced:=coalesce(v_arrin1[i].experienced,'');

v_arrin1[i].type:=coalesce(v_arrin1[i].type,'');

end loop;

FOR i in coalesce(array_lower(v_arrin2,1),1)..coalesce(array_upper(v_arrin2,1),0)

loop

v_arrin2[i].language:=case v_arrin2[i].language when 'NULL' then '' else v_arrin2[i].language end;

v_arrin2[i].studios:=case v_arrin2[i].studios when 'NULL' then '' else v_arrin2[i].studios end;

v_arrin2[i].funding:=case v_arrin2[i].funding when 'NULL' then '' else v_arrin2[i].funding end;

v_arrin2[i].location:=case v_arrin2[i].location when 'NULL' then '' else v_arrin2[i].location end;

raise notice 'v_arrin2[i] %',v_arrin2[i];

end loop;

v_arr[ARRAY_UPPER(v_arr,1)]:=(v_artist_type_text.name,

v_artist_type_text.membertype,

v_arrin1::poc_usr.artist_role_list_t,

v_arrin2::poc_usr.artist_local_list_t,

v_artist_type_text.clubmember,

v_artist_type_text.seqno,

COALESCE(v_artist_type_text.ismember,''))::poc_usr.movie_artist_t;

ELSE

FOR i in coalesce(array_lower(v_arrin1,1),1)..coalesce(array_upper(v_arrin1,1),0)

loop

v_arrin1[i].name:=coalesce(v_arrin1[i].name,'');

v_arrin1[i].experienced:=coalesce(v_arrin1[i].experienced,'');

v_arrin1[i].type:=coalesce(v_arrin1[i].type,'');

end loop;

FOR i in coalesce(array_lower(v_arrin2,1),1)..coalesce(array_upper(v_arrin2,1),0)

loop

v_arrin2[i].language:=case v_arrin2[i].language when 'NULL' then '' else v_arrin2[i].language end;

v_arrin2[i].studios:=case v_arrin2[i].studios when 'NULL' then '' else v_arrin2[i].studios end;

v_arrin2[i].funding:=case v_arrin2[i].funding when 'NULL' then '' else v_arrin2[i].funding end;

v_arrin2[i].location:=case v_arrin2[i].location when 'NULL' then '' else v_arrin2[i].location end;

raise notice 'v_arrin2[i] %',v_arrin2[i];

end loop;

v_arr:=ARRAY[(v_artist_type_text.name,

v_artist_type_text.membertype,

v_arrin1::poc_usr.artist_role_list_t,

v_arrin2::poc_usr.artist_local_list_t,

v_artist_type_text.clubmember,

v_artist_type_text.seqno,

COALESCE(v_artist_type_text.ismember,''))]::poc_usr.movie_artist_list_t;

END IF;

END LOOP;

END IF;

END IF;

update poc_usr.movie_artist_tbl

set artists = v_arr

where artist_spec_type=v_rec.artist_spec_type

and artist_key = v_rec.artist_key

and hash = v_rec.hash;

END LOOP;

END;

$$

LANGUAGE plpgsql;

Using the preceding custom procedures, we load the data from staging tables into the actual tables in the target Amazon RDS for PostgreSQL database.

Clean up

To avoid incurring future charges, complete the following steps:

- Terminate the EC2 instance (if your source Oracle database was deployed on one).

- Clean up the AWS DMS tasks created.

- Delete the AWS DMS replication instance.

- Delete the RDS for PostgreSQL.

Conclusion

In this post, we discussed strategies for effectively extracting and transforming complex multi-nested UDTs from Oracle’s nested tables and migrating them to RDS for PostgreSQL. By addressing the intricacies highlighted in the problem statement—such as the hierarchical nature of multi-nested UDTs—we provided methods for managing and converting these data structures.

If you have any comments or questions, leave them in the comments section.

About the authors

Sujitha Sasikumaran is a Lead Database Consultant and Migration Expert with extensive experience in optimizing and transitioning complex database systems. Specializing in both traditional database management and modern cloud technologies, Sujitha helps organizations streamline their data environments and execute flawless migrations. Her role also involves integrating the latest advancements in data management to drive innovation and efficiency.

Sujitha Sasikumaran is a Lead Database Consultant and Migration Expert with extensive experience in optimizing and transitioning complex database systems. Specializing in both traditional database management and modern cloud technologies, Sujitha helps organizations streamline their data environments and execute flawless migrations. Her role also involves integrating the latest advancements in data management to drive innovation and efficiency.

Tirumala Rama Chandra Murty Dasari is a Database Consultant with AWS Professional Services (Hyderabad, India). With extensive knowledge on relational and non-relational databases, he helps the customers migrate to the AWS Cloud. He’s curious to learn and implement new technologies, which is reflected in customer success. He uses relevant technologies to automate manual processes and enhance existing automation.

Tirumala Rama Chandra Murty Dasari is a Database Consultant with AWS Professional Services (Hyderabad, India). With extensive knowledge on relational and non-relational databases, he helps the customers migrate to the AWS Cloud. He’s curious to learn and implement new technologies, which is reflected in customer success. He uses relevant technologies to automate manual processes and enhance existing automation.

Source:

Sujitha Sasikumaran is a Lead Database Consultant and Migration Expert with extensive experience in optimizing and transitioning complex database systems. Specializing in both traditional database management and modern cloud technologies, Sujitha helps organizations streamline their data environments and execute flawless migrations. Her role also involves integrating the latest advancements in data management to drive innovation and efficiency.

Sujitha Sasikumaran is a Lead Database Consultant and Migration Expert with extensive experience in optimizing and transitioning complex database systems. Specializing in both traditional database management and modern cloud technologies, Sujitha helps organizations streamline their data environments and execute flawless migrations. Her role also involves integrating the latest advancements in data management to drive innovation and efficiency. Tirumala Rama Chandra Murty Dasari is a Database Consultant with AWS Professional Services (Hyderabad, India). With extensive knowledge on relational and non-relational databases, he helps the customers migrate to the AWS Cloud. He’s curious to learn and implement new technologies, which is reflected in customer success. He uses relevant technologies to automate manual processes and enhance existing automation.

Tirumala Rama Chandra Murty Dasari is a Database Consultant with AWS Professional Services (Hyderabad, India). With extensive knowledge on relational and non-relational databases, he helps the customers migrate to the AWS Cloud. He’s curious to learn and implement new technologies, which is reflected in customer success. He uses relevant technologies to automate manual processes and enhance existing automation.