In today’s fast-paced digital landscape, businesses are constantly seeking ways to optimize their data management systems to keep up with the growing demands of modern applications. As the volume and complexity of data continue to increase, many organizations run into the limitations of their existing high-throughput relational databases. A high-throughput database is designed to handle a large volume of data requests quickly and efficiently, meaning it can process a high number of read and write operations per second. These databases have been carefully maintained over the years to support critical business applications, but maintaining such complex infrastructure on premises can be costly and limiting.

Moving these high-throughput databases to AWS presents significant technical challenges, from maintaining transaction throughput during migration and ensuring data consistency to replicating complex performance optimizations in the cloud environment. In this post, we explore key strategies and AWS tools to help you successfully migrate your high-throughput relational database while minimizing business disruption.

Planning Your High-Throughput Database Migration to AWS

High-throughput online transaction processing (OLTP) relational databases are designed so that they don’t compromise on availability or durability in a cloud-scale environment. Unlike traditional databases, where storage and compute are tightly coupled, a high-throughput database separates the two, which allows processing power and storage capacity to scale independently. Because all I/O is written over the network, the network becomes the fundamental constraint, so the focus shifts to techniques that relieve the network and improve throughput. These databases rely on three things: models that can handle the complex and correlated failures that occur in large-scale cloud environments and avoid outlier performance penalties; log processing to reduce the aggregate I/O burden; and asynchronous consensus to eliminate chatty and expensive multi-phase synchronization protocols, offline crash recovery, and checkpointing in distributed storage. It’s important to understand these fundamental architectural differences and plan your migration accordingly.

Despite a thorough understanding of these underlying patterns, migrating to and from a high-throughput relational database is not without its challenges. It requires careful planning, a deep understanding of your current database architecture, and a strategic approach to achieve a smooth transition. A systematic migration strategy is crucial for success while also keeping business disruption minimal.

Your migration strategy should include the following phases and considerations:

- Discovery and planning

- Engaging the right resources

- Selecting your migration approach

- Architecture and environment design

- Database fine-tuning and performance reports

- Testing and quality assurance

- Key performance requirements

- Supportability

- Pricing

- Documentation

Let’s dive in and discover how you can effectively manage the transition from your high-throughput relational database to the powerful and flexible AWS platform.

Discovery and planning

It’s essential to conduct a careful discovery phase to understand the current architecture and design a future architecture that meets or exceeds your requirements. Working with your database specialist is critical to any migration.

Conduct a full inventory of existing databases along with their interdependencies, data models, access patterns, and performance profiles. This informs the target AWS database selection, whether you’re using Amazon Aurora PostgreSQL-Compatible Edition, Amazon Aurora MySQL-Compatible Edition, Amazon RDS for PostgreSQL, Amazon RDS for MySQL, Amazon RDS for MariaDB, Amazon RDS for SQL Server, Amazon RDS for Oracle, or Amazon RDS for Db2. Estimate the change impact on any interconnected systems. You can build a business case with n-year TCO projections using the AWS Pricing Calculator.
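To make the idea of an n-year TCO projection concrete, the following is a minimal Python sketch. It is not how the AWS Pricing Calculator works. The `CostProfile` fields, the growth model, and every number are hypothetical placeholders that you would replace with figures from your own inventory and pricing estimates.

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    """Hypothetical cost inputs, all in the same currency."""
    one_time: float       # up-front cost, such as migration effort or hardware
    yearly_run: float     # first-year run cost: compute, storage, licenses, support
    yearly_growth: float  # assumed fractional growth of the run cost per year


def tco(profile: CostProfile, years: int) -> float:
    """Sum the one-time cost and the compounding yearly run cost over `years`."""
    total = profile.one_time
    run = profile.yearly_run
    for _ in range(years):
        total += run
        run *= 1 + profile.yearly_growth
    return total


# Placeholder figures for illustration only, not real prices.
on_premises = CostProfile(one_time=0.0, yearly_run=500_000.0, yearly_growth=0.10)
cloud = CostProfile(one_time=200_000.0, yearly_run=400_000.0, yearly_growth=0.05)

for n in (1, 3, 5):
    print(f"{n}-year TCO: on premises {tco(on_premises, n):,.0f}, cloud {tco(cloud, n):,.0f}")
```

Comparing several values of n is useful because a one-time migration cost can make the first year look worse even when the longer projection favors the move.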

The discovery process includes answering the following questions:

- What is the current architecture for the database?

- What is the performance requirement for production and non-production environments?

- What are the IOPS and throughput requirements during peak and non-peak conditions?

- Is there a low latency requirement?

- Is there caching in place?

- What is the total size of the data stored, and what is the archival process?

- What are the support and licensing requirements?

- How are the applications integrated with the databases?

- What are the long-term objectives? You want to make sure the proposed architecture meets your long-term requirements, which can include:

  - Future acquisitions that may impact the current design.

  - Potential planned modernization or architecture changes.

  - Growth of your existing customer base, which may add more load to the current systems.

Engaging the right resources

Designing a high-throughput database environment for optimal performance and scalability depends on engaging the right resources. Bringing together the right set of teams is critical to a successful design.

This team should include:

- Technology and industry expertise – This includes industry specialists that understand the business objectives and use cases.

- Product teams – For particularly high-performance requirements that may meet or exceed current service offerings, it’s important to involve product and business development managers who work closely on key features. With this, you can build a solution that meets the current needs and can also scale to meet long-term requirements, including any proactive quota updates.

- Cloud economics team – A cloud economist can review and model the costs. A cloud economics architect who specializes in specific databases can also help compare the costs among different database options and configurations.

- Migration specialists – Independent migration specialists who are not tied to any specific technology solution can provide unbiased advice on migration strategies and approaches. This expertise is particularly valuable because many organizations lack this specialized knowledge in-house.

Selecting your migration approach

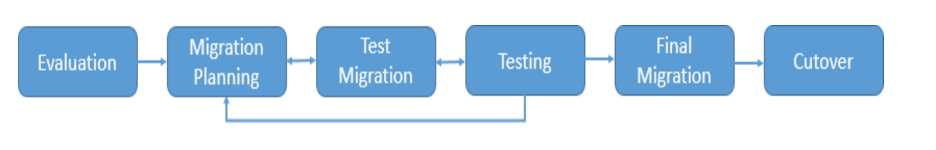

The following diagram illustrates the migration workflow, starting with evaluation to migration planning, running a test migration, determining your final migration approach, and finally cutting over to the new database.

Figure 1 : Migration workflow

You can choose between the following high-level approaches:

- Big-bang – In this approach, you migrate everything at once. This is faster overall, but higher risk. It’s better for smaller, well-understood migrations.

- Incremental – An incremental approach of migrating in batches means a longer timeline, but less downtime risk. You can optimize the process based on learnings. This is better for larger, complex migrations.

When prioritizing databases for migration, consider:

- Traditional approach – Consider business criticality and technical complexity when prioritizing. While migrating less complex databases first can provide quick wins, this approach may lead to data gravity challenges if mission-critical systems lag too far behind.

- Data gravity considerations – Many organizations now choose to migrate critical solutions earlier in the process, once the migration path is well understood and tested. This helps avoid integration complexities and data synchronization challenges that can arise when dependent systems are separated during a prolonged migration.

Choose your strategy based on your specific environment, dependencies between systems, and organizational capabilities. The key is to maintain a balance between managing risk and avoiding the complications of delayed critical system migrations.

Consider the following methods based on your source database’s compatibility with the target database:

- Homogeneous migration – If your source database is compatible with your target database, then migration is straightforward. You should evaluate if your application can handle a predictable length of downtime during off-peak hours, in which case migration with downtime is the simplest option and is highly recommended. In some cases, you might want to migrate your database with minimal downtime, such as when your database is relatively large and the migration time using downtime options is longer than your application maintenance window, or when you want to run source and target databases in parallel for testing purposes.

- Heterogeneous migration – Your source database might not be compatible with the target database, for example when moving from SQL to NoSQL or from a non-PostgreSQL engine to a PostgreSQL-compatible one. In this case, evaluate the various options available, such as DMS Schema Conversion.

A generative AI feature for DMS Schema Conversion is now available. For more information, see Viewing your database migration assessment report for DMS Schema Conversion and Converting database schemas in DMS Schema Conversion.
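For the homogeneous case, the choice between migrating with downtime and migrating with minimal downtime often comes down to simple arithmetic: does the copy fit in the maintenance window? The following Python sketch illustrates that check. The function names, the `safety_factor` padding, and the sample numbers are assumptions for illustration, and the estimate covers only raw data transfer, not export, import, index rebuilds, or validation.

```python
def transfer_hours(data_gib: float, throughput_mib_s: float) -> float:
    """Hours needed to copy `data_gib` at a sustained throughput in MiB/s."""
    if throughput_mib_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = data_gib * 1024 / throughput_mib_s
    return seconds / 3600


def fits_window(data_gib: float, throughput_mib_s: float,
                window_hours: float, safety_factor: float = 1.5) -> bool:
    """True if the padded copy time fits inside the maintenance window."""
    padded = transfer_hours(data_gib, throughput_mib_s) * safety_factor
    return padded <= window_hours


# Hypothetical example: 8 TiB of data, 200 MiB/s sustained, 6-hour window.
size_gib = 8 * 1024
print(f"Estimated copy time: {transfer_hours(size_gib, 200):.1f} hours")
print("Fits in window:", fits_window(size_gib, 200, window_hours=6))
```

If the padded estimate exceeds the window, that is a signal to evaluate the minimal-downtime options described above rather than to shrink the safety margin.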

Architecture and environment design

A database represents a critical component in the architecture of most applications. Migrating your database to a new platform is a significant event in an application’s lifecycle and will have an impact on application functionality, performance, and reliability. Consider the following:

- Application compatibility – Although most applications can be architected to work with many high-throughput relational database engines, make sure your application continues to work. Check if the code, applications, drivers, and tools that are currently being used are compatible with the target database with little or no change. For example, due to the managed nature of databases such as Aurora MySQL or PostgreSQL, SSH access to database nodes is restricted, which may affect your ability to install third-party tools or plugins on the database host if you require this or do so in your current environment.

- Database performance – This is a key consideration when migrating a database to a new platform. For a high-throughput database migration, benchmarking and performance evaluations are crucial initial steps. One architecture pattern to consider is integration of the database engine with an SSD-based virtualized storage layer designed for database workloads. This pattern reduces writes to the storage system, minimizes lock contention, and eliminates delays created by database process threads. Although SSD storage is now standard in cloud environments, optimizing storage architecture remains crucial for high-performance database workloads. For example, specialized storage solutions such as Amazon FSx, combined with dedicated hosts, can deliver exceptional performance, in some cases exceeding traditional on-premises solutions. Sharding and read replicas can also be evaluated at this stage.

- Reliability considerations – An important consideration with databases is high availability and disaster recovery. Determine the Recovery Time Objective (RTO) and Recovery Point Objective (RPO) requirements of your application. Based on your target database, you can choose an approach such as synchronous multi-AZ read replicas, active-active multi-AZ deployments with multi-write capability, cross-Region disaster recovery, and DB snapshot restore. Each option offers different trade-offs between recovery time, data consistency, and operational complexity to help achieve your availability objectives.

- Cost and licensing – Owning and running databases comes with associated costs. Before planning a database migration, an analysis of the TCO of the new database platform is imperative. If you’re running a commercial database engine (Oracle, SQL Server, Db2, and so on), a significant portion of your cost is database licensing.

- Other migration considerations – It’s important to estimate the amount of code and schema changes that you need to perform while migrating your database. DMS Schema Conversion (which also offers generative AI support) can help you estimate that effort. Consider your application availability and the impact of the migration process on your application and business before starting a database migration.

Database fine-tuning and performance reports

You should also allow for time to review your database performance reports. Database fine-tuning is key because it directly impacts the performance, efficiency, and scalability of applications that depend on database systems. As the volume of data and the number of users grow, databases can become bottlenecks, leading to slow query responses and poor user experience.

Fine-tuning involves optimizing queries, indexing strategies, memory allocation, and other configuration parameters to make sure the database operates at its optimal capacity. This process not only enhances the speed and efficiency of data retrieval and manipulation, but also uses hardware resources effectively, reducing operational costs. Additionally, a well-tuned database minimizes downtime and enhances reliability, which is important for business continuity and a great customer experience. It also provides a path forward when you start considering migration. In cloud environments, fine-tuning becomes particularly critical because it directly impacts costs, unlike on-premises environments where you might solve performance issues by simply adding more hardware resources. When migrating to the cloud, understanding your workload’s performance characteristics and optimization opportunities becomes essential for both performance and cost management. This shift from a “scale up” mindset to an “optimize first” mindset is a key consideration in cloud migration planning.

The following are some of the metrics you can start with:

- Average database CPU usage

- Peak database CPU usage

- Average throughput (MBps)

- Peak throughput (MBps)

- Average IOPS

- Peak IOPS
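As a starting point for collecting these numbers, the following Python sketch reduces raw monitoring samples to the average and peak value per metric. The metric names and sample values are hypothetical, and the sketch assumes you have already exported samples from whatever monitoring tool you use.

```python
from statistics import mean


def summarize(samples: dict[str, list[float]]) -> dict[str, dict[str, float]]:
    """Return the average and peak value for each named metric."""
    report = {}
    for name, values in samples.items():
        if not values:
            raise ValueError(f"no samples for metric {name!r}")
        report[name] = {"average": mean(values), "peak": max(values)}
    return report


# Hypothetical samples collected at a fixed interval.
samples = {
    "cpu_percent": [35.0, 42.0, 91.0, 40.0],
    "throughput_mbps": [120.0, 150.0, 480.0, 130.0],
    "iops": [8_000.0, 9_500.0, 31_000.0, 8_700.0],
}

for metric, stats in summarize(samples).items():
    print(f"{metric}: average={stats['average']:.1f}, peak={stats['peak']:.1f}")
```

A large gap between average and peak, as in these made-up samples, suggests sizing decisions should be driven by the peak profile rather than the average alone.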

Testing and quality assurance

As discussed earlier, we recommend adopting one of the migration approaches mentioned above, starting with lower environments such as development and test, followed by staging, and finally production workloads. At each database migration milestone, run a comprehensive suite of tests, including the following:

- Functional testing to validate application functionality and end-to-end business processes

- Integration testing to verify interactions between systems, data flows, and connected applications

- Performance testing to measure response times, concurrency, and resource utilization under peak loads

- Security testing to verify access controls, data encryption, network isolation, and compliance with policies

- Disaster recovery testing, such as backup restore, failover to replicas, and validation of RPO and RTO

- Acceptance testing to verify that the migrated database system meets all business requirements and success criteria before final cutover

- Data validation testing to validate the integrity and consistency of your migrated data. Use tools to reconcile source and target databases. Verify that query performance meets established SLAs by comparing key queries before and after migration.

Conduct multiple test cycles to identify and resolve any migration defects or performance issues. Make sure all acceptance criteria are met and stakeholders approve production readiness before cutover. Have a rollback plan in place. Perform a final round of testing and validation immediately after go-live to verify success.
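To illustrate the data validation step, the following Python sketch reconciles one table between a source and a target by comparing row counts and an ordered content hash. It is a simplified stand-in for dedicated reconciliation tools: in-memory `sqlite3` databases play the role of the source and target, and the `orders` table, its columns, and the helper names are hypothetical. Across different engines, type and formatting differences usually require normalizing values before hashing.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Return (row count, SHA-256 hex digest) of a table read in key order."""
    # Identifiers are interpolated into SQL, so pass trusted names only.
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def tables_match(source: sqlite3.Connection, target: sqlite3.Connection,
                 table: str, key: str) -> bool:
    """True when both tables have the same row count and content hash."""
    return table_fingerprint(source, table, key) == table_fingerprint(target, table, key)


def make_db(rows: list[tuple[int, float]]) -> sqlite3.Connection:
    """Build a throwaway in-memory database with a sample table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn


source = make_db([(1, 9.5), (2, 20.0)])
target = make_db([(1, 9.5), (2, 20.0)])
drifted = make_db([(1, 9.5), (2, 21.0)])

print("source vs target match:", tables_match(source, target, "orders", "id"))
print("source vs drifted match:", tables_match(source, drifted, "orders", "id"))
```

For large tables, a full scan like this can be expensive, so a common variation is to hash key ranges separately and drill into only the ranges that differ.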

Key performance requirements

Beyond Amazon RDS, if you run your database on Amazon EC2, Amazon Elastic Block Store (Amazon EBS)-optimized instances use an optimized configuration stack and provide additional dedicated capacity for Amazon EBS I/O. This optimization provides the best performance for EBS volumes by minimizing contention between Amazon EBS I/O and other network traffic. EBS-optimized instance throughput depends on the maximum throughput per volume attached to the instance. For example, you may need to review the following performance parameters:

- Maximum EBS bandwidth for Amazon Elastic Compute Cloud (Amazon EC2) instance types

- io1/io2 EBS volumes

- General Purpose SSD (gp3) volumes, which are the latest generation of General Purpose SSD volumes and the lowest-cost SSD volume offered by Amazon EBS

- EBS Provisioned IOPS (PIOPS) to reach maximum throughput

- Amazon EBS Multi-Attach support and the EBS volume types that support it
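These parameters interact through simple arithmetic: throughput is IOPS multiplied by I/O size, and the aggregate throughput of all attached volumes is capped by the instance’s EBS bandwidth. The following Python sketch shows that relationship. The function names and sample figures are hypothetical and are not actual service limits, so check the current Amazon EBS and Amazon EC2 documentation for the real values for your volume and instance types.

```python
def throughput_mib_s(iops: float, io_size_kib: float) -> float:
    """Throughput in MiB/s produced by `iops` operations of `io_size_kib` each."""
    return iops * io_size_kib / 1024


def required_iops(target_mib_s: float, io_size_kib: float) -> float:
    """IOPS needed to sustain `target_mib_s` at a given I/O size."""
    if io_size_kib <= 0:
        raise ValueError("I/O size must be positive")
    return target_mib_s * 1024 / io_size_kib


def effective_throughput(volume_mib_s: float, volume_count: int,
                         instance_limit_mib_s: float) -> float:
    """Aggregate volume throughput, capped by the instance's EBS bandwidth."""
    return min(volume_mib_s * volume_count, instance_limit_mib_s)


# Hypothetical workload: 16 KiB I/O, 250 MiB/s needed per volume, 4 volumes.
print("IOPS needed per volume:", required_iops(250, 16))
print("Effective MiB/s:", effective_throughput(250, 4, instance_limit_mib_s=800))
```

In this made-up case, the four volumes could deliver 1,000 MiB/s together, but the instance limit holds the workload to 800 MiB/s, which is why instance bandwidth and volume settings need to be sized together.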

Supportability

It’s important to check the support for the following and prepare in advance for a smoother migration:

- License mobility (if the license from on premises can be brought into the cloud)

- License cost in case mobility is not possible

- Support from the software vendor

- Patching and security updates responsibilities

- The chain of escalation when a support issue occurs

Pricing

One of the essential factors is cost. The following are the most crucial pricing components for this type of workload:

- Provisioned IOPS (PIOPS), which drive the throughput for the workload and are charged separately from storage capacity

- Bring Your Own License (BYOL)

- On-Demand Instances

- Reserved Instances

- Savings Plans

Other factors might include data transfer out (DTO) and storage.

Documentation

Migrating an enterprise database environment to AWS involves many technical and organizational considerations. Before doing an actual database migration, it may be a good idea to first build a proof of concept on AWS to validate assumptions and demonstrate feasibility. When designing a proof of concept, it’s critical to take the following steps:

- Create a detailed POC architecture document – A well-defined architecture document serves as a blueprint, providing clarity, alignment, and a shared understanding of the proof of concept scope and objectives among all stakeholders

- Conduct required walkthroughs with teams and establish acceptance criteria – Engaging teams and setting acceptance criteria provides buy-in, addresses concerns, and aligns everyone towards a common goal, reducing the risk of miscommunication and project delays

- Act as a single-threaded owner – Having a dedicated owner streamlines decision-making, facilitates effective communication, and provides a central point of accountability, increasing the chances of proof of concept success

- Document configuration recipes – Documenting proven configurations and best practices captures valuable knowledge, enables reproducibility, and serves as a reference for the actual migration, saving time and effort

Conclusion

A strategic approach encompassing discovery, planning, architectural design, implementation, testing, monitoring, and communication is key to migrating high-throughput databases. We believe the central constraint in high-throughput data processing has moved from compute and storage to the network. Understanding the architectural differences in relational databases that address this constraint, combined with a strategic approach to migration, helps provide a smooth migration experience.

Refer to Database Migration Step-by-Step Walkthroughs and Database migrations to learn more.

About the Author

Nitin Eusebius is a Principal Enterprise Solutions Architect at AWS, experienced in Software Engineering, Enterprise Architectures, and AI/ML. He is deeply passionate about exploring the possibilities of next generation cloud architectures and generative AI. He collaborates with customers to help them build well-architected applications on the AWS platform, and is dedicated to solving technology challenges and assisting with their cloud journey.

Dhara Vaishnav is a Solution Architecture leader at AWS and provides technical advisory to enterprise customers to leverage cutting-edge technologies in Generative AI, Data, and Analytics. She provides mentorship to solution architects to design scalable, secure, and cost-effective architectures that align with industry best practices and customers’ long-term goals.
