In this post, we discuss how Heroku migrated their multi-tenant PostgreSQL database fleet from self-managed PostgreSQL on Amazon Elastic Compute Cloud (Amazon EC2) to Amazon Aurora PostgreSQL-Compatible Edition. Heroku completed this migration with no customer impact, increasing platform reliability while simultaneously reducing operational burden. We dive into Heroku and their previous self-managed architecture, the new architecture, how the migration of hundreds of thousands of databases was performed, and the enhancements to the customer experience since its completion.

An Overview of Heroku

Heroku is a fully managed platform as a service (PaaS) solution, built on Amazon Web Services (AWS), that makes it straightforward to deploy, operate, and scale apps and services. Heroku was founded in 2007 and acquired by Salesforce in 2010. Today, Heroku is the chosen platform for over 13 million applications, built by teams ranging from developers and operations staff at small startups to enterprises with large-scale deployments.

Heroku does more than simplify application deployment and scaling with Dynos (Heroku-managed containers that provide secure, scalable compute for applications to run on). They offer fully managed data solution add-ons through Heroku Data Services, including Heroku Postgres, Apache Kafka on Heroku, and Heroku Key-Value Store. They handle security, server patching, failovers, backups, and other complex configurations, so customers can spend more of their time developing applications instead of managing data infrastructure. All Heroku Data Services add-ons can be provisioned with a single CLI command or click, enhancing the development process with ease of integration, reliability, and scalability.

One of the Data add-ons is Heroku Postgres. This provides a scalable and cost-effective PostgreSQL database with automatic backups, database management, performance optimization, and everything else necessary to operate a database. Heroku customers benefit from fully managed, simple-to-integrate, and reliable data solutions that support the rapid creation of innovative applications and drive business success. An example is the Heroku Connect tool, which synchronizes Salesforce data with Heroku Postgres.

Keeping customer applications running smoothly requires significant investment. In early 2025, Heroku’s Data Services team migrated their multi-tenant Postgres offering, Heroku Postgres Essential databases, from a self-managed environment on Amazon EC2 to Amazon Aurora. The move removed undifferentiated heavy lifting and lets Heroku’s developers focus on innovating and building the best experience for their customers, without the hassle of database management.

Heroku’s Previous Self-Managed PostgreSQL Architecture and Challenges

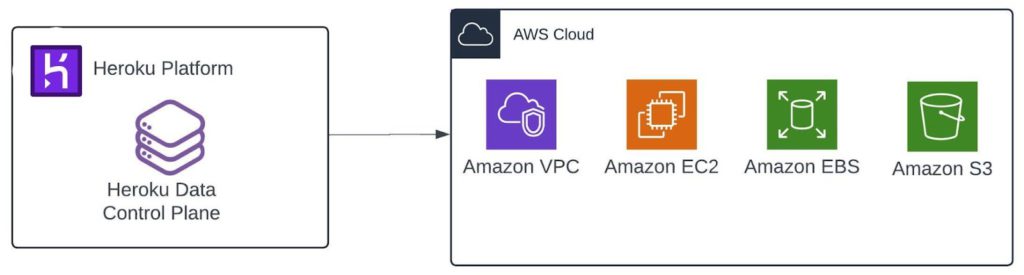

The following diagram illustrates Heroku’s legacy architecture.

The Heroku Data Team operated several control planes to manage customer resources. Heroku’s primary control plane accepted requests from customers and created resources on their behalf. When a customer created a Heroku Postgres add-on, the control plane created and managed the appropriate resources to craft the managed database experience. A provision request made several API requests to AWS to create a virtual private cloud (VPC), EC2 instances, Amazon Elastic Block Store (Amazon EBS) volumes, and Amazon Simple Storage Service (Amazon S3) paths according to the add-on plan requirements.

When the infrastructure was ready, the control plane prepared the infrastructure for the PostgreSQL service and set up management of its timeline, credentials, and continuous protection. Heroku had automation to connect to the instances, drop configuration files, and execute commands to complete the database setup. When the database became available, they pushed the connection string details into the Heroku application configuration variables. After the setup, the control plane continuously monitored instance and service health, database usage, and other telemetry to maintain availability and performance.

This architecture served Heroku for well over 10 years. However, managing a fleet of database instances at scale was increasing in complexity and operational burden. As a managed database provider, it’s Heroku’s responsibility to keep the infrastructure and underlying services available, up to date, and secure. This involves writing a lot of code to observe, detect, and remediate issues with operating system patching, PostgreSQL updates, and hardware failures.

Heroku Data has gone to great lengths to automate much of the infrastructure lifecycle management. However, Heroku’s engineers found they were spending more time and effort maintaining the infrastructure that powers the services than creating new customer experiences for their Data Services. When they looked for alternatives, it quickly became clear that Aurora would be an excellent fit for Heroku’s customers and Heroku Data’s future direction.

Heroku’s New PostgreSQL Architecture With Aurora

The following diagram illustrates their new architecture.

An underlying advantage built into Heroku Data’s control plane is the efficiency of using and working with AWS Cloud services. Heroku’s system is inspired by the finite-state machine abstraction. Every resource exists in a state where certain actions are performed and the resource is optionally transitioned to a new state. For example, they provision an EC2 instance with a specific set of parameters and the instance enters a pending state, where it remains until AWS completes the provision request. Heroku regularly polls the API and monitors for the next state change. When the instance enters the running state, they initiate additional automation to configure the instance with services and tooling needed to operate the database. Later, if Heroku detects further changes to the instance state, they respond with additional workflow automation to handle remediation or other lifecycle operations.
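The state-machine pattern described above can be sketched in a few lines. This is a minimal illustration, not Heroku's control plane: the `State` names beyond pending and running, the `poll_once` and `drive` helpers, and the injected `fetch_remote_status` callable are all hypothetical, and the configuration step is reduced to a single transition.

```python
from enum import Enum
from typing import Callable, List


class State(Enum):
    PENDING = "pending"        # provision requested, waiting on the provider
    RUNNING = "running"        # instance is up, not yet configured
    CONFIGURED = "configured"  # hypothetical terminal state for this sketch


def poll_once(state: State, fetch_remote_status: Callable[[], str]) -> State:
    """Perform the action for the current state and return the next state."""
    if state is State.PENDING:
        # Remain in PENDING until the provider reports the instance as running.
        if fetch_remote_status() == "running":
            return State.RUNNING
        return State.PENDING
    if state is State.RUNNING:
        # Placeholder for installing services and tooling on the instance.
        return State.CONFIGURED
    return state  # CONFIGURED has no outgoing transition here.


def drive(fetch_remote_status: Callable[[], str], max_polls: int = 10) -> List[State]:
    """Poll until the resource is configured; return the states it passed through."""
    state = State.PENDING
    history = [state]
    for _ in range(max_polls):
        next_state = poll_once(state, fetch_remote_status)
        if next_state is not state:
            history.append(next_state)
        state = next_state
        if state is State.CONFIGURED:
            break
    return history
```

Passing the status lookup in as a callable keeps the transition logic independent of any particular cloud API, so the same loop could be exercised with a fake status source in tests.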

By selecting Aurora as the backend infrastructure for Heroku Postgres, Heroku Data no longer has to build, manage, and maintain server infrastructure. This includes creating and maintaining custom Amazon Machine Images (AMIs), installing and regularly patching the operating system and its libraries, and testing and migrating to new instance types when they become generally available. They can use many of the patterns and automation we’ve described to effectively operate Aurora at global scale.

Now, when a customer submits a provision request, Heroku Data simply needs to create and set up an Aurora cluster. The underlying instances, disk, replication, and snapshot internals are handled by AWS. This speaks to a core value at Heroku: ephemeralization. By using Aurora, Heroku is able to do more with less. By reducing the complexity and effort of managing a bespoke database infrastructure, they can focus their efforts on generating more customer value in the way of value-added features like enhanced observability, intelligent database assistance, and increased data interoperability and mobility.

How Heroku Migrated Over 200,000 Databases

Heroku Data faced the challenge of migrating over 200,000 self-managed PostgreSQL databases to the fully managed Aurora service. This was a massive undertaking, but they were able to complete the migration with minimal impact to end customers in just four months.

While the Heroku Data team are experts at PostgreSQL, they were new to Amazon Aurora. AWS partnered with Heroku to fill in their knowledge gaps and create a custom training plan for their engineering and customer support teams. Over the course of several days, more than forty Heroku engineers and support staff were trained on Amazon Aurora by AWS specialist solutions architects.

After upskilling on Aurora, the key to the team’s success was the architecture in place, which used two separate control planes: the legacy control plane that managed a large portion of the infrastructure, and a modern control plane that handled the new Aurora-based infrastructure.

When it came time to migrate the databases, Heroku Data evaluated several options, including using its existing managed pg_dump/pg_restore system. After performing technical discovery, they settled on building a new, specialized transfer system inside the modern control plane. This system used a tool called pgcopydb to copy the data from the legacy PostgreSQL databases to new Aurora database instances. Its parallel operations proved to be a significant improvement over the existing pg_dump/pg_restore pattern. Once the system was built, each transfer would lock the database, copy the data, update the database pointer, and then unlock it, all of which typically took less than 2.2 minutes (p50) to complete.
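The lock, copy, repoint, unlock sequence can be sketched as follows. This is an illustration under assumptions, not Heroku's transfer system: `transfer` and `build_copy_command` are hypothetical names, the four steps are injected as callables, and the job count is an arbitrary default. The `pgcopydb clone` options shown (`--source`, `--target`, `--table-jobs`, `--index-jobs`) are part of the tool's documented CLI, but the source does not say how Heroku invoked it.

```python
from typing import Callable, List


def build_copy_command(source_uri: str, target_uri: str, jobs: int = 4) -> List[str]:
    """Build (but do not run) a pgcopydb invocation with parallel table and index jobs."""
    return [
        "pgcopydb", "clone",
        "--source", source_uri,
        "--target", target_uri,
        "--table-jobs", str(jobs),
        "--index-jobs", str(jobs),
    ]


def transfer(
    lock: Callable[[], None],
    copy: Callable[[], None],
    repoint: Callable[[], None],
    unlock: Callable[[], None],
) -> None:
    """Run one transfer: lock, copy, repoint, then unlock.

    The pointer is updated only after a successful copy, and the database
    is unlocked even when a step raises, so a failed transfer leaves the
    original database in service.
    """
    lock()
    try:
        copy()
        repoint()
    finally:
        unlock()
```

In this sketch, the `try`/`finally` is the important design choice: a failed copy propagates the error but still releases the lock, and the pointer keeps referring to the legacy database.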

To achieve a smooth migration, the Heroku Data team developed comprehensive testing capabilities. These allowed end-to-end simulation of the entire migration process, verifying that data transferred correctly and that the new Aurora databases had no issues. By rigorously testing the migration flow, they identified and resolved potential issues before they affected end customers, which gave them confidence in the process. The migrations then ran continuously, with an average of 2,000 databases migrating each day.
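As a rough consistency check on the figures in this section, the stated daily average can be compared with the stated fleet size. The calculation below treats "over 200,000" as a lower bound and assumes the average rate held for the whole run; `days_needed` is a hypothetical helper.

```python
import math

TOTAL_DATABASES = 200_000  # "over 200,000" in the text, used as a lower bound
DAILY_AVERAGE = 2_000      # average migrations per day, from the text


def days_needed(total: int, per_day: int) -> int:
    """Whole days required to migrate `total` databases at `per_day` each day."""
    return math.ceil(total / per_day)


# At least 100 days of continuous migration, which fits within four months.
print(days_needed(TOTAL_DATABASES, DAILY_AVERAGE))
```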

When the transfer system was ready for production, customers had two migration paths:

- Self-serve migration – Customers could opt in to migrate their database on demand by requesting to change their service plan. This would trigger the automated transfer process.

- Automated migration – An internal process that systematically migrated the remaining databases a small number at a time to avoid disruption.
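The automated path, which worked through the remaining databases a few at a time, might look like the sketch below. Everything here is an assumption for illustration: the `batches` and `migrate_remaining` helpers, the batch size, and the policy of pausing the rollout after a batch that contains a failure are not described in the source.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Set, Tuple


def batches(items: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield successive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def migrate_remaining(
    pending: Iterable[str],
    self_served: Set[str],
    migrate_one: Callable[[str], None],
    batch_size: int = 3,
) -> Tuple[List[str], List[str]]:
    """Migrate databases that did not already move through the self-serve path.

    Returns (done, failed). The rollout stops after the first batch that
    contains a failure (an assumed policy, so problems can be investigated).
    """
    done: List[str] = []
    failed: List[str] = []
    todo = (db for db in pending if db not in self_served)
    for batch in batches(todo, batch_size):
        for db in batch:
            try:
                migrate_one(db)
                done.append(db)
            except Exception:
                failed.append(db)
        if failed:
            break
    return done, failed
```

Skipping anything in `self_served` keeps the two migration paths from colliding, and the small batch size bounds how many databases are in flight at once.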

The Heroku team successfully migrated hundreds of thousands of databases from self-managed PostgreSQL to fully managed Aurora PostgreSQL, with minimal impact to end customers. This was the result of months of hard work, during which the team built the right architecture and tooling to carry out this complex migration. The team also used AWS Countdown, an AWS Enterprise Support engagement for the preparation and execution of planned events such as migrations and launches, which assisted with service quota management, capacity planning, and operational support.

Advantages and Benefits of the New Architecture

Shifting to the new database platform architecture is an investment Heroku made as part of its commitment to continuously deliver a world-class experience to their customers. Heroku simplicity, or “the magic moment,” is what Heroku strives to deliver in every aspect of their platform, including their data products.

As mentioned, operating their own fleet of PostgreSQL databases on EC2 instances required significant engineering effort from Heroku, which competed with developing new features, the “magic” their customers enjoy. The new architecture on Aurora reduces the operational burden on Heroku engineers. It removes the need to manage on-instance software updates, patching, and maintenance for the operating system, the PostgreSQL service, and other supporting applications and libraries. Knowing that AWS is keeping their customer databases safe, secure, and continuously available, Heroku can now focus on building new features, listening to their customers, and further strengthening the partnership with AWS.

The new architecture gives Heroku Data the ability to deliver additional features in the near future, including an AI-enabled database administrator, auto scaling, sleep mode, near-zero downtime, increased database connections, database storage that scales up to 128 TB independently of compute, and more. And with Heroku’s simplicity, attaching a database as powerful as this to an application is achieved with a single line of code.

The migration to Amazon Aurora enhances Heroku’s security posture through built-in encryption at rest using AWS Key Management Service (AWS KMS) and in transit using SSL/TLS, automated security patching without maintenance windows, and improved security controls through AWS Identity and Access Management (IAM) authentication and network isolation. AWS CloudTrail provides comprehensive audit capabilities through automated activity logging. These enterprise-grade security features are delivered automatically as part of Amazon Aurora, helping Heroku maintain a strong security posture without additional configuration overhead.

Conclusion

Heroku’s migration from self-managed PostgreSQL on Amazon EC2 to Aurora is a testament to their engineering team’s preparation and execution. Partnering with AWS, Heroku performed an analysis of their previous architecture and the steps required to migrate at scale. This analysis included architecture, capacity, features, performance testing, and enablement. Together, AWS and Heroku determined a path to deliver a key feature, delegated extension support, on Aurora. After the general availability of Heroku’s new Aurora-based product, they migrated their multi-tenant customer base of hundreds of thousands of database instances. Heroku is now working on the next step: migration of their single-tenant customer base, on Heroku’s Private Spaces, to Aurora in late 2025.

About the Authors

Stefan Pieterse is a Principal Customer Solutions Manager at AWS who has helped multiple strategic customers migrate to, and modernize workloads in, the cloud. In his current role, he helps Heroku achieve success by delivering their strategic goals and growing the partnership through enablement, customer advocacy, and earning trust. When not working on improving his customer’s business, he clears his head jogging and playing with his three-year-old son.

John Nichols, also known as Rocket, is a Solutions Architect at AWS, where he helps some of the largest customers on the planet build for resilience, performance, and cost optimization. A former Chief Architect and Director of Cloud, Rocket brings deep experience in designing systems and leading high-performing teams. When he’s not thinking about computers, he enjoys producing livestream events and making wine at his own winery.

Jonathan K. Brown is a Sr. Product Manager at Heroku, a Salesforce company, covering all of the Data Services business. A technology leader with experience in human-centered design, cloud architecture, AI applications, and engineering across diverse industries, from aerospace to high-tech startups, Jonathan deeply cares about delivering success to customers. He holds BS/MS Eng. and MBA from UC Berkeley, and enjoys paragliding any chance he gets.

Justin Downing is a Software Engineer Architect at Salesforce, where he focuses on the intricacies of Heroku and its Data Services. With a background in building scalable and reliable cloud architectures, Justin is passionate about sharing his knowledge and collaborating with others to build sustainable solutions. When he steps away from the keyboard, you can find him exploring the mountains, hiking, or skiing.
