Transformers have revolutionized natural language processing, and now they are transforming computer vision as well. Vision Transformers (ViTs) apply the power of self-attention to image processing, offering state-of-the-art performance in tasks like classification, object detection, and image segmentation. But how do these models work under the hood? If you’ve ever wanted to build a Vision Transformer from scratch, this course is the perfect opportunity to dive in.

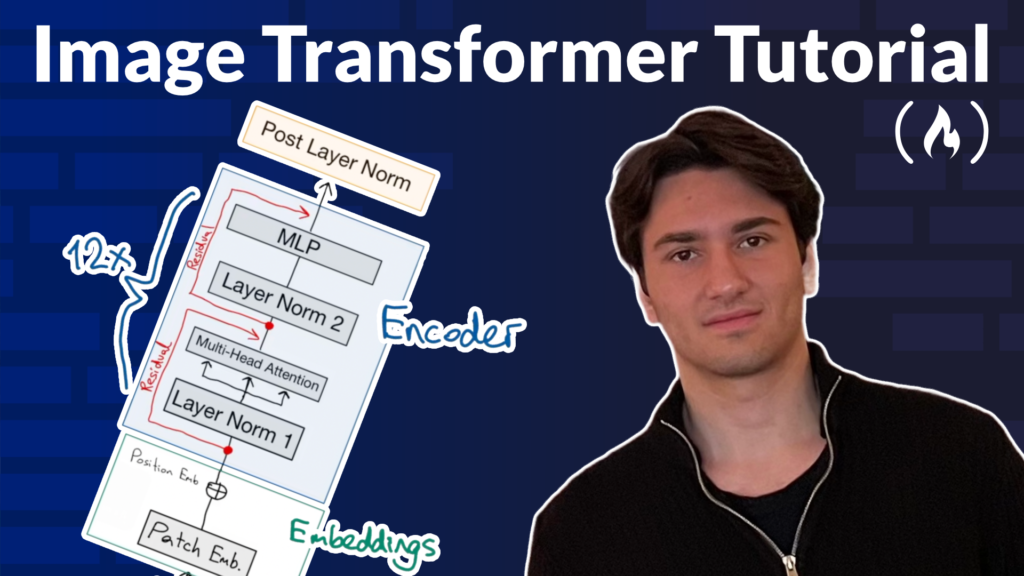

We just published a course on the freeCodeCamp.org YouTube channel that will teach you how to build a Vision Transformer from the ground up. Tunga Bayrak, an experienced machine learning instructor, will guide you through the core concepts and hands-on implementation of ViTs. By the end of the course, you’ll have a deep understanding of how AI models process visual data, along with practical skills to develop and experiment with your own Vision Transformer models.

What You’ll Learn

This course covers the fundamental concepts and components that make up a Vision Transformer. Here’s what you’ll explore:

- Introduction to Vision Transformers – Understand the motivation behind ViTs and how they differ from traditional convolutional neural networks (CNNs).
- CLIP Model – Learn about OpenAI’s CLIP model and how it bridges vision and language tasks.
- SigLIP vs CLIP – Compare SigLIP and CLIP to see how different models approach vision-language learning.
- Image Preprocessing – Discover how to prepare image data for a Vision Transformer.
- Patch Embeddings – Learn how images are divided into patches and converted into vector embeddings.
- Position Embeddings – Explore how Transformers maintain spatial information through positional embeddings.
- Embeddings Visualization – Gain insights into how embeddings represent image features.
- Embeddings Implementation – Implement the embedding process in code.
- Multi-Head Attention – Understand and build the core self-attention mechanism that enables Transformers to capture complex relationships in images.
- MLP Layers – Learn about the feedforward layers that refine feature representations in a ViT.
- Assembling the Full Vision Transformer – Put everything together to build a working Vision Transformer model.
- Recap – Review key takeaways and reinforce your understanding.
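
To give a feel for the patch and position embedding steps listed above, here is a minimal sketch in plain Python. It is not the course's code: the function names, the tiny 8×8 image, the patch size of 4, and the embedding size of 6 are all assumptions chosen for illustration, and the random weights stand in for parameters that a real model would learn.

```python
import random


def split_into_patches(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    assert height % patch_size == 0 and width % patch_size == 0
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    patch.extend(image[row][col])  # append every channel value
            patches.append(patch)
    return patches


def linear(vector, weights):
    """Multiply a length-n vector by an n x d weight matrix."""
    out_dim = len(weights[0])
    return [sum(v * row[j] for v, row in zip(vector, weights)) for j in range(out_dim)]


def embed_patches(patches, projection, position_embeddings):
    """Project each flattened patch, then add that patch's position embedding."""
    tokens = []
    for patch, position in zip(patches, position_embeddings):
        projected = linear(patch, projection)
        tokens.append([p + q for p, q in zip(projected, position)])
    return tokens


# Hypothetical toy sizes; real ViTs use much larger images and embeddings.
random.seed(0)
IMAGE_SIZE, PATCH_SIZE, CHANNELS, EMBED_DIM = 8, 4, 3, 6

image = [
    [[random.random() for _ in range(CHANNELS)] for _ in range(IMAGE_SIZE)]
    for _ in range(IMAGE_SIZE)
]
patches = split_into_patches(image, PATCH_SIZE)

# Random stand-ins for learned parameters (an assumption for this sketch).
patch_dim = PATCH_SIZE * PATCH_SIZE * CHANNELS
projection = [[random.gauss(0, 0.02) for _ in range(EMBED_DIM)] for _ in range(patch_dim)]
position_embeddings = [
    [random.gauss(0, 0.02) for _ in range(EMBED_DIM)] for _ in range(len(patches))
]

tokens = embed_patches(patches, projection, position_embeddings)
print(len(tokens), len(tokens[0]))  # 4 6
```

An 8×8 image with 4×4 patches yields four patches of 48 values each (4 × 4 pixels × 3 channels), and each one becomes a 6-dimensional token. Without the position embeddings, the model would have no way to tell which part of the image a token came from.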

Why Learn Vision Transformers?

Vision Transformers are rapidly gaining popularity in AI research and industry applications. Unlike CNNs, which build up context gradually through stacked local filters, ViTs use self-attention to relate any two image patches within a single layer. This lets them capture long-range dependencies in images, making them highly effective for complex vision tasks. Understanding how to build a Vision Transformer from scratch will give you a strong foundation in deep learning, self-attention mechanisms, and modern AI architectures.
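
The long-range dependency point can be illustrated with a small sketch of scaled dot-product self-attention, again in plain Python. This is a simplified, single-head version under stated assumptions: the toy tokens are used directly as queries, keys, and values, whereas a real ViT applies learned projections and runs several heads in parallel. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for query in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [
            sum(a * b for a, b in zip(query, key)) / math.sqrt(dim) for key in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output = [
            sum(w * value[j] for w, value in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Four toy patch tokens: the first and last are identical, the middle two differ.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]

# Assumption: identity projections, so tokens serve as queries, keys, and values.
outputs, weights = self_attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

In the printed weights, the first token attends to the last token as strongly as it attends to itself, even though the two sit at opposite ends of the sequence. Distance between patches plays no role in the score; only the similarity of their vectors does.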

This course will equip you with the knowledge and practical skills to work with Vision Transformers. Watch the full course on the freeCodeCamp.org YouTube channel.

Source: freeCodeCamp Programming Tutorials: Python, JavaScript, Git & More